Updated March 15, 2023

Introduction to Logstash Multiple Pipelines

Logstash multiple pipelines is a feature that lets us run more than one pipeline inside the same Logstash process, configured through a file named pipelines.yml. In this article, we will study Logstash multiple pipelines through the following subtopics: an overview of Logstash multiple pipelines, creating them, configuring them, examples, and a conclusion.

Logstash multiple pipelines overview

Multiple pipelines let us run more than one pipeline inside the same Logstash process. This is achieved through a configuration file named pipelines.yml, which must be placed in the path.settings directory.

Multiple pipelines are especially useful when the event flows in the current configuration do not share the same inputs, filters, and outputs, and are being kept apart from one another with conditionals and tags.

When a single instance runs multiple pipelines, each event flow can have its own durability and performance parameters, such as different settings for persistent queues and pipeline workers. This separation also means that a blocked output in one pipeline does not exert backpressure on the others.
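As a minimal sketch of such per-pipeline settings, a pipelines.yml might give one pipeline extra workers and another a persistent queue. The pipeline IDs and config paths here are hypothetical placeholders, not values from this article's setup.

```yaml
# pipelines.yml (sketch; IDs and paths are hypothetical)
- pipeline.id: web-logs
  path.config: "/etc/logstash/conf.d/web-logs.conf"
  pipeline.workers: 3      # performance: three worker threads for this flow
- pipeline.id: audit-events
  path.config: "/etc/logstash/conf.d/audit-events.conf"
  queue.type: persisted    # durability: buffer events on disk instead of in memory
```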

It is important to account for resource competition among the pipelines, because the default values are tuned for a single pipeline. For example, each pipeline uses one worker per CPU core by default, so consider reducing the number of pipeline workers used by each pipeline.
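To illustrate, on a host assumed to have four CPU cores, each pipeline would start four workers by default. The sketch below caps the workers so that two pipelines split the cores between them. The IDs, paths, and core count are hypothetical.

```yaml
# pipelines.yml (sketch for an assumed 4-core host)
- pipeline.id: web-logs
  path.config: "/etc/logstash/conf.d/web-logs.conf"
  pipeline.workers: 2    # instead of the default of one worker per core (4)
- pipeline.id: audit-events
  path.config: "/etc/logstash/conf.d/audit-events.conf"
  pipeline.workers: 2
```

The right split depends on how heavy each pipeline's filters and outputs are, so these numbers are a starting point rather than a recommendation.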

The locations of the persistent queues and dead-letter queues are isolated per pipeline; each is namespaced by the pipeline's pipeline.id value.
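A sketch of how this isolation looks in practice, assuming the default queue locations under path.data and hypothetical pipeline IDs and paths: each pipeline that enables a persistent queue or dead-letter queue gets its own subdirectory named after its pipeline.id.

```yaml
# pipelines.yml (sketch; IDs and paths are hypothetical)
- pipeline.id: web-logs
  path.config: "/etc/logstash/conf.d/web-logs.conf"
  queue.type: persisted             # stored under <path.data>/queue/web-logs
  dead_letter_queue.enable: true    # stored under <path.data>/dead_letter_queue/web-logs
- pipeline.id: audit-events
  path.config: "/etc/logstash/conf.d/audit-events.conf"
  queue.type: persisted             # stored under <path.data>/queue/audit-events
```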

Create Logstash multiple pipelines

The first step is to make sure that the pipelines.yml configuration file is set up correctly.

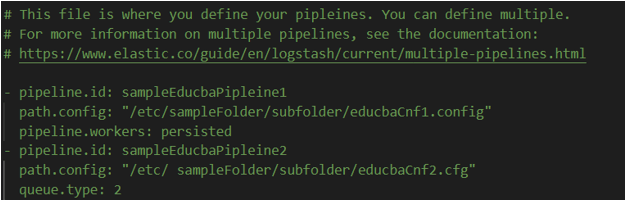

The structure of the pipelines.yml file is roughly as shown below. It contains the details of each pipeline that the process will run.

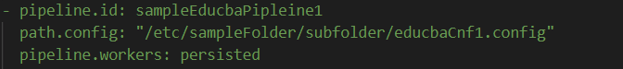

For a single pipeline, the sample definition can be as shown below –

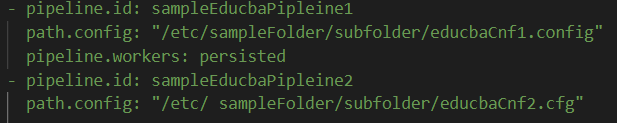

You can add as many pipelines as you need. For multiple pipelines, the sample definition can be as shown below –

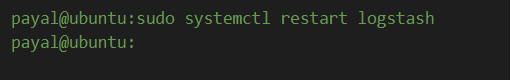

The last step is to restart Logstash by using the command –

sudo systemctl restart logstash

The output of the command is –

As we can observe above, the configuration file is written in YAML and contains a list of dictionaries. Each dictionary describes one pipeline through key-value pairs that give, among other settings, the pipeline's identifier and the path to its configuration. The sample above specifies two pipelines, each with its identifier and configuration path. If a setting is not specified for a pipeline, the default value set in the logstash.yml settings file is used.

When Logstash is started with no arguments, it reads pipelines.yml and initializes every pipeline specified in that file. If we use options such as -f or -e, the pipelines.yml file is ignored completely, and a warning is logged about it.
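The list-of-dictionaries layout described above can be sketched as follows. The two IDs and config paths are hypothetical; any setting left out of an entry falls back to the value in logstash.yml.

```yaml
# Each "-" starts one dictionary, and each dictionary describes one pipeline.
- pipeline.id: first-pipeline
  path.config: "/etc/logstash/conf.d/first.conf"
- pipeline.id: second-pipeline
  path.config: "/etc/logstash/conf.d/second.conf"
  # pipeline.workers, queue.type, and other settings are omitted here,
  # so the defaults from logstash.yml apply
```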

Configure Logstash multiple pipelines

The configuration of multiple pipelines and the description of their contents must be specified in the file named pipelines.yml, as shown above. The steps required to configure Logstash multiple pipelines using the YAML file are as specified below –

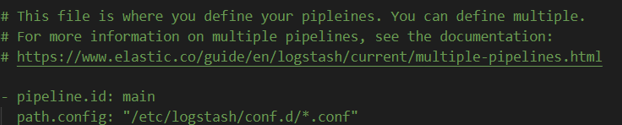

- The configuration file for multiple pipelines is named pipelines.yml and is usually found under the etc/ directory. If you don't find it there, check the directory where Logstash is installed. On Linux, the default location of the file is /etc/logstash/pipelines.yml. The file looks as shown below –

- The next step is to open the file in any editor so that we can modify it; in my case, I am using nano. The following command opens pipelines.yml for editing, and we place in it the content shown in the above sample, where we created two pipelines, one of which uses a persisted queue while the other has two workers –

sudo nano /etc/logstash/pipelines.yml

Executing the above command opens the pipelines.yml file, where we make the changes per our requirement. The final output file looks like this –

- Press Ctrl+O and then Enter to save the file's contents, and press Ctrl+X to exit the nano editor.

Examples of Logstash multiple pipelines

We can create multiple pipelines with the help of a ConfigMap. Let us consider an example, which follows these steps –

- Create a ConfigMap containing the input, filter, and output details.

We have created the following sample ConfigMap –

cat end.conf
input {
  file {
    path => "/tmp/end"
  }
}
output {
  stdout { }
}

cat start.conf
input {
  file {
    path => "/tmp/start"
  }
}
output {
  stdout { }
}

cat pipelines.yml
- pipeline.id: start
  path.config: "/opt/bitnami/logstash/config/start.conf"
- pipeline.id: end
  path.config: "/opt/bitnami/logstash/config/end.conf"

kubectl create cm multipleconfig --from-file=pipelines.yml --from-file=start.conf --from-file=end.conf

- Deploy the Helm chart with the enableMultiplePipelines parameter set and with existingConfiguration pointing at the ConfigMap. The command below assumes that the Bitnami Logstash chart is available as bitnami/logstash –

helm install logstash bitnami/logstash --set existingConfiguration=multipleconfig --set enableMultiplePipelines=true

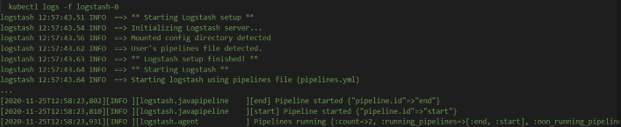

We will get the following output after executing the above command –

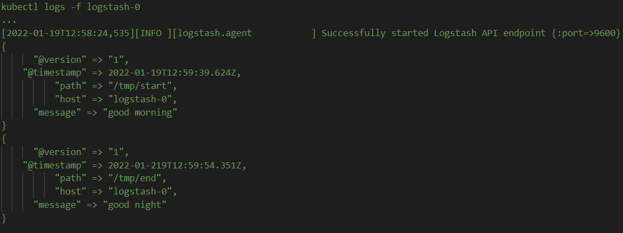

- Create dummy events in the tracked files by using the commands below, so that Logstash has something to process –

kubectl exec -ti logstash-0 -- bash -c 'echo good morning >> /tmp/start'

kubectl exec -ti logstash-0 -- bash -c 'echo good night >> /tmp/end'

- Check that the events are properly reflected in the output of the Logstash logs –

Conclusion

Logstash multiple pipelines are very helpful when a configuration contains event flows that do not share common inputs and filters and that are otherwise kept apart with conditionals and tags. Running them as separate pipelines segregates the output of each event flow completely.
