Updated March 14, 2023

Introduction to Logstash split

The Logstash split is one of the filters that can be used to measure the data manipulation and create the event for parsing the data format as JSON with a readable format which will already create the column fields to use the JSON as the user input format codec and it will be filtered on the table using the Apache-Access default plugins to manage the events on the SQL transaction.

What is logstash split?

Split filters are mainly used to identify the duplicates on the event with any of the fields and copy with each value of the real content that is also cloned with each other. We used string or array type of values on the separate field. It can be used to divide the array fields in all the events into separate child events. Mainly the usage of the lists for to organize the data will join together with prevalent data models like JSON and XML.

How to Use logstash split?

Because the field data results will do not exist the data for to map and retrieve the data work to make it for to parsing the JSON from the data file which is producing the fields. A split filter is mainly used for calling the message into the multiple messages containing the element from one place to another place like arrays etc. If we want to split the strings into arrays or some other formats then we used the split option on the mutate filter. Mutate filter is also the type of filter to perform the data operations on the Logstash because it’s one of the open-source technology and it is a server-side data processing pipeline that can be injected into the user data transformed and sent to the output console. The logstash pipeline will follow the formats to perform the data operations with different formats like the time field column to display the data and time on the table sheet. We used CSV and XLSX format for matching the user data like XML and JSON document where is to be mapped with the specified fields DAX, SMI, FTSE, time, CAC these are some formats for mapping the user values to the specified data rows and columns of the database table. Additionally, we used plugin filters to copy and clone the data with the data field column type corresponding to the cloning array format.

logstash split message

The message fields are the default field that can be used to plugin the most type of inputs to place the data payload that can be received from the network. We used the message field to read from the file and its hot helpful for the convention using the message field with the server timestamp and zone based on the priority and severity levels. The logstash split method and the filter will be hosted on the machine using hostname the actual message is extracted during the timestamp with http logs on the free text messages. Logstash is used server-side data processing pipelines for ingesting and utilizing multiple sources along with the Kibana users for visualizing datas on charts and graphs. Elastic Stack is one of the most important evolutions of the logstash stack including ELK Elasticsearch and Kibana. The messages to be splitted with the various formats like arrays, objects, a text files with new fields datas will be measured and manipulated the events with Apache-Access the filter plugins to be managed using the logstash events with Aggregate filter conditions during the SQL transaction on the database computing log times. Message fields have the mutate, key-value pairs, hash codes with exception hash messages target and source datas are different special characters and symbols are called and value splits are iterated and shown on the output console.

Example #1

input {

file {

path => "${HOME}/first.csv"

a => "first inputs"

b => "/usr/siva"

}

}

filter {

split{

csv {

m => ["january","February","March","April","May",June","July","August","September","October","November","December"]

seps => ","

outs => {

'February' => 'int'

'March' => 'int'

'April' => 'int'

'May' => 'int'

}

}

}

months {

inps => ['january', 'Inputs']

}

res {

varss => ['c', 'd']

}

if [type] == 'c' {

prune {

ps => [ "c"]

}

mutate {

add_field => { "[@metadata][type]" => "tets" }

}

}

else if [type] == 'd' {

prune {

ps => [ "d"]

}

mutate {

add_field => { "[@metadata][type]" => "exam" }

}

}

}

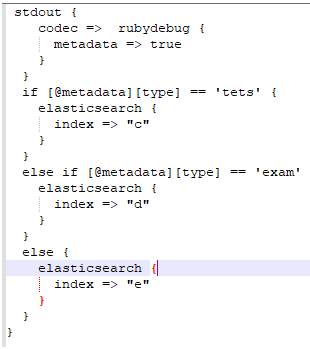

Output:

In the first example we used a Split filter along with elastic search and logstash pipeline with the specified indexes and the ranges. Here we used a CSV file to store and extract the datas from the pipeline.

Example #2

filter {

split{

metricize {

vars => [ "January", "february", "March","April","May","June","July","August","September","October","November","December" ]

}

}

}

Output:

In the above example, I used metricize filter additionally with the Split filter on the Logstash pipeline. Here I have not used any CSV files on the pipeline.

Conclusion

Logstash is one of the useful tools and it will helpful for monitoring the user data with real-time scenarios. It has been equipped with a powerful engine for performing the user inputs and outputs operations along with the filter conditions like split, metricize for user data operations.

Recommended Articles

This is a guide to Logstash split. Here we discuss the Introduction, What is logstash split, How to use logstash split? Examples with code implementation. You may also have a look at the following articles to learn more –