Updated February 28, 2023

Introduction to Pig Data Types

Apache Hadoop is a file system it stores data but to perform data processing we need SQL like language which can manipulate data or perform complex data transformation as per our requirement this manipulation of data can be achieved by Apache PIG. It is a high-level scripting language like SQL used with Hadoop and is called as Pig Latin. Pig Data Types works with structured or unstructured data and it is translated into number of MapReduce job run on Hadoop cluster.

To understand Operators in Pig Latin we must understand Pig Data Types. Any data loaded in pig has certain structure and schema using structure of the processed data pig data types makes data model. Data model get defined when data is loaded and to understand structure data goes through a mapping. pig can handle any data due to SQL like structure it works well with Single value structure and nested hierarchical datastructure.

Pig’s Data Types

Since, pig Latin works well with single or nested data structure. Its data type can be broken into two categories:

Scalar/Primitive Types: Contain single value and simple data types.

ComplexTypes: Contains otherNested/Hierarchical data types.

Scalar Data Types

Pig’s scalar data types are also called as primitive datatypes, this is a simple data types that appears in programming languages.

| Simple Type | Description and Size | Example |

| Int | 32 -bit signed integer | 26 |

| Long | 64 -bit signed integer | 26L or 26l |

| Float | 32 -bit floating point | 26.5F or 26.5f or 26.5e2f or 26.5E2F |

| Double | 64 -bit floating point | 26.5 or 26.5e2 |

| Chararray | Character array (string) in Unicode UTF-8 format | Welcome To educba |

| Bytearray | Blob (Byte array) | |

| Boolean | Boolean | True/False |

| Datetime | Date-time | 1970-01-01T00:00:00.000+00:00 |

| Biginteger | Java BigIneger | 67499292089 |

| bigdecimal | Java BIgDecimal | 150.76376256272893883 |

All datatypes are represented in java.lang classes except byte arrays. Default datatype is byte array in pig if type is not assigned. If schema is given in load statement, load function will apply schema and if data and datatype is different than loader will load Null values or generate error. Explicit casting is not supported like cast chararray to float. Pig does not support list or set type to store an items.

Complex Data Types

Complex datatypes are also termed as collection datatype. There are 3 complex datatypes:

- MAP

- TUPLES

- BAGS

1. MAP

Map is set of key-value pair data element mapping. Data in key-value pair can be of any type, including complex type.

Key: Index to find an element, key should be unique and must be an chararray.

Value: Any type of data can be stored in value and each key has certain dataassociated with it.Map are formed using bracket and a hash between key and values.Commas to separate more than one key-value pair.

Code:

['keyname'#'valuename']

Code:

['resource'#'EDUCBA', 'year'#2019]

Explanation: Above example creates a Map withKeys as : ‘resource’ and ‘year’ andValue as :EDUCBA and 2019

2. TUPLE

Tuple is an fixed length, ordered collection of fields formed by grouping scalar datatypes. It is similar to ROW in SQL table with field representing sql columns.

fields need not to be of same datatypes and we can refer to the field by its position as it is ordered.Tuple may or may not have schema provided with it for representing each fields type and name.

Fields: Can be of any type, field is just single/piece of data. Tuple is enclosed in parenthesis

Code:

(field[,fields....])

Code:

(EduCba,2019,kabir,1,2)

3. BAGS

A bag is an unordered collection of non-unique tuples. Bag may or may not have schema associated with it and schema is flexible as each tuple can have a number of fields with any type.Bag is used to store collection when grouping and bag do not need to fit into memory it can spill bags to disks if needed.

Bag is constructed using braces and tuples are separated by commas.

Code:

{tuple,[,tuple...]}

Code:

{('Hadoop',2.7),('Hive','1.13'),('Spark',2.0)}

Null Values: A null value is a non-existent or unknown value and any type of data can null. Pig treats null value the same as SQL. Pig gets Null values if data is missing or error occurred during the processing of data. Also, null can be used as a placeholder for optional values.

Examples to Implement Pig Data Types

Below are the examples:

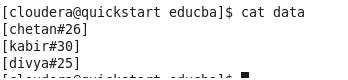

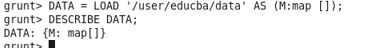

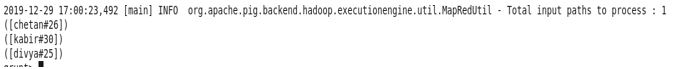

Example #1

Sample Data:

Load Data:

DATA = LOAD ‘/user/educba/data’ AS (M:map []);

DESCRIBE DATA;

Viewing The Data:

DUMP DATA;

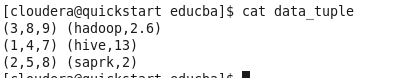

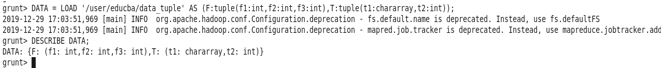

Example #2

Sample Data:

Load Data:

DATA= LOAD ‘/user/educba/data_tuple’ AS((F:tuple(f1:int,f2:int,f3:int),T:tuple(t1:chararray,t2:int));

DESCRIBE DATA;

Viewing The Data:

DUMP DATA;

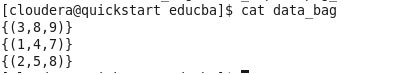

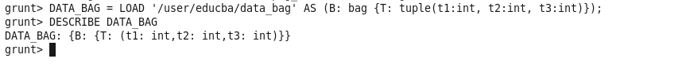

Example #3

Sample Data:

Load Data:

DATA_BAG= LOAD ‘/user/educba/data_bag’ AS (B: bag {T: tuple(t1:int, t2:int, t3:int)});

DESCRIBE DATA_BAG;

Viewing The Data:

DUMP DATA_BAG;

Conclusion

Apache pig is a part of the Hadoop ecosystem which supports SQL like structure and also It supports data types used in SQL which are represented in java.lang classes. Because of complex data types pig is used for tasks involving structured and unstructured data processing. Yahoo uses around 40% of their jobs for search as Pig extract the data, perform operations, and dumps data in the HDFS file system.

Recommended Articles

This is a guide to Pig Data Types. Here we discuss the introduction to Pig Data Types along with complex data types and examples for better understanding. You can also go through our other related articles to learn more –