Updated April 12, 2023

Introduction to PySpark Alias

PySpark alias is a method that gives a column or a data frame a shorter, more readable name. The alias acts as a derived name for a table or column in a PySpark data frame or data set, and the aliased column or table can then be referred to by that name. Aliasing is particularly useful in joins, such as a self-join or a join across several tables, where the same column names appear more than once and need to be told apart.

Syntax of PySpark Alias

The syntax is given below:

from pyspark.sql.functions import col

b = b.select(col("ID").alias("New_ID"))

b.show()

Explanation:

- b: The PySpark data frame to be used.

- alias("new_name"): The method that renames the column to the new name in the resulting data frame.

- select(col("column_name")): Selects the column to be aliased.


Working of Alias in PySpark

The working of alias in PySpark is described below:

- The alias method gives a column or data frame a new name that can be used as a reference in later operations. For a column, this renames it in the result. For a data frame, the alias can be used to qualify its columns once it has been assigned. In a join, the alias identifies which table each column in the join condition comes from.

- An alias works as a temporary stand-in name for the column or table, through which the underlying column or table is accessed. This makes long column or table names easier to work with. An alias is also called a correlation name for the table or column in a PySpark data frame.

Examples of PySpark Alias

Examples are given below.

Let’s start by creating simple data in PySpark.

Code:

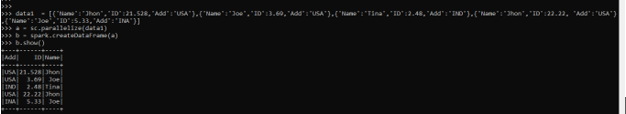

data1 = [{'Name':'Jhon','ID':21.528,'Add':'USA'},{'Name':'Joe','ID':3.69,'Add':'USA'},{'Name':'Tina','ID':2.48,'Add':'IND'},{'Name':' ','ID':22.22, 'Add':'USA'},{'Name':'Joe','ID':5.33,'Add':'INA'}]

Sample data is created with Name, ID, and Add as the fields.

a = sc.parallelize(data1)

An RDD is created using sc.parallelize.

b = spark.createDataFrame(a)

b.show()

The data frame is created using spark.createDataFrame.

Output:

Next, apply the alias function to the data frame.

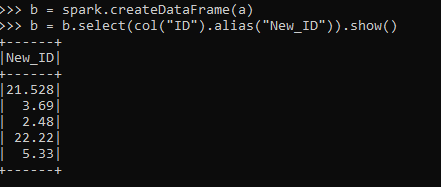

The alias is used to change the name of the column ID to the new name New_ID.

Code:

b.select(col("ID").alias("New_ID")).show()

A data frame itself can also be given an alias, which returns a data frame that can be referred to by the new name.

Code:

b.alias("New_Name")

Output:

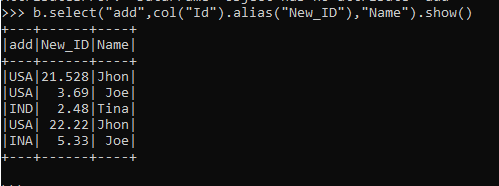

Aliasing can also be used to change a column name while keeping the other columns of the existing data frame.

In the data frame above, the ID column can be renamed to New_ID with the alias function while the remaining columns are selected unchanged, so the result contains the renamed column along with the rest of the data.

Code:

b.select("Add", col("ID").alias("New_ID"), "Name").show()

The alias can also be used in PySpark SQL. In a SQL join or select, the table is usually given an alias, and its columns are then accessed with the dot (.) operator. Just as tablename.columnname refers to a particular column of a table, the alias form A.columnname refers to the same column in a PySpark SQL query.

In SQL, an alias is assigned either by writing the alias name directly after the element being aliased or by using the AS keyword followed by the alias name.

Code:

spark.sql("SELECT * FROM Demo d WHERE d.id = '123'")

The example shows the alias d for the table Demo. The alias gives access to all the columns of Demo, so the WHERE condition can be written as d.id, which refers to the id column of Demo.

Conclusion

This article covered the alias operation in PySpark. Through several examples, we saw how the alias method works and how it is used at the programming level. We also looked at how aliasing works and the advantages of using it with PySpark data frames. The syntax and examples help in understanding the function more precisely.
