Updated April 11, 2023

Introduction to PySpark Create DataFrame from List

PySpark Create DataFrame from List is a way of creating of Data frame from elements in List in PySpark. This conversion includes the data that is in the List into the data frame which further applies all the optimization and operations in PySpark data model. The iteration and data operation over huge data that resides over a list is easily done when converted to a data frame, several related data operations can be done by converting the list to a data frame.

Duplicate values can be allowed using this list value and the same can be created in the data frame model for data analysis purposes. Data Frame is optimized and structured into a named column that makes it easy to operate over PySpark model. Here we will try to analyze the various ways of using the Create DataFrame from List operation PySpark.

Syntax of PySpark Create DataFrame from List

Given below is the syntax mentioned:

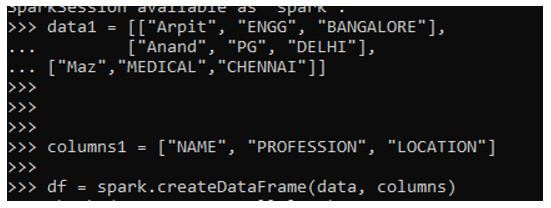

data1 = [["Arpit", "ENGG", "BANGALORE"],

... ["Anand", "PG", "DELHI"],

... ["Maz","MEDICAL","CHENNAI"]]

columns1 = ["NAME", "PROFESSION", "LOCATION"]

df = spark.createDataFrame(data, columns)- Data1: The list of data that is passed to be created as a Data frame.

- Columns1: The column schema name that needs to be pass on.

- df: spark.createDataframe to be used for the creation of dataframe. This takes up two-parameter the one with data and column schema that will be created.

Screenshot:

Working of DataFrame from List in PySpark

Given below shows how to Create DataFrame from List works in PySpark:

- The list is an ordered collection that is used to store data elements with duplicates values allowed. The data are stored in the memory location in a list form where a user can iterate the data one by one are can traverse the list needed for analysis purposes. This iteration or merging of data with another list sometimes is a costly operation so the Spark.createdataframe function takes the list element as the input with a schema that converts a list to a data frame and the user can use all the data frame-related operations thereafter.

- They are converted in a data frame and the data model is much more optimized post creation of data frame, this can be treated as a table element where certain SQL operations can also be done. The data frame post-analysis of result can be converted back to list creating the data element back to list items.

Examples of PySpark Create DataFrame from List

Given below shows some examples of how PySpark Create DataFrame from List operation works:

Example #1

Let’s start by creating a simple List in PySpark.

List Creation:

Code:

data1 = [["Arpit", "ENGG", "BANGALORE"],

... ["Anand", "PG", "DELHI"],

... ["Maz","MEDICAL","CHENNAI"]]Let’s create a defined Schema that will be used to create the data frame.

columns1 = ["NAME", "PROFESSION", "LOCATION"]The Spark.createDataFrame in PySpark takes up two-parameter which accepts the data and the schema together and results out data frame out of it.

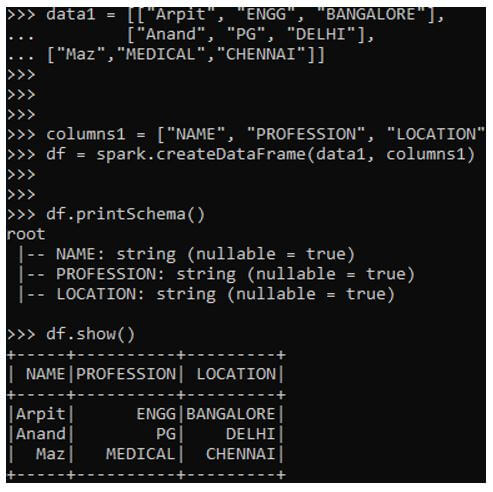

df = spark.createDataFrame(data1, columns1)The schema is just like the table schema that prints the schema passed. It is the name of columns that is embedded for data processing.

df.printSchema()

root

|-- NAME: string (nullable = true)

|-- PROFESSION: string (nullable = true)

|-- LOCATION: string (nullable = true)Let’s check the data by using the data frame .show() that prints the converted data frame in PySpark data model.

df.show()Output:

Example #2

The creation of a data frame in PySpark from List elements.

The struct type can be used here for defining the Schema. The schema can be put into spark.createdataframe to create the data frame in the PySpark.

Let’s import the data frame to be used.

Code:

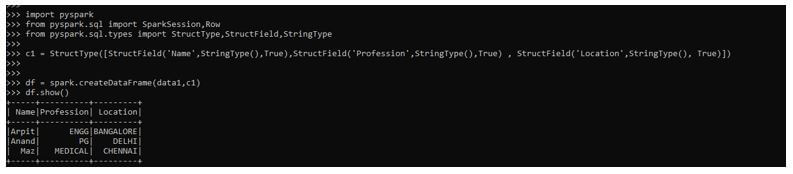

import pyspark

from pyspark.sql import SparkSession, Row

from pyspark.sql.types import StructType,StructField, StringType

c1 = StructType([StructField('Name',StringType(),True),StructField('Profession',StringType(),True) , StructField('Location',StringType(), True)])

df = spark.createDataFrame(data1,c1)

df.show()Output:

Example #3

Using the row type as List. Insert the list elements as the Row Type and pass it to the parameter needed for the creation of the data frame in PySpark.

Code:

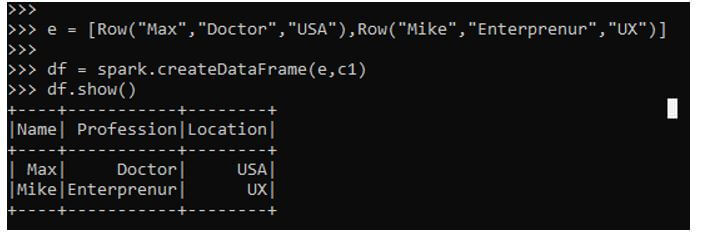

e = [Row("Max","Doctor","USA"),Row("Mike","Enterprenur","UX")]

df = spark.createDataFrame(e,c1)

df.show()Output:

These are the method by which a list can be created to Data Frame in PySpark.

Conclusion

From the above article, we saw the working of DataFrame from List Function in PySpark. From various examples and classification, we tried to understand how this DataFrame is Created From List in PySpark and what are is used at the programming level. The various methods used showed how it eases the pattern for data analysis and a cost-efficient model for the same. We also saw the internal working and the advantages of List to DataFrame in PySpark Data Frame and its usage for various programming purposes. Also, the syntax and examples helped us to understand much precisely the function.

Recommended Articles

We hope that this EDUCBA information on “PySpark Create Dataframe from List” was beneficial to you. You can view EDUCBA’s recommended articles for more information.