Updated April 11, 2023

Introduction to PySpark FlatMap

PySpark FlatMap is a transformation operation on PySpark RDDs that applies a function to each element of the RDD and returns a new RDD as the result. (A DataFrame can use it through its underlying RDD.) The function holds the custom business logic. For each input element it returns an iterable, such as a list, of zero, one, or more output elements, and flatMap flattens these iterables into a single new RDD. The new RDD can therefore have a different number of elements than the original. This makes flatMap a one-to-many transformation, in contrast to map, which produces exactly one output element per input element.
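To make the one-to-many idea concrete, here is a minimal plain-Python sketch that models the difference between map and flatMap on an ordinary list. It does not use Spark, and the helper names `map_like` and `flat_map_like` are hypothetical. `flat_map_like` flattens one level with `itertools.chain.from_iterable`, which, as far as we know, mirrors how PySpark's `RDD.flatMap` flattens results within each partition.

```python
from itertools import chain
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def map_like(f: Callable[[T], U], items: Iterable[T]) -> List[U]:
    """Hypothetical helper: exactly one output per input, like RDD.map."""
    return [f(x) for x in items]


def flat_map_like(f: Callable[[T], Iterable[U]], items: Iterable[T]) -> List[U]:
    """Hypothetical helper: zero or more outputs per input, flattened one level, like RDD.flatMap."""
    return list(chain.from_iterable(f(x) for x in items))


lines = ["hello world", "flat map example"]

# map keeps one element per input line, so each element is a list of words.
print(map_like(lambda s: s.split(" "), lines))
# [['hello', 'world'], ['flat', 'map', 'example']]

# flatMap flattens those lists, so each word becomes its own element.
print(flat_map_like(lambda s: s.split(" "), lines))
# ['hello', 'world', 'flat', 'map', 'example']
```

In PySpark the corresponding calls are `rdd.map(f)` and `rdd.flatMap(f)`, and their results differ in the same way: two list elements versus five word elements.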

Syntax of PySpark FlatMap

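The syntax for the PySpark FlatMap function is `RDD.flatMap(f, preservesPartitioning=False)`, where `f` is the function applied to each element and must return an iterable of output elements: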

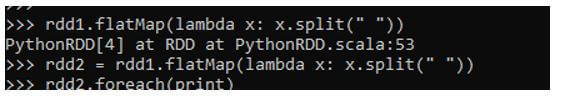

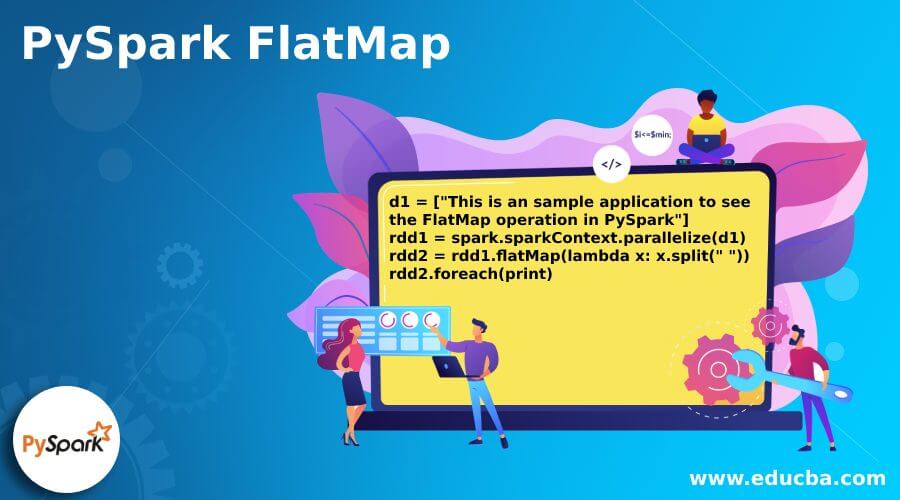

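```python
from pyspark.sql import SparkSession
spark = SparkSession.builder.getOrCreate()

d1 = ["This is a sample application to see the FlatMap operation in PySpark"]
rdd1 = spark.sparkContext.parallelize(d1)
# Split the sentence on spaces; each word becomes its own element of rdd2
rdd2 = rdd1.flatMap(lambda x: x.split(" "))
rdd2.foreach(print)
```

flatMap takes a function as its input. Here the lambda splits each element on spaces, and the word lists it returns are flattened into the new RDD `rdd2`, which holds one element per word.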

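Output: in local mode, each word of the sentence is printed on its own line. On a cluster, `print` runs on the executors, so the words appear in the executor logs instead; `rdd2.collect()` brings the results back to the driver.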
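flatMap is a narrow transformation: it processes each partition independently and never moves elements between partitions, so the output RDD has the same number of partitions as the input. The sketch below models an RDD as a plain Python list of partitions (a hypothetical representation, not Spark's internals) and applies the sentence-splitting function partition by partition. The two-partition layout with one empty partition is an assumption for illustration. The `preservesPartitioning` parameter of `RDD.flatMap` only tells Spark whether to keep the input's partitioner, which matters for key-value RDDs; it does not change the partition count.

```python
from itertools import chain
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def flat_map_partitions(
    f: Callable[[T], Iterable[U]], partitions: List[List[T]]
) -> List[List[U]]:
    """Hypothetical model: apply f to every element and flatten, one partition at a time."""
    return [list(chain.from_iterable(f(x) for x in part)) for part in partitions]


# Assumed layout: two partitions, the first holding the sentence, the second empty.
partitions = [
    ["This is a sample application to see the FlatMap operation in PySpark"],
    [],
]
result = flat_map_partitions(lambda s: s.split(" "), partitions)

print(len(result))     # 2: the partition count is unchanged
print(len(result[0]))  # 12: one sentence became twelve words
print(result[1])       # []: an empty partition stays empty
```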

Working of FlatMap in PySpark

- FlatMap is a transformation operation that applies custom logic to each element of a PySpark RDD. It iterates over the RDD, passes each element to the user-defined function, and collects whatever that function returns. The result is a new RDD whose length can differ from that of the original RDD.

- It is a one-to-many transformation in the PySpark data model: each element can produce zero, one, or many output elements, and these are flattened into one new RDD. A DataFrame has no flatMap method of its own, so it must first be converted with `.rdd`, after which the same model applies. The function passed to flatMap can be a lambda, a named Python function, or a built-in callable, as long as it returns an iterable.
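Because the function may return any iterable, flatMap can also drop elements (by returning an empty list) or keep them as they are (by returning a one-element list). The plain-Python sketch below shows this with a hypothetical `parse_numbers` function, modeling the flattening with `itertools.chain.from_iterable` rather than running Spark. It also illustrates a common pitfall: a Python string is itself iterable, so a function that returns a string instead of a list of strings gets flattened into single characters.

```python
from itertools import chain


def parse_numbers(line: str) -> list:
    """Hypothetical logic: return every integer token on the line, or [] if there are none."""
    return [int(tok) for tok in line.split() if tok.lstrip("-").isdigit()]


lines = ["1 2 3", "no numbers here", "42"]

# Zero, one, or many outputs per input line, flattened into one sequence.
numbers = list(chain.from_iterable(parse_numbers(line) for line in lines))
print(numbers)  # [1, 2, 3, 42]

# Pitfall: returning a str (not a list of str) flattens it into characters.
chars = list(chain.from_iterable(word.upper() for word in ["ab", "cd"]))
print(chars)  # ['A', 'B', 'C', 'D']
```

With Spark, the equivalent calls would be `rdd.flatMap(parse_numbers)` and, for the pitfall, `rdd.flatMap(lambda w: w.upper())`; wrapping the string in a list (`lambda w: [w.upper()]`) keeps each word whole.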

Examples of PySpark FlatMap

Given below are some examples:

Example #1

Start by creating some data and a simple RDD from it.

Code:

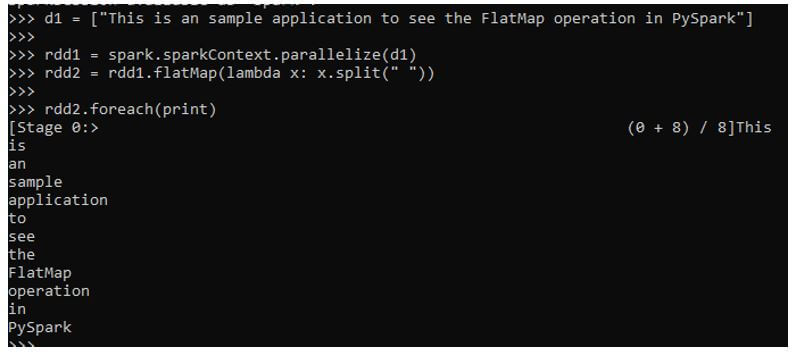

```python
d1 = ["This is a sample application to see the FlatMap operation in PySpark"]
```

The `spark.sparkContext.parallelize` function creates an RDD from that data.

```python
rdd1 = spark.sparkContext.parallelize(d1)
```

Once the RDD exists, the flatMap operation can apply a simple user-defined function to each element of the RDD.

```python
rdd2 = rdd1.flatMap(lambda x: x.split(" "))
```

This function splits each element on spaces, and the resulting words are collected into a new RDD.

```python
rdd2.foreach(print)
```

Output:

Example #2

Let us check one more example, where the function passed to flatMap uses Python's built-in `range` function, and check the result in a new RDD.

Code:

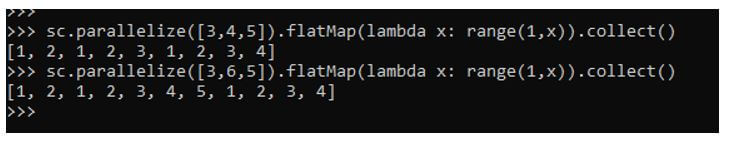

```python
# sc is the SparkContext, for example sc = spark.sparkContext
sc.parallelize([3, 4, 5]).flatMap(lambda x: range(1, x)).collect()
```

This returns `[1, 2, 1, 2, 3, 1, 2, 3, 4]`.

For each element `x`, the function produces `range(1, x)`, that is, the numbers from 1 up to `x - 1`, and flatMap concatenates these ranges into one list. Changing the input changes the output accordingly:

```python
sc.parallelize([3, 6, 5]).flatMap(lambda x: range(1, x)).collect()
```

Output:

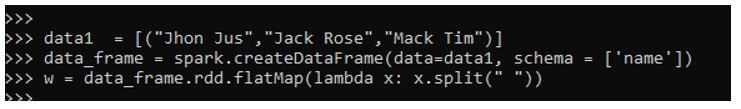
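The function passed to flatMap does not have to be a lambda. As a minimal plain-Python sketch (no Spark involved), the hypothetical generator function `numbers_below` yields the same values as `range(1, x)`, and flattening its results for the inputs 3, 6 and 5 reproduces the second example step by step. In PySpark, the same function could be passed directly, as in `sc.parallelize([3, 6, 5]).flatMap(numbers_below)`.

```python
from itertools import chain
from typing import Iterator


def numbers_below(x: int) -> Iterator[int]:
    """Hypothetical named function: yield 1, 2, ..., x - 1 (the same values as range(1, x))."""
    for n in range(1, x):
        yield n


inputs = [3, 6, 5]

# Per-element results before flattening: one sequence per input element.
per_element = [list(numbers_below(x)) for x in inputs]
print(per_element)  # [[1, 2], [1, 2, 3, 4, 5], [1, 2, 3, 4]]

# After flattening: 2 + 5 + 4 = 11 elements in a single list.
flattened = list(chain.from_iterable(numbers_below(x) for x in inputs))
print(flattened)  # [1, 2, 1, 2, 3, 4, 5, 1, 2, 3, 4]
```

A generator works here because flatMap only needs an iterable from each call; it never needs the whole per-element result as a list.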

The same FlatMap operation can also be used with a PySpark DataFrame. The DataFrame must first be converted into an RDD, and then the flatMap operation can be applied to it.

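```python
data1 = [("Jhon Jus",), ("Jack Rose",), ("Mack Tim",)]  # one single-field row per name
data_frame = spark.createDataFrame(data=data1, schema=['name'])
# Each element of data_frame.rdd is a Row, so read the 'name' field before splitting
w = data_frame.rdd.flatMap(lambda row: row.name.split(" "))
w.collect()
```

Each name is its own one-element tuple, so it becomes its own row in the `name` column. Because each element of `data_frame.rdd` is a `Row` rather than a string, the function reads the `name` field before splitting it, and the flattened words can then be collected on the driver.

Output: `['Jhon', 'Jus', 'Jack', 'Rose', 'Mack', 'Tim']`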
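The key point in the DataFrame example is that flatMap receives whole rows, not strings. PySpark's `Row` is a subclass of `tuple` whose fields can be read by name. The sketch below uses a `collections.namedtuple` called `NameRow` as a hypothetical stand-in for `Row` so that it runs without Spark. It shows that calling `split` on the row itself fails, while splitting the field works.

```python
from collections import namedtuple
from itertools import chain

# Hypothetical stand-in for pyspark.sql.Row with a single 'name' column.
NameRow = namedtuple("NameRow", ["name"])

rows = [NameRow("Jhon Jus"), NameRow("Jack Rose"), NameRow("Mack Tim")]

# Splitting the row itself fails: a tuple has no split() method.
try:
    list(chain.from_iterable(row.split(" ") for row in rows))
except AttributeError as err:
    print("error:", err)

# Reading the field first gives one output element per word.
words = list(chain.from_iterable(row.name.split(" ") for row in rows))
print(words)  # ['Jhon', 'Jus', 'Jack', 'Rose', 'Mack', 'Tim']
```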

Conclusion

From the above article, we saw how FlatMap works in PySpark. Through several examples, we looked at how the flatMap function is used at the programming level: it applies a function to every element of an RDD and flattens the zero or more results produced for each element into a new RDD. We also saw how to use it with a PySpark DataFrame by first converting the DataFrame to an RDD. The syntax and examples help show precisely how the function behaves.

Recommended Articles

We hope that this EDUCBA information on “PySpark FlatMap” was beneficial to you. You can view EDUCBA’s recommended articles for more information.