Updated February 20, 2023

Introduction to PySpark lit()

The PySpark lit() function adds a new column to an existing data frame by assigning a constant (literal) value to every row. It returns a Column object, and we import it from pyspark.sql.functions. It is useful whenever we need a column whose value is the same for all rows.

What is PySpark lit()?

PySpark is the Python API for Apache Spark, a framework developed for analyzing massive amounts of structured and unstructured data. It provides data frame processing in Python and communicates with the Spark JVM through the Py4J library. The lit function creates a column that holds a constant value, which we can then add to a PySpark data frame. The lit function has been part of pyspark.sql.functions since Spark 1.3, so it is available in any current PySpark installation; the required Python version depends on the Spark release in use.

Where to Use PySpark lit() function?

We can use the lit function wherever we need to add a constant-valued column to an existing data frame. The syntax below shows how the lit function is used.

Syntax:

lit(val).alias(name_of_column)

In the above syntax, name_of_column is the name of the column we are adding to the dataset, and val is the constant value that we assign to the new column.

The below example shows the lit function in use.

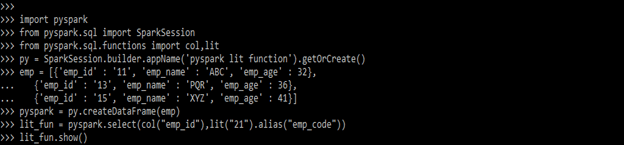

In the example below, we import the pyspark module, SparkSession, and the col and lit functions. We define the py variable to hold the Spark session that creates the data frame. After creating the data frame, we select emp_id and add the emp_code column with the constant value 21.

Code:

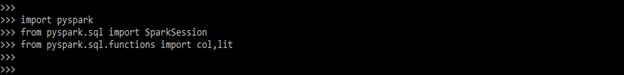

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, lit

py = SparkSession.builder.appName('pyspark lit function').getOrCreate()

emp = [{'emp_id' : '11', 'emp_name' : 'ABC', 'emp_age' : 32},

{'emp_id' : '13', 'emp_name' : 'PQR', 'emp_age' : 36},

{'emp_id' : '15', 'emp_name' : 'XYZ', 'emp_age' : 41}]

pyspark = py.createDataFrame(emp)

lit_fun = pyspark.select(col("emp_id"),lit("21").alias("emp_code"))

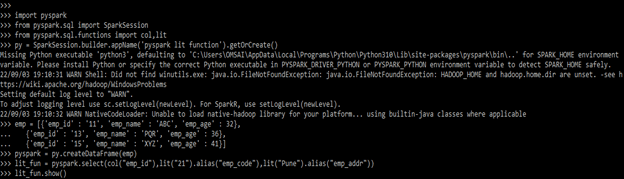

lit_fun.show()In the below example, we are adding two columns to the emp dataset. We are adding the emp_code and emp_addr columns to the emp dataset as follows.

Code:

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, lit

py = SparkSession.builder.appName('pyspark lit function').getOrCreate()

emp = [{'emp_id' : '11', 'emp_name' : 'ABC', 'emp_age' : 32},

{'emp_id' : '13', 'emp_name' : 'PQR', 'emp_age' : 36},

{'emp_id' : '15', 'emp_name' : 'XYZ', 'emp_age' : 41}]

emp_df = py.createDataFrame(emp)  # named emp_df so it does not shadow the imported pyspark module

lit_fun = emp_df.select(col("emp_id"),lit("21").alias("emp_code"),lit("Pune").alias("emp_addr"))

lit_fun.show()

How to Use PySpark lit()?

Basically, we are using the lit function to add a new column with a constant value to the dataset. The below steps show how we can use the lit function as follows.

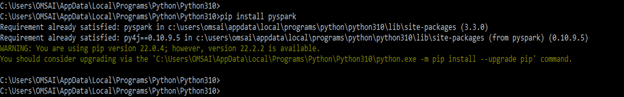

For using the PySpark lit function we need to install PySpark in our system.

- In the first step, we are installing the PySpark module in our system. We are installing this module by using the pip command as follows.

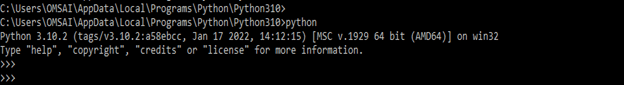

pip install pyspark- After installing the module now in this step we log in to python by using the python command as follows.

python- After login in python, in this step, we import the col, lit, PySpark, and SparkSession module. We are importing all modules by using the import keyword.

import pyspark

from pyspark.sql import SparkSession

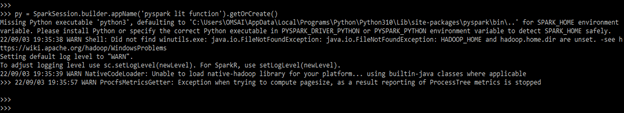

from pyspark.sql.functions import col, lit- After importing the module in this step we are creating the application name as pyspark lit function. We are defining the application variable name as py.

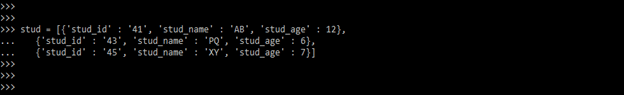

py = SparkSession.builder.appName('pyspark lit function').getOrCreate()- In this step, we are creating the stud data frame. We are creating the data with three rows as follows.

stud = [{'stud_id' : '41', 'stud_name' : 'AB', 'stud_age' : 12},

{'stud_id' : '43', 'stud_name' : 'PQ', 'stud_age' : 6},

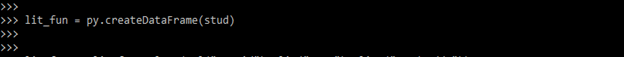

{'stud_id' : '45', 'stud_name' : 'XY', 'stud_age' : 7}]- In this step we are creating a data frame by using stud data, we are defining the variable of a data frame as lit_fun.

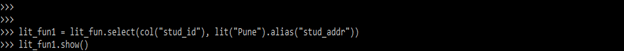

lit_fun = py.createDataFrame(stud)- In this step, we are adding the stud_addr column in the stud dataset by using the lit function. At the time of adding a new column, we are also giving a constant value to the column.

lit_fun1 = lit_fun.select(col("stud_id"), lit("Pune").alias("stud_addr"))

lit_fun1.show()Examples

Below are the different examples:

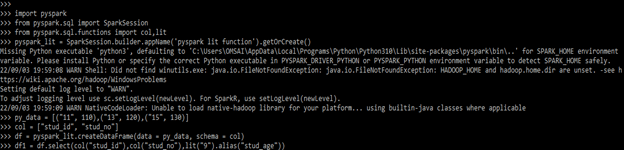

Example #1

In the below example, we add a new column named stud_age with the constant value 9 as follows.

Code:

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, lit

pyspark_lit = SparkSession.builder.appName('pyspark lit function').getOrCreate()

py_data = [("11", 110), ("13", 120), ("15", 130)]

columns = ["stud_id", "stud_no"]  # not named col, which would shadow the imported col() function

df = pyspark_lit.createDataFrame(data = py_data, schema = columns)

df1 = df.select(col("stud_id"),col("stud_no"),lit("9").alias("stud_age"))

df1.show(truncate=False)

Output:

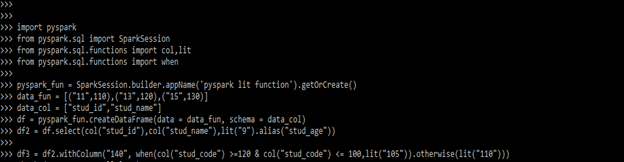

Example #2

Here we add a new column whose value depends on a condition, by using when() and otherwise() together with lit().

Code:

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, lit

from pyspark.sql.functions import when

pyspark_fun = SparkSession.builder.appName('pyspark lit function').getOrCreate()

data_fun = [("11", 110), ("13", 120), ("15", 130)]

data_col = ["stud_id", "stud_code"]

df = pyspark_fun.createDataFrame(data = data_fun, schema = data_col)

df2 = df.select(col("stud_id"), col("stud_code"), lit("9").alias("stud_age"))

df3 = df2.withColumn("140", when((col("stud_code") >= 100) & (col("stud_code") <= 120), lit("105")).otherwise(lit("110")))  # each comparison is parenthesised; bounds ordered so the range 100 to 120 can match

df3.show(truncate=False)

Key Takeaways

- The lit function adds a new column with a constant value to a data frame that was already created in PySpark.

- The constant is assigned to every row at the time the column is added. We can also combine lit with when() and otherwise() to choose the constant based on a condition.

FAQ

Given below are the frequently asked questions:

Q1. What is the use of the lit function in PySpark?

Answer: Basically, the lit function is used to add a new column with a constant value to a data frame that was already created.

Q2. Which modules and functions do we use with the PySpark lit function?

Answer: We use the pyspark module and SparkSession together with the col, lit, and when functions from pyspark.sql.functions.

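Q3. What is the difference between the lit and typedLit functions?

Answer: The lit function builds a column from a simple constant such as a string or a number. The typedLit function belongs to Spark's Scala API, where it also accepts collection types such as Seq and Map. The pyspark.sql.functions module does not provide typedLit, so PySpark code uses lit.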

Conclusion

The PySpark lit function creates a column from a constant or literal value, which lets us add a new column to a data frame that was already created. It can be used on its own with select or withColumn, or together with when() and otherwise() to assign the constant conditionally.
