Updated April 7, 2023

Introduction to PySpark Logistic Regression

PySpark Logistic Regression is a supervised machine learning model used for classification. The algorithm learns the relationship between the features and the label in the training data, and uses that relationship to classify new data. Logistic regression is a fundamental classification technique that is relatively fast and easy to compute.

Like other PySpark ML models, it is trained on one part of the data and tested on another. Rather than predicting a class directly, it predicts the probability of each value of the dependent variable (the label) given the independent variables (the features). It is a form of predictive analysis that describes the data and explains the relationship between its variables. In this article we look at the various methods used with logistic regression on data in PySpark, in some more detail.
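To make "predicts the probability" concrete, here is a minimal plain-Python sketch of the logistic (sigmoid) function applied to a linear score of the features. The helper names `sigmoid` and `predict_proba` and the coefficient values are hypothetical, chosen only for illustration; they are not part of the PySpark API.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: maps any real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(coefficients, intercept, features):
    """Hypothetical helper: P(label = 1 | features) for the linear score w.x + b."""
    z = intercept + sum(w * x for w, x in zip(coefficients, features))
    return sigmoid(z)

# Hypothetical coefficients and intercept, for illustration only.
p = predict_proba([0.8, -0.5], 0.1, [2.0, 1.0])
print(round(p, 3))  # score z = 1.2, so p ≈ 0.769
```

A score of 0 maps to a probability of exactly 0.5; large positive scores approach 1 and large negative scores approach 0.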

Syntax for PySpark Logistic Regression

The syntax for the PySpark `LogisticRegression` function is:

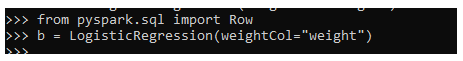

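```python
from pyspark.sql import Row
from pyspark.ml.classification import LogisticRegression

# Requires an active SparkSession / SparkContext.
b = LogisticRegression(weightCol="weight")
```

- The two import statements bring in `Row` (used to build example rows) and the `LogisticRegression` estimator.

- `LogisticRegression(weightCol="weight")`: the model, created here with `weightCol`, the name of the DataFrame column that holds a weight for each row.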


Working of Logistic Regression in PySpark

Let us see how logistic regression works in PySpark:

- It is a classification algorithm that predicts a categorical dependent variable from the independent variables. In its basic form it predicts a binary outcome, where only two scenarios are possible: for example yes or no, present or not present.

- It relies on some assumptions about the dependent and independent variables, and the training data should satisfy them. For example, the independent variables should not be strongly correlated with each other, and the dataset should be large enough to give stable estimates. The independent variables are the variables that can influence the result.

- There are several types of logistic regression. Binary logistic regression models the relationship between the independent variables and a dependent variable that has exactly two outcomes.

- Multinomial logistic regression handles a dependent variable with three or more discrete, unordered outcomes. In PySpark the choice is made with the `family` parameter: "binomial", "multinomial", or the default "auto".

- The PySpark DataFrame must contain a label column and a features column (a vector), and optionally a weight column; their names are passed to the model through `labelCol`, `featuresCol` and `weightCol`.

- Let’s check the creation and working of logistic regression function with some coding examples.
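The binary and multinomial cases described above can be sketched in plain Python: the binary model passes one score through the sigmoid and compares the probability with a threshold (0.5 is PySpark's default `threshold`), while the multinomial model turns one score per class into probabilities with softmax and picks the largest. The functions and scores are hypothetical illustrations, not PySpark internals.

```python
import math

def binary_predict(z: float, threshold: float = 0.5) -> int:
    """Binary case: sigmoid probability of class 1, compared with a threshold."""
    p = 1.0 / (1.0 + math.exp(-z))
    return 1 if p > threshold else 0  # predict 1 when the probability exceeds the threshold

def softmax(scores):
    """Multinomial case: one score per class -> probabilities that sum to 1."""
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def multinomial_predict(scores) -> int:
    """Return the index of the class with the highest probability."""
    probs = softmax(scores)
    return probs.index(max(probs))

print(binary_predict(1.2), binary_predict(-0.3))  # 1 0
print(multinomial_predict([0.2, 1.5, -0.7]))      # 1
```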

Example of PySpark Logistic Regression

Let us see an example of how logistic regression works in PySpark:

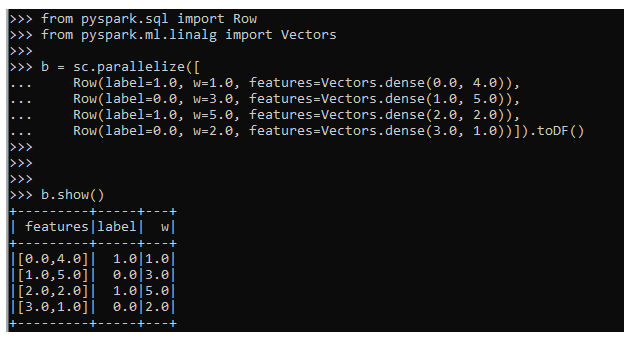

Let’s start by creating a simple DataFrame in PySpark on which we can use the model. We will use the `sc.parallelize` method to create an RDD of `Row` objects, followed by the `toDF` method to convert it into a DataFrame containing the data needed for the regression model.

The imports bring in `Row`, for building the rows, and `Vectors`, for building the feature vectors.

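```python
from pyspark.sql import SparkSession, Row
from pyspark.ml.linalg import Vectors

# Reuse (or start) a SparkSession and take its SparkContext as `sc`.
spark = SparkSession.builder.getOrCreate()
sc = spark.sparkContext

# Each Row holds a label, a per-row weight `w`, and a dense feature vector.
b = sc.parallelize([
    Row(label=1.0, w=1.0, features=Vectors.dense(0.0, 4.0)),
    Row(label=0.0, w=3.0, features=Vectors.dense(1.0, 5.0)),
    Row(label=1.0, w=5.0, features=Vectors.dense(2.0, 2.0)),
    Row(label=0.0, w=2.0, features=Vectors.dense(3.0, 1.0))]).toDF()

b.show()
```

Output: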

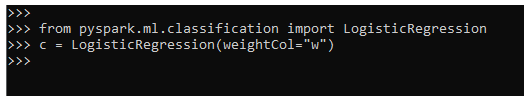

We can now import the `LogisticRegression` estimator and create it for use on the DataFrame. Passing `weightCol="w"` tells it which column holds the row weights.

```python
from pyspark.ml.classification import LogisticRegression

# weightCol="w" points the estimator at the weight column of `b`.
c = LogisticRegression(weightCol="w")
```

Output:

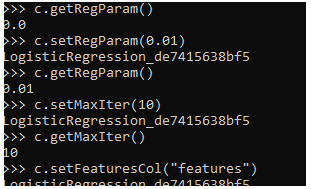

Now let's look at some of the methods available on the logistic regression estimator.

```python
# 1. Get regParam (the regularization parameter); before it is set, this returns the default, 0.0.
c.getRegParam()

# 2. Set regParam.
c.setRegParam(0.01)

# 3. Get the updated value (0.01).
c.getRegParam()

# 4. Set maxIter, the maximum number of optimizer iterations.
c.setMaxIter(10)

# 5. Get the updated value of maxIter (10).
c.getMaxIter()

# 6. Set the name of the feature-vector column ("features" is also the default).
c.setFeaturesCol("features")
```

These setters and getters configure the estimator before it is trained on a PySpark DataFrame; the trained model's results can then be evaluated. Note that logistic regression predicts a categorical label, so it cannot be used for a continuous target, and its estimates become less reliable when the training data is small. It is comparatively fast to train and is one of the most widely used models for classification.
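To see what `regParam` and the weight column change, here is a simplified plain-Python version of the quantity being minimized: the weighted average log loss plus an L2 penalty of `regParam / 2 * ||coefficients||^2` (the pure-L2 case, `elasticNetParam=0`). This is an illustrative sketch, not Spark's implementation; in particular, Spark standardizes features by default before applying the penalty, which is ignored here, and the coefficient values are hypothetical.

```python
import math

def weighted_l2_log_loss(coefs, intercept, rows, reg_param):
    """Weighted mean log loss + (reg_param / 2) * ||coefs||^2 (intercept not penalized).

    rows: list of (label, weight, features) tuples, like the DataFrame above.
    """
    total, total_w = 0.0, 0.0
    for label, weight, x in rows:
        z = intercept + sum(c * xi for c, xi in zip(coefs, x))
        p = 1.0 / (1.0 + math.exp(-z))  # predicted P(label = 1)
        total += weight * -(label * math.log(p) + (1 - label) * math.log(1 - p))
        total_w += weight
    penalty = 0.5 * reg_param * sum(c * c for c in coefs)
    return total / total_w + penalty

# The four rows from the example: (label, w, features).
rows = [
    (1.0, 1.0, [0.0, 4.0]),
    (0.0, 3.0, [1.0, 5.0]),
    (1.0, 5.0, [2.0, 2.0]),
    (0.0, 2.0, [3.0, 1.0]),
]
coefs = [0.3, -0.2]  # hypothetical coefficients, for illustration only
loss_no_reg = weighted_l2_log_loss(coefs, 0.0, rows, reg_param=0.0)
loss_reg = weighted_l2_log_loss(coefs, 0.0, rows, reg_param=0.01)
print(loss_reg - loss_no_reg)  # the L2 penalty alone: 0.5 * 0.01 * (0.09 + 0.04)
```

A larger weight makes a row's error count more, and a larger `reg_param` pushes the optimizer toward smaller coefficients.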


These are some examples of logistic regression in PySpark.

Note:

- PySpark logistic regression is a machine learning model used for data analysis.

- PySpark logistic regression is a classification technique in PySpark ML that models how the label depends on the features.

- PySpark logistic regression is a fast way to classify data, and because training is distributed across the cluster it scales to large datasets.

- PySpark logistic regression is suited to discrete (categorical) labels, and works best when the classes can be separated by a roughly linear boundary in feature space.

- PySpark logistic regression models a dependent variable (the label) in terms of independent variables (the features).

Conclusion

In this article we saw how logistic regression works in PySpark. Through the examples we looked at how the model is created and configured on a PySpark DataFrame, and where it is used at the programming level. The methods showed how it simplifies finding patterns in data and provides a cost-efficient model for classification. We also covered the internal working and the advantages of logistic regression in PySpark, and the syntax and examples showed more precisely how to use the function.
