Updated April 4, 2023

Introduction to PySpark Persist

PySpark Persist is an optimization technique that is used in the PySpark data model for data modeling and optimizing the data frame model in PySpark. It helps in storing the partial results in memory that can be used further for transformation in the PySpark session. This takes up the data over storage location and can be used for further data processing and data modeling in PySpark.

The Storage level used for using the PySpark persist can be over various levels that are used to store the data based on it. This data can be further used for other data processing and optimization of data. It saves the result and these results are then used for analysis purposes.

In this article, we will try to analyze the various ways of using the PYSPARK Persist operation PySpark. Let us try to see about PYSPARK Persist operation in some more detail.

Syntax For PYSPARK Persist

The syntax for the PYSPARK Persist function is:-

b.persist()B: The data frame to be used in PySpark needs to persist.

Persisit(): The Persist statement to be used for optimizing the spark dataframe.

Path Folder: The Path that needs to be passed on for writing the file to the location.

Screenshot:-

Working of Persist in Pyspark

Let us see how PYSPARK Persist works in PySpark:-

PYSPARK persist is a data optimization model that is used to store the data in-memory model. It is a time and cost-efficient model that saves up a lot of execution time and cuts up the cost of the data processing.

It stores the data that is stored at a different storage level the levels being MEMORY and DISK. It can store both the serialized as DE serialize data based on the level provided. The data that is stored can be used in further data processing models in subsequent action. They are fault-tolerant in nature so in case of any breakage that data is automatically recomputed using the actual transformation used.

Let’s check the creation and working of Persist with some coding examples.

Example

Let us see some examples of how Persist operation works:

Let’s start by creating a sample data frame in PySpark.

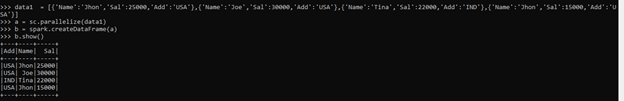

data1 = [{'Name':'Jhon','Sal':25000,'Add':'USA'},{'Name':'Joe','Sal':30000,'Add':'USA'},{'Name':'Tina','Sal':22000,'Add':'IND'},{'Name':'Jhon','Sal':15000,'Add':'USA'}]The data contains Name, Salary, and Address that will be used as sample data for Data frame creation.

a = sc.parallelize(data1)The sc.parallelize will be used for creation of RDD with the given Data.

b = spark.createDataFrame(a)Post creation we will use the createDataFrame method for creation of Data Frame.

This is how the Data Frame looks.

b.show()Screenshot:-

Now let us use the persist method to persist the data in memory only.

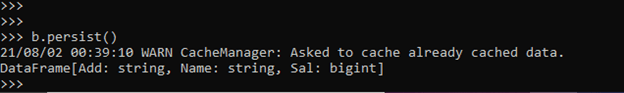

b.persist()This keeps up the data in memory and the data is kept in memory for further usage.

Screenshot:-

The Storage level in persisting can be divided into a different level that stores the data into different levels. Let’s try to see the different levels needed.

MEMORY ONLY

This storage level is used to store the RDD / Data frame into the JVM memory of the PySpark. If there is enough it will not save some partitions of the data and these will be recomputed when required for processing.

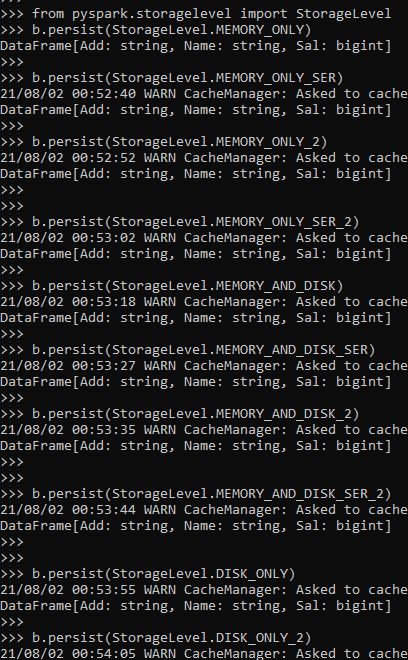

b.persist(StorageLevel.MEMORY_ONLY)MEMORY_ONLY_SER

This is the same as above but the data is stored as a serialized object into JVM Memory.

b.persist(StorageLevel.MEMORY_ONLY_SER)MEMORY_ONLY_2

This is the same as Memory only but the replica of each partition is replicated to two cluster nodes.

b.persist(StorageLevel.MEMORY_ONLY_2)MEMORY_ONLY_SER_2

This is the same as MEMORY_ONLY_SER but the only difference is the replica of each partition is replicated to two cluster nodes.

b.persist(StorageLevel.MEMORY_ONLY_SER_2)MEMORY_AND_DISK

It is the default statement of the Data frame / Data set the data is first stored in memory and in case of excess data the data is written back to disk that is read up when data is required. The data here is unsterilized in this case.

b.persist(StorageLevel.MEMORY_AND_DISK)MEMORY_AND_DISK_SER

This is the same as MEMORY_AND_DISK only the difference is the data is serialized in this case.

b.persist(StorageLevel.MEMORY_AND_DISK_SER)MEMORY_AND_DISK_SER_2

This is the same as MEMORY_AND_DISK_SER but the only difference is the replica of each partition is replicated to two cluster nodes.

b.persist(StorageLevel.MEMORY_AND_DISK_SER_2)DISK ONLY

This storage level stores the data over disk only.

b.persist(StorageLevel.DISK_ONLY)DISK_ONLY_2

This is the same as DISK_ONLY but the only difference is the replica of each partition is replicated to two cluster nodes.

b.persist(StorageLevel.DISK_ONLY_2)Screenshot:-

These are some of the Examples of Persist in PySpark.

Note:-

- Persist is an optimization technique that is used to catch the data in memory for data processing in PySpark.

- PySpark Persist has different STORAGE_LEVEL that can be used for storing the data over different levels.

- Persist the data that can be further reused for further actions.

- PySpark Persist stores the partitioned data in memory and the data is further used as other action on that dataset.

Conclusion

From the above article, we saw the working of Persist in PySpark. From various examples and classification, we tried to understand how this Persist function is used in PySpark and what are is used at the programming level. The various methods used showed how it eases the pattern for data analysis and a cost-efficient model for the same.

We also saw the internal working and the advantages of Persist in PySpark Data Frame and its usage in various programming purpose. Also, the syntax and examples helped us to understand much precisely the function.

Recommended Articles

This is a guide to PySpark persist. Here we discuss the internal working and the advantages of Persist in PySpark Data Frame and its usage in various programming purpose. You may also have a look at the following articles to learn more –