Updated April 11, 2023

Introduction to PySpark TimeStamp

PySpark TIMESTAMP conversion is done with to_timestamp, a function in pyspark.sql.functions that converts a string column into a timestamp column. A timestamp value combines a date and a time of day (year, month, day, hour, minute, second, and fractional seconds), as in 2021-07-24 12:01:19.000.

Once a column is converted to a timestamp, it can be used for further data analysis with the full date and time, down to the hour, minute, and second. Timestamps are widely used in data analysis because they can record the exact moment an event happened or the data was loaded.

In this article, we will look at the various ways of using the PySpark TIMESTAMP operation.

Let us look at PySpark TIMESTAMP in more detail.

Syntax:

The to_timestamp function from pyspark.sql.functions converts a column into a timestamp. Its signature is to_timestamp(col, format=None), where format is an optional datetime pattern. A typical call looks like this:

df1.withColumn("Converted_timestamp", to_timestamp("input_timestamp")).show(3, False)

- df1: The DataFrame used.

- withColumn: Adds a new column to the DataFrame. It takes the new column name and a column expression. Here the expression is to_timestamp, which converts the string in input_timestamp to a timestamp value.
- show(3, False): Displays the first 3 rows without truncating long values.

The function works on a column of the input DataFrame, and the result is stored in the new column.


Working of Timestamp in PySpark

Let us see how PYSPARK TIMESTAMP works in PySpark:

The to_timestamp function converts a string into a combined date and time value. The result is precise down to fractional seconds (Spark timestamps store microseconds), which is helpful for analytical purposes.

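In its default text form, a timestamp has 19 fixed characters, in the format below (year, month, day, hour, minute, second). It can optionally be followed by fractional seconds:

yyyy-MM-dd HH:mm:ss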
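To make the 19-character count concrete, the snippet below uses Python's standard datetime module rather than Spark. It assumes that Python's %Y-%m-%d %H:%M:%S layout is a fair stand-in for Spark's yyyy-MM-dd HH:mm:ss pattern, and it is meant only to illustrate the layout.

```python
from datetime import datetime

# Standard-library Python, used only to illustrate the character layout.
# %Y-%m-%d %H:%M:%S corresponds to Spark's yyyy-MM-dd HH:mm:ss pattern.
moment = datetime(2021, 7, 24, 12, 1, 19)
text = moment.strftime("%Y-%m-%d %H:%M:%S")
print(text, len(text))  # 2021-07-24 12:01:19 19

# Parsing the 19-character string with the same layout recovers the value
parsed = datetime.strptime(text, "%Y-%m-%d %H:%M:%S")
print(parsed == moment)  # True

# Optional fractional seconds (here milliseconds) extend the string past 19 characters
with_fraction = moment.replace(microsecond=1000).isoformat(sep=" ", timespec="milliseconds")
print(with_fraction, len(with_fraction))  # 2021-07-24 12:01:19.001 23
```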

When a column is passed to to_timestamp, each string value is parsed into a timestamp (a date plus a time). Parsing follows a format. Without a pattern, Spark uses its default timestamp rules. With an explicit pattern passed as the second argument, each value is parsed according to that pattern. The converted values are returned as a new column of type timestamp. The same default conversion can also be done with a cast, for example col("timestamp").cast("timestamp"). A date expression can be cast to a timestamp too; since a date has no time part, the time is set to midnight.

Let’s check the creation and working of PySpark TIMESTAMP with some coding examples.

Examples

Let us see some examples of how the PySpark TIMESTAMP operation works. Let’s start by creating a simple data frame in PySpark.

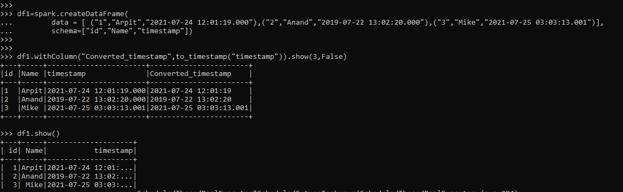

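from pyspark.sql import SparkSession
from pyspark.sql.functions import to_timestamp, lit
spark = SparkSession.builder.getOrCreate()
df1 = spark.createDataFrame(
    data=[("1", "Arpit", "2021-07-24 12:01:19.000"), ("2", "Anand", "2019-07-22 13:02:20.000"), ("3", "Mike", "2021-07-25 03:03:13.001")],
    schema=["id", "Name", "timestamp"])
df1.printSchema()
df1.show()

The timestamp column of this DataFrame is a string column, and it is the column we will convert to a timestamp.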

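df_ts = df1.withColumn("Converted_timestamp", to_timestamp("timestamp"))
df_ts.show(3, False)
df_ts.printSchema()

Here a new column named Converted_timestamp is added, holding the string values converted to timestamps, and printSchema shows its type as timestamp. Note that withColumn returns a new DataFrame rather than modifying df1, so df1.show() would still display only the original columns.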


We can also explicitly pass the format pattern that to_timestamp should use for the conversion. The pattern must match the layout of the input string:

df1.withColumn("Converted_timestamp", to_timestamp(lit('07-24-2021 12:01:19.000'), 'MM-dd-yyyy HH:mm:ss.SSS')).show(3, False)

Let us check one more example of conversion with the to_timestamp function:

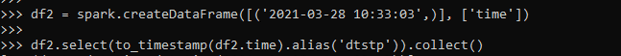

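df2 = spark.createDataFrame([('2021-03-28 10:33:03',)], ['time'])
df2.select(to_timestamp(df2.time).alias('dtstp')).collect()

This converts the string in the time column into a timestamp. collect() returns the result to the driver as a list of Row objects, with a Python datetime in the dtstp field.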


The same to_timestamp function is also available in Spark SQL. A query passed to spark.sql can call to_timestamp on a column to convert it to a timestamp.

These are some examples of TIMESTAMP conversion in PySpark.

Note:

1. It converts a string column into a timestamp.

2. By default, it expects the format yyyy-MM-dd HH:mm:ss; other layouts need an explicit pattern.

3. A timestamp records the exact point in time of each value, which makes it useful for precise, time-based data analysis.

4. It takes a DataFrame column (or column name) as the parameter for conversion, plus an optional format pattern.

Conclusion

From the above article, we saw how TIMESTAMP conversion works in PySpark. Through various examples, we saw how the to_timestamp function is used in PySpark, how it is applied at the programming level, and how it makes date and time data easier to work with in data analysis.

We also looked at how the conversion works internally and the advantages of timestamps in a PySpark DataFrame. The syntax and examples helped us understand the function more precisely.

Recommended Articles

We hope that this EDUCBA information on “PySpark TimeStamp” was beneficial to you. You can view EDUCBA’s recommended articles for more information.