Updated April 11, 2023

Introduction to PySpark to_Date

PySpark to_date is a function in pyspark.sql.functions that converts a string column into a date column. It is one of the most commonly used conversion functions in PySpark, because date-typed columns make date-based analysis (filtering, grouping and comparing by date) straightforward. to_date takes the column to convert as its first argument and an optional date pattern as its second argument. The pattern describes how the input string is laid out. If no pattern is given, Spark applies its default string-to-date casting rules. The converted column is of type pyspark.sql.types.DateType.

In this article, we will look at the various ways of using the PySpark to_date function.

Syntax:

The syntax for the PySpark to_date function is:

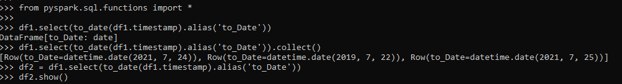

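from pyspark.sql.functions import to_date

df2 = df1.select(to_date(df1.timestamp).alias('to_Date'))

df2.show()

The import statement brings the to_date function in from pyspark.sql.functions. The function's signature is to_date(col, format=None), where format is an optional date pattern.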

- df1: The DataFrame whose column is converted.

- to_date: The function that takes the column to convert (and, optionally, a date pattern); alias sets the name of the new column.

- df2: The new DataFrame returned by select after the conversion.


Working of to_date in PySpark

Let’s look at how PySpark to_date works with some coding examples.

Examples

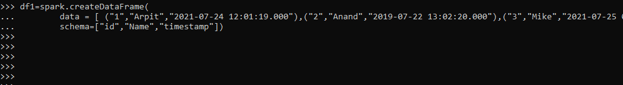

Let’s start by creating a simple data frame in PySpark.

# Assumes an existing SparkSession named spark (as in the pyspark shell)
df1 = spark.createDataFrame(
    data=[("1", "Arpit", "2021-07-24 12:01:19.000"),
          ("2", "Anand", "2019-07-22 13:02:20.000"),
          ("3", "Mike", "2021-07-25 03:03:13.001")],
    schema=["id", "Name", "timestamp"])

df1.printSchema()
df1.show()

Screenshot:

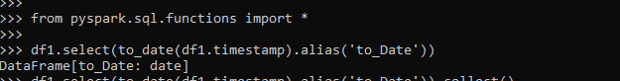

Now we will convert the timestamp column to a date using the to_date function.

We start by importing the required function from pyspark.sql.functions.

from pyspark.sql.functions import to_date

This imports the function used for the conversion.

df1.select(to_date(df1.timestamp).alias('to_Date'))

Here we select the column to convert, df1.timestamp, and pass it to to_date. The result is a new DataFrame with a single date column named to_Date (the alias).


df1.select(to_date(df1.timestamp).alias('to_Date')).collect()

Collecting the DataFrame returns the converted date values to the driver as Row objects. The time portion of each timestamp is dropped:

[Row(to_Date=datetime.date(2021, 7, 24)), Row(to_Date=datetime.date(2019, 7, 22)), Row(to_Date=datetime.date(2021, 7, 25))]

We can also store the converted DataFrame in a new variable and analyze the result:

df2 = df1.select(to_date(df1.timestamp).alias('to_Date'))

This converts the column values to dates and stores the result in the new DataFrame df2, which can be used for further data analysis.

df2.show()

Let us check one more example, this time giving the format of the date before conversion.

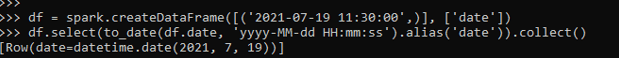

df = spark.createDataFrame([('2021-07-19 11:30:00',)], ['date'])

This creates a DataFrame with a single string column, date, which we will convert by passing an explicit format.

df.select(to_date(df.date, 'yyyy-MM-dd HH:mm:ss').alias('date')).collect()

This parses each string using the pattern yyyy-MM-dd HH:mm:ss, drops the time part, and collects the result: [Row(date=datetime.date(2021, 7, 19))].


The to_date function is also available as a built-in function in Spark SQL, so it can be called directly inside a query passed to spark.sql.

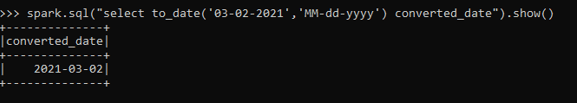

spark.sql("select to_date('03-02-2021','MM-dd-yyyy') converted_date").show()

The pattern MM-dd-yyyy reads '03-02-2021' as month, day and year, so converted_date is 2021-03-02. This shows how to_date can be used through the spark.sql function.

Screenshot:

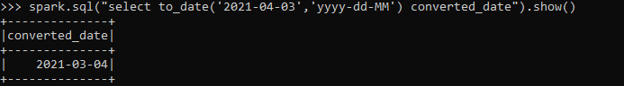

spark.sql("select to_date('2021-04-03','yyyy-dd-MM') converted_date").show()

The pattern can be written in whatever order matches the input string. Here yyyy-dd-MM reads 04 as the day and 03 as the month, so converted_date is 2021-03-04.


These are some examples of to_date in PySpark.

Note:

1. It converts a string column into a DateType column.

2. It optionally takes a date pattern as its second argument.

3. It parses each value according to that pattern, so the resulting dates can be used reliably in date-based analysis.

4. It takes a DataFrame column, or a column name, as its first parameter.

Conclusion

From the above article, we saw how to_date works in PySpark. Through various examples, we saw how the to_date function is used on PySpark DataFrames and in Spark SQL, and how converting strings into proper date columns makes date-based data analysis simpler.

We also saw the syntax of to_date and its usage for various programming purposes, and the examples helped us understand the function more precisely.

Recommended Articles

We hope that this EDUCBA information on “PySpark to_Date” was beneficial to you. You can view EDUCBA’s recommended articles for more information.