Updated February 22, 2023

Introduction to PySpark Write CSV

PySpark provides many features, and writing CSV is one of them. In PySpark, we can read a CSV file into a Spark DataFrame and write a DataFrame out as a CSV file. PySpark also provides the option() function to customize reading and writing, for example the character set, the header, and the delimiter of the CSV file, as per our requirements.

Key Takeaways

- PySpark's CSV support simplifies input and output operations on CSV data.

- It supports reading and writing CSV files with a custom delimiter.

- It provides several save options to the user, such as save modes and compression.

- CSV files are comparatively slow to import and parse into the structure we require.

Code:

df.write.csv("specified path")

Here we are trying to write the DataFrame to CSV with a header, so we need to use option() as follows.

Code:

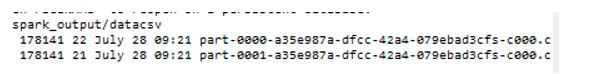

df.write.option("header", true).CSV ("C:/result/output/data")In this case, we have 2 partitions of DataFrame, so it created 3 parts of files, the end result of the above implementation is shown in the below screenshot.

Output:

In the above example, we can see the CSV output. Depending on the number of partitions the DataFrame has, Spark writes the same number of part files into the directory given as the path. You can get the number of partitions with df.rdd.getNumPartitions().

Let’s see how we can use options for CSV files as follows:

Spark's DataFrameWriter provides option() to configure how the DataFrame is saved as CSV, and we can chain several option() calls, or use options(), to set multiple options as per our requirement.

Code:

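# Chain option() calls; the parentheses let the chain span several lines
(df.write.option("header", True)
    .option("delimiter", "||")
    .csv("specified path of CSV"))

# Or pass several options at once as keyword arguments
df.write.options(header="true", delimiter=",").csv("specified path of CSV")

Explanation: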

In the above code, we use the following options:

- Header: With the header option set to true, Spark writes the column names as the first line of the CSV output. By default, this option is false.

- Delimiter: The delimiter (also available as sep) separates the fields in the output file; the default and most commonly used delimiter is the comma.

- Quote: The quote option sets the character used to enclose values that contain the delimiter or other special characters; the default is the double quote (").

- Compression: PySpark can compress the CSV output while writing it; the compression option accepts codec names such as gzip, bzip2, or deflate. We also have other options we can use as per our requirements.

PySpark Write CSV – Export File

Let’s see how we can export the CSV file as follows:

PySpark is an open-source tool that lets us process data with Apache Spark using Python. So first, we need to create a SparkSession object and provide the name of the application, as below.

Code:

spark = SparkSession.builder.appName("sampledemo").getOrCreate()

In the next step, we need to create the DataFrame with the help of the createDataFrame() method, where data is a list of rows, as below.

Code:

dataf = spark.createDataFrame(data)

Next, we need to display the data with the help of the below method, as follows.

Code:

dataf.show()

Let’s see how we can create the dataset as follows:

Code:

import pyspark

from pyspark.sql import SparkSession

spark=SparkSession.builder.appName('sampledemo'). getOrCreate()

data=[{'name':'Ram', 'class':'first', 'city':'Mumbai'},

{'name':'Sham', 'class':'first', 'city':'Mumbai'}]

dataf = spark.createDataFrame(data)

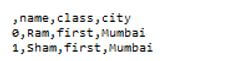

dataf.show()Output:

Let’s see how we can export data into the CSV file as follows:

Code:

import pyspark

from pyspark.sql import SparkSession

spark=SparkSession.builder.appName('sampledemo'). getOrCreate()

data=[{'name':'Ram', 'class':'first', 'city':'Mumbai'},

{'name':'Sham', 'class':'first', 'city':'Mumbai'}]

dataf = spark.createDataFrame(data)

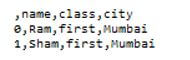

dataf.write.CSV ("sampledata")Explanation:

- As shown in the above example, we just added one more write method to add the data into the CSV file. The result of the above implementation is shown in the below screenshot.

Output:

PySpark Save CSV File Options

Let’s see the different options PySpark offers for saving CSV output:

- Save using CSV options: Spark lets us set a single option with option() or several options at once, as per the requirement, as shown in the code below.

Code:

(df.write.option("header", True)
    .option("delimiter", "||")
    .csv("specified path of CSV"))

df.write.options(header="true", delimiter=",").csv("specified path of CSV")

- Save DataFrame as CSV to Amazon S3: We can save the DataFrame to Amazon S3; for this, we need an S3 bucket and AWS access and secret keys.

- Save CSV to HDFS: If we are running on YARN, we can write the CSV file to HDFS, just as we write it to a local disk, by passing the corresponding path.

- Modes of save: DataFrameWriter also provides the mode() method, which controls what happens when the output path already exists. In Scala it accepts a SaveMode constant or a string; in PySpark we pass a string: "overwrite", "append", "ignore", or "error" (also "errorifexists", the default).

FAQ

Given below is the FAQ mentioned:

Q1. Does Spark support the CSV file format?

Answer:

Yes, it supports the CSV file format as well as JSON, text, and many other formats.

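Q2. What does CSV mean in Spark?

Answer:

CSV stands for comma-separated values, a plain-text format in which each line is a record and the fields are separated by a delimiter. In Spark, the CSV data source lets us read data from CSV files into a DataFrame and write a DataFrame out to CSV files.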

Q3. Can we create a CSV file from a PySpark DataFrame?

Answer:

Yes, we can create one with dataframe.write.csv("specified path of file").

Conclusion

In this article, we explored PySpark Write CSV. We saw the different ways to write CSV files from PySpark, the uses and features of the options that control the output, and how to set up and perform a CSV write.
