Updated April 6, 2023

Introduction to PyTorch adam

PyTorch is an open-source deep learning framework that provides a wide range of functionality. Training a deep learning model requires an optimization algorithm, and for this PyTorch provides the Adam optimizer through the torch.optim.Adam class. Adam combines ideas from the AdaGrad and RMSProp algorithms, which makes it suitable for noisy problems and problems with sparse gradients. It is straightforward to use and has modest memory requirements.

Overview of PyTorch adam

The choice of optimization algorithm for a deep learning model can mean the difference between good results in minutes, hours, or days.

The Adam optimization algorithm is an extension to stochastic gradient descent that has recently seen broader adoption for deep learning applications in computer vision and natural language processing.

By the end of this article, we will understand the following points about Adam:

- What the Adam algorithm is and some benefits of using it to optimize your models.

- How the Adam algorithm works and how it differs from the related methods AdaGrad and RMSProp.

- How the Adam algorithm can be configured and which configuration parameters are commonly used.

Adam is an optimization algorithm that can be used instead of the classical stochastic gradient descent procedure to update network weights iteratively based on training data.

Adam was introduced by Diederik Kingma from OpenAI and Jimmy Ba from the University of Toronto in their 2015 ICLR paper (poster) titled “Adam: A Method for Stochastic Optimization”. We will quote liberally from their paper in this article, unless stated otherwise.

The algorithm is called Adam. It is not an acronym and is not written as “ADAM”.

Adam provides the following benefits.

1. It is simple and straightforward to implement.

2. It is computationally efficient.

3. It has modest memory requirements.

4. It is suitable for non-stationary objectives.

5. It is well suited to problems with noisy or sparse gradients.

How to use PyTorch adam?

Now let’s see how we can use PyTorch Adam as follows.

Adam is different from classical stochastic gradient descent.

Stochastic gradient descent maintains a single learning rate (termed alpha) for all weight updates, and the learning rate does not change during training.

With Adam, a learning rate is maintained for each network weight (parameter) and separately adapted as learning unfolds.

The authors describe Adam as combining the advantages of two other extensions of stochastic gradient descent:

- Adaptive Gradient Algorithm (AdaGrad): It maintains a per-parameter learning rate, which improves performance on problems with sparse gradients.

- Root Mean Square Propagation (RMSProp): It also maintains per-parameter learning rates, which are adapted based on the average of recent magnitudes of the gradients for the weight.
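The two update rules described above can be sketched in plain Python for a single scalar parameter. This is a minimal illustration and not PyTorch's implementation: the function names `adagrad_step` and `rmsprop_step`, the learning rate of 0.1, and the toy objective f(x) = x^2 are assumptions chosen for the example.

```python
import math


def adagrad_step(x, grad, accum, lr=0.1, eps=1e-10):
    """One AdaGrad update: scale the step by the sum of ALL past squared gradients."""
    accum = accum + grad * grad
    x = x - lr * grad / (math.sqrt(accum) + eps)
    return x, accum


def rmsprop_step(x, grad, avg_sq, lr=0.1, alpha=0.99, eps=1e-8):
    """One RMSProp update: scale the step by a moving average of RECENT squared gradients."""
    avg_sq = alpha * avg_sq + (1 - alpha) * grad * grad
    x = x - lr * grad / (math.sqrt(avg_sq) + eps)
    return x, avg_sq


# Minimize the toy objective f(x) = x^2 (gradient 2x) from the same starting point.
x_ada, acc = 5.0, 0.0
x_rms, avg = 5.0, 0.0
for _ in range(200):
    x_ada, acc = adagrad_step(x_ada, 2 * x_ada, acc)
    x_rms, avg = rmsprop_step(x_rms, 2 * x_rms, avg)

print("AdaGrad x:", x_ada)
print("RMSProp x:", x_rms)
```

The only difference between the two functions is how the squared gradients are accumulated: AdaGrad keeps a running sum, so its effective step size can only shrink, while RMSProp keeps a decaying average, so older gradients are gradually forgotten.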

Where RMSProp adapts the per-parameter learning rates using only a moving average of the squared gradients (the second moment, or uncentered variance), Adam also uses a moving average of the gradients themselves (the first moment, or mean).

Specifically, the algorithm calculates an exponential moving average of the gradient and of the squared gradient, and the parameters beta1 and beta2 control the decay rates of these moving averages.
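In PyTorch, beta1 and beta2 are passed to torch.optim.Adam together as the `betas` tuple. The following is a small sketch of that configuration; the toy `nn.Linear` model is an assumption made only so the optimizer has parameters to manage, and the values shown are the defaults listed in the PyTorch documentation.

```python
import torch.nn as nn
import torch.optim as optim

# Hypothetical toy model, used only to supply parameters to the optimizer.
model = nn.Linear(1, 1)

optimizer = optim.Adam(
    model.parameters(),
    lr=1e-3,             # step size (alpha)
    betas=(0.9, 0.999),  # (beta1, beta2): decay rates of the two moving averages
    eps=1e-8,            # small constant added to the denominator for stability
)

# The configured values can be read back from the optimizer's parameter groups.
beta1, beta2 = optimizer.param_groups[0]["betas"]
print("beta1 =", beta1, "beta2 =", beta2)
```

Values of beta1 and beta2 closer to 1.0 make the moving averages change more slowly, so they remember more of the gradient history.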

Because the moving averages are initialized at zero, and the recommended beta1 and beta2 values are close to 1.0, the moment estimates are biased towards zero. This bias is overcome by first calculating the biased estimates and then calculating bias-corrected estimates.
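The moving averages and the bias correction described above can be sketched for a single scalar parameter in plain Python. This is a minimal illustration of the update rule from the Adam paper, not the code PyTorch runs internally; the function name `adam_step`, the toy objective f(x) = x^2, and the learning rate of 0.1 used in the loop are assumptions made for the example.

```python
import math


def adam_step(x, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter x at (1-based) time step t."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # Bias correction: m and v start at zero, so early estimates are too small.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Parameter update, scaled by the corrected second-moment estimate.
    x = x - lr * m_hat / (math.sqrt(v_hat) + eps)
    return x, m, v


# Minimize the toy objective f(x) = x^2, whose gradient is 2x.
x, m, v = 5.0, 0.0, 0.0
for t in range(1, 501):
    grad = 2 * x
    x, m, v = adam_step(x, grad, m, v, t, lr=0.1)

print("x after 500 steps:", x)
```

On the first step the uncorrected average `m` is only `(1 - beta1) * grad`, one tenth of the gradient with the default beta1, while the corrected `m_hat` equals the gradient itself. That is the bias towards zero that the correction removes.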

Adam Class

Now let’s look at the optimizer classes available in torch.optim.

- Adadelta Class: This algorithm adapts its step size from running averages of past gradients and updates, so it does not require a hand-tuned initial learning rate.

- Adagrad Class: Adagrad (short for adaptive gradient) reduces the learning rate for parameters that are updated frequently; in turn, it gives a larger learning rate to sparse parameters, that is, parameters that are not updated as frequently.

- Adam Class: This is one of the most popular optimization classes. It combines the strengths of adaptive methods such as AdaGrad and RMSprop in a single class.

- AdamW Class: It is an improved variant of the Adam class. The weight decay (regularization) term does not end up in the moving averages, so the decay applied is simply proportional to the weight itself.

- SparseAdam Class: It is a lazy version of the Adam algorithm and is suitable for sparse tensors.

- Adamax Class: It implements the Adamax algorithm, a variant of Adam based on the infinity norm.

- LBFGS class: By using this class, we can implement the L-BFGS algorithm. The implementation is heavily inspired by minFunc.

- RMSprop class: By using this class, we can implement the RMSprop algorithm.

- Rprop class: By using this class we can implement the resilient backpropagation algorithm.

- SGD Class: SGD stands for stochastic gradient descent. It is the most basic of these algorithms and is still widely used.

- ASGD class: By using this class we can implement the Averaged Stochastic Gradient Descent algorithm as per our requirement.
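Because these classes share the same interface (`zero_grad()` and `step()`), one optimizer can usually be swapped for another by changing a single line. The sketch below illustrates this on a tiny regression problem. The helper `make_optimizer`, the synthetic data, and the shared learning rate of 0.01 are assumptions made for the example; SparseAdam and LBFGS are left out because they need sparse gradients and a closure argument, respectively.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def make_optimizer(name, params, lr=0.01):
    """Hypothetical helper: build a torch.optim optimizer from a short name."""
    classes = {
        "adadelta": optim.Adadelta,
        "adagrad": optim.Adagrad,
        "adam": optim.Adam,
        "adamw": optim.AdamW,
        "adamax": optim.Adamax,
        "rmsprop": optim.RMSprop,
        "rprop": optim.Rprop,
        "sgd": optim.SGD,
        "asgd": optim.ASGD,
    }
    return classes[name](params, lr=lr)


# Synthetic data for the illustration: targets = 2 * inputs + 1.
torch.manual_seed(0)
inputs = torch.randn(16, 1)
targets = 2.0 * inputs + 1.0
loss_fn = nn.MSELoss()

for name in ["sgd", "adam", "adamw", "rmsprop"]:
    model = nn.Linear(1, 1)
    optimizer = make_optimizer(name, model.parameters())
    for _ in range(100):
        optimizer.zero_grad()
        loss = loss_fn(model(inputs), targets)
        loss.backward()
        optimizer.step()
    print(name, "final loss:", loss.item())
```

The training loop is identical for every optimizer; only the class that `make_optimizer` returns changes. A single learning rate is used here for simplicity, although in practice each optimizer tends to need its own tuning.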

PyTorch adam Examples

Now let’s see the example of Adam for better understanding as follows.

Code:

import torch
import torch.nn as nn
import torch.optim as optm

X = 3.25485  # true slope
Y = 5.26526  # true intercept
er = 0.2  # scale of the noise added to the targets
Num = 50  # number of data points

# Synthetic data: targets follow X * A + Y plus Gaussian noise.
A = torch.randn(Num, 1)
t_value = X * A + Y + torch.randn(Num, 1) * er

model_data = nn.Linear(1, 1)
optimi_data = optm.Adam(model_data.parameters(), lr=0.05)
loss_fn = nn.MSELoss()

num2 = 20  # number of training iterations
for _ in range(num2):
    optimi_data.zero_grad()  # clear gradients from the previous iteration
    predict = model_data(A)
    loss_value = loss_fn(predict, t_value)
    loss_value.backward()  # compute gradients of the loss
    optimi_data.step()  # apply the Adam update

print("-" * 20)
print("Data of X = {}".format(model_data.weight.item()))
print("Data of Y = {}".format(model_data.bias.item()))

Explanation

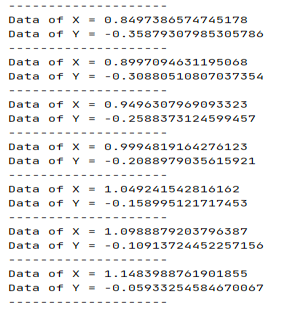

In the above example, we try to implement the Adam optimizer as shown, here we first import the packages. After that, we set the value of data points as well as we also set data with error value as shown. Finally, we run the training data set for assigned values. The final output of the above program we illustrated by using the following screenshot as follows.

In the same way, we can write the code for any of the other optimizer classes as required.

Conclusion

We hope this article has helped you learn more about PyTorch Adam. We covered the essential idea behind the Adam algorithm, looked at the related optimizer classes, and worked through an example. From this article, we learned how and when to use PyTorch Adam.
