Updated April 5, 2023

Introduction to PyTorch BERT

PyTorch is a framework for deep learning. In deep learning, we often need to transform raw data into representations that a model can work with, and for text, BERT is one of the most widely used models for doing this. BERT is a pre-trained language model that delivers state-of-the-art results on many Natural Language Processing (NLP) tasks; in PyTorch it is commonly used through the Hugging Face transformers library. BERT stands for Bidirectional Encoder Representations from Transformers. In other words, BERT extracts useful patterns from raw text by passing the data through an encoder. Transforming data in this way is an important step toward getting good predictions in deep learning.

What is PyTorch BERT?

BERT means "Bidirectional Encoder Representations from Transformers." BERT extracts patterns, or representations, from the input data or word embeddings by passing them through an encoder. The encoder itself is a stack of Transformer encoder layers. "Bidirectional" means that the model considers the context on both the left and the right of each token when it learns these representations during training.
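To make the architecture concrete, here is a minimal, scaled-down BERT-style encoder built from PyTorch's `nn.TransformerEncoder`. It is a sketch under stated assumptions, not the real BERT implementation: it has no pre-trained weights, the class name `TinyBertEncoder` is hypothetical, and the sizes are toy values (bert-base-uncased uses 12 layers, a hidden size of 768, 12 attention heads and a 30,522-token vocabulary). Like BERT, it sums token, position and segment embeddings and applies self-attention without a causal mask, so every token sees context on both sides.

```python
import torch
from torch import nn


class TinyBertEncoder(nn.Module):
    """Hypothetical, scaled-down BERT-style encoder (random weights, toy sizes)."""

    def __init__(self, vocab_size=1000, hidden=64, layers=2, heads=4, max_len=128):
        super().__init__()
        self.token_emb = nn.Embedding(vocab_size, hidden)   # one vector per vocabulary entry
        self.pos_emb = nn.Embedding(max_len, hidden)        # learned position embeddings
        self.segment_emb = nn.Embedding(2, hidden)          # sentence A (0) / sentence B (1)
        self.norm = nn.LayerNorm(hidden)
        layer = nn.TransformerEncoderLayer(
            d_model=hidden, nhead=heads, dim_feedforward=4 * hidden,
            activation="gelu", batch_first=True,
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=layers)  # stacked encoder layers

    def forward(self, input_ids, token_type_ids, attention_mask):
        positions = torch.arange(input_ids.size(1), device=input_ids.device)
        x = self.token_emb(input_ids) + self.pos_emb(positions) + self.segment_emb(token_type_ids)
        x = self.norm(x)
        # No causal mask: every token attends to tokens on both its left and its right.
        # src_key_padding_mask expects True at padding positions (attention_mask == 0).
        return self.encoder(x, src_key_padding_mask=attention_mask == 0)


torch.manual_seed(0)
model = TinyBertEncoder()
input_ids = torch.randint(0, 1000, (2, 10))   # batch of 2 sequences, 10 token ids each
token_type_ids = torch.zeros_like(input_ids)  # all tokens belong to sentence A
attention_mask = torch.ones_like(input_ids)   # no padding in this toy batch
hidden_states = model(input_ids, token_type_ids, attention_mask)
print(hidden_states.shape)  # torch.Size([2, 10, 64])
```

The output holds one contextual vector per input token, which is the kind of representation BERT passes on to downstream layers.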

BERT uses two training stages: pre-training and fine-tuning.

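During pre-training, the model is trained on a huge dataset to extract patterns. This is generally a self-supervised (unsupervised) learning task in which the model is trained on unlabelled text from a large corpus such as Wikipedia. For the original BERT, the pre-training objectives were masked language modeling (predicting randomly hidden words) and next sentence prediction.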
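The masked language modeling objective can be sketched as follows. Following the recipe described in the BERT paper, about 15% of the non-special tokens are selected for prediction; of those, 80% are replaced by [MASK], 10% by a random token, and 10% are left unchanged. The function `mask_tokens` is a hypothetical helper written for illustration (Hugging Face's `DataCollatorForLanguageModeling` implements a similar recipe). The ids 101, 102 and 103 are the [CLS], [SEP] and [MASK] ids of bert-base-uncased; the other token ids are arbitrary.

```python
import torch


def mask_tokens(input_ids, mask_token_id, vocab_size, special_mask, mlm_prob=0.15):
    """Hypothetical helper: apply BERT-style 80/10/10 masking to a batch of token ids."""
    labels = input_ids.clone()
    probs = torch.full(input_ids.shape, mlm_prob)
    probs.masked_fill_(special_mask, 0.0)          # never select [CLS], [SEP], [PAD]
    selected = torch.bernoulli(probs).bool()
    labels[~selected] = -100                       # -100 is ignored by nn.CrossEntropyLoss

    inputs = input_ids.clone()
    # 80% of the selected positions are replaced by [MASK]
    replace = torch.bernoulli(torch.full(input_ids.shape, 0.8)).bool() & selected
    inputs[replace] = mask_token_id
    # half of the remaining 20% (10% overall) get a random token
    randomize = torch.bernoulli(torch.full(input_ids.shape, 0.5)).bool() & selected & ~replace
    inputs[randomize] = torch.randint(vocab_size, input_ids.shape)[randomize]
    # the last 10% of selected positions keep their original token
    return inputs, labels


torch.manual_seed(0)
ids = torch.randint(1000, 2000, (4, 32))   # arbitrary token ids
ids[:, 0] = 101                            # [CLS]
ids[:, -1] = 102                           # [SEP]
special = (ids == 101) | (ids == 102)
inputs, labels = mask_tokens(ids, mask_token_id=103, vocab_size=30522, special_mask=special)
# Fraction of positions the model must predict (about 15% of the non-special tokens)
print((labels != -100).float().mean().item())
```

The model then sees `inputs` and is trained with cross-entropy against `labels`, so only the selected positions contribute to the loss.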

During fine-tuning, the model is trained for downstream tasks such as classification, question answering, and other language-understanding tasks. So, in practice, you can download a pre-trained model and then use transfer learning to adapt it to your own data.

How to use PyTorch BERT?

Now let's see how we can use BERT with PyTorch.

Often we take a pre-trained BERT model and fine-tune it, usually with a new task-specific layer on top, to create a new model. Why do this rather than train a specific deep learning model (a CNN, a BiLSTM, and so on) from scratch?

First, the pre-trained BERT weights already encode a great deal of information about our language. As a result, it takes much less time to train our fine-tuned model: it is as if we have already trained the bottom layers of our network extensively and only need to gently tune them while using their output as features for our classification task.
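The idea of reusing pre-trained layers as features can be sketched in PyTorch by freezing the encoder's parameters and training only a new head. In this sketch, `encoder` is a hypothetical stand-in with random weights (in practice it would be a pre-trained BERT model), and the first-position vector plays the role of the [CLS] feature.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained encoder (random weights here; in practice, BERT).
encoder = nn.Sequential(nn.Embedding(1000, 32), nn.Linear(32, 32), nn.Tanh())
head = nn.Linear(32, 2)  # new task-specific layer

# Feature extraction: freeze every encoder parameter so that only the head learns.
for param in encoder.parameters():
    param.requires_grad = False

trainable = [p for p in list(encoder.parameters()) + list(head.parameters()) if p.requires_grad]
optimizer = torch.optim.AdamW(trainable, lr=1e-3)

input_ids = torch.randint(0, 1000, (8, 16))   # 8 toy sequences of 16 token ids
labels = torch.randint(0, 2, (8,))

encoder_before = encoder[1].weight.clone()
head_before = head.weight.detach().clone()

features = encoder(input_ids)[:, 0]           # vector at the first position (the [CLS] slot)
loss = nn.functional.cross_entropy(head(features), labels)
loss.backward()
optimizer.step()

print(torch.equal(encoder_before, encoder[1].weight))  # True: frozen encoder weights did not move
print(torch.equal(head_before, head.weight))           # False: the head was updated
```

To tune the pre-trained layers gently instead of freezing them, one option is to keep `requires_grad=True` and give them a much smaller learning rate through optimizer parameter groups, for example `torch.optim.AdamW([{"params": encoder.parameters(), "lr": 2e-5}, {"params": head.parameters(), "lr": 1e-3}])`.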

In addition, and perhaps just as important, because of the pre-trained weights this method lets us fine-tune on a much smaller dataset than a model built from scratch would need. A major drawback of NLP models trained from scratch is that we often need a prohibitively large dataset to reach reasonable accuracy, which means a lot of time and energy must go into dataset creation. By fine-tuning BERT, we can train a model to good performance on a much smaller amount of training data.

Finally, this simple fine-tuning procedure (typically adding one fully connected layer on top of BERT and training for a few epochs) was shown to achieve state-of-the-art results with minimal task-specific changes on a wide variety of tasks: classification, language inference, semantic similarity, question answering, and so on. Rather than implementing custom and sometimes obscure architectures that were shown to work well on one particular task, simply fine-tuning BERT has been shown to be a better (or at least equal) alternative.
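The recipe above, one fully connected layer on top of BERT trained for a few epochs, can be sketched with the Hugging Face `transformers` classes `BertConfig` and `BertModel`. To keep the sketch runnable offline, it builds a tiny, randomly initialized BERT from a hypothetical small config instead of downloading weights; for real fine-tuning you would load `BertModel.from_pretrained('bert-base-uncased')` and feed real tokenized data. The classifier reads the final hidden state of the [CLS] token, which is one common choice (the library's own `BertForSequenceClassification` uses the pooled output instead). The class name `BertClassifier` is hypothetical, and the learning rate of 2e-5 is one of the values suggested for fine-tuning in the BERT paper.

```python
import torch
from torch import nn
from transformers import BertConfig, BertModel


class BertClassifier(nn.Module):
    """Hypothetical classifier: BERT encoder plus one fully connected output layer."""

    def __init__(self, config: BertConfig, num_labels: int):
        super().__init__()
        # For real use: self.bert = BertModel.from_pretrained("bert-base-uncased")
        self.bert = BertModel(config)
        self.dropout = nn.Dropout(config.hidden_dropout_prob)
        self.classifier = nn.Linear(config.hidden_size, num_labels)  # the one extra layer

    def forward(self, input_ids, attention_mask):
        outputs = self.bert(input_ids=input_ids, attention_mask=attention_mask)
        cls_vector = outputs.last_hidden_state[:, 0]  # final hidden state of [CLS]
        return self.classifier(self.dropout(cls_vector))


torch.manual_seed(0)
# Tiny, randomly initialized config so the sketch runs without downloading weights.
config = BertConfig(vocab_size=1000, hidden_size=32, num_hidden_layers=2,
                    num_attention_heads=2, intermediate_size=64)
model = BertClassifier(config, num_labels=2)
optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)

input_ids = torch.randint(0, 1000, (4, 12))   # toy batch: 4 sequences of 12 token ids
attention_mask = torch.ones_like(input_ids)
labels = torch.tensor([0, 1, 1, 0])

for epoch in range(3):                        # "a few epochs" over the toy batch
    model.train()
    optimizer.zero_grad()
    logits = model(input_ids, attention_mask)
    loss = nn.functional.cross_entropy(logits, labels)
    loss.backward()
    optimizer.step()
    print(f"epoch {epoch}: loss {loss.item():.4f}")
```

Because all weights are updated here (nothing is frozen), this is full fine-tuning; the small learning rate keeps the pre-trained layers from drifting too far.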

PyTorch BERT models

Now let's look at BERT and some related models.

- BERT Model: BERT is designed to pre-train deep bidirectional representations from unlabelled text. The pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.

- ELMo: BERT also borrows an idea from ELMo, which stands for Embeddings from Language Models. ELMo was introduced by Peters et al. in 2018 and is built around the idea of context-dependent word representations. ELMo uses bidirectional LSTMs to capture context. Because it looks at words from both directions, it can assign different embeddings to words that are spelled the same but have different meanings.

- ULMFiT: Previously, pre-trained models for word embeddings targeted only the first layer of the whole model (the embedding layer), and the rest of the model was trained from scratch; this was time-consuming and not very successful. ULMFiT (Universal Language Model Fine-tuning), introduced by Howard and Ruder in 2018, instead pre-trains an entire language model and then fine-tunes the whole model on the target task.

- OpenAI GPT: Generative Pre-trained Transformer, or GPT, was introduced by OpenAI's team: Radford, Narasimhan, Salimans, and Sutskever. Their model uses the decoder part of the Transformer rather than the encoder, and processes text in a unidirectional (left-to-right) manner.
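The key architectural difference between BERT and GPT is the attention mask. The toy single-head self-attention below is a simplification for illustration only (no learned projections, random inputs, and the function name `self_attention` is hypothetical). It shows that with a GPT-style causal mask the output at the first position cannot be influenced by a later token, whereas with BERT-style bidirectional attention it can.

```python
import math

import torch


def self_attention(x: torch.Tensor, causal: bool) -> torch.Tensor:
    """Single-head self-attention without learned projections (q = k = v = x)."""
    seq_len, dim = x.shape
    scores = x @ x.T / math.sqrt(dim)  # (seq_len, seq_len) similarity scores
    if causal:
        # GPT-style: position i may only attend to positions <= i.
        future = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)
        scores = scores.masked_fill(future, float("-inf"))
    weights = torch.softmax(scores, dim=-1)
    return weights @ x


torch.manual_seed(0)
x = torch.randn(5, 8)       # 5 tokens with 8-dimensional embeddings
x_changed = x.clone()
x_changed[-1] += 1.0        # change only the last token

bi_first_changed = not torch.allclose(self_attention(x, causal=False)[0],
                                      self_attention(x_changed, causal=False)[0])
causal_first_changed = not torch.allclose(self_attention(x, causal=True)[0],
                                          self_attention(x_changed, causal=True)[0])
print("bidirectional (BERT-style):", bi_first_changed)
print("causal (GPT-style):", causal_first_changed)
```

Running it prints True for the bidirectional case and False for the causal case: only bidirectional attention lets an early token "see" the words that come after it.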

PyTorch bert Examples

Now let's look at an example of using BERT in PyTorch.

Code:

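```python
import torch
from transformers import BertTokenizer

# Fix the random seed and make cuDNN deterministic so that results are reproducible
seed = 2222
torch.manual_seed(seed)
torch.backends.cudnn.deterministic = True

# Load the tokenizer (and vocabulary) of the pre-trained bert-base-uncased model
token = BertTokenizer.from_pretrained('bert-base-uncased')

# Vocabulary size (30522 for bert-base-uncased)
print(len(token))

# Split a sentence into WordPiece tokens (the uncased model lower-cases the text)
result = token.tokenize('Hi!! Welcome in BERT Pytorch')
print(result)

# Map each token to its index in the vocabulary
index_value = token.convert_tokens_to_ids(result)
print(index_value)
```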

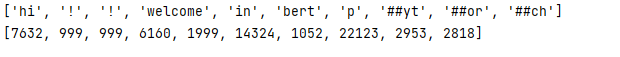

Explanation

In the above example, we first import torch and fix the random seed (and make cuDNN deterministic) so that results are reproducible. Next, we import BertTokenizer from the transformers library and load the tokenizer and vocabulary of the pre-trained bert-base-uncased model. We then print the vocabulary size, split a sentence into tokens with tokenize(), and map those tokens to their vocabulary indices with convert_tokens_to_ids(). The final result of the above program is illustrated in the following screenshot.

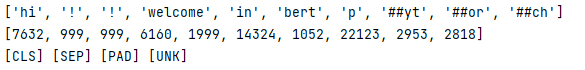
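Under the hood, BertTokenizer first performs basic tokenization (lower-casing for the uncased model and splitting on whitespace and punctuation) and then applies WordPiece to each word. The WordPiece step can be sketched as a greedy longest-match-first search. This is a simplified illustration, not the library's code: `wordpiece_tokenize` is a hypothetical helper, and the tiny `toy_vocab` is made up, whereas the real tokenizer uses the 30,522-entry vocabulary of bert-base-uncased.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece for a single, already lower-cased word."""
    if len(word) > max_chars:
        return [unk_token]
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it from the right
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry the ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matched: the whole word becomes [UNK]
        tokens.append(piece)
        start = end
    return tokens


toy_vocab = {"un", "##aff", "##able", "hi", "!"}
print(wordpiece_tokenize("unaffable", toy_vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("xyz", toy_vocab))        # ['[UNK]']
```

This is why a word that is not in the vocabulary as a whole can still be represented as several "##" sub-word pieces instead of a single [UNK].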

We also need to give BERT its input in the same format in which BERT was pre-trained. BERT uses two special tokens, [CLS] and [SEP]. The [CLS] token is used as the first token of the input sequence (its final hidden state is often used for classification), and [SEP] marks the end of a sentence and separates the two sentences of a pair. The tokenizer also defines [PAD] for padding and [UNK] for unknown tokens. Add the following code to print these special tokens.

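```python
# Special tokens defined by the BERT tokenizer loaded above
cls_token = token.cls_token  # [CLS]: first token of every input sequence
sep_token = token.sep_token  # [SEP]: ends a sentence / separates a sentence pair
pad_token = token.pad_token  # [PAD]: fills sequences up to a common length
unk_token = token.unk_token  # [UNK]: stands in for text that cannot be tokenized

print(cls_token, sep_token, pad_token, unk_token)
```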

Explanation

The final result of the above program is illustrated in the following screenshot; for bert-base-uncased, it prints `[CLS] [SEP] [PAD] [UNK]`.
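For a sentence pair, BERT's input layout is `[CLS] sentence A [SEP] sentence B [SEP]`, with segment (token type) ids of 0 for the first part and 1 for the second, and an attention mask that is 0 on padding. A minimal pure-Python sketch of that layout follows. The helper `build_bert_inputs` is hypothetical; its default ids 101, 102 and 0 are the [CLS], [SEP] and [PAD] ids of bert-base-uncased (you can confirm them with `token.cls_token_id`, `token.sep_token_id` and `token.pad_token_id`), and the sentence ids in the usage example are arbitrary.

```python
def build_bert_inputs(ids_a, ids_b=None, max_len=16, cls_id=101, sep_id=102, pad_id=0):
    """Hypothetical helper: lay out token ids the way BERT expects them."""
    input_ids = [cls_id] + ids_a + [sep_id]          # [CLS] sentence A [SEP]
    token_type_ids = [0] * len(input_ids)            # segment 0 for sentence A
    if ids_b is not None:
        input_ids += ids_b + [sep_id]                # ... sentence B [SEP]
        token_type_ids += [1] * (len(ids_b) + 1)     # segment 1 for sentence B
    if len(input_ids) > max_len:
        raise ValueError("sequence too long; truncate ids_a / ids_b first")
    attention_mask = [1] * len(input_ids)            # 1 = real token
    padding = max_len - len(input_ids)
    input_ids += [pad_id] * padding
    token_type_ids += [0] * padding
    attention_mask += [0] * padding                  # 0 = padding, ignored by attention
    return {"input_ids": input_ids, "token_type_ids": token_type_ids,
            "attention_mask": attention_mask}


example = build_bert_inputs([7, 8, 9], [20, 21], max_len=10)
print(example["input_ids"])       # [101, 7, 8, 9, 102, 20, 21, 102, 0, 0]
print(example["token_type_ids"])  # [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]
print(example["attention_mask"])  # [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
```

In practice you would not build these lists by hand: calling the tokenizer directly, for example `token("first sentence", "second sentence", padding="max_length", max_length=16)`, returns these three fields in this layout.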

Conclusion

We hope this article helped you learn more about PyTorch BERT. We covered the essential idea behind BERT, its pre-training and fine-tuning stages, related models, and examples of using the BERT tokenizer in PyTorch, including how and when to use PyTorch BERT.

Recommended Articles

We hope that this EDUCBA information on “PyTorch BERT” was beneficial to you. You can view EDUCBA’s recommended articles for more information.