Updated April 5, 2023

Introduction to PyTorch bmm

PyTorch bmm is used for matrix multiplication in batches. Both tensors to be multiplied must be 3-dimensional, each holding a batch of matrices, and their first (batch) dimension must be the same size. This way of matrix multiplication does not support broadcasting. In this article, we will learn what PyTorch bmm is, look at the bmm function and its parameters, work through bmm code examples, compare PyTorch mm and bmm, and finish with a conclusion.

What is PyTorch bmm?

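PyTorch bmm performs matrix multiplication on two 3-dimensional tensors, each of which holds a batch of matrices. The two tensors must have the same size in the first (batch) dimension, and the last dimension of the first tensor must equal the middle dimension of the second, so that each pair of matrices can be multiplied. The syntax of the bmm function, as documented in recent PyTorch releases, is shown below: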

`torch.bmm(input, mat2, *, out=None)`

Here input and mat2 are the two batches of matrices, and out optionally receives the result. Older PyTorch releases also accepted a Boolean deterministic parameter that chose between a faster non-deterministic calculation (False, the default) and a slower deterministic one (True). It was available only for sparse-dense CUDA bmm, and current releases no longer accept it.
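Where the deterministic flag is not available, determinism is requested globally rather than per call. The following is a minimal sketch, assuming PyTorch 1.8 or later (where torch.use_deterministic_algorithms and torch.are_deterministic_algorithms_enabled exist) and CPU tensors; the tensor shapes are arbitrary choices for illustration.

```python
import torch

# Assumes PyTorch >= 1.8: ask PyTorch to use deterministic algorithms
# for every subsequent operation, not only for bmm.
torch.use_deterministic_algorithms(True)
print(torch.are_deterministic_algorithms_enabled())  # True

# Dense bmm on CPU runs normally while deterministic mode is on.
batch1 = torch.randn(4, 2, 3)
batch2 = torch.randn(4, 3, 5)
result = torch.bmm(batch1, batch2)
print(result.shape)  # torch.Size([4, 2, 5])

# Switch back to the default, which allows non-deterministic algorithms.
torch.use_deterministic_algorithms(False)
```

Because this setting is global, it is usually switched on once at the start of a program rather than around a single call. According to PyTorch's reproducibility notes, CUDA tensors on CUDA 10.2 or later may additionally need the CUBLAS_WORKSPACE_CONFIG environment variable (for example :4096:8) for cuBLAS-backed operations to run deterministically; check the documentation for your installed version.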

Both input tensors must be 3-dimensional and must contain the same number of matrices, that is, their first dimensions must be equal.

For example, when the first input tensor has size (b * n * m) and the second has size (b * m * p), the tensor produced by bmm has size (b * n * p).
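The shape rule can be checked directly. This sketch picks b = 2, n = 3, m = 4, p = 5 purely for illustration, and also shows that bmm raises a RuntimeError when either the batch sizes or the inner dimensions do not match (the exact error text varies between releases).

```python
import torch

# Illustrative sizes: batch b, rows n, inner dimension m, columns p.
b, n, m, p = 2, 3, 4, 5
batch1 = torch.randn(b, n, m)  # (b * n * m)
batch2 = torch.randn(b, m, p)  # (b * m * p)

out = torch.bmm(batch1, batch2)
print(out.shape)  # torch.Size([2, 3, 5]), i.e. (b * n * p)

# Each pair below breaks one of the shape rules, so bmm rejects it.
bad_pairs = {
    "batch sizes differ": (batch1, torch.randn(b + 1, m, p)),
    "inner dimensions differ": (batch1, torch.randn(b, m + 1, p)),
}
rejected = []
for reason, (x, y) in bad_pairs.items():
    try:
        torch.bmm(x, y)
    except RuntimeError as err:
        rejected.append(reason)
        print(f"{reason}: {err}")
```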

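Each output matrix is the product of the matrices at the same position in the two batches:

$$\text{out}_i = A_i \mathbin{@} B_i$$

where $A_i$ and $B_i$ are the i-th matrices of the first and second input tensors, and @ denotes matrix multiplication. The bmm matrix multiplication does not support broadcasting. When you need matrix multiplication that supports broadcasting, use the torch.matmul() function instead.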
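To see the per-matrix definition and the broadcasting limitation in practice, the sketch below compares torch.bmm with an explicit loop over the batch and then pairs a batch with a single shared 2-D matrix. The shapes are arbitrary, and a small tolerance is used because different code paths may round floating-point results slightly differently.

```python
import torch

batch1 = torch.randn(4, 2, 3)
batch2 = torch.randn(4, 3, 5)

# out_i = A_i @ B_i: multiply the i-th matrices of the two batches.
out = torch.bmm(batch1, batch2)
looped = torch.stack([batch1[i] @ batch2[i] for i in range(batch1.size(0))])
print(torch.allclose(out, looped, atol=1e-6))  # True

# bmm does not broadcast: a single (3 x 5) matrix cannot be paired with the batch.
shared = torch.randn(3, 5)
try:
    torch.bmm(batch1, shared)
    bmm_broadcasts = True
except RuntimeError as err:
    bmm_broadcasts = False
    print("torch.bmm rejected the 2-D operand:", err)

# torch.matmul broadcasts the shared matrix across all 4 batch entries.
broadcast = torch.matmul(batch1, shared)
print(broadcast.shape)  # torch.Size([4, 2, 5])
```

The loop makes the definition explicit, but a single bmm call avoids Python-level iteration over the batch.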

PyTorch bmm function

The bmm function can be called with the following syntax:

`torch.bmm(input, mat2, *, out=None)`

The parameters used in the above syntax are described below in detail:

- input: The first tensor, a batch of matrices of size (b * n * m), each of which is multiplied by the matching matrix of the second batch.

- mat2: The second tensor, a batch of matrices of size (b * m * p) by which the matrices of the first batch are multiplied.

- deterministic (older releases only): An optional Boolean parameter whose default value, False, selects a faster non-deterministic calculation. Setting it to True selects a slower, deterministic calculation. This argument was available only for sparse-dense CUDA bmm and is not accepted by current releases.

- out: An optional, keyword-only tensor that receives the output of the matrix multiplication of input and mat2.
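As a short illustration of the out parameter, this sketch preallocates a result tensor of the expected (b * n * p) shape and lets bmm write into it; the shapes are illustrative. Comparing data pointers confirms that the returned tensor shares memory with the one passed as out.

```python
import torch

batch1 = torch.randn(3, 2, 4)
batch2 = torch.randn(3, 4, 6)

# Preallocate a tensor with the expected (b * n * p) = (3 * 2 * 6) shape.
result = torch.empty(3, 2, 6)
returned = torch.bmm(batch1, batch2, out=result)

# The returned tensor uses the same memory as the out tensor.
print(returned.data_ptr() == result.data_ptr())  # True
print(torch.allclose(result, torch.bmm(batch1, batch2), atol=1e-6))  # True
```

Reusing a preallocated output like this can avoid allocating a new tensor on every call inside a loop.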

PyTorch bmm Code Example

Example #1

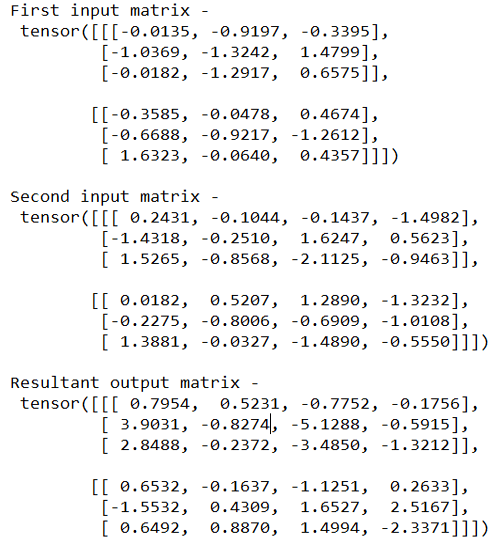

Let us understand bmm matrix multiplication with the help of a simple example, where we create two random-valued 3-dimensional tensors, multiply them with bmm, and print the resulting tensor:

Code:

```python
import torch

# Two batches of matrices with 3-dimensional sizes (2 * 3 * 3) and (2 * 3 * 4)
sampleEducbaMatrix1 = torch.randn(2, 3, 3)
sampleEducbaMatrix2 = torch.randn(2, 3, 4)

print("First input matrix - \n", sampleEducbaMatrix1)
print("\nSecond input matrix - \n", sampleEducbaMatrix2)

# The result has size (2 * 3 * 4)
print("\nResultant output matrix - \n", torch.bmm(sampleEducbaMatrix1, sampleEducbaMatrix2))
```

Running the code prints the two input tensors followed by the resulting tensor of size (2 * 3 * 4). Because the tensors are generated randomly, the values of the input and output matrices will differ on every run.

Example #2

Now, let us consider one example where we will observe the change in the size of the resulting tensor depending on the input tensor matrices –

Code:

```python
import torch

sampleInputMatrix1 = torch.randn(10, 3, 4)
sampleInputMatrix2 = torch.randn(10, 4, 5)

sampleOutputMatrix = torch.bmm(sampleInputMatrix1, sampleInputMatrix2)

print(sampleOutputMatrix.size())
```

After executing the above code snippet, we get the following output: `torch.Size([10, 3, 5])`

Example #3

Let's take one more example, using the same sizes with different tensor names, to confirm how the size of the resulting batch follows from the sizes of the input batches.

Code:

```python
import torch

educbaBatchTensor1 = torch.randn(10, 3, 4)
educbaBatchTensor2 = torch.randn(10, 4, 5)

resultingTensor = torch.bmm(educbaBatchTensor1, educbaBatchTensor2)

print(resultingTensor.size())
```

After the execution of the above program, we get the following output: `torch.Size([10, 3, 5])`

In both Examples 2 and 3, the first input tensor has size (b * n * m) = (10 * 3 * 4) and the second has size (b * m * p) = (10 * 4 * 5), so the resulting tensor has size (b * n * p) = (10 * 3 * 5).

Difference between PyTorch mm and bmm

Let us understand the difference between the two PyTorch functions mm and bmm with the help of the following comparison table:

| torch.mm() | torch.bmm() |
| --- | --- |
| Multiplies a matrix of size (m * n) by a matrix of size (n * p), giving a result of size (m * p). | Multiplies tensors of size (b * n * m) and (b * m * p), where b is the batch size, giving a result of size (b * n * p). |
| Used only when both inputs are 2-dimensional. | Used only when both inputs are 3-dimensional. |
| The first dimensions of the two matrices need not be equal; only the inner dimension (n) must match. | The first (batch) dimension of both inputs must be equal, and the inner dimension (m) must match. |
| Does not support broadcasting, where tensors of different shapes are allowed and the smaller one is expanded to fit the larger. | Does not support broadcasting either. |
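The comparison can be illustrated with a small sketch (shapes chosen arbitrarily): torch.mm works on a single pair of 2-D matrices, a batch of one passed through torch.bmm gives the same values once the batch dimension is removed, and torch.mm raises a RuntimeError for 3-D inputs.

```python
import torch

mat_a = torch.randn(2, 3)
mat_b = torch.randn(3, 4)

single = torch.mm(mat_a, mat_b)
print(single.shape)  # torch.Size([2, 4])

# unsqueeze(0) adds a batch dimension of size 1: (1 * 2 * 3) and (1 * 3 * 4).
batched = torch.bmm(mat_a.unsqueeze(0), mat_b.unsqueeze(0))
print(batched.shape)  # torch.Size([1, 2, 4])
print(torch.allclose(batched[0], single, atol=1e-6))  # True

# torch.mm only accepts 2-D tensors, so the batched inputs are rejected.
try:
    torch.mm(mat_a.unsqueeze(0), mat_b.unsqueeze(0))
    mm_accepts_3d = True
except RuntimeError as err:
    mm_accepts_3d = False
    print("torch.mm rejected 3-D inputs:", err)
```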

Conclusion

PyTorch bmm is used for the matrix multiplication of batches where the tensors are 3-dimensional. The first (batch) dimension of both tensors being multiplied must be the same, and the last dimension of the first tensor must match the middle dimension of the second. The bmm matrix multiplication does not support broadcasting; use torch.matmul() when broadcasting is needed.
