Updated April 6, 2023

Introduction to PyTorch CNN

PyTorch is an open-source deep learning library for Python. (Its companion library, PyTorch Geometric, extends it to irregular input data such as point clouds and graphs.) CNN stands for Convolutional Neural Network, and with PyTorch we can build a CNN-based image classification model to suit our requirements. CNNs handle both simple image classification and more advanced tasks such as object detection, which makes them an important tool for working with image data in many scientific fields. Basic neural networks can also solve image classification problems with deep learning, but they have limitations, and CNNs were developed to overcome them.

PyTorch CNN Overview

Basic neural networks are always a good starting point when we tackle an image classification problem with deep learning. However, they have limitations, and the model's performance stops improving after a certain point. This is where convolutional neural networks (CNNs) changed the landscape and helped us build better models. They are ubiquitous in computer vision applications, and they are a concept every computer vision enthusiast should pick up quickly. Deep learning is a branch of machine learning and is considered one of the most significant advances researchers have made in decades. Examples of deep learning applications include image recognition and speech recognition.

There are two main types of deep neural networks as follows:

- Convolutional Neural Networks

- Recurrent Neural Networks

Convolutional neural networks are designed to process data through multiple layers of arrays. This kind of neural network is used in applications such as image recognition and face recognition. The main difference between a CNN and other conventional neural networks is that a CNN takes its input as a two-dimensional array and operates directly on the images, rather than relying on a separate feature extraction step, as other neural networks do.
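As a minimal sketch of this difference, assuming a single grayscale 28x28 image filled with random values (the image size and the channel and output counts below are arbitrary choices), a PyTorch convolutional layer consumes the 2-D grid directly, whereas a fully connected layer needs it flattened first:

```python
import torch
import torch.nn as nn

# One grayscale 28x28 "image" with random stand-in pixel values.
# PyTorch convolution layers expect input shaped (batch, channels, height, width).
image = torch.rand(1, 1, 28, 28)

# A convolutional layer works on the 2-D grid directly; no flattening needed.
conv = nn.Conv2d(in_channels=1, out_channels=4, kernel_size=3)
feature_maps = conv(image)
print(feature_maps.shape)  # torch.Size([1, 4, 26, 26]): 4 maps that keep a 2-D layout

# A conventional fully connected layer needs the image flattened to a vector first.
fc = nn.Linear(28 * 28, 4)
fc_out = fc(image.flatten(start_dim=1))
print(fc_out.shape)  # torch.Size([1, 4]): no spatial layout left
```

The convolution output is 26x26 because a 3x3 filter without padding fits 28 - 3 + 1 = 26 times along each axis.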

Every convolutional neural network is built on a few basic ideas:

- Local receptive fields: A CNN exploits the spatial relationships within the input data. Each neuron in a hidden layer is connected only to a small region of neurons in the previous layer; this region is called the neuron's local receptive field.

- Convolution: Each connection from a receptive field to a hidden neuron has a learned weight, and the same weights are reused as the field is shifted step by step across the input. This sliding operation is called convolution, and the resulting mapping from the input layer to the hidden layer is called a feature map (the reused weights and bias are known as shared weights and a shared bias).

- Pooling: A CNN also uses pooling layers, which take a feature map produced by the previous layer as input and produce a condensed (downsampled) feature map.
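The three ideas can be checked in a few lines of PyTorch. This is a sketch under arbitrary assumptions (an 8x8 random input, one 3x3 filter, 2x2 max pooling): the filter's parameter count does not depend on the input size (shared weights), one output value is reproduced by hand from a single 3x3 patch (local receptive field), and pooling condenses the feature map.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.rand(1, 1, 8, 8)  # one 8x8 single-channel input (arbitrary values)

# Convolution: one 3x3 filter whose 9 weights + 1 bias are shared by every position.
conv = nn.Conv2d(1, 1, kernel_size=3)
num_params = sum(p.numel() for p in conv.parameters())
print(num_params)  # 10, regardless of how large the input is

fmap = conv(x)  # feature map of shape (1, 1, 6, 6)

# Local receptive field: output (0, 0) depends only on the top-left 3x3 patch.
patch = x[0, 0, 0:3, 0:3]
manual = (patch * conv.weight[0, 0]).sum() + conv.bias[0]
print(torch.allclose(fmap[0, 0, 0, 0], manual, atol=1e-6))  # True

# Pooling: 2x2 max pooling condenses the 6x6 feature map to 3x3.
pooled = nn.MaxPool2d(kernel_size=2)(fmap)
print(pooled.shape)  # torch.Size([1, 1, 3, 3])
```

Note that PyTorch's `Conv2d` computes a cross-correlation (the kernel is not flipped), which is why the hand computation multiplies the patch by the weights directly.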

Why Do We Need PyTorch CNN?

The following points explain why we need PyTorch CNN:

- PyTorch is an optimized tensor library used primarily for deep learning applications on GPUs and CPUs. It is an open-source machine learning library for Python, originally developed by Facebook's AI Research group. It is one of the most widely used machine learning libraries, alongside TensorFlow and Keras, and according to Google Search Trends, PyTorch's popularity is relatively high compared with TensorFlow and Keras. PyTorch is built on Python and the Torch library, and it supports tensor computation on Graphics Processing Units.

- CNNs are used for image classification and recognition because of their high accuracy. The approach was proposed by computer scientist Yann LeCun in the late 1980s (LeNet-5 followed in 1998), inspired by the way humans visually recognize objects. We can therefore think of CNNs as feature extractors that help extract features from images.

- In a simple fully connected neural network, we flatten a 3-dimensional image into a single dimension. A 1-D and a 2-D representation of the same image contain exactly the same pixel values, but only the 2-D arrangement preserves the spatial structure that makes the image recognizable, and CNNs work on that structure directly.
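A tiny sketch of the flattening point, assuming a made-up 3x4 "image" whose pixels are simply the numbers 0 to 11: flattening loses no values, but pixels that touch vertically end up far apart in the 1-D vector. The helper `flat_index` is a hypothetical name used only for this illustration.

```python
import torch

# A tiny 3x4 stand-in "image" whose pixel values are 0..11.
img_2d = torch.arange(12.0).reshape(3, 4)

# Flattening, as a plain fully connected network requires, keeps every value...
img_1d = img_2d.flatten()
print(img_1d.tolist())  # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0]

# ...and reshaping recovers the grid exactly, so no pixel information is lost.
print(torch.equal(img_1d.reshape(3, 4), img_2d))  # True

def flat_index(row, col, width):
    """Position of pixel (row, col) in the row-major flattened vector."""
    return row * width + col

# But vertical neighbours drift apart: (0, 1) and (1, 1) touch in 2-D,
# yet sit `width` = 4 positions apart in 1-D.
gap = flat_index(1, 1, width=4) - flat_index(0, 1, width=4)
print(gap)  # 4
```

For a real 224-pixel-wide image the same gap would be 224 positions, which is why a flattened input makes spatial patterns hard for a plain network to exploit.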

PyTorch CNN Model

A CNN is a deep learning model for processing data that has a grid pattern, such as images. It is inspired by the organization of the animal visual cortex [11, 16] and is designed to automatically and adaptively learn spatial hierarchies of features, from low-level to high-level patterns.
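A hypothetical three-stage feature extractor illustrates the low-to-high hierarchy: each stage (a 3x3 convolution, ReLU and 2x2 max pooling, chosen here for illustration) halves the spatial size while increasing the number of feature channels. The 3x32x32 input, the helper name `conv_block`, and the channel counts are assumptions, not part of any specific published model.

```python
import torch
import torch.nn as nn

def conv_block(in_ch, out_ch):
    """3x3 convolution (padding keeps the size) + ReLU + 2x2 max pooling (halves the size)."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.MaxPool2d(2),
    )

# Early stages see small local patterns; later stages combine them over larger regions.
stages = nn.ModuleList([conv_block(3, 16), conv_block(16, 32), conv_block(32, 64)])

x = torch.rand(1, 3, 32, 32)  # one random 3-channel 32x32 input
shapes = []
for stage in stages:
    x = stage(x)
    shapes.append(tuple(x.shape))
print(shapes)  # [(1, 16, 16, 16), (1, 32, 8, 8), (1, 64, 4, 4)]
```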

First, we import the required libraries.

Code:

```python
import numpy as np
import torch
import torch.nn as nn                              # layers such as Conv2d and Linear
import torch.optim as optim                        # optimizers such as SGD and Adam
from torch.utils.data import Dataset, DataLoader   # dataset and batching utilities
```

Now we define input data in one, two, and three dimensions to represent an image.

Code:

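```python
# Python identifiers cannot begin with a digit, so the tensors are named input_1d, etc.
input_1d = torch.tensor([1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12], dtype=torch.float)  # shape (12,)

input_2d = torch.tensor([[1, 2, 3, 4, 5, 7], [6, 7, 8, 9, 10, 11]], dtype=torch.float)  # shape (2, 6)

# Every row must have the same length, otherwise torch.tensor raises an error.
input_3d = torch.tensor([[[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]], dtype=torch.float)  # shape (1, 3, 4)
```

Explanation: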

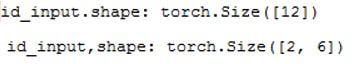

- In the above example, we specify the different input types with different dimensions, as shown.

- The final output of the above input data is shown in the following screenshot as follows.

Output:

So in this way, we can also see the 3-D output. So in this way, we can implement the CNN model. We also need to train the database.
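A minimal sketch of implementing and training such a model with the libraries imported above. The `SimpleCNN` architecture, the hypothetical `RandomImages` dataset (random 28x28 images with random labels standing in for a real dataset), and the hyperparameters are all illustrative assumptions; with random labels the loss will not meaningfully improve, so swap in a real dataset to see actual learning.

```python
import math
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader

torch.manual_seed(0)

class RandomImages(Dataset):
    """Hypothetical stand-in dataset: random 1x28x28 images with labels 0-9."""
    def __init__(self, n=64):
        self.images = torch.rand(n, 1, 28, 28)
        self.labels = torch.randint(0, 10, (n,))

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        return self.images[idx], self.labels[idx]

class SimpleCNN(nn.Module):
    def __init__(self, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),   # -> 8x14x14
            nn.Conv2d(8, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),  # -> 16x7x7
        )
        self.classifier = nn.Linear(16 * 7 * 7, num_classes)  # fully connected output layer

    def forward(self, x):
        x = self.features(x)
        return self.classifier(x.flatten(start_dim=1))

model = SimpleCNN()
loader = DataLoader(RandomImages(), batch_size=16, shuffle=True)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

epoch_losses = []
for epoch in range(2):
    total = 0.0
    for images, labels in loader:
        optimizer.zero_grad()                     # clear gradients from the previous step
        loss = criterion(model(images), labels)   # forward pass and loss
        loss.backward()                           # backpropagation
        optimizer.step()                          # update the weights
        total += loss.item()
    epoch_losses.append(total / len(loader))
    print(f"epoch {epoch}: mean loss {epoch_losses[-1]:.4f}")
```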

Types of CNN

Given below are the types of CNN:

1. LeNet

LeNet is a convolutional neural network architecture proposed by Yann LeCun et al. in 1989. In general, LeNet refers to LeNet-5, a simple convolutional neural network. Convolutional neural networks are a kind of feed-forward neural network whose artificial neurons respond to a portion of the surrounding cells within their coverage range, and they perform well in large-scale image processing.
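A LeNet-5-style network can be sketched in PyTorch as below, assuming 1x32x32 inputs and 10 classes. It keeps the classic layer sizes (6 and 16 feature maps from 5x5 convolutions, then 120, 84 and 10 units) but simplifies the original design: subsampling is plain average pooling, the second convolution connects to all input maps, and the output is an ordinary linear layer.

```python
import torch
import torch.nn as nn

class LeNet5(nn.Module):
    """LeNet-5-style network for 1x32x32 inputs (simplified, see lead-in)."""
    def __init__(self, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5),   # C1: 6x28x28
            nn.Tanh(),
            nn.AvgPool2d(2),                  # S2: 6x14x14
            nn.Conv2d(6, 16, kernel_size=5),  # C3: 16x10x10
            nn.Tanh(),
            nn.AvgPool2d(2),                  # S4: 16x5x5
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(16 * 5 * 5, 120),  # C5
            nn.Tanh(),
            nn.Linear(120, 84),          # F6
            nn.Tanh(),
            nn.Linear(84, num_classes),  # output scores
        )

    def forward(self, x):
        return self.classifier(self.features(x))

model = LeNet5()
out = model(torch.rand(1, 1, 32, 32))
print(out.shape)  # torch.Size([1, 10])
print(sum(p.numel() for p in model.parameters()))  # 61706
```

At about 62 thousand parameters, this network is tiny by modern standards.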

2. AlexNet

AlexNet, the winner of the ImageNet ILSVRC-2012 competition, reduced the top-5 error rate to 15.3%, compared with 26.2% for the runner-up. The network is similar to the LeNet architecture, but it has far more filters (channels) than the original LeNet, which allowed it to classify among a large number of object categories.
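The top-5 error rate used in these ImageNet results counts a prediction as correct when the true label appears among the model's five highest-scoring classes. A small sketch of the metric follows; the function name `top_k_error` and the scores for three samples and seven classes are made up for illustration.

```python
import torch

def top_k_error(logits, targets, k=5):
    """Fraction of samples whose true label is NOT among the k highest-scoring classes."""
    topk = logits.topk(k, dim=1).indices             # (N, k) predicted class indices
    hit = (topk == targets.unsqueeze(1)).any(dim=1)  # True if the label is in the top k
    return 1.0 - hit.float().mean().item()

# Made-up scores for 3 samples over 7 classes.
logits = torch.tensor([
    [0.1, 0.9, 0.3, 0.2, 0.0, 0.5, 0.4],  # label 1 is ranked 1st -> hit
    [0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1],  # label 6 is ranked 7th -> miss
    [0.2, 0.1, 0.3, 0.4, 0.5, 0.6, 0.7],  # label 2 is ranked 5th -> hit
])
targets = torch.tensor([1, 6, 2])

print(top_k_error(logits, targets, k=5))  # about 0.333: 1 of 3 samples missed
print(top_k_error(logits, targets, k=1))  # about 0.667: only sample 0 is right at top-1
```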

3. VGGNet 16

This network architecture was the runner-up in the ILSVRC-2014 classification competition, achieving a top-5 error rate of about 7.3%. Although it may look complicated, with a huge number of parameters to deal with, it is actually very simple.
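The simplicity is easy to see in code: VGG-16's convolutional part is the same 3x3 convolution plus ReLU repeated, with 2x2 max pooling between stages. This sketch builds only that convolutional part from a configuration list (the three fully connected classifier layers are omitted, and the names `VGG16_CFG` and `make_vgg_features` are hypothetical):

```python
import torch
import torch.nn as nn

# Numbers are output channels of 3x3 convolutions; "M" marks 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def make_vgg_features(cfg, in_channels=3):
    layers = []
    for v in cfg:
        if v == "M":
            layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
        else:
            # padding=1 keeps height and width unchanged, so only pooling shrinks the map
            layers += [nn.Conv2d(in_channels, v, kernel_size=3, padding=1), nn.ReLU(inplace=True)]
            in_channels = v
    return nn.Sequential(*layers)

features = make_vgg_features(VGG16_CFG)
num_convs = sum(isinstance(m, nn.Conv2d) for m in features)
print(num_convs)  # 13 (with 3 fully connected layers: 16 weight layers)

# Five pooling stages shrink a 224x224 input by 2**5 = 32, giving 7x7.
with torch.no_grad():
    out = features(torch.rand(1, 3, 224, 224))
print(out.shape)  # torch.Size([1, 512, 7, 7])
```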

4. GoogLeNet

GoogLeNet, also called the Inception network, won the ILSVRC-2014 competition with a top-5 error rate of 6.67%, which was close to human-level performance. The model was developed by Google and is a more elaborate refinement of the original LeNet architecture. It is built around the idea of the Inception module.
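An Inception module runs several convolutions of different sizes in parallel on the same input and concatenates their outputs along the channel dimension, with 1x1 convolutions reducing the channel count before the more expensive 3x3 and 5x5 convolutions. Below is a simplified sketch of a single module (the rest of the network is omitted, and the class name `InceptionModule` is hypothetical), using the channel sizes reported for GoogLeNet's inception (3a) block:

```python
import torch
import torch.nn as nn

class InceptionModule(nn.Module):
    """Four parallel branches whose outputs are concatenated along the channel axis."""
    def __init__(self, in_ch, c1, c3_reduce, c3, c5_reduce, c5, pool_proj):
        super().__init__()
        self.branch1 = nn.Sequential(nn.Conv2d(in_ch, c1, 1), nn.ReLU())
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_ch, c3_reduce, 1), nn.ReLU(),          # 1x1 "reduce" conv cuts channels
            nn.Conv2d(c3_reduce, c3, 3, padding=1), nn.ReLU())
        self.branch5 = nn.Sequential(
            nn.Conv2d(in_ch, c5_reduce, 1), nn.ReLU(),
            nn.Conv2d(c5_reduce, c5, 5, padding=2), nn.ReLU())
        self.branch_pool = nn.Sequential(
            nn.MaxPool2d(3, stride=1, padding=1),
            nn.Conv2d(in_ch, pool_proj, 1), nn.ReLU())

    def forward(self, x):
        # Every branch preserves height and width, so outputs can be stacked on dim 1.
        return torch.cat([self.branch1(x), self.branch3(x),
                          self.branch5(x), self.branch_pool(x)], dim=1)

block = InceptionModule(192, 64, 96, 128, 16, 32, 32)
out = block(torch.rand(1, 192, 28, 28))
print(out.shape)  # torch.Size([1, 256, 28, 28]): 64 + 128 + 32 + 32 channels
```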

Two Additional Layers

Besides the layers we have already seen, a CNN also provides two additional layers:

1. Fully Connected Layer

Neurons in this layer are fully connected to all neurons in the preceding and succeeding layers, as in a regular fully connected neural network. This is why its output can be computed as a matrix multiplication followed by a bias offset. The FC layer maps the representation between the input and the output.
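A quick check of the matrix-multiplication view, assuming an arbitrary layer with 4 inputs and 3 outputs: PyTorch's `nn.Linear` stores one weight per input-output connection and computes `x @ W.T + b`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
fc = nn.Linear(in_features=4, out_features=3)  # every output is connected to every input
x = torch.rand(2, 4)                            # a batch of 2 flattened feature vectors

manual = x @ fc.weight.T + fc.bias              # matrix multiplication plus bias offset
print(torch.allclose(fc(x), manual))            # True
print(fc.weight.shape)                          # torch.Size([3, 4]): one weight per connection
```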

2. Non-Linearity Layers

Since convolution is a linear operation and images are far from linear, non-linearity layers are often placed directly after a convolutional layer to introduce non-linearity into the activation map.

Common types of non-linearity layers include:

- Sigmoid

- Tanh

- ReLU
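All three are available in PyTorch both as functions (`torch.sigmoid`, `torch.tanh`, `torch.relu`) and as modules (`nn.Sigmoid`, `nn.Tanh`, `nn.ReLU`). Here is a short sketch on three sample values, followed by a ReLU placed directly after a convolution (the layer sizes are arbitrary):

```python
import torch
import torch.nn as nn

x = torch.tensor([-2.0, 0.0, 2.0])
print(torch.sigmoid(x))  # approx. [0.1192, 0.5, 0.8808]: squashes into (0, 1)
print(torch.tanh(x))     # approx. [-0.9640, 0.0, 0.9640]: squashes into (-1, 1)
print(torch.relu(x))     # [0.0, 0.0, 2.0]: negatives become zero

# A non-linearity placed directly after a convolution.
block = nn.Sequential(nn.Conv2d(1, 4, kernel_size=3), nn.ReLU())
act = block(torch.randn(1, 1, 8, 8))
print((act >= 0).all().item())  # True: ReLU zeroes every negative value
```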

Conclusion

In this article, we have learned the essential ideas behind PyTorch CNN and seen how a CNN is represented in PyTorch, with examples. We also saw how and when to use PyTorch CNN.

Recommended Articles

We hope that this EDUCBA information on “PyTorch CNN” was beneficial to you. You can view EDUCBA’s recommended articles for more information.