Updated April 6, 2023

Introduction to PyTorch GAN

GAN stands for generative adversarial network. A GAN uses two networks, a generator and a discriminator. With a GAN built in PyTorch, we can produce synthetic data that closely resembles real training data. For example, we can generate fake images of objects such as animals or people. PyTorch does not provide a ready-made GAN module, but its building blocks (layers, optimizers, and automatic differentiation) let us assemble a GAN that fits our requirements.

What is PyTorch GAN?

A generative adversarial network (GAN) is a class of machine learning frameworks devised in 2014 by Ian Goodfellow and his colleagues. Two neural networks, the generator and the discriminator, compete with each other as in a game. Given a training set, this technique learns to generate new data with the same statistics as the training set.

GANs are a framework for teaching a deep learning model to capture the distribution of the training data, so that we can generate new data from that same distribution. They were first described in the paper Generative Adversarial Nets. A GAN is made of two distinct models, a generator and a discriminator. The job of the generator is to produce "fake" images that look like the training images. The job of the discriminator is to look at an image and output whether it is a real training image or a fake image from the generator.

During training, the generator constantly tries to outsmart the discriminator by producing better and better fakes, while the discriminator works to become a better detective and correctly classify real and fake images. The equilibrium of this game is reached when the generator produces perfect fakes that look as if they came directly from the training data, and the discriminator is left to guess, with 50% confidence, whether the generator's output is real or fake.
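The game described above is usually written as the minimax objective from the original GAN paper. Here D(x) is the discriminator's estimated probability that x is real, G(z) is the generator's output for a noise vector z, p_data is the data distribution, and p_z is the noise distribution:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

For a fixed generator whose samples follow a distribution p_g, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). When the generator matches the data distribution exactly (p_g = p_data), this equals 1/2 for every x, which is the 50% guess mentioned above.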

Two neural networks

A GAN is made of the following two neural networks.

1. Discriminator

This is a classifier that examines a data instance and tries to tell whether it is real data or fake data created by the generator. It is trained on real data instances, which serve as positive examples, and on fake instances from the generator, which serve as negative examples.

The discriminator uses a loss function that penalizes it for misclassifying a real instance as fake or a fake instance as real. In each training iteration, the discriminator updates its weights through backpropagation based on this discriminator loss, and so becomes better at identifying fake data instances.
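The discriminator update described above can be sketched in PyTorch as follows. This is a minimal illustration rather than the article's own code: the tiny fully connected networks D and G, the 2-dimensional toy "real" data, and the hyperparameters are all assumptions chosen to keep the example small. Real samples get target 1, fake samples target 0, and nn.BCELoss penalizes both kinds of misclassification.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

latent_dim, data_dim, batch = 8, 2, 16  # toy sizes (assumed for illustration)

# Hypothetical tiny networks: G maps noise to 2-D points, D outputs P(real).
G = nn.Sequential(nn.Linear(latent_dim, 16), nn.ReLU(), nn.Linear(16, data_dim))
D = nn.Sequential(nn.Linear(data_dim, 16), nn.LeakyReLU(0.2),
                  nn.Linear(16, 1), nn.Sigmoid())

criterion = nn.BCELoss()
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))

real = torch.randn(batch, data_dim) + 3.0          # stand-in for real training data
fake = G(torch.randn(batch, latent_dim)).detach()  # detach: no gradient reaches G

opt_d.zero_grad()
# Penalize calling real samples fake (target 1) and fake samples real (target 0).
loss_real = criterion(D(real), torch.ones(batch, 1))
loss_fake = criterion(D(fake), torch.zeros(batch, 1))
loss_d = loss_real + loss_fake
loss_d.backward()  # gradients are computed for D's weights only
opt_d.step()       # update the discriminator

print(f"discriminator loss: {loss_d.item():.4f}")
```

Detaching the fake batch is the design choice that matters here: the discriminator step should not change the generator, so no gradient is allowed to flow back into G.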

2. Generator

The generator learns to create fake data by incorporating feedback from the discriminator. Its goal is to make the discriminator classify its output as real.

To train the generator, you need to connect it tightly to the discriminator. Training involves taking random input (noise), transforming it into a data instance, feeding that instance to the discriminator to get a classification, and computing the generator loss, which penalizes the generator when the discriminator correctly identifies its output as fake.
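To see how the generator loss penalizes a correct judgment by the discriminator, consider a common formulation, the one suggested in the original GAN paper: the generator's fakes are given target 1 ("real") and binary cross-entropy is applied to the discriminator's output, so the loss equals -log D(G(z)). The probabilities below are made-up values for illustration.

```python
import torch
import torch.nn as nn

criterion = nn.BCELoss()
target_real = torch.ones(1)  # the generator wants its fakes judged "real"

# Hypothetical discriminator outputs: P(real) assigned to one generated sample.
for p in (0.1, 0.5, 0.9):
    d_out = torch.tensor([p])
    loss_g = criterion(d_out, target_real)  # equals -log(p)
    print(f"D(G(z)) = {p:.1f} -> generator loss = {loss_g.item():.3f}")
```

The loss is large (about 2.3) when the discriminator confidently rejects the fake, and small (about 0.1) when the discriminator is fooled.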

Now let's look at how the discriminator is used when training the generator.

The usual way to train a standard neural network is to adjust its weights during backpropagation so as to minimize the loss function. In a GAN, however, the generator's output feeds into the discriminator, and the generator loss measures how badly the generator fails to fool the discriminator.

This must be taken into account during backpropagation: the gradient has to start at the output and flow back through the discriminator into the generator. Therefore, the discriminator is kept fixed while the generator is being trained.

To train the generator, use the following general procedure:

- Sample random noise and use it to produce generator output.

- Get the discriminator's classification of that generated output.

- Compute the generator loss from the discriminator's classification.

- Backpropagate through both the discriminator and the generator to obtain gradients.

- Use these gradients to update only the generator's weights.

This ensures that with each training iteration, the generator gets better at producing outputs that fool the current version of the discriminator.
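The procedure above can be sketched as a single generator update. As before, the tiny networks, sizes, and learning rate are assumptions for illustration. Calling requires_grad_(False) on the discriminator is one way to keep it static: gradients still flow through its layers back into the generator, but its own weights receive no gradients, and only the generator's optimizer takes a step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

latent_dim, data_dim, batch = 8, 2, 16  # toy sizes (assumed for illustration)

# Hypothetical tiny generator and discriminator.
G = nn.Sequential(nn.Linear(latent_dim, 16), nn.ReLU(), nn.Linear(16, data_dim))
D = nn.Sequential(nn.Linear(data_dim, 16), nn.LeakyReLU(0.2),
                  nn.Linear(16, 1), nn.Sigmoid())

criterion = nn.BCELoss()
opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))

d_before = [p.detach().clone() for p in D.parameters()]  # snapshot of D's weights

D.requires_grad_(False)                         # keep the discriminator static
opt_g.zero_grad()
z = torch.randn(batch, latent_dim)              # 1. sample random noise
fake = G(z)                                     #    and produce generator output
pred = D(fake)                                  # 2. discriminator's classification
loss_g = criterion(pred, torch.ones(batch, 1))  # 3. generator loss (fakes should look real)
loss_g.backward()                               # 4. backprop through D into G
opt_g.step()                                    # 5. update only G's weights
D.requires_grad_(True)                          # unfreeze D for its own next update

print(f"generator loss: {loss_g.item():.4f}")
```

In a full training loop this step alternates with the discriminator step, so each network is trained against the current version of the other.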

PyTorch GAN code example

Now let's walk through an example GAN to make these ideas concrete.

First, we import the packages we need.

```python
import argparse

import numpy as np
import torch
import torch.nn as tnn
import torch.optim as optm
from torch.utils.tensorboard import SummaryWriter
from torchvision import datasets, transforms
from torchvision.utils import make_grid, save_image
```

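Next, we load the training images and preprocess them. datasets.ImageFolder expects the images to be stored in subdirectories of the root folder (here anime), one subdirectory per class. Each image is resized to 64×64, converted to a tensor, and normalized with a mean and standard deviation of 0.5 per channel, which maps pixel values from [0, 1] to [-1, 1].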

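```python
batch_size = 128  # batch size (value assumed for illustration)

# Resize to 64x64, convert to a tensor in [0, 1], then normalize to [-1, 1].
t_data = transforms.Compose([
    transforms.Resize((64, 64)),
    transforms.ToTensor(),
    transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]),
])

train_dataset = datasets.ImageFolder(root='anime', transform=t_data)

train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size, shuffle=True)
```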

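Next, we define a function that initializes the weights: convolution weights are drawn from a normal distribution with mean 0 and standard deviation 0.02, batch normalization weights from a normal distribution with mean 1 and standard deviation 0.02, and batch normalization biases are set to 0.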

```python
def weights_init(m):
    cname = m.__class__.__name__
    # Matches both Conv2d and ConvTranspose2d layers.
    if cname.find('Conv') != -1:
        torch.nn.init.normal_(m.weight, 0.0, 0.02)
    elif cname.find('BatchNorm') != -1:
        torch.nn.init.normal_(m.weight, 1.0, 0.02)
        torch.nn.init.zeros_(m.bias)
```
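A function like this is meant to be passed to Module.apply, which calls it on every submodule. The sketch below repeats weights_init so it runs on its own and applies it to a small made-up model (the layer sizes are assumptions) to check the resulting statistics. Because cname.find('Conv') also matches ConvTranspose2d, the generator's transposed convolutions are covered too.

```python
import torch
import torch.nn as nn

def weights_init(m):
    # Conv/ConvTranspose weights ~ N(0, 0.02); BatchNorm weights ~ N(1, 0.02), bias 0.
    cname = m.__class__.__name__
    if cname.find('Conv') != -1:
        torch.nn.init.normal_(m.weight, 0.0, 0.02)
    elif cname.find('BatchNorm') != -1:
        torch.nn.init.normal_(m.weight, 1.0, 0.02)
        torch.nn.init.zeros_(m.bias)

torch.manual_seed(0)
# Hypothetical model; sizes chosen only so the statistics are stable.
model = nn.Sequential(
    nn.ConvTranspose2d(100, 256, 4, 1, 0, bias=False),
    nn.BatchNorm2d(256),
    nn.ReLU(True),
)
model.apply(weights_init)  # visits every submodule, including the Sequential itself

conv_w = model[0].weight
bn = model[1]
print(f"conv weight mean={conv_w.mean().item():.4f}, std={conv_w.std().item():.4f}")
print(f"batchnorm weight mean={bn.weight.mean().item():.4f}")
print(f"batchnorm bias all zero: {bool((bn.bias == 0).all())}")
```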

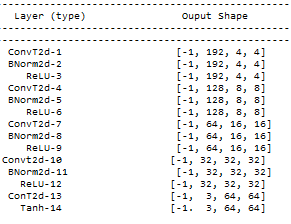

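Now we create the generator network. It uses a stack of transposed convolutions to upsample a latent noise vector of size l_dim into a 3×64×64 image, with batch normalization and ReLU between layers and Tanh at the output so that pixel values lie in [-1, 1], matching the normalization applied to the dataset.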

```python
l_dim = 100  # size of the latent noise vector (value assumed for illustration)

class Generator(tnn.Module):
    def __init__(self):
        super().__init__()
        self.main = tnn.Sequential(
            # (N, l_dim, 1, 1) -> (N, 192, 4, 4)
            tnn.ConvTranspose2d(l_dim, 32 * 6, 4, 1, 0, bias=False),
            tnn.BatchNorm2d(32 * 6),
            tnn.ReLU(True),
            # -> (N, 128, 8, 8)
            tnn.ConvTranspose2d(32 * 6, 32 * 4, 4, 2, 1, bias=False),
            tnn.BatchNorm2d(32 * 4),
            tnn.ReLU(True),
            # -> (N, 64, 16, 16)
            tnn.ConvTranspose2d(32 * 4, 32 * 2, 4, 2, 1, bias=False),
            tnn.BatchNorm2d(32 * 2),
            tnn.ReLU(True),
            # -> (N, 32, 32, 32)
            tnn.ConvTranspose2d(32 * 2, 32, 4, 2, 1, bias=False),
            tnn.BatchNorm2d(32),
            tnn.ReLU(True),
            # -> (N, 3, 64, 64), pixel values in [-1, 1]
            tnn.ConvTranspose2d(32, 3, 4, 2, 1, bias=False),
            tnn.Tanh()
        )

    def forward(self, input):
        return self.main(input)
```
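Why does this stack produce 64×64 images? For ConvTranspose2d with no dilation and no output padding, the output height is (H_in − 1) × stride − 2 × padding + kernel_size. The sketch below applies that formula layer by layer, starting from a 1×1 latent map, and cross-checks it against real ConvTranspose2d layers. The latent size of 100 and the channel count of 8 are assumptions for illustration; only the kernel, stride, and padding values come from the generator.

```python
import torch
import torch.nn as nn

def convtranspose_out(h_in, kernel, stride, padding):
    # Output size of ConvTranspose2d (dilation=1, output_padding=0).
    return (h_in - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) for the five layers of the generator above.
layer_params = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

sizes, h = [], 1  # the latent vector enters as a 1x1 spatial map
for k, s, p in layer_params:
    h = convtranspose_out(h, k, s, p)
    sizes.append(h)
print(sizes)  # [4, 8, 16, 32, 64]

# Cross-check with real layers (channel counts are arbitrary here).
x = torch.randn(1, 100, 1, 1)
for k, s, p in layer_params:
    x = nn.ConvTranspose2d(x.shape[1], 8, k, s, p, bias=False)(x)
print(tuple(x.shape))
```

The first layer (stride 1, no padding) turns the 1×1 input into a 4×4 map, and each following stride-2 layer doubles the spatial size.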

Explanation

The final output of the above program is shown in the following screenshot.

Conclusion

We hope this article has helped you learn more about PyTorch GANs. We covered the essential idea behind a GAN, the roles of its generator and discriminator, how each network is trained, and how to set up the data pipeline, weight initialization, and generator network in PyTorch.

Recommended Articles

We hope that this EDUCBA article on "PyTorch GAN" was beneficial to you. You can view EDUCBA's recommended articles for more information.