Updated April 5, 2023

Introduction to PyTorch GRU

The following article provides an outline for PyTorch GRU. A Gated Recurrent Unit (GRU) helps solve the vanishing gradient problem that commonly affects standard recurrent neural networks. A GRU uses a reset gate and an update gate to manage what it remembers, and with only these two gates the unit stays small and easy to understand. Unlike an LSTM, a GRU has no separate cell state: all information is passed along through the hidden state.

What is PyTorch GRU?

PyTorch provides Long Short-Term Memory (LSTM) layers, and GRU is closely related to both LSTM and the basic Recurrent Neural Network (RNN). Like an LSTM, a GRU can keep long-term memory of sequential data. GRU is a gating mechanism that works like an LSTM but has fewer parameters. It was introduced by Kyunghyun Cho et al. in 2014. Like an LSTM with a forget gate, it uses gates to decide which information to keep and which to discard, but it has no output gate. This helps manage the memory process.

PyTorch GRU Model

torch.nn.GRU

This class applies a multi-layer GRU to an input sequence. Several GRU layers can be stacked, and it is best to use only as many layers as the required output needs. The GRU constructor takes the following parameters.

- input_size – the number of features in each element of the input sequence.

- hidden_size – the number of features in the hidden state of the GRU.

- num_layers – the number of stacked recurrent layers. For example, with num_layers=4, the output of the first GRU is fed into the second GRU, and the same pattern continues up to the fourth, so all four GRUs are stacked together to form one. The default value is 1.

- bias – the default value is True. If False, the layers do not use the bias weights b_ih and b_hh.

- bidirectional – the default value is False. If True, the GRU becomes bidirectional and processes the sequence in both directions.

- batch_first – if True, the input and output tensors are given as (batch, sequence, feature); if False, they follow the order (sequence, batch, feature). This parameter does not apply to the hidden states. The default value is False.

- dropout – if non-zero, a dropout layer is placed on the output of each GRU layer except the last layer, with a dropout probability equal to this value. The default value is 0.
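The sketch below shows how these parameters shape the tensors that nn.GRU returns. The sizes (a batch of 8 sequences, 20 time steps, 5 features, hidden size 16) are arbitrary illustrative assumptions, not values from the article.

```python
import torch
import torch.nn as nn

# Illustrative sizes only
batch, seq_len, n_features, hidden = 8, 20, 5, 16

gru = nn.GRU(
    input_size=n_features,
    hidden_size=hidden,
    num_layers=4,        # four stacked GRU layers
    bias=True,
    batch_first=True,    # input/output laid out as (batch, sequence, feature)
    dropout=0.2,         # applied between layers, not after the last one
    bidirectional=False,
)

x = torch.randn(batch, seq_len, n_features)
output, h_n = gru(x)  # initial hidden state defaults to zeros when omitted

# output: top layer's hidden state at every time step -> (8, 20, 16)
# h_n: final hidden state of every layer -> (4, 8, 16); batch_first does not apply here
print(output.shape, h_n.shape)

# A bidirectional GRU concatenates both directions in the output
bi = nn.GRU(n_features, hidden, num_layers=1, batch_first=True, bidirectional=True)
out_bi, h_bi = bi(x)
# out_bi -> (8, 20, 32); h_bi -> (2, 8, 16), one slice per direction
print(out_bi.shape, h_bi.shape)
```

Note that h_n keeps the (num_layers * num_directions, batch, hidden) layout even when batch_first=True.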

A GRU has only one hidden state, which holds both long-term and short-term memory. Its gating mechanisms and computations decide how this memory is updated at each time step. The gates are trained to filter out unnecessary information and keep only what is useful. Each gate outputs a vector with values between 0 and 1, which is multiplied element-wise with the input-derived or hidden data: a value near 0 blocks the corresponding entry, and a value near 1 lets it pass.
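To illustrate the 0-to-1 gating idea, the snippet below squashes some made-up gate pre-activations with a sigmoid and multiplies the result element-wise with made-up hidden values. All numbers are hypothetical; in a real GRU the pre-activations come from learned weights applied to the input and hidden state.

```python
import torch

hidden = torch.tensor([2.0, -1.0, 0.5, 3.0])         # hypothetical hidden-state values
gate_logits = torch.tensor([10.0, -10.0, 0.0, 2.0])  # hypothetical gate pre-activations

gate = torch.sigmoid(gate_logits)  # every entry squashed into the range (0, 1)
filtered = gate * hidden           # element-wise product, not a matrix product

# gate is roughly [1.0, 0.0, 0.5, 0.88]: the first entry passes almost unchanged,
# the second is almost fully blocked, and the third is halved
```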

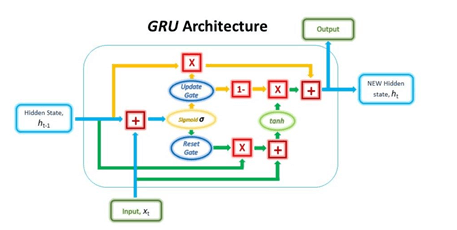

Structure of a GRU unit (Ref: www.blog.floydhub.com)

A GRU cell works in three main steps: the reset gate, the update gate, and the combination that produces the output. First, the reset gate is computed from both the current input and the previous hidden state of the GRU. Each is multiplied by its own weights, the results are summed, and the sum is passed through the sigmoid function, which maps every value into the range between 0 and 1. This lets the gate filter which parts of the previous hidden state are important.

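The gate weights are learned through backpropagation across the entire network, so the gate learns to keep only useful features. The reset vector is then multiplied element-wise with the transformed previous hidden state, the result is added to the transformed input, and the tanh function is applied to produce the candidate hidden state, that is, the new memory content.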
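For reference, this is how the PyTorch torch.nn.GRU documentation writes the reset gate $r_t$ and the candidate hidden state $n_t$, given the input $x_t$ and the previous hidden state $h_{t-1}$, where $\sigma$ is the sigmoid and $\odot$ is element-wise multiplication:

$$
\begin{aligned}
r_t &= \sigma\left(W_{ir} x_t + b_{ir} + W_{hr} h_{t-1} + b_{hr}\right) \\
n_t &= \tanh\left(W_{in} x_t + b_{in} + r_t \odot \left(W_{hn} h_{t-1} + b_{hn}\right)\right)
\end{aligned}
$$

Some references apply $r_t$ to $h_{t-1}$ before the matrix multiplication instead; that is a slightly different, also common, variant.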

The update gate is also computed from the input and the previous hidden state of the GRU and passed through a sigmoid. The difference is that the update gate and the reset gate have their own separate weights, so their outputs differ. The update gate's output is later used in an element-wise multiplication to obtain the final result. The purpose of the update gate is to determine how much of the past information should be preserved and carried forward to future time steps.
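In the same PyTorch notation, the update gate $z_t$ has the same sigmoid form as the reset gate but uses its own weights $W_{iz}$, $W_{hz}$ and biases $b_{iz}$, $b_{hz}$:

$$
z_t = \sigma\left(W_{iz} x_t + b_{iz} + W_{hz} h_{t-1} + b_{hz}\right)
$$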

In the final step, the update gate combines old and new information into the new hidden state, which is also the output at this time step. The update vector is multiplied element-wise with the previous hidden state, its complement (one minus the update vector) is multiplied element-wise with the candidate hidden state, and the two products are added to give the new hidden state.
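The complete step can be checked numerically. The sketch below recomputes one GRU step by hand, using the final update $h_t = (1 - z_t) \odot n_t + z_t \odot h_{t-1}$ as written in the PyTorch documentation, and compares it with torch.nn.GRUCell. It relies on PyTorch's convention that the gate weights are stacked in the order reset, update, new; note that some references swap the roles of $z_t$ and $1 - z_t$. The sizes are arbitrary assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_size, hidden_size, batch = 4, 3, 2
cell = nn.GRUCell(input_size, hidden_size)

x = torch.randn(batch, input_size)
h_prev = torch.randn(batch, hidden_size)

# PyTorch stacks the gate weights row-wise as (reset | update | new)
W_ir, W_iz, W_in = cell.weight_ih.chunk(3, dim=0)
W_hr, W_hz, W_hn = cell.weight_hh.chunk(3, dim=0)
b_ir, b_iz, b_in = cell.bias_ih.chunk(3)
b_hr, b_hz, b_hn = cell.bias_hh.chunk(3)

with torch.no_grad():
    r = torch.sigmoid(x @ W_ir.T + b_ir + h_prev @ W_hr.T + b_hr)     # reset gate
    z = torch.sigmoid(x @ W_iz.T + b_iz + h_prev @ W_hz.T + b_hz)     # update gate
    n = torch.tanh(x @ W_in.T + b_in + r * (h_prev @ W_hn.T + b_hn))  # candidate state
    h_manual = (1 - z) * n + z * h_prev                                # new hidden state
    h_builtin = cell(x, h_prev)

print(torch.allclose(h_manual, h_builtin, atol=1e-6))
```

If the manual formulas match PyTorch's, the comparison prints True.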

PyTorch GRU DataLoader

Let us import the necessary libraries, prepare the data, wrap it in a DataLoader, and define the model.

```python
import os
import time

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
from torch.utils.data import TensorDataset, DataLoader
from tqdm import tqdm_notebook  # progress bar for Jupyter notebooks
from sklearn.preprocessing import MinMaxScaler
```

```python
data_directory = "./data_load/"

# Preview one file (the result is displayed when run in a notebook cell)
pd.read_csv(os.path.join(data_directory, "hourly.csv")).head()

label_scalers = {}  # one target scaler per file, to invert predictions later
training_a = []     # training inputs
training_b = []     # training labels
testing_a = {}      # test inputs, keyed by file name
testing_b = {}      # test labels, keyed by file name

lookbck = 80  # number of past time steps in each input window

for file in tqdm_notebook(os.listdir(data_directory)):
    # Skip non-CSV files and hourly_est.csv
    if not file.endswith(".csv") or file == "hourly_est.csv":
        continue

    dataframe = pd.read_csv(os.path.join(data_directory, file), parse_dates=[0])

    # Calendar features derived from the Datetime column
    dataframe["hour"] = dataframe["Datetime"].dt.hour
    dataframe["dayofweek"] = dataframe["Datetime"].dt.dayofweek
    dataframe["month"] = dataframe["Datetime"].dt.month
    dataframe["dayofyear"] = dataframe["Datetime"].dt.dayofyear
    dataframe = dataframe.sort_values("Datetime").drop("Datetime", axis=1)

    # Scale every column to [0, 1]; fit a separate scaler on the target (column 0)
    scale = MinMaxScaler()
    label_scale = MinMaxScaler()
    data_load = scale.fit_transform(dataframe.values)
    label_scale.fit(dataframe.iloc[:, 0].values.reshape(-1, 1))
    label_scalers[file] = label_scale

    # Each sample is lookbck consecutive rows; its label is the next target value
    ins = np.zeros((len(data_load) - lookbck, lookbck, dataframe.shape[1]))
    labels = np.zeros(len(data_load) - lookbck)
    for k in range(lookbck, len(data_load)):
        ins[k - lookbck] = data_load[k - lookbck:k]
        labels[k - lookbck] = data_load[k, 0]
    labels = labels.reshape(-1, 1)

    # Hold out the last 10% of each file's windows for testing
    testing_part = int(0.1 * len(ins))
    if len(training_a) == 0:
        training_a = ins[:-testing_part]
        training_b = labels[:-testing_part]
    else:
        training_a = np.concatenate((training_a, ins[:-testing_part]))
        training_b = np.concatenate((training_b, labels[:-testing_part]))
    testing_a[file] = ins[-testing_part:]
    testing_b[file] = labels[-testing_part:]
```
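The windowing loop turns each scaled series into overlapping samples. The hypothetical helper make_windows below is a sketch of the same slicing applied to a tiny toy array, which makes the resulting shapes easy to check. It assumes, as the loading code does, that the target sits in column 0 and that the series is longer than the look-back.

```python
import numpy as np

def make_windows(data, lookback):
    """Split a (time, features) array into overlapping windows.

    Returns inputs of shape (time - lookback, lookback, features) and labels of
    shape (time - lookback, 1) holding column 0 of the row after each window.
    """
    n = len(data) - lookback
    if n <= 0:
        raise ValueError("series must be longer than the look-back")
    inputs = np.stack([data[j:j + lookback] for j in range(n)])
    labels = data[lookback:, 0].reshape(-1, 1)
    return inputs, labels

# Toy series: 10 time steps, 2 features, target in column 0 (row i is [2i, 2i + 1])
toy = np.arange(20, dtype=float).reshape(10, 2)
x, y = make_windows(toy, lookback=3)
# x has shape (7, 3, 2); the labels are column 0 of rows 3 to 9: 6, 8, ..., 18
```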

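```python
batch_lot = 1024

# Convert to float32 tensors to match the default dtype of nn.GRU's weights
training_data = TensorDataset(torch.from_numpy(training_a).float(),
                              torch.from_numpy(training_b).float())
training_loader = DataLoader(training_data, shuffle=True, batch_size=batch_lot, drop_last=True)

# Use the GPU when one is available
is_cuda = torch.cuda.is_available()
if is_cuda:
    device = torch.device("cuda")
else:
    device = torch.device("cpu")
```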
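To see what batch_size and drop_last do, the sketch below builds a DataLoader over random tensors shaped like the prepared windows (80 time steps and 5 features: the target plus four calendar features). The random data, the 1000 samples, and the batch size of 256 are stand-ins chosen for illustration.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

# Random stand-ins for training_a / training_b
inputs = torch.randn(1000, 80, 5)
targets = torch.randn(1000, 1)

loader = DataLoader(TensorDataset(inputs, targets), batch_size=256, shuffle=True, drop_last=True)

batch_shapes = [(xb.shape, yb.shape) for xb, yb in loader]
# 1000 // 256 = 3 full batches; drop_last=True discards the remaining 232 samples
print(len(loader))
```

With drop_last=False the loader would yield a fourth, smaller batch of 232 samples instead.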

```python
class GRUmodelNet(nn.Module):
    def __init__(self, input_dimen, hidden_dimen, output_dimen, num_layers, drop_probabl=0.2):
        super(GRUmodelNet, self).__init__()
        self.hidden_dimen = hidden_dimen
        self.num_layers = num_layers
        # Stacked GRU; batch_first=True matches the (batch, lookbck, features) windows
        self.gru = nn.GRU(input_dimen, hidden_dimen, num_layers, batch_first=True, dropout=drop_probabl)
        self.fc = nn.Linear(hidden_dimen, output_dimen)
        self.relu = nn.ReLU()

    def forward(self, a, h=None):
        # h=None lets nn.GRU start from an all-zero hidden state
        output, h = self.gru(a, h)
        # Predict from the output of the last time step only
        output = self.fc(self.relu(output[:, -1]))
        return output, h
```
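The article stops at the model definition. As a sketch of how such a model is typically trained, the snippet below runs a few optimization steps with MSE loss and Adam on random data. TinyGRURegressor is a hypothetical, compact stand-in for GRUmodelNet (same GRU, ReLU, Linear layout) so that the snippet runs on its own; the hidden size, learning rate, loss, and optimizer are assumptions rather than the article's choices.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

class TinyGRURegressor(nn.Module):
    """Hypothetical stand-in for GRUmodelNet: GRU -> ReLU -> Linear on the last step."""
    def __init__(self, input_dim, hidden_dim, num_layers):
        super().__init__()
        self.gru = nn.GRU(input_dim, hidden_dim, num_layers, batch_first=True, dropout=0.2)
        self.fc = nn.Linear(hidden_dim, 1)

    def forward(self, x, h=None):
        out, h = self.gru(x, h)
        return self.fc(torch.relu(out[:, -1])), h

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TinyGRURegressor(input_dim=5, hidden_dim=32, num_layers=2).to(device)
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Random batch: 64 windows of 80 steps x 5 features, one target value each
x = torch.randn(64, 80, 5, device=device)
y = torch.randn(64, 1, device=device)

model.train()
losses = []
for step in range(5):
    optimizer.zero_grad()
    pred, h = model(x)  # hidden state starts at zeros
    loss = criterion(pred, y)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
# pred has shape (64, 1); h has shape (num_layers, batch, hidden) = (2, 64, 32)
```

In practice the loop would iterate over training_loader for several epochs instead of reusing one random batch.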

Conclusion

Because a GRU has fewer parameters than an LSTM of the same size, it uses less memory and usually trains faster. Hence, if the information is not very complex and the task is a time-dependent prediction, GRU is a good choice. On the other hand, LSTM can work better for long sequence data.
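The parameter difference is easy to confirm: per layer, a GRU has three weight blocks (reset, update, new) while an LSTM has four (input, forget, cell, output). The hypothetical helper count_params below counts them for a single layer with 10 input features and 20 hidden units, sizes chosen only for illustration.

```python
import torch.nn as nn

def count_params(module):
    """Total number of trainable scalars in a module."""
    return sum(p.numel() for p in module.parameters())

gru = nn.GRU(input_size=10, hidden_size=20)
lstm = nn.LSTM(input_size=10, hidden_size=20)

# Each block has 20*10 input weights, 20*20 hidden weights, and two 20-element biases
print(count_params(gru))   # 1920 = 3 * (200 + 400 + 20 + 20)
print(count_params(lstm))  # 2560 = 4 * (200 + 400 + 20 + 20)
```

So the GRU layer here has 75% of the LSTM layer's parameters.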

Recommended Articles

We hope that this EDUCBA information on “PyTorch GRU” was beneficial to you. You can view EDUCBA’s recommended articles for more information.