Updated April 5, 2023

Definition of PyTorch Interpolate

Deep learning frameworks offer many utility functions, and interpolate is one that PyTorch provides. It resizes an input tensor to the size we require, and the algorithm it uses depends on the mode parameter. Interpolate supports temporal (1D, such as vector data), spatial (2D, such as JPG or PNG images), and volumetric (3D, such as point cloud data) inputs. The output size can be given directly or derived from a scale factor.

What is PyTorch Interpolate?

The interpolate function down-samples or up-samples the input to the given size or by the given scale_factor.

It currently supports temporal (1D, such as vector data), spatial (2D, such as JPG, PNG, and other image data), and volumetric (3D, such as point cloud data) inputs. The input layout is minibatch x channels x [optional depth] x [optional height] x width. For temporal data, a 3D tensor is expected: minibatch x channels x width. For spatial data, a 4D tensor is expected: minibatch x channels x height x width. For volumetric data, a 5D tensor is expected: minibatch x channels x depth x height x width. The available algorithms are nearest, linear (3D input only), bilinear and bicubic (4D input only), trilinear (5D input only), and area.
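The layout rules above can be summarized in a small plain-Python sketch. It does not call PyTorch; `LAYOUTS` and `describe_input` are hypothetical names used only for illustration, and the sketch only restates the expected shapes described in the text.

```python
# Hypothetical helper (not part of PyTorch): maps the number of dimensions
# of an input shape to the kind of data and the layout interpolate expects.
LAYOUTS = {
    3: ("temporal", "minibatch x channels x width"),
    4: ("spatial", "minibatch x channels x height x width"),
    5: ("volumetric", "minibatch x channels x depth x height x width"),
}


def describe_input(shape):
    """Return (kind, layout, resized_dims) for an input shape tuple."""
    rank = len(shape)
    if rank not in LAYOUTS:
        raise ValueError(f"expected a 3D, 4D, or 5D input, got {rank}D")
    kind, layout = LAYOUTS[rank]
    # The first two dimensions (minibatch and channels) are never resized;
    # only the remaining dimensions are interpolated.
    return kind, layout, tuple(shape[2:])


print(describe_input((6, 26, 151)))
print(describe_input((2, 4, 8, 8)))
```

A shape such as (8, 8) with no batch or channel dimension is rejected by this sketch, which mirrors the requirement that the batch and channel dimensions are always present.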

How to use PyTorch interpolate?

Now let’s see how we can use the interpolate function in PyTorch as follows.

Syntax:

torch.nn.functional.interpolate(input, size=None, scale_factor=None, mode='nearest', align_corners=None)

Explanation: In the above syntax, the interpolate function takes the following parameters.

- input: The input tensor.

- size: The output spatial size, chosen according to the user's requirement. Specify either size or scale_factor, not both.

- scale_factor: The multiplier for the spatial size. If a tuple is given, its length must match the number of spatial dimensions of the input.

- mode: The algorithm used for sampling, such as 'nearest', 'linear', 'bilinear', 'bicubic', 'trilinear', or 'area'. The default is 'nearest'.

- align_corners: Geometrically, the pixels of the input and output are treated as squares rather than points. If set to True, the input and output tensors are aligned by the center points of their corner pixels, which preserves the values at the corner pixels. If set to False, the input and output tensors are aligned by the corner points of their corner pixels, and the interpolation uses edge value padding for out-of-boundary values, which makes the operation independent of the input size when scale_factor is kept the same. This parameter only has an effect when mode is 'linear', 'bilinear', 'bicubic', or 'trilinear'. The default is False. With align_corners = True, the linearly interpolating modes (linear, bilinear, and trilinear) don't proportionally align the output and input pixels, so the output values can depend on the input size. This was the default behavior for these modes up to version 0.3.1. Since then, the default behavior is align_corners = False. See Upsample for concrete examples of how this affects the results.
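To make the two alignment conventions more concrete, here is a minimal plain-Python sketch of how an output index could be mapped back to a position in the input along one dimension. The function name `source_coordinate` is hypothetical, and the formulas are an assumption based on the description above for the linear-type modes; the sketch does not call PyTorch.

```python
def source_coordinate(dst_index, in_size, out_size, align_corners):
    """Map an output index to a (fractional) input position along one axis.

    Sketch for the linear-type modes only; not PyTorch's actual code.
    """
    if align_corners:
        # Centers of the corner pixels coincide: index 0 maps to 0 and
        # index out_size - 1 maps to in_size - 1.
        if out_size == 1:
            return 0.0
        return dst_index * (in_size - 1) / (out_size - 1)
    # Pixels are treated as squares whose outer corners coincide, so
    # pixel centers are shifted by half a pixel.
    src = (dst_index + 0.5) * in_size / out_size - 0.5
    # Clamping to the valid range has the same effect as edge value padding.
    return min(max(src, 0.0), float(in_size - 1))


for flag in (True, False):
    coords = [source_coordinate(i, 2, 4, flag) for i in range(4)]
    print(f"align_corners={flag}: {coords}")
```

With align_corners=True the positions are spread evenly between the first and last input pixel, while with align_corners=False the positions near the border fall outside the input and are clamped to the edge.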

PyTorch Interpolate Function

For interpolation in PyTorch, an open issue calls for more interpolation features. There is now an nn.functional.grid_sample() function, but at first it does not look like a fit for the following use case.

Specifically, the goal is to take an image, W x H x C, and sample it many times at different random locations. Note that this is different from upsampling, which samples exhaustively and gives no flexibility over the precision of the sampling.

When scale_factor is specified and recompute_scale_factor=True, scale_factor is used to compute the output size, which is then used to infer new scales for the interpolation. The default behavior of recompute_scale_factor changed in version 1.6.0.
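The arithmetic behind this can be sketched in plain Python. The helper names `output_size` and `effective_scale` are hypothetical, and the sketch assumes the output size is the floor of the input size times scale_factor and that the "effective scale" is simply output size divided by input size; it illustrates the idea and is not PyTorch's implementation.

```python
import math


def output_size(in_size, scale_factor):
    """Output length along one dimension (assumed: floor of in_size * scale)."""
    return math.floor(in_size * scale_factor)


def effective_scale(in_size, scale_factor, recompute_scale_factor):
    """Scale actually used for interpolation under the assumptions above."""
    if recompute_scale_factor:
        # The scale is re-derived from the rounded output size.
        return output_size(in_size, scale_factor) / in_size
    # Otherwise the user-supplied scale_factor is used directly.
    return scale_factor


print(output_size(5, 1.5))
print(effective_scale(5, 1.5, recompute_scale_factor=True))
print(effective_scale(5, 1.5, recompute_scale_factor=False))
```

For an input of length 5 and a scale_factor of 1.5, the output length is rounded down, so the recomputed scale differs slightly from the requested one. That difference is why the flag can change the interpolated values.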

The interpolate function requires the input to be in BCHW format (batch x channels x height x width), not CHW. For a single image, either create the tensor with a batch dimension, for example x = torch.rand(1, 2, 10, 10).cuda(), or call unsqueeze(0) on x to add one. Because there is only one image, the batch size is 1, but the function still needs that dimension.

PyTorch Interpolate Linear

Now let’s see what linear interpolation is.

Linear interpolation is a method of calculating intermediate data between known values by conceptually drawing a straight line between two adjacent known values. An interpolated value is any point along that line. You use linear interpolation to, for example, draw graphs or animate between keyframes.
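As a minimal illustration of this idea, the following plain-Python sketch resizes a list of values by linear interpolation. The names `lerp` and `resize_linear` are hypothetical, and the sketch assumes the first and last values are kept exactly (similar in spirit to align_corners=True); it does not call PyTorch.

```python
def lerp(a, b, t):
    """Point on the straight line from a to b; t=0 gives a, t=1 gives b."""
    return a + (b - a) * t


def resize_linear(values, out_size):
    """Resize a 1D list to out_size points, keeping both end values."""
    n = len(values)
    if out_size == 1:
        return [float(values[0])]
    result = []
    for i in range(out_size):
        # Fractional position of output point i within the input.
        pos = i * (n - 1) / (out_size - 1)
        lo = int(pos)              # known value to the left
        hi = min(lo + 1, n - 1)    # known value to the right
        result.append(lerp(values[lo], values[hi], pos - lo))
    return result


print(resize_linear([0.0, 10.0, 20.0], 5))  # [0.0, 5.0, 10.0, 15.0, 20.0]
```

Each new point lies on the straight line between its two nearest known values, which is exactly the definition given above.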

PyTorch interpolate Examples

Now let’s see the different examples of interpolation for better understanding as follows.

Example #1

Code:

import torch

import torch.nn.functional as Fun

X = 2

Y = 4

Z = A = 8

B = torch.randn((X, Y, Z, A))

b_usample = Fun.interpolate(B, [Z*3, A*3], mode='bilinear', align_corners=True)

b_mod = B.clone()

b_mod[:, 0] *= 2000

b_mod_usample = Fun.interpolate(b_mod, [Z*3, A*3], mode='bilinear', align_corners=True)

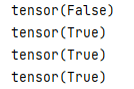

print(torch.isclose(b_usample[:,0], b_mod_usample[:,0]).all())

print(torch.isclose(b_usample[:,1], b_mod_usample[:,1]).all())

print(torch.isclose(b_usample[:,2], b_mod_usample[:,2]).all())

print(torch.isclose(b_usample[:,3], b_mod_usample[:,3]).all())Output:

Explanation: In the above example, we create a random 4D tensor of shape (2, 4, 8, 8) and upsample it to 24 x 24 with bilinear interpolation. We then multiply channel 0 of a copy by 2000 and upsample the copy in the same way. Comparing the two results channel by channel shows that only channel 0 differs, because interpolate processes each channel independently.

Now let’s see another example of the interpolate function as follows.

Example #2

Code:

import torch

import torch.nn.functional as Fun

A = torch.randn(6, 26, 151)

output = Fun.interpolate(A, size=150)

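print(output.shape)

Output:

torch.Size([6, 26, 150])

Explanation: In the above example, we first import the required packages and then create a 3D tensor of shape (6, 26, 151) using the randn() function. After that, we call interpolate() with size=150 and the default 'nearest' mode, which resizes only the last dimension from 151 to 150.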
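To see which input positions end up in the output of Example #2, here is a rough plain-Python sketch of nearest-neighbor index selection. The function name `nearest_source_indices` is hypothetical, and the rule used (floor of the output index times input size divided by output size) is an assumption about the default 'nearest' mode; PyTorch works in floating point, so its result may differ at exact boundaries.

```python
def nearest_source_indices(in_size, out_size):
    """For each output index, pick one input index (assumed floor rule)."""
    return [
        min((i * in_size) // out_size, in_size - 1)
        for i in range(out_size)
    ]


# Upsampling 3 values to 6 repeats every value twice.
print(nearest_source_indices(3, 6))

# Shrinking 151 values to 150, as in Example #2.
idx = nearest_source_indices(151, 150)
print(idx[:3], idx[-1])
```

Under this assumption, shrinking from 151 to 150 simply keeps the first 150 positions and drops the last one, since no values are averaged in nearest mode.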

Conclusion

We hope this article helped you learn more about PyTorch interpolate. We covered the basic idea of the function, its syntax and parameters, and worked examples, and we saw how and when to use it.
