Updated April 5, 2023

Introduction to PyTorch JIT

PyTorch JIT is the just-in-time compiler that PyTorch uses to compile and optimize programs written in PyTorch. Its main features are a lightweight, thread-safe interpreter, support for custom transformations that are easy to write, and automatic differentiation support in addition to inference.

In this article, we will look at what PyTorch JIT is, how to use it, the JIT trace and JIT script modes, the PyTorch JIT modules, and a worked example, before concluding.

What is PyTorch JIT?

The JIT (just-in-time) compiler uses runtime information to optimize TorchScript modules. It can automate optimizations such as layer fusion, quantization, and sparsification.

Script mode takes an eager module and uses torch.jit.script or torch.jit.trace to create an intermediate representation (IR) of the PyTorch model.

Using the PyTorch JIT

The typical workflow is to build a model, move it to the production environment, and then compile and optimize it with the PyTorch JIT compiler, which can make inference faster without much extra effort.
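
As a rough illustration of the compile-and-optimize step, the sketch below scripts a small model and then freezes it with torch.jit.freeze, which inlines parameters and attributes as constants so the compiler has more to work with. TinyNet and its layer sizes are made up for this example, and whether freezing gives any speedup depends on the model and the hardware.

```python
import torch


class TinyNet(torch.nn.Module):  # hypothetical model, for illustration only
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


eager_model = TinyNet().eval()                   # freezing expects eval mode
scripted_model = torch.jit.script(eager_model)   # compile to TorchScript
frozen_model = torch.jit.freeze(scripted_model)  # inline weights as constants

sample = torch.randn(3, 4)
with torch.no_grad():
    # The optimized module should still compute the same result.
    outputs_match = torch.allclose(eager_model(sample), frozen_model(sample))
print(outputs_match)
```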

PyTorch serves research and production through two modes: eager mode and script mode. Eager mode is used for training, prototyping, and experimentation, while script mode is meant for production. Script mode has two components, TorchScript and PyTorch JIT. It removes the dependence on Python and Python's GIL, and it adds portability and performance because PyTorch JIT is an optimizing compiler.
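
The portability point can be sketched as follows: a scripted module is serialized with torch.jit.save and restored with torch.jit.load without needing the original Python class definition at load time. The Doubler module is hypothetical, and an in-memory buffer stands in for a file on disk.

```python
import io

import torch


class Doubler(torch.nn.Module):  # hypothetical module, for illustration only
    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * 2


scripted_model = torch.jit.script(Doubler())

# Serialize the compiled module; a file path would work the same way.
buffer = io.BytesIO()
torch.jit.save(scripted_model, buffer)
buffer.seek(0)

# Loading returns a ScriptModule that no longer depends on the Doubler class.
restored_model = torch.jit.load(buffer)

sample = torch.tensor([1.0, 2.0, 3.0])
restored_output = restored_model(sample)
print(restored_output)
```

The same saved archive can also be loaded from C++ through LibTorch, which is how script mode avoids the Python runtime in production.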

JIT Trace and JIT Script

Script mode is invoked with either torch.jit.script or torch.jit.trace. torch.jit.trace takes a trained eager model and an example input. The tracer runs the supplied module on that input and records the tensor operations that are executed. The recording is then converted into a TorchScript module.
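
A minimal sketch of tracing, assuming a small made-up model (TinyClassifier) in place of a real trained one. The example input only drives the recording; the traced module can then be called with other inputs of a compatible shape.

```python
import torch


class TinyClassifier(torch.nn.Module):  # hypothetical model, for illustration only
    def __init__(self):
        super().__init__()
        self.layer = torch.nn.Linear(8, 3)

    def forward(self, x):
        return torch.softmax(self.layer(x), dim=-1)


eager_model = TinyClassifier().eval()
example_input = torch.randn(1, 8)

# Run the model once on the example input and record the tensor operations.
traced_model = torch.jit.trace(eager_model, example_input)

# The recorded operations are replayed on new data.
new_input = torch.randn(5, 8)
with torch.no_grad():
    outputs_match = torch.allclose(traced_model(new_input), eager_model(new_input))
print(outputs_match)
```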

torch.jit.script lets us write code directly in TorchScript. It is more versatile than tracing but also a little more verbose, and with some adjustments it supports most PyTorch models. Unlike trace mode, we only pass the model instance to torch.jit.script; no sample data is needed.
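
A minimal sketch of scripting, assuming a made-up module (RepeatAdd) whose loop count is an argument. Only the module instance is passed to torch.jit.script, and the loop is compiled as a loop rather than unrolled for one particular input.

```python
import torch


class RepeatAdd(torch.nn.Module):  # hypothetical module, for illustration only
    def forward(self, x: torch.Tensor, times: int) -> torch.Tensor:
        # The loop bound is a runtime value, so it must survive compilation.
        total = torch.zeros_like(x)
        for step in range(times):
            total = total + x
        return total


# No example input is required; the source code itself is compiled.
scripted_model = torch.jit.script(RepeatAdd())

three_times = scripted_model(torch.ones(2), 3)
zero_times = scripted_model(torch.ones(2), 0)
print(three_times, zero_times)
```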

PyTorch JIT Modules

PyTorch JIT offers two kinds of modules, one for each way of entering script mode: the JIT trace module and the JIT script module.

JIT Trace Module

The tracer can reuse existing code when an eager model already exists, and it handles any program built exclusively from torch operations and tensors. Its disadvantages are that it omits Python constructs, data structures, and control flow. It can also produce an unfaithful representation, sometimes without any warning. Always check the IR to verify that the structure of the PyTorch model was captured correctly.
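
A small sketch of this pitfall, assuming a made-up function (sign_aware) with a data-dependent branch. Tracing records only the branch taken for the example input, while scripting keeps both branches. PyTorch may print a TracerWarning during tracing here, but the trace is still produced.

```python
import torch


def sign_aware(x):  # hypothetical function, for illustration only
    # Data-dependent branch: which path runs depends on the input values.
    if x.sum() > 0:
        return x + 10
    return x - 10


positive = torch.ones(3)
negative = -torch.ones(3)

# Tracing with a positive example bakes in the "x + 10" path.
traced_fn = torch.jit.trace(sign_aware, positive)
# Scripting compiles the source, so the branch is preserved.
scripted_fn = torch.jit.script(sign_aware)

traced_out = traced_fn(negative)      # follows the recorded "+ 10" path
scripted_out = scripted_fn(negative)  # follows the correct "- 10" path
print(traced_out, scripted_out)
```

Comparing the traced output against the eager output on inputs that exercise a different path is one practical way to catch this.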

JIT Script Module

Script code looks similar to Python, supports Python constructs, and preserves control flow. It also supports lists and dictionaries. However, its support for constant values is limited, and explicit type annotations are necessary. When no type is specified, the default type is Tensor.
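
The typing rules can be sketched with two made-up functions. weighted_sum annotates its list and dictionary arguments, while double leaves its argument unannotated, so TorchScript assumes it is a Tensor and rejects a plain Python integer.

```python
from typing import Dict, List

import torch


@torch.jit.script
def weighted_sum(values: List[torch.Tensor], weights: Dict[str, float], key: str) -> torch.Tensor:
    # Lists and dictionaries work once their element types are annotated.
    total = torch.zeros_like(values[0])
    for value in values:
        total = total + value
    return total * weights[key]


@torch.jit.script
def double(x):
    # No annotation: x is assumed to be a Tensor.
    return x * 2


summed = weighted_sum([torch.ones(2), torch.ones(2)], {"half": 0.5}, "half")

try:
    double(3)  # an int is not a Tensor, so the call is rejected
    rejected_int = False
except RuntimeError:
    rejected_int = True

print(summed, rejected_int)
```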

Example of PyTorch JIT

Let us consider an example based on BERT (Bidirectional Encoder Representations from Transformers), a model developed by Google AI. We will use the implementation provided by the Hugging Face transformers library.

We will follow the steps given below to implement the example:

- The tokenizer and the BERT model will be initialized, and sample data will be created for inference.

- PyTorch models will be prepared for inference on both GPU and CPU.

- TorchScript models will be prepared for inference on both GPU and CPU.

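from time import perf_counter

import numpy as numpyObj
import torch
from transformers import BertTokenizer, BertModel

def sampleTimerFun(f, *args):
    # Return the wall-clock time of one call to f(*args), in milliseconds.
    initiate = perf_counter()
    f(*args)
    # CUDA kernels run asynchronously, so wait for them before stopping the clock.
    if torch.cuda.is_available():
        torch.cuda.synchronize()
    return 1000 * (perf_counter() - initiate)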

# Load the tokenizer and a BERT model configured for TorchScript export
sampleTokenizerForScript = BertTokenizer.from_pretrained('bert-base-uncased', torchscript=True)
modelOfScript = BertModel.from_pretrained("bert-base-uncased", torchscript=True)

# Tokenize the input text
inputString = "[CLS] Who was Jim Henson ? [SEP] Jim Henson was a puppeteer [SEP]"
tokenized_inputString = sampleTokenizerForScript.tokenize(inputString)

# Mask one of the input tokens
indexOfMaskedToken = 8
tokenized_inputString[indexOfMaskedToken] = '[MASK]'
ListOfIndexedTokens = sampleTokenizerForScript.convert_tokens_to_ids(tokenized_inputString)
IdOfSegments = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1]

# Create dummy input tensors
tensorOfTokens = torch.tensor([ListOfIndexedTokens])
tensorOfSegments = torch.tensor([IdOfSegments])

Example 1.1 – Sample example demonstrating the BERT model on CPU

sampleNativeModel = BertModel.from_pretrained("bert-base-uncased")
numpyObj.mean([sampleTimerFun(sampleNativeModel, tensorOfTokens, tensorOfSegments) for _ in range(100)])

Example 1.2 – Sample example demonstrating the BERT model on GPU

# For GPU inference, the model and its input tensors must be on the same GPU device.
sampleGPUOfNative = sampleNativeModel.cuda()
tensorOfTokens_gpu = tensorOfTokens.cuda()
tensorOfSegments_gpu = tensorOfSegments.cuda()
numpyObj.mean([sampleTimerFun(sampleGPUOfNative, tensorOfTokens_gpu, tensorOfSegments_gpu) for _ in range(100)])

Example 1.3 – CPU implementation of torch.jit.trace

JITTraceModel = torch.jit.trace(modelOfScript, [tensorOfTokens, tensorOfSegments])
numpyObj.mean([sampleTimerFun(JITTraceModel, tensorOfTokens, tensorOfSegments) for _ in range(100)])

Example 1.4 – GPU implementation of torch.jit.trace

JITTraceModel_gpu = torch.jit.trace(modelOfScript.cuda(), [tensorOfTokens.cuda(), tensorOfSegments.cuda()])

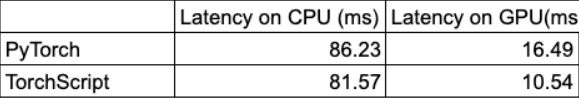

numpyObj.mean([sampleTimerFun(JITTraceModel_gpu,tensorOfTokens.cuda(),tensorOfSegments.cuda()) for _ in range(100)])The execution of various examples above on CPU, GPU for trace mode, and BERT mode in PyTorch and torchscript models give the following inference output –

We can observe that the runtimes are almost the same on CPU, but on GPU the TorchScript model runs faster than the native PyTorch model.

Internally, TorchScript creates an intermediate representation (IR) of the PyTorch model, which the just-in-time (JIT) compiler can optimize at runtime. We can inspect this representation through the code attribute of a traced model, for example JITTraceModel.code.
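
To keep the inspection step quick to try, the sketch below traces a tiny made-up module (Scale) instead of BERT. The code attribute gives a Python-like rendering of what was recorded, and the graph attribute gives the lower-level IR.

```python
import torch


class Scale(torch.nn.Module):  # hypothetical module, for illustration only
    def forward(self, x):
        return torch.tanh(x) * 0.5


traced_module = torch.jit.trace(Scale(), torch.randn(2, 3))

# Python-like source reconstructed from the recorded operations.
python_like_source = traced_module.code
# Lower-level graph IR with explicit operator names such as aten::tanh.
ir_graph = str(traced_module.graph)

print(python_like_source)
print(ir_graph)
```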

Conclusion

We learned about script mode and PyTorch JIT. Script mode lets us take models created for research into a production environment while staying within the PyTorch ecosystem.
