Updated April 5, 2023

Introduction to PyTorch MSELoss()

PyTorch MSELoss() creates a criterion that measures the mean squared error (squared L2 norm) between each element of the input x and the corresponding element of the target y. In this article, we will study what PyTorch MSELoss() is, how to use it, how it is used in deep learning, and some examples, and then conclude with a summary of PyTorch MSELoss().

What is PyTorch MSELoss()?

MSELoss() is a class in PyTorch's torch.nn module with the following signature –

class torch.nn.MSELoss(size_average=None, reduce=None, reduction='mean')

This criterion compares each element of the input x with the corresponding element of the target y using the squared L2 norm. When reduction is set to 'none', the unreduced loss can be described as –

$$\ell(x, y) = L = \{l_1, \dots, l_N\}^\top, \quad l_n = (x_n - y_n)^2$$

where N is the batch size.

When reduction is not 'none' (its default value is 'mean'), the loss is computed as –

$$\ell(x, y) = \begin{cases} \operatorname{mean}(L), & \text{if reduction} = \text{'mean'} \\ \operatorname{sum}(L), & \text{if reduction} = \text{'sum'} \end{cases}$$

In both formulas, x and y are tensors of arbitrary shape, each with a total of n elements.

The mean operation still runs over all n elements and divides their sum by n.

Setting reduction to 'sum' skips the division by n.
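The three reduction modes can be checked directly against the formulas above. The following is a minimal sketch, assuming a small hand-picked input and target (illustrative values, not from the original article), that compares nn.MSELoss with the same computation written out by hand:

```python
import torch
import torch.nn as nn

# Illustrative input x and target y (arbitrary values chosen for this sketch)
x = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
y = torch.tensor([[1.5, 2.0], [2.0, 6.0]])

# reduction='none': element-wise squared errors l_n = (x_n - y_n)^2
unreduced = nn.MSELoss(reduction='none')(x, y)

# reduction='mean' (the default): sum of squared errors divided by n
mean_loss = nn.MSELoss()(x, y)

# reduction='sum': sum of squared errors, no division by n
sum_loss = nn.MSELoss(reduction='sum')(x, y)

# The same quantities computed by hand
manual_unreduced = (x - y) ** 2
n = x.numel()  # total number of elements, here 4

print(torch.allclose(unreduced, manual_unreduced))         # True
print(mean_loss.item())                                    # 1.3125
print(sum_loss.item())                                     # 5.25
print(torch.allclose(mean_loss, manual_unreduced.sum() / n))  # True
```

The squared errors here are 0.25, 0.0, 1.0 and 4.0, so the sum is 5.25 and the mean over n = 4 elements is 1.3125.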

The parameters in the syntax of PyTorch MSELoss() shown above are described in detail here –

- size_average – An optional Boolean that is deprecated (use reduction instead). By default, the losses are averaged over each loss element in the batch; note that for some losses there are multiple elements per sample. If size_average is set to False, the losses are instead summed for each mini-batch. It is ignored when reduce is False. Default: True.

- reduction – An optional string that specifies the reduction to apply to the output: 'none', 'mean', or 'sum'. 'none' applies no reduction, 'mean' divides the sum of the output by the number of elements in the output, and 'sum' sums the output. The size_average and reduce parameters are in the process of being deprecated, and in the meantime, specifying either of them overrides reduction. Default: 'mean'.

- reduce – An optional Boolean that is deprecated (use reduction instead). By default, the losses are averaged or summed over the observations of each mini-batch, depending on size_average. If reduce is False, a loss is returned per batch element instead and size_average is ignored. Default: True.
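Because size_average and reduce are deprecated, the simplest approach is to pass only reduction. The following sketch, assuming a recent PyTorch release (where the legacy flags are translated into a reduction string and a deprecation warning is emitted), shows how the old flags map onto reduction and that they take precedence over it when both are given:

```python
import warnings
import torch.nn as nn

with warnings.catch_warnings():
    # The legacy arguments trigger a deprecation warning; silence it for this demo
    warnings.simplefilter("ignore")
    legacy_default = nn.MSELoss(size_average=True, reduce=True)  # average per batch
    legacy_sum = nn.MSELoss(size_average=False)                  # sum per batch
    legacy_none = nn.MSELoss(reduce=False)                       # size_average ignored
    # When both styles are given, the legacy flag wins over reduction
    overridden = nn.MSELoss(reduce=False, reduction='sum')

print(legacy_default.reduction)  # mean
print(legacy_sum.reduction)      # sum
print(legacy_none.reduction)     # none
print(overridden.reduction)      # none
```

In new code, nn.MSELoss(reduction='none'), nn.MSELoss(reduction='sum') and the default nn.MSELoss() cover the same three behaviors without warnings.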

How to use PyTorch MSELoss()?

The tensor shapes expected by PyTorch MSELoss() are described below –

- Input – (*), where * means any number of dimensions.

- Target – (*), the same shape as the input.

- Output – a scalar if reduction is 'mean' (the default) or 'sum'; if reduction is 'none', (*), the same shape as the input.

Depending on the values of reduce, reduction, and size_average passed to MSELoss(), the loss is computed with one of the formulas described previously.
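A short sketch of these shape rules, assuming an arbitrary illustrative shape of (4, 3, 2) for the input and target:

```python
import torch
import torch.nn as nn

prediction = torch.randn(4, 3, 2)   # Input: any number of dimensions
target = torch.randn(4, 3, 2)       # Target: same shape as the input

shapes = {}
for mode in ("none", "mean", "sum"):
    loss = nn.MSELoss(reduction=mode)(prediction, target)
    # 'none' keeps the input shape; 'mean' and 'sum' return a 0-dim (scalar) tensor
    shapes[mode] = tuple(loss.shape)

print(shapes)  # {'none': (4, 3, 2), 'mean': (), 'sum': ()}
```

If the target's shape differs from the input's, PyTorch broadcasts the two tensors and emits a warning; this usually signals a bug, such as a target of shape (N,) compared against a prediction of shape (N, 1).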

Deep Learning PyTorch MSELoss()

MSELoss() is mostly used as the loss function in regression problems. In deep learning, the loss function plays a key role in whether a neural network performs well or poorly, so it is important to choose an appropriate loss function for the model to work as expected. The loss function measures the error between the predicted and actual values, and training adjusts the model's parameters to reduce that error.
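To show where MSELoss() fits in a regression workflow, here is a minimal sketch that fits a single nn.Linear layer with SGD. The synthetic data (y = 2x + 1 plus small noise), the learning rate, and the number of epochs are assumptions chosen for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # make the random noise reproducible

# Hypothetical synthetic data: y = 2x + 1 plus a little Gaussian noise
x = torch.linspace(-1, 1, 100).unsqueeze(1)   # shape (100, 1)
y = 2 * x + 1 + 0.1 * torch.randn_like(x)     # shape (100, 1), same as the prediction

model = nn.Linear(1, 1)                  # predicts y_hat = w * x + b
criterion = nn.MSELoss()                 # mean squared error, reduction='mean'
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(200):
    optimizer.zero_grad()                # clear gradients from the previous step
    prediction = model(x)
    loss = criterion(prediction, y)      # compare predictions with targets
    loss.backward()                      # compute d(loss)/dw and d(loss)/db
    optimizer.step()                     # update w and b
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, final loss: {losses[-1]:.4f}")
print(f"learned w = {model.weight.item():.3f}, b = {model.bias.item():.3f}")
```

The target y is kept at shape (100, 1), matching the model's output, because MSELoss() expects the target to have the same shape as the input. The learned w and b should end up close to 2 and 1, and the final loss close to the noise variance.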

PyTorch MSELoss() Examples

Let us consider one example to understand the implementation of MSELoss() in PyTorch –

Example #1

Code:

sampleMSELossEducba = neuralNetwork.MSELoss()

sampleInputvalue = torch.randn(3, 5, requires_grad=True)

achievedTargetValue = torch.randn(3, 5)

retrievedOutput = sampleMSELossEducba(sampleInputvalue, achievedTargetValue)

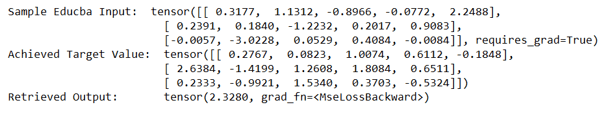

retrievedOutput.backward()After executing the above program, we get the following output as the resultant –
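Since the examples call backward(), the gradient of the loss with respect to the input is stored in the input's .grad attribute. The following sketch, assuming the default reduction='mean' and reusing the variable names from Example #1 with a fixed random seed added, checks that gradient against the closed form 2(x - y)/n:

```python
import torch
import torch.nn as neuralNetwork

torch.manual_seed(0)  # fixed seed so the check is reproducible

sampleInputvalue = torch.randn(3, 5, requires_grad=True)
achievedTargetValue = torch.randn(3, 5)

retrievedOutput = neuralNetwork.MSELoss()(sampleInputvalue, achievedTargetValue)
retrievedOutput.backward()

# For reduction='mean', d(loss)/dx = 2 * (x - y) / n, where n is the number of elements
n = sampleInputvalue.numel()  # 15
expectedGrad = 2 * (sampleInputvalue.detach() - achievedTargetValue) / n

print(torch.allclose(sampleInputvalue.grad, expectedGrad))  # True
```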

Let us consider one more example –

Example #2

Code:

import torch

import torch.nn as neuralNetwork

sampleEducbaInput = torch.randn(3, 5, requires_grad=True)

achievedTargetValue = torch.randn(3, 5)

sampleMseLossCalculated = neuralNetwork.MSELoss()

retrievedOutput = sampleMseLossCalculated(sampleEducbaInput, achievedTargetValue)

retrievedOutput.backward()

print('Sample Educba Input: ', sampleEducbaInput)

print('Achieved Target Value: ', achievedTargetValue)

print('Retrieved Output: ', retrievedOutput)

This gives the following output after execution –

Conclusion

MSELoss(), also known as L2 loss, stands for Mean Squared Error loss and, with its default reduction, computes the average of the squared differences between the predicted and actual values. Because the differences are squared, the value of MSELoss() is never negative, whatever the signs of the predicted and actual values; it is exactly 0.0 only when every prediction matches its target. When training a model, the goal is to drive MSELoss() as close to 0.0 as possible. PyTorch MSELoss() is a common default choice of loss function for regression problems.

Recommended Articles

We hope that this EDUCBA information on “PyTorch MSELoss()” was beneficial to you. You can view EDUCBA’s recommended articles for more information.