Updated April 6, 2023

Definition of PyTorch optimizer

PyTorch provides optimization algorithms, in the torch.optim package, that update a model's parameters during training. With only one or two parameters, we could update each one by hand from its computed gradient. Real-world models have far more parameters than that, and writing update code for each one is impractical. A PyTorch optimizer removes that manual effort and keeps the training code simple. We also choose which parameters the optimizer updates: either a selected subset or all of the model's parameters, as required.
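To make the contrast concrete, here is a minimal sketch, assuming two toy parameters (w and b) and a made-up loss that are not part of the original article. It computes the update by hand for each parameter and then lets torch.optim.SGD apply the same update to both at once.

```python
import torch

# Two hypothetical parameters for illustration only.
w = torch.tensor(1.0, requires_grad=True)
b = torch.tensor(0.0, requires_grad=True)
lr = 0.1

# A toy loss: squared error of the prediction w * 2 + b against the target 5.
loss = (w * 2.0 + b - 5.0) ** 2
loss.backward()

# Manual update: one line per parameter, which does not scale to large models.
with torch.no_grad():
    manual_w = w - lr * w.grad
    manual_b = b - lr * b.grad

# The optimizer applies the same rule to every parameter it was given.
opt = torch.optim.SGD([w, b], lr=lr)
opt.step()

print(w.item(), manual_w.item())
print(b.item(), manual_b.item())
```

Passing only [w] instead of [w, b] would leave b untouched, which is how a subset of parameters can be selected for updating.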

PyTorch optimizer overview

When a neural network is trained, its weights are initialized randomly at first and are then updated in every epoch in a way that increases the overall accuracy of the network.

In every epoch, the output for the training data is compared with the actual data with the help of the loss function to calculate the error, and the weights are then updated accordingly. But how do we know how to update the weights so that the accuracy increases? This is an optimization problem, where the goal is to minimize the loss function and arrive at the best weights. The method used for this optimization is called an optimizer.
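The loop described above can be sketched without any library at all. The data, the starting weight, and the learning rate below are assumptions chosen for illustration; the point is only that each epoch computes the loss, derives a gradient, and moves the weight against it.

```python
# Fit y = w * x by plain gradient descent on the mean squared error.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]   # generated with a true weight of 2.0
w = 0.0                # arbitrary starting weight (normally random)
lr = 0.05              # learning rate
losses = []

for epoch in range(20):
    preds = [w * x for x in xs]
    # Loss: how far the predictions are from the actual values.
    loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)
    # Gradient of the loss with respect to w.
    grad = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / len(xs)
    # Update: step against the gradient to reduce the loss.
    w -= lr * grad
    losses.append(loss)

print(w, losses[0], losses[-1])
```

An optimizer packages exactly this update step so that it does not have to be written by hand for every weight.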

For advanced research topics such as reinforcement learning, sparse coding, or GAN research, it may be desirable to manage the optimization process manually.

This is recommended only for experts who need ultimate flexibility. In that mode, PyTorch Lightning handles only the precision and accelerator logic. The user is left with optimizer.zero_grad(), gradient accumulation, model toggling, and so on.
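As an illustration of what managing these steps by hand involves, the following sketch shows gradient accumulation in plain PyTorch (not Lightning). The model, the random batches, and the accum_steps value are assumptions made up for this example.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(3, 1)
opt = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = torch.nn.MSELoss()
accum_steps = 4  # hypothetical: mini-batches to accumulate per optimizer step

# Toy data: 8 random mini-batches of (inputs, targets).
batches = [(torch.randn(8, 3), torch.randn(8, 1)) for _ in range(8)]
num_updates = 0

opt.zero_grad()
for i, (x, y) in enumerate(batches, start=1):
    # Scale the loss so the accumulated gradient is an average, not a sum.
    loss = loss_fn(model(x), y) / accum_steps
    loss.backward()              # gradients add up in each parameter's .grad
    if i % accum_steps == 0:
        opt.step()               # update once per accum_steps mini-batches
        opt.zero_grad()          # reset before the next accumulation window
        num_updates += 1

print(num_updates)
```

Because backward() adds to the existing gradients, forgetting zero_grad() would silently mix gradients from different windows, which is why the user has to place these calls carefully.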

How to use PyTorch Optimizer?

Now let’s see how we can use PyTorch optimizer as follows.

Many people don't realize that PyTorch can be used for general gradient-based optimization. In other words, you can use PyTorch to find the minimum or maximum of arbitrarily complex optimization objectives. But why would you want to do this? There are at least three good reasons (and there are more).

You are already familiar with PyTorch and don't want to learn another optimization framework.

You want to optimize over the outputs of a PyTorch model, that is, over the predictions of a PyTorch neural net. For example, a first-stage neural net might predict the propensity of a customer to engage in a specific high-value action, and the optimizer is used to determine which action is best given some constraints, such as a marketing budget.

You want to use the advanced optimizers defined in PyTorch, such as Adam.
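A minimal sketch of this general-purpose use follows. The objective, the target values, the learning rate, and the step count are all assumptions picked for illustration; no neural network is involved, only a tensor that Adam adjusts to minimize a function.

```python
import torch

# The quantity being optimized is an ordinary tensor, not a model.
x = torch.tensor([0.0, 0.0], requires_grad=True)
target = torch.tensor([3.0, -1.0])  # hypothetical location of the minimum

opt = torch.optim.Adam([x], lr=0.1)

for _ in range(500):
    opt.zero_grad()
    objective = ((x - target) ** 2).sum()  # any differentiable objective works
    objective.backward()
    opt.step()

print(x.detach())
```

To maximize an objective instead, the usual approach is to minimize its negative.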

Types of PyTorch Optimizers

Now let’s see the different types of PyTorch optimizers as follows.

1. SGD Optimizer

The first type is Stochastic Gradient Descent (SGD). Instead of computing the gradient over the whole training set, it updates the weights using the gradient computed on a small subset (mini-batch) of the data at each step.

2. Adam Optimizer

The Adam optimizer combines adaptive learning rates with momentum. It is one of the most widely used optimizers for training neural networks in practice.

3. Adagrad Optimizer

Adagrad, the Adaptive Gradient Algorithm, is a gradient-based optimization method that adapts the learning rate for each parameter individually, based on the history of that parameter's gradients.

4. Adadelta Optimizer

Adadelta is an extension of the Adagrad algorithm that adapts the learning rates based on a moving window of gradient updates, instead of accumulating all past gradients.

5. AdamW Optimizer

AdamW is a variant of the Adam optimizer that decouples weight decay from the gradient-based update, so that weight decay and learning rate can be tuned more independently. It is also reported to train faster in some cases.
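The difference in how the two optimizers treat weight decay can be seen in a single step. The sketch below is an illustration under assumed values (a single parameter equal to 1.0, lr=0.1, weight_decay=0.1) and sets the gradient to zero so that only the decay term acts.

```python
import torch

def one_step(optimizer_cls):
    """Take one optimizer step on a single parameter with a zero gradient."""
    p = torch.nn.Parameter(torch.tensor([1.0]))
    opt = optimizer_cls([p], lr=0.1, weight_decay=0.1)
    p.grad = torch.zeros_like(p)  # zero gradient isolates the weight decay term
    opt.step()
    return p.item()

adam_value = one_step(torch.optim.Adam)    # decay is folded into the gradient
adamw_value = one_step(torch.optim.AdamW)  # decay is applied to the weight directly

print(adam_value, adamw_value)
```

With Adam, the decay term passes through the adaptive scaling and the parameter moves by roughly a full learning-rate step. With AdamW, the parameter only shrinks by the factor 1 - lr * weight_decay.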

6. Adamax

Adamax is a variant of the Adam optimizer that uses the infinity norm. It is not widely used in practical work, although some studies report that Adamax gives better results than Adam.

7. RMSProp

RMSProp is applied to stochastic gradient descent with mini-batches. It uses adaptive learning rates, scaling each update by a moving average of recent squared gradients.
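All seven optimizers listed above are available as classes in torch.optim and are constructed the same way. The sketch below assumes a small placeholder model, and the hyperparameter values are illustrative rather than recommendations.

```python
import torch

model = torch.nn.Linear(4, 2)  # placeholder model for illustration

optimizers = {
    "SGD": torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9),
    "Adam": torch.optim.Adam(model.parameters(), lr=0.001),
    "Adagrad": torch.optim.Adagrad(model.parameters(), lr=0.01),
    "Adadelta": torch.optim.Adadelta(model.parameters(), lr=1.0),
    "AdamW": torch.optim.AdamW(model.parameters(), lr=0.001, weight_decay=0.01),
    "Adamax": torch.optim.Adamax(model.parameters(), lr=0.002),
    "RMSprop": torch.optim.RMSprop(model.parameters(), lr=0.01),
}

for name, opt in optimizers.items():
    print(name, type(opt).__name__)
```

Because they share the zero_grad() and step() interface, switching between them normally means changing only the constructor line.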

PyTorch Optimizers Code

Now let’s see the code for the optimizer as follows.

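import torch

sample_model = torch.nn.Linear(1, 1)   # toy model
l_f = torch.nn.MSELoss()               # loss function
myopt = torch.optim.SGD                # any optimizer class from torch.optim
opt = myopt(sample_model.parameters(), lr=0.001)
epochs = [[(torch.randn(4, 1), torch.randn(4, 1))] * 3] * 2  # toy data: 2 epochs of 3 batches

for epoch in epochs:
    for btch, tv in epoch:
        opt.zero_grad()                # clear gradients from the previous step
        result = sample_model(btch)    # forward pass
        loss = l_f(result, tv)         # compare the output with the target values
        loss.backward()                # compute gradients
        opt.step()                     # update the parameters

Explanation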

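The code above shows the general pattern for using an optimizer. PyTorch ships the standard optimizers in torch.optim. We pass the model's parameters (sample_model.parameters()) to the optimizer, compute the loss with the loss function (l_f), call backward() to compute the gradients, and call step() to update the parameters. Gradients accumulate across backward() calls, so they should be cleared with zero_grad() before each new backward pass. The step() method is where the update actually happens: it applies the optimizer's update rule to each parameter using its gradient.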
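To show what happens inside step(), here is a minimal sketch of a custom optimizer built on torch.optim.Optimizer. The class name PlainSGD and the toy models are assumptions for illustration; this is not how PyTorch's own SGD is implemented internally, only the same plain update rule written out.

```python
import torch

class PlainSGD(torch.optim.Optimizer):
    """Hypothetical optimizer: plain gradient descent, p = p - lr * grad."""

    def __init__(self, params, lr=0.01):
        super().__init__(params, dict(lr=lr))

    @torch.no_grad()
    def step(self, closure=None):
        loss = None
        if closure is not None:
            with torch.enable_grad():
                loss = closure()
        for group in self.param_groups:
            for p in group["params"]:
                if p.grad is not None:
                    p.add_(p.grad, alpha=-group["lr"])  # in-place update
        return loss

# Compare one step against torch.optim.SGD on identical models and data.
torch.manual_seed(0)
model_a = torch.nn.Linear(2, 1)
model_b = torch.nn.Linear(2, 1)
model_b.load_state_dict(model_a.state_dict())
x, y = torch.randn(5, 2), torch.randn(5, 1)

pairs = (
    (model_a, PlainSGD(model_a.parameters(), lr=0.1)),
    (model_b, torch.optim.SGD(model_b.parameters(), lr=0.1)),
)
for model, opt in pairs:
    opt.zero_grad()
    torch.nn.functional.mse_loss(model(x), y).backward()
    opt.step()

print(model_a.weight, model_b.weight)
```

The built-in optimizers follow the same shape: loop over the parameter groups, read each parameter's gradient, and modify the parameter in place.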

Example

Now let’s see an example of a PyTorch optimizer for better understanding as follows.

Code:

import torch
import torch.nn as tn
import torch.optim as optm

X = 2.15486  # true slope
Y = 4.23645  # true intercept
e = 0.1      # noise scale
Num = 50     # number of data points

Z = torch.randn(Num, 1)
tv = X * Z + Y + torch.randn(Num, 1) * e  # noisy target values

sample_model = tn.Linear(1, 1)
optim = optm.SGD(sample_model.parameters(), lr=0.05)
loss_fn = tn.MSELoss()

nitr = 8  # number of iterations; increase for a closer fit
for _ in range(0, nitr):
    optim.zero_grad()
    predictions = sample_model(Z)
    loss = loss_fn(predictions, tv)
    loss.backward()
    optim.step()
    print("-" * 10)
    print("value of X Learned = {}".format(list(sample_model.parameters())[0].data[0, 0]))
    print("value of Y Learned = {}".format(list(sample_model.parameters())[1].data[0]))

Explanation

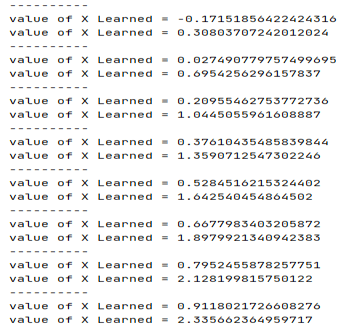

In the above example, we try to implement Pytorch SGD Optimizer as shown. The final result of the above code we illustrated by using the following screenshot as follows.

So in this way, we can implement all the types of PyTorch optimizers as per our requirement.
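As a sketch of how the same training code can be reused with different optimizers, the helper below takes the optimizer class as an argument. The function name fit, the fixed seed, and the step count are assumptions introduced for this illustration; the data follows the same linear relationship as the example above.

```python
import torch

def fit(optimizer_cls, steps=500, **opt_kwargs):
    """Train a 1-D linear model and return (slope, intercept)."""
    torch.manual_seed(0)  # same data and initial weights for every optimizer
    z = torch.randn(50, 1)
    target = 2.15486 * z + 4.23645 + torch.randn(50, 1) * 0.1
    model = torch.nn.Linear(1, 1)
    opt = optimizer_cls(model.parameters(), **opt_kwargs)
    loss_fn = torch.nn.MSELoss()
    for _ in range(steps):
        opt.zero_grad()
        loss_fn(model(z), target).backward()
        opt.step()
    return model.weight.item(), model.bias.item()

sgd_slope, sgd_intercept = fit(torch.optim.SGD, lr=0.05)
adam_slope, adam_intercept = fit(torch.optim.Adam, lr=0.05)

print("SGD :", sgd_slope, sgd_intercept)
print("Adam:", adam_slope, adam_intercept)
```

Only the class and its keyword arguments change between the two calls; the loop with zero_grad(), backward(), and step() stays the same.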

Conclusion

We hope this article helped you learn more about the PyTorch optimizer. We covered the essential idea of the PyTorch optimizer, looked at its types and an example, and learned how and when to use it.
