Updated April 5, 2023

Introduction to PyTorch Permute

PyTorch provides the different types of functionality to the user, in which that permute is one of the functionalities that the PyTorch provides. For example, in deep learning, sometimes we need to rearrange the original tensor as per the specified order and return a new multidimensional tensor; at that time, we can use the permute() function as per our requirement. The main advantage of the permute() function is that the size of a returned tensor is the same as the size of the original tensor, which means it remains the same. In other words, we can say that the permute() function is faster than PyTorch as well as we can also implement deep learning inefficiently as per our requirement.

What is PyTorch Permute?

Translate/Permute operation can be found in the models of Transformer, which overwhelms the NLP, and Vision Transformer, which is a rising star in the field of CV. Particularly in Multi-Head Attention, this operation is expected to change the information aspect course of action.

Clearly, as an exceptionally utilized operation, the CUDA execution of Transpose/Permute operation influences the preparation speed of the real organization. The outcomes show that the profoundly streamlined Permute activity is a lot quicker and more transmission capacity viable than PyTorch, and the transfer speed use is near that of the local Copy activity.

How to use PyTorch permute?

Now let’s see how we can use of permute() function in PyTorch as follows.

In the above point, we already discussed the permute() function. Now let’s see how we can implement the permute() function as follows.

Syntax

torch.permute(specified input, specified dimension)Explanation

In the above syntax, we use of permute() function with two different parameters, as shown.

- Specified input: Specified input means input tensor; we can create the tensor by using the randn() function with different values.

- Specified dimension: The specified dimension means the specified order of tensor dimension and depends on the user requirement.

PyTorch Permute Elements

Now let’s see different elements of permute() function as follows.

Inputs: Contribution for which change attributions are registered. If forward_func accepts a solitary tensor as info, a solitary information tensor ought to be given. In the event that forward_func accepts various tensors as info, a tuple of the information tensors ought to be given. It is expected that for all given info tensors, aspect 0 relates to the number of models (also known as bunch size), and assuming various information tensors are given, the models should be adjusted properly.

Target: Yield lists for which distinction is processed (for order cases, this is typically the objective class). Assuming that the organization returns a scalar worth for each model, no objective record is essential. For general 2D results, targets can be by the same token:

- A solitary number or a tensor containing a solitary whole number, which is applied to all information models

- A rundown of whole numbers or a 1D tensor, with length coordinating with the number of models in inputs (faint 0). Every number is applied as the objective for the comparing model.

For yields with > 2 aspects, targets can be by the same token:

- A solitary tuple, which contains output_dims – 1 component. This objective list is applied to all models.

- There is a rundown of tuples with length equivalent to the number of models in inputs (faint 0), and each tuple contains output_dims – 1 component. Each tuple is applied as the objective for the comparing model.

additional_forward_args: This contention can be given if the forward work requires extra contentions other than the contributions for which attributions ought not to be processed. It should be either an additional conflict of a Tensor or emotional (non-tuple) type or a tuple containing various extra contentions, including tensors or any discretionary python types. These contentions are given to forward_func all together after the contentions in inputs. For example, for a tensor, the principal aspect of the tensor should compare to the number of models. The given contention is utilized for all forward assessments for any remaining kinds.

PyTorch permute method

Different methods are mentioned below:

- Naive Permute Implementation: The capacity of Permute is to change the request for tensor information aspects.

- Static Dispatch of IndexType:As profound learning models get bigger, the number of components associated with the activity might surpass the reach addressed by int32_t. Furthermore, in the facilitated stage, the division activity has various overheads for various whole number sorts.

- Merging Redundant Dimensions:In some uncommon cases, Permute aspects can be converged, with the accompanying guidelines:

- For example, aspects of size 1 can be taken out straightforwardly.

- Likewise, back-to-back aspects can be converted into one aspect.

Using Greater Access Granularity

You might have noticed a format boundary size_t movement size in the piece work, which shows the granularity of the components to be gotten too. The Nvidia Performance Optimization blog Increase Performance with vectorized Memory Access referenced that CUDA Kernel execution can be improved by vectorizing memory tasks to decrease the number of guidelines and further develop data transfer capacity use.

Now let’s see the different examples of the permute() function for better understanding as follows.

Example #1

Code:

import torch

A = torch.randn(3, 5, 2)

A.size()

torch.Size([3, 4, 6])

output = torch.permute(A, (1, 0, 2)).size()

print(output)

Explanation

In the above example, we try to implement the permute() function; here, we created a tensor by using the randn function, and after that, we use the permute() function as shown. The final output of the above implementation we illustrated by using the following screenshot as follows.

Example #2

Now let’s see another example of the permute() function as follows.

Code:

import torch

input_tensor = torch.randn(2,3)

print(input_tensor.size())

print(input_tensor)

input_tensor = input_tensor.permute(1, 0)

print(input_tensor.size())

print(input_tensor)

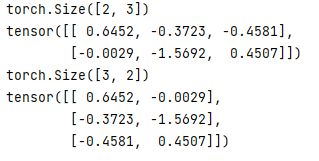

Explanation

The final output of the above implementation we illustrated by using the following screenshot as follows.

Conclusion

We hope from this article you learn more about the PyTorch permute. From the above article, we have taken in the essential idea of the PyTorch permute, and we also see the representation and example of the PyTorch permute. Furthermore, we learned how and when we use the PyTorch permute from this article.

Recommended Articles

We hope that this EDUCBA information on “PyTorch permute” was beneficial to you. You can view EDUCBA’s recommended articles for more information.