Updated April 5, 2023

Introduction to PyTorch rnn

Basically, PyTorch RNN refers to the Recurrent Neural Network, a type of deep learning model designed for sequential data. In a traditional neural network, the inputs and outputs are treated as independent of one another. An RNN is called recurrent because it performs the same computation for every element of a sequence, and each output depends on the computations for the previous elements. In other words, it performs its computations one step after another and carries information forward from step to step, which lets us process data sequentially as per our requirements. The key part of an RNN is its hidden layer, which stores this information between steps.

What is PyTorch RNN?

Deep learning is a vast field that uses artificial neural networks to process data and train a machine learning model. Within deep learning, two learning approaches are used: supervised and unsupervised. Here the focus is on recurrent neural networks (RNNs), which use supervised deep learning and sequential learning to develop a model. This deep learning technique is especially useful when dealing with time-series data, as in this tutorial.

When building any machine learning model, first understand the data you’re analyzing so that you can choose the most suitable model architecture. Here, the goal is to build a basic model that can predict a stock’s value using its daily Open, High, Low, and Close values. Because the stock market can be very volatile, many variables can influence a stock’s value.
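To make the input shape concrete, here is a minimal sketch that assumes a hypothetical tensor of daily Open, High, Low, and Close values. Random numbers stand in for real prices, and no real dataset is implied. Each sample is a window of consecutive days, so input_size is 4 (one feature per price column). The 5-day window, the hidden size of 8, and the nn.Linear output layer are arbitrary illustrative choices.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical data: 30 trading days x 4 features (Open, High, Low, Close).
# Random values stand in for real prices.
prices = torch.rand(30, 4)

window = 5  # days of history per sample (an arbitrary choice)

# Each sample is `window` consecutive days; the target is the next day's Close (column 3).
features = torch.stack([prices[i:i + window] for i in range(len(prices) - window)])
targets = prices[window:, 3]

rnn = nn.RNN(input_size=4, hidden_size=8, batch_first=True)
head = nn.Linear(8, 1)  # maps the last time step's output to one predicted Close value

out, h_n = rnn(features)          # out: (samples, window, 8)
prediction = head(out[:, -1, :])  # use the output at the last time step

print(features.shape)    # torch.Size([25, 5, 4])
print(targets.shape)     # torch.Size([25])
print(prediction.shape)  # torch.Size([25, 1])
```

The model is untrained here. The sketch only shows how multi-feature daily data maps onto the (batch, sequence, feature) layout that nn.RNN expects with batch_first=True.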

From Feedforward Neural Networks to 1-Layer Recurrent Neural Networks (RNN)

An RNN is basically an FNN (feedforward neural network), but with a hidden layer (non-linear output) that passes information on to the next FNN.

Compared with an FNN, an RNN has one additional set of weights and biases. This extra set lets information flow sequentially from one FNN to the next, which is what allows the model to capture dependence over time.
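The extra set of weights and biases is easiest to see by unrolling the recurrence by hand. This sketch applies the update rule documented for nn.RNN with its default tanh nonlinearity, h_t = tanh(W_ih x_t + b_ih + W_hh h_(t-1) + b_hh), and uses the layer’s own weight_ih_l0, weight_hh_l0, bias_ih_l0, and bias_hh_l0 parameters. It then checks the result against the layer’s output. The sizes are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

input_size, hidden_size, seq_len = 3, 4, 6
rnn = nn.RNN(input_size, hidden_size, batch_first=True)

x = torch.randn(1, seq_len, input_size)  # one sequence of 6 time steps

# The layer's parameters: the input-to-hidden pair is the ordinary feedforward part,
# the hidden-to-hidden pair is the extra set of weights and biases.
W_ih, W_hh = rnn.weight_ih_l0, rnn.weight_hh_l0
b_ih, b_hh = rnn.bias_ih_l0, rnn.bias_hh_l0

with torch.no_grad():
    h = torch.zeros(1, hidden_size)  # initial hidden state h_0
    manual_outputs = []
    for t in range(seq_len):
        # h_t = tanh(W_ih x_t + b_ih + W_hh h_{t-1} + b_hh)
        h = torch.tanh(x[:, t] @ W_ih.T + b_ih + h @ W_hh.T + b_hh)
        manual_outputs.append(h)
    manual_out = torch.stack(manual_outputs, dim=1)  # (1, seq_len, hidden_size)

    out, h_n = rnn(x)

print(torch.allclose(out, manual_out, atol=1e-5))  # True
```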

Create RNN Model

Now let’s see how we can create the RNN model as follows.

Recurrent means that the output at the current time step becomes an input to the next time step. Thus, at each element of the sequence, the model considers not only the current input but also what it remembers about the preceding elements.
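You can check this directly with nn.RNN. Running a whole sequence in one call should give the same result as feeding it one time step at a time while passing the hidden state back in. A minimal sketch with arbitrary sizes:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=2, hidden_size=3, batch_first=True)
x = torch.randn(1, 5, 2)  # batch of 1, sequence of 5 time steps, 2 features

with torch.no_grad():
    # Process the whole sequence in one call
    full_out, full_h = rnn(x)

    # Process one time step at a time, feeding the hidden state back in
    h = None  # None means "start from a zero hidden state"
    step_outputs = []
    for t in range(x.size(1)):
        # keep the time dimension so each step has shape (1, 1, 2)
        step_out, h = rnn(x[:, t:t + 1, :], h)
        step_outputs.append(step_out)
    stepwise_out = torch.cat(step_outputs, dim=1)

print(torch.allclose(full_out, stepwise_out, atol=1e-5))  # True
print(torch.allclose(full_h, h, atol=1e-5))               # True
```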

This memory allows the network to learn long-term dependencies in a sequence. As a result, it can take the entire context into account when making a prediction, whether that prediction is the next word in a sentence, a sentiment classification, or the next temperature measurement. An RNN is designed to mimic the way humans process sequences: we consider the whole sentence when forming a response, rather than each word on its own.

At the core of an RNN is a layer made of memory cells. The most popular cell at the moment is the Long Short-Term Memory (LSTM). An LSTM maintains a cell state as well as a carry, which together ensure that the signal (information in the form of a gradient) is not lost as the sequence is processed. So at each time step, the LSTM considers the current word, the carry, and the cell state.
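In PyTorch, this kind of cell is available as nn.LSTM. Unlike nn.RNN, it returns the cell state alongside the hidden state. A minimal sketch with arbitrary sizes:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

lstm = nn.LSTM(input_size=2, hidden_size=3, batch_first=True)
x = torch.randn(4, 7, 2)  # 4 sequences, 7 time steps, 2 features each

# nn.LSTM returns the per-step outputs plus a tuple (final hidden state, final cell state)
out, (h_n, c_n) = lstm(x)

print(out.shape)  # torch.Size([4, 7, 3])  hidden state at every time step
print(h_n.shape)  # torch.Size([1, 4, 3])  final hidden state (num_layers, batch, hidden)
print(c_n.shape)  # torch.Size([1, 4, 3])  final cell state, the LSTM's extra memory
```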

Now let’s see how we can prepare the data as follows.

We’ll begin with the patent abstracts as a list of strings. The main data preparation steps for our model are:

- Remove punctuation and split the strings into lists of individual words

- Convert the individual words into integers

Both of these steps can be done using the Keras Tokenizer class. By default, it removes all punctuation, lowercases the words, and then converts the words into sequences of integers. A Tokenizer is first fit on a list of strings, and it then converts this list into a list of lists of integers.
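Because the rest of this page uses PyTorch, here is a plain-Python stand-in that mimics the two steps above. It is a simplified sketch and not the Keras API. The function names split_words, fit_vocab, and texts_to_sequences are hypothetical. The set of stripped characters is assumed to be Python’s string.punctuation, which is not identical to Keras’s default filter set. Word indices start at 1 with the most frequent words first, which leaves 0 free for padding.

```python
import string
from collections import Counter

# Translation table that deletes ASCII punctuation (an assumption, not the Keras filter list)
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)

def split_words(text):
    """Lowercase, strip punctuation, and split a string into words."""
    return text.lower().translate(_PUNCT_TABLE).split()

def fit_vocab(texts):
    """Map each word to an integer, most frequent words first; 0 is left free for padding."""
    counts = Counter()
    for text in texts:
        counts.update(split_words(text))
    # most_common() sorts by count; words with equal counts keep first-seen order
    return {word: index for index, (word, _) in enumerate(counts.most_common(), start=1)}

def texts_to_sequences(texts, vocab):
    """Convert each string into a list of integers, skipping unknown words."""
    return [[vocab[w] for w in split_words(t) if w in vocab] for t in texts]

texts = ["The cat sat.", "The dog sat!"]
vocab = fit_vocab(texts)
sequences = texts_to_sequences(texts, vocab)
print(vocab)      # {'the': 1, 'sat': 2, 'cat': 3, 'dog': 4}
print(sequences)  # [[1, 3, 2], [1, 4, 2]]
```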

Next, we need to create the features and labels so that the network can be trained with supervised learning. Finally, we can build the recurrent neural network itself.
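One common way to create features and labels from a sequence is a sliding window. Each feature is a fixed number of consecutive tokens, and the label is the token that follows. The sketch below assumes this next-token setup, a hypothetical helper make_features_labels, and a made-up token sequence.

```python
def make_features_labels(sequence, window):
    """Split one integer sequence into (features, label) pairs.

    Each feature is `window` consecutive tokens; its label is the token that follows.
    """
    features, labels = [], []
    for i in range(len(sequence) - window):
        features.append(sequence[i:i + window])
        labels.append(sequence[i + window])
    return features, labels

# Hypothetical token sequence, e.g. one text after conversion to integers
tokens = [4, 12, 7, 9, 3, 15]
X, y = make_features_labels(tokens, window=3)
print(X)  # [[4, 12, 7], [12, 7, 9], [7, 9, 3]]
print(y)  # [9, 3, 15]
```

You can pass the resulting lists to torch.tensor to get integer tensors for training.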

Terminology for PyTorch RNN

Now let’s look at the main terms used when configuring an RNN in PyTorch. Each one corresponds to a constructor parameter of nn.RNN.

- input_size: The number of expected features in the input at each time step.

- hidden_size: The number of features in the hidden state.

- num_layers: The number of stacked recurrent layers (default 1).

- nonlinearity: The non-linearity to use, either 'tanh' (the default) or 'relu'.

- bias: If False, the layer does not use the bias weights b_ih and b_hh (default True).

- batch_first: If True, the input and output tensors are laid out as (batch, seq, feature) instead of (seq, batch, feature) (default False).

- dropout: If non-zero, a Dropout layer with this probability is applied to the outputs of each RNN layer except the last one (default 0).
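Here is a sketch that sets every parameter listed above at once, with arbitrary values, and then inspects the resulting shapes and parameters. Note that batch_first affects the input and output tensors but not the hidden state h_n, which is always (num_layers, batch, hidden_size).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(
    input_size=3,         # 3 features per time step
    hidden_size=5,        # 5 features in the hidden state
    num_layers=2,         # two stacked recurrent layers
    nonlinearity="relu",  # instead of the default "tanh"
    bias=False,           # no b_ih / b_hh bias terms
    batch_first=True,     # input/output tensors are (batch, seq, feature)
    dropout=0.2,          # dropout between layer 1 and layer 2 (training mode only)
)

x = torch.randn(4, 7, 3)  # batch of 4, sequences of length 7
out, h_n = rnn(x)

print(out.shape)  # torch.Size([4, 7, 5])  last layer's output at every step
print(h_n.shape)  # torch.Size([2, 4, 5])  final hidden state of each layer
print([name for name, _ in rnn.named_parameters()])
# ['weight_ih_l0', 'weight_hh_l0', 'weight_ih_l1', 'weight_hh_l1']
```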

Types of PyTorch RNN

Now let’s see the different types of RNN as follows.

- One to one: This type is used when both the input and the output have a fixed size. It is also called a plain neural network.

- One to many: This is the second type of RNN. It takes a single input and produces a sequence of data as its output.

- Many to one: This type is used when we have multiple inputs (a sequence) and require a single output.

- Many to many: This type is used when we have multiple inputs and require multiple outputs.
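With nn.RNN, the many-to-one and many-to-many patterns differ mainly in which part of the output you keep. The sketch below uses arbitrary sizes and adds hypothetical nn.Linear output layers on top of the RNN. Many to one keeps only the last time step, while many to many applies the same output layer at every step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=1, hidden_size=8, batch_first=True)
x = torch.randn(2, 10, 1)  # 2 sequences of 10 time steps
out, h_n = rnn(x)          # out: (2, 10, 8)

# Many to one: keep only the last time step, e.g. one set of class scores per sequence
many_to_one = nn.Linear(8, 3)
class_scores = many_to_one(out[:, -1, :])
print(class_scores.shape)  # torch.Size([2, 3])

# Many to many: nn.Linear acts on the last dimension, so it is applied at every time step
many_to_many = nn.Linear(8, 1)
per_step = many_to_many(out)
print(per_step.shape)  # torch.Size([2, 10, 1])
```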

PyTorch RNN examples

Now let’s look at some RNN examples to make these ideas concrete.

Code:

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optm

from torch.utils.data import Dataset, DataLoader

rnndata = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20,21,22,33,34,35,65,45])

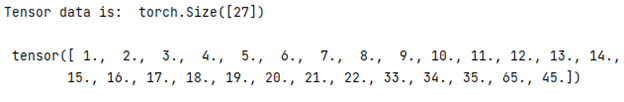

print("Tensor data is: ", rnndata.shape, "\n\n", rnndata)

Explanation

In the above example, we import the required packages, create a 1-D tensor of data, and print its shape and values. The output of the program is shown in the screenshot above.

Now let’s add a single-layer RNN that processes this kind of data.

Code:

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optm

from torch.utils.data import Dataset, DataLoader

rnndata = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20])

print("Tensor data is: ", rnndata.shape, "\n\n", rnndata)

i_size = 1

s_length = 5

h_size = 2

NUM_LAYERS = 1

BATCH_SIZE = 4

rnn = nn.RNN(input_size=i_size, hidden_size=h_size, num_layers = 1, batch_first=True)

inputs = rnndata.view(BATCH_SIZE, s_length, i_size)

out, h_n = rnn(inputs)

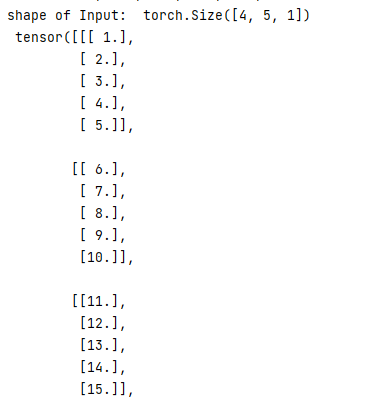

print('shape of Input: ', inputs.shape, '\n', inputs)

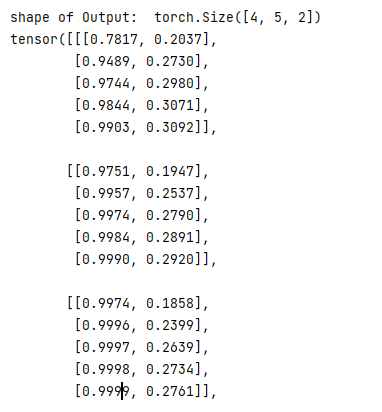

print('\n shape of Output: ', out.shape, '\n', out)

print('\nshape of Hidden: ', h_n.shape, '\n', h_n)

Explanation

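The output of the above program is shown in the screenshots above. The input has shape (4, 5, 1): four sequences of five time steps with one feature each. The output out has shape (4, 5, 2), which is one 2-feature hidden state per time step. The hidden state h_n has shape (1, 4, 2), which is the final hidden state of each sequence for the single layer.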
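To see how out and h_n relate, the sketch below rebuilds the same setup. It uses torch.arange to produce the values 1 to 20, which matches the example’s data. For a single-layer, one-directional RNN, the final hidden state h_n should equal the output at the last time step. The exact numbers in out depend on the random initial weights, so only shapes and this relationship are checked.

```python
import torch
import torch.nn as nn

rnndata = torch.arange(1, 21, dtype=torch.float32)  # same values as the example: 1..20
BATCH_SIZE, s_length, i_size, h_size = 4, 5, 1, 2

rnn = nn.RNN(input_size=i_size, hidden_size=h_size, num_layers=1, batch_first=True)
inputs = rnndata.view(BATCH_SIZE, s_length, i_size)
out, h_n = rnn(inputs)

print(inputs[0].flatten())  # tensor([1., 2., 3., 4., 5.])  the first sequence
print(out.shape)            # torch.Size([4, 5, 2])  (batch, seq, hidden) since batch_first=True
print(h_n.shape)            # torch.Size([1, 4, 2])  (num_layers, batch, hidden)

# For one layer and one direction, h_n[0] is the output at the last time step
print(torch.allclose(out[:, -1, :], h_n[0]))  # True
```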

Conclusion

We hope this article has helped you learn more about PyTorch RNN. We covered the essential idea behind PyTorch RNN, its terminology and types, and examples of how to use it. We also looked at how and when to use PyTorch RNN.

Recommended Articles

We hope that this EDUCBA information on “PyTorch rnn” was beneficial to you. You can view EDUCBA’s recommended articles for more information.