Updated April 4, 2023

Introduction to PyTorch Variable

A PyTorch variable is a wrapper around a tensor that represents a node in a computational graph. If sample is a variable, sample.data gives its underlying tensor value, and sample.grad holds the gradient of some scalar quantity (typically a loss) with respect to sample once backward() has been called.
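As a minimal sketch of the .data and .grad attributes described above, assuming a recent PyTorch release in which Variable is still importable but returns an ordinary tensor (the input values are illustrative):

```python
import torch
from torch.autograd import Variable  # deprecated, but still importable

# Wrap an illustrative tensor; requires_grad=True asks autograd to track it
sample = Variable(torch.tensor([1.0, 2.0, 3.0]), requires_grad=True)

print(sample.data)  # the underlying tensor values 1, 2, 3
print(sample.grad)  # None: no gradient has been computed yet

# A scalar quantity computed from the sample
out = (sample ** 2).sum()
out.backward()

# d(out)/d(sample) = 2 * sample
print(sample.grad)
```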

In this article, we look at what a PyTorch variable is, how to create and use one, the functions it offers, and a worked example, and then conclude.

What is PyTorch Variable?

PyTorch variables represent nodes in a computational graph and are wrappers around tensors. Tensors and variables share almost the same API in PyTorch: any operation you can perform on a tensor can also be performed on a variable. The difference is that a variable lets autograd compute gradients automatically.
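A small sketch of that shared API, assuming a recent PyTorch release; the tensor values are illustrative. The same arithmetic runs on a plain tensor and on a variable, but only the variable's result records a grad_fn node that autograd can differentiate:

```python
import torch
from torch.autograd import Variable

plain = torch.tensor([1.0, 2.0])
wrapped = Variable(torch.tensor([1.0, 2.0]), requires_grad=True)

# The same tensor operations work on both objects
plain_result = (plain * 3 + 1).sum()
wrapped_result = (wrapped * 3 + 1).sum()
print(plain_result.item(), wrapped_result.item())  # 11.0 11.0

# Only the tracked result remembers how it was computed
print(plain_result.grad_fn)    # None
print(wrapped_result.grad_fn)  # a backward node, not None
```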

Consider, for example, a fully connected ReLU network with one hidden layer and no biases, trained to predict y from x by minimizing the squared Euclidean distance between its predictions and the targets. An implementation of this model computes the forward pass using operations on PyTorch variables and uses PyTorch autograd to compute the gradients.
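The network described above can be sketched as follows, in the style of the classic PyTorch autograd examples. The layer sizes, learning rate, random data, and step count are illustrative assumptions, and the sketch uses tensors with requires_grad=True, which is what a Variable wraps in current releases:

```python
import torch

torch.manual_seed(0)

# Illustrative sizes: N samples, D_in inputs, H hidden units, D_out outputs
N, D_in, H, D_out = 64, 100, 50, 10
x = torch.randn(N, D_in)
y = torch.randn(N, D_out)

# Weights only (no biases); autograd tracks them
w1 = torch.randn(D_in, H, requires_grad=True)
w2 = torch.randn(H, D_out, requires_grad=True)

learning_rate = 1e-6
losses = []
for step in range(500):
    # Forward pass: linear -> ReLU (clamp at 0) -> linear
    y_pred = x.mm(w1).clamp(min=0).mm(w2)

    # Squared Euclidean distance between predictions and targets
    loss = (y_pred - y).pow(2).sum()
    losses.append(loss.item())

    # Backward pass: autograd fills w1.grad and w2.grad
    loss.backward()

    # Gradient-descent update, kept out of the graph
    with torch.no_grad():
        w1 -= learning_rate * w1.grad
        w2 -= learning_rate * w2.grad
        w1.grad.zero_()
        w2.grad.zero_()

print(losses[0], losses[-1])
```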

Create PyTorch Variable

To create a PyTorch variable, follow these steps:

- Import the PyTorch library at the start of the program with the statement: import torch

- Check the installed version of PyTorch with the statement: print(torch.__version__), which prints the version of PyTorch on your system.

- Import the Variable class from PyTorch's autograd package with the statement: from torch.autograd import Variable

- Create a random sample tensor with the statement:

sampleEducbaTensor = (torch.rand(2, 3, 4) * 100).int()

Here, we created a sample tensor of dimensions 2 × 3 × 4 filled with random values in [0, 1), multiplied it by 100, and cast it to an integer tensor.

- Print the tensor with the statement: print(sampleEducbaTensor)

- To print the type of the tensor, use the statement: print(type(sampleEducbaTensor)), which prints <class 'torch.Tensor'>. To confirm that it is an integer tensor, use sampleEducbaTensor.type() instead, which returns torch.IntTensor.

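- Now create a variable from a random tensor with the statement: sampleEducbaVariable = Variable(torch.rand(3), requires_grad=True). The tensor is created with torch.rand (torch.random is a module, not a function), and the .int() cast is dropped because only floating-point tensors can require gradients. The output looks as shown below: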

- requires_grad is a Boolean parameter; when it is set to True, autograd records every operation performed on the variable so that its gradient can be computed later.

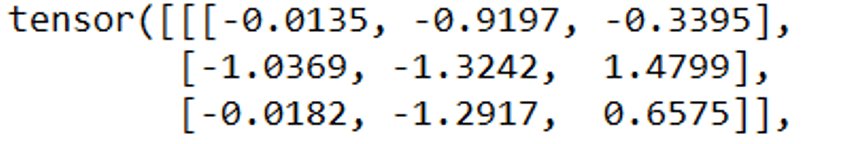

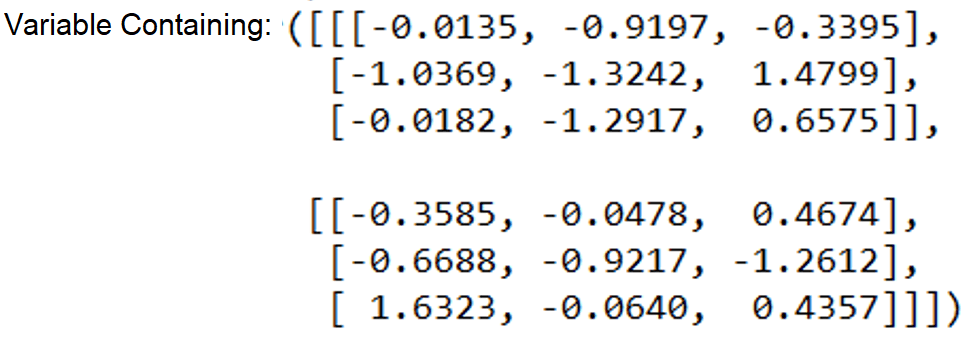

- You can also print the value of this variable with the statement: print(sampleEducbaVariable). In older PyTorch releases (before 0.4), the output looked almost the same as for a tensor, except that it began with the text Variable containing: followed by the tensor values. In current releases, it prints like an ordinary tensor with requires_grad=True appended.

- Another difference shows up when you print the type of the variable with the statement: print(type(sampleEducbaVariable)). Older releases reported the Variable class, but since PyTorch 0.4, Variable(...) returns an ordinary tensor, so the output is <class 'torch.Tensor'>.
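The steps above can be collected into one short script. This is a sketch assuming PyTorch 0.4 or later; the printed version and random values depend on your installation:

```python
import torch
from torch.autograd import Variable

print(torch.__version__)  # installed PyTorch version

# 2 x 3 x 4 tensor of random integers in [0, 99]
sampleEducbaTensor = (torch.rand(2, 3, 4) * 100).int()
print(sampleEducbaTensor)
print(type(sampleEducbaTensor))   # <class 'torch.Tensor'>
print(sampleEducbaTensor.type())  # torch.IntTensor

# Only floating-point tensors can require gradients, so no .int() here
sampleEducbaVariable = Variable(torch.rand(3), requires_grad=True)
print(sampleEducbaVariable)
print(type(sampleEducbaVariable))  # <class 'torch.Tensor'> in PyTorch 0.4+
```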

Using PyTorch variable

PyTorch variables were traditionally used to represent the nodes of the computational graph. They also drive the backward pass of autograd.

Besides supporting the full tensor API, PyTorch variables provide a backward() method that performs backpropagation. For example, when backpropagating a loss through a model during training, the model's trainable parameters are variables, and another variable, say loss, stores the value computed by the loss function.

We can then call loss.backward(), which computes the gradients of the loss with respect to all the trainable parameters. PyTorch stores each resulting gradient in the .grad attribute of the corresponding parameter variable.
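A minimal sketch of that workflow, assuming a hypothetical one-feature linear model y = w * x + b with a mean-squared-error loss; the data and starting parameter values are illustrative:

```python
import torch
from torch.autograd import Variable

# Illustrative data for a hypothetical linear model y = w * x + b
x = torch.tensor([1.0, 2.0, 3.0])
y = torch.tensor([2.0, 4.0, 6.0])

# Trainable parameters wrapped as variables that require gradients
w = Variable(torch.tensor(0.5), requires_grad=True)
b = Variable(torch.tensor(0.0), requires_grad=True)

# Forward pass and mean-squared-error loss
prediction = w * x + b
loss = ((prediction - y) ** 2).mean()
print(loss.item())  # 10.5

# Backpropagation stores each gradient in the parameter's .grad
loss.backward()
# Analytically: d(loss)/dw = -14 and d(loss)/db = -6
print(w.grad, b.grad)
```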

We can use a PyTorch variable as a wrapper around a tensor. However, the Variable API is now deprecated: since PyTorch 0.4, autograd supports tensors directly when their requires_grad attribute is set to True, and Variable(tensor) simply returns a tensor.
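To illustrate the deprecation, here is a sketch (assuming PyTorch 0.4 or later, with illustrative values) comparing the legacy Variable style with a tensor created with requires_grad=True; both produce the same gradients:

```python
import torch
from torch.autograd import Variable

# Legacy style: wrap a tensor in a Variable
legacy = Variable(torch.tensor([2.0, 3.0]), requires_grad=True)

# Current style: the tensor itself tracks gradients
modern = torch.tensor([2.0, 3.0], requires_grad=True)

# Variable(...) already returns an ordinary tensor
print(isinstance(legacy, torch.Tensor))  # True

# Both take part in autograd the same way: d/dx sum(x**3) = 3 * x**2
(legacy ** 3).sum().backward()
(modern ** 3).sum().backward()
print(legacy.grad, modern.grad)
```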

PyTorch Variable Functions

With autograd, all operations are performed on tensors, and backpropagation starts from the variable on which backward() is called. In deep learning, this variable usually holds the value of the cost (loss) function.
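A short sketch of why the starting variable is usually a scalar cost, assuming a recent PyTorch release and illustrative weight values: backward() needs no arguments on a scalar, while a non-scalar output requires an explicit upstream gradient:

```python
import torch

# Illustrative parameter values
weights = torch.tensor([1.0, -2.0, 3.0], requires_grad=True)

# Scalar cost: backward() can start here with no arguments
cost = (weights ** 2).sum()
cost.backward()
scalar_grad = weights.grad.clone()  # equals 2 * weights

# Calling backward() with no arguments on a non-scalar output fails
try:
    (weights ** 2).backward()
except RuntimeError as err:
    print("RuntimeError:", err)

# A non-scalar output needs an explicit upstream gradient; all ones
# reproduces the gradient of the summed cost
weights.grad = None  # clear the gradient stored by the first pass
squares = weights ** 2
squares.backward(torch.ones_like(squares))
vector_grad = weights.grad.clone()
print(torch.equal(scalar_grad, vector_grad))  # True
```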

requires_grad is the parameter we pass to the Variable function to mark a variable as requiring gradients. It defaults to False when a new variable is created, and it tells us whether the variable is trainable. If even a single input to an operation requires a gradient, the output of that operation, and every subgraph computed from it, also requires a gradient; if no input requires one, the output does not either. When fine-tuning a pretrained model, we can set requires_grad to False for the pretrained layers we want to freeze and keep it True for the parts of the model we want to retrain.
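A sketch of both behaviors, assuming a recent PyTorch release. The small three-layer model and its sizes are hypothetical stand-ins for a pretrained network, with the first layer frozen and the last layer left trainable:

```python
import torch
import torch.nn as nn

# requires_grad defaults to False
a = torch.randn(3)
b = torch.randn(3, requires_grad=True)
print(a.requires_grad)        # False
print((a * 2).requires_grad)  # False: no input needs a gradient
print((a + b).requires_grad)  # True: one input is enough

# Hypothetical fine-tuning setup: freeze the first layer only
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
for param in model[0].parameters():
    param.requires_grad = False

out = model(torch.randn(5, 4)).sum()
out.backward()
print(model[0].weight.grad)              # None: frozen layer
print(model[2].weight.grad is not None)  # True: trainable layer
```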

PyTorch Variable Example

Let us consider one example –

Code:

# import all the necessary libraries of PyTorch and variable.

import torch

from torch.autograd import Variable

# wrapping up the value of tensors inside the variable and storing them

sampleEducbaVar1 = Variable(torch.tensor([5., 4.]), requires_grad=True)

sampleEducbaVar2 = Variable(torch.tensor([6., 8.]))

# Creating a new function that is polynomial in nature accepting two sample inputs

sample1 = ((sampleEducbaVar1 **2)+(5*sampleEducbaVar2))

sample2 = sample1.mean()

# dsample1/dsampleEducbaVar1 =2*sampleEducbaVar1 =10,8

# dsample1/dsampleEducbaVar2 =5

# gradient computation

sample2.backward()

# displaying the result by printing the values

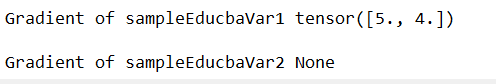

print('Gradient of sampleEducbaVar1', sampleEducbaVar1.grad)

print('Gradient of sampleEducbaVar2', sampleEducbaVar2.grad)Output:

Conclusion

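A PyTorch variable represents a node in a computational graph and acts as a wrapper around a tensor. Because the Variable API is deprecated, tensors created with requires_grad=True now provide the same autograd behavior.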
