Updated March 23, 2023

Difference Between R and R Squared

R vs R Squared is a comparative topic in which R represents a programming language and R squared signifies a statistical value used to evaluate the prediction accuracy of a machine learning model. R is an open-source statistical programming language that is widely used by statisticians and data scientists for data analytics. R squared is a standard statistical concept, available in the R language, that is associated with linear regression models. R is a scripting language that supports multiple packages for machine learning model development, whereas R squared is a calculated value, also known as the coefficient of determination, for regression algorithms. In statistical output, R also denotes the correlation coefficient, and R squared is its square.

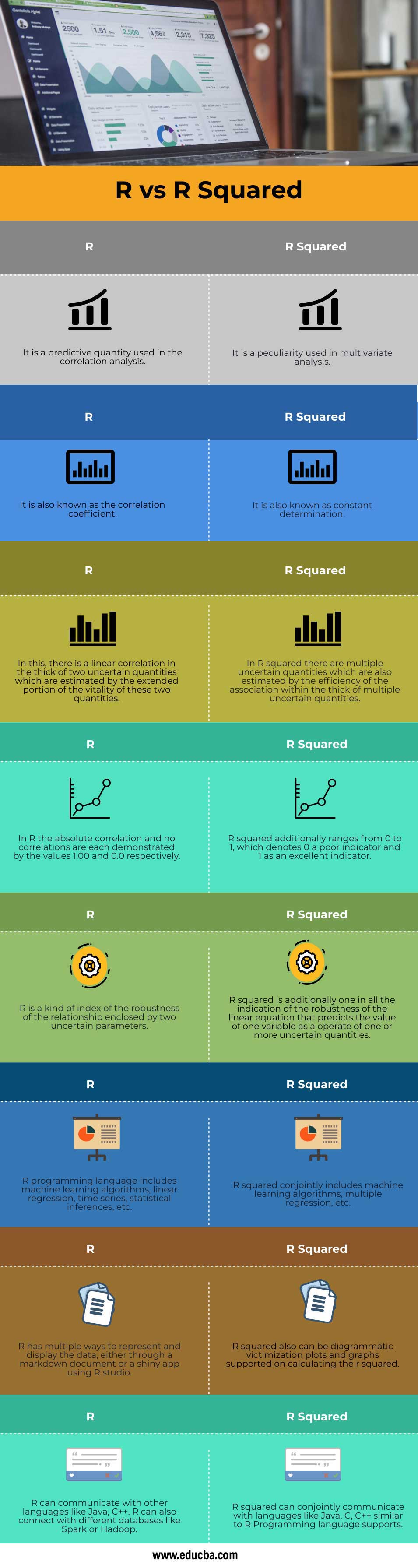

Head-to-Head Comparison Between R and R Squared (Infographics)

Below are the top 8 differences between R and R Squared:

Key Differences Between R and R Squared

Let us look at the major differences between R and R squared.

- Definition: R is a programming language that supports statistical computation on data sets and displays these data sets graphically for easy analysis. R squared is a statistic that can be computed with this software to support better data analysis. R squared is nothing but R multiplied by itself, i.e., R × R. In other words, the coefficient of determination is the square of the correlation coefficient.

- Coefficients: R is a value reported in the regression output summary table, where it is called the coefficient of correlation. R squared is the value reported in multiple regression output, where it is called the coefficient of determination.

- Understanding the concept: R squared is easy to explain in terms of regression (the share of the variation in y that the model explains), but R is difficult to explain that way.

- Range of values: R ranges from -1 to 1. R squared ranges from 0 to 1; it can never be negative because it is a squared value.

- Number of variables: R is easy to interpret for simple linear regression, because it involves only two variables, x and y. R squared can be interpreted for both simple linear regression and multiple regression, whereas R is difficult to interpret for multiple regression.

- Limitations: R squared cannot determine whether the coefficient estimates and predictions are biased, and it cannot indicate whether the regression model provides a good fit for the given data. As for R, it supports very large data sets, including big data.

- R and R squared values: R squared, the coefficient of determination, shows the proportion of the variation in y that is explained by all the x variables together. It ranges from 0 to 1, where 1 is an excellent value and 0 a poor one. R, the coefficient of correlation, measures the degree of relationship between only two variables, say x and y. It ranges from -1 to 1, where 1 indicates the two variables move in unison and -1 indicates they move in perfect opposition.
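The sign and range differences described above can be checked numerically. The following is a minimal Python sketch (Python is used only for illustration; inside R, the built-in `cor(x, y)` function returns the same Pearson correlation). The helper name `pearson_r` and the small data sets are hypothetical, made up for this example. It computes R for a positively related, a negatively related, and a noisier pair of variables, then squares each value to get R squared.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient r between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-deviation of x and y, and the spread of each around its mean
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]
y_up = [2, 4, 6, 8, 10]     # moves in unison with x
y_down = [10, 8, 6, 4, 2]   # moves in perfect opposition to x
y_noisy = [2, 1, 4, 3, 5]   # related to x, but not perfectly

for label, y in [("up", y_up), ("down", y_down), ("noisy", y_noisy)]:
    r = pearson_r(x, y)
    print(label, round(r, 6), round(r * r, 6))
# up 1.0 1.0
# down -1.0 1.0
# noisy 0.8 0.64
```

Both perfectly related pairs give an R squared of 1, even though R is +1 in one case and -1 in the other: squaring discards the direction of the relationship and keeps only its strength.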

R vs R Squared Comparison Table

Let’s discuss the top comparisons between R and R Squared.

There are plenty of tools available to perform data analysis, and data science is one of the evolving technologies used to run and develop businesses. Python and SAS are other tools for statistical data analysis; however, SAS is not free and Python has fewer options for communicating results, so R is a good tool for both implementation and data analysis.

| Sr.No | R | R Squared |
|---|---|---|
| 1. | It is a statistic used in correlation analysis. | It is a measure used in regression and multivariate analysis. |
| 2. | It is also known as the correlation coefficient. | It is also known as the coefficient of determination. |
| 3. | It measures the strength of the linear correlation between two variables. | It can involve multiple variables and measures how strongly they are associated, that is, how well the predictors together explain the response. |
| 4. | A perfect correlation and no correlation are indicated by the values 1.00 and 0.00, respectively (-1.00 indicates a perfect negative correlation). | R squared also ranges from 0 to 1, where 0 is a poor indicator and 1 an excellent one. |
| 5. | R is an index of the strength of the relationship between two variables. | R squared is also an indicator of the strength of a linear equation that predicts the value of one variable as a function of one or more other variables. |
| 6. | The R programming language includes machine learning algorithms, linear regression, time series, statistical inference, etc. | R squared is used to evaluate machine learning models such as multiple regression. |
| 7. | R offers multiple ways to present and display data, for example through an R Markdown document or a Shiny app built in RStudio. | R squared can also be visualized using plots and graphs based on the calculated value. |
| 8. | R can communicate with other languages such as Java and C++, and it can connect to big-data platforms such as Spark and Hadoop. | R squared can also be computed in languages such as Java, C, and C++, just as in the R programming language. |
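The idea that R squared summarizes how well several predictors together explain y can be illustrated with a small multiple regression. This is a minimal Python sketch, assuming ordinary least squares with an intercept; the data and the helper names `solve`, `fit_ols`, and `r_squared` are hypothetical and chosen only for illustration. In R itself, `summary(lm(y ~ x1 + x2))$r.squared` reports the same statistic.

```python
import math

def solve(a, b):
    """Solve the square system a * beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = m[r][n] - sum(m[r][c] * beta[c] for c in range(r + 1, n))
        beta[r] = acc / m[r][r]
    return beta

def fit_ols(rows, y):
    """Least-squares coefficients [b0, b1, ...] for y ~ b0 + b1*x1 + b2*x2 + ..."""
    X = [[1.0] + list(r) for r in rows]  # prepend an intercept column
    k = len(X[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)

def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

# Made-up data: two predictors (x1, x2) and one response y
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 6), (6, 5)]
y = [5.1, 5.9, 10.2, 10.8, 15.1, 16.0]

beta = fit_ols(rows, y)
y_hat = [beta[0] + sum(b * x for b, x in zip(beta[1:], r)) for r in rows]
r2 = r_squared(y, y_hat)
multiple_r = math.sqrt(r2)
print(f"R^2 = {r2:.4f}, multiple R = {multiple_r:.4f}")
```

The square root printed as multiple R is the correlation between the observed y values and the fitted values. With more than one predictor it no longer describes the relationship between y and any single x, which is why R squared is the easier quantity to interpret for multiple regression.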

Conclusion

As we saw in this article, R squared is the square of R, i.e., the square of the correlation between two variables (x and y). In other words, R is the coefficient of correlation for the linear relationship between only two variables, whereas R squared can measure the strength of the relationship among multiple variables, which R alone cannot express. So we can conclude that R squared is the better measure for evaluating regression models, because it extends to multiple variables. For a fitted regression model, it is calculated as:

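$$R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}}$$

where $SS_{\text{res}} = \sum_i (y_i - \hat{y}_i)^2$ is the residual sum of squares (the squared errors of the model's predictions) and $SS_{\text{tot}} = \sum_i (y_i - \bar{y})^2$ is the total sum of squares (the squared deviations of y from its mean).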
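For simple linear regression, computing R squared as 1 minus the residual sum of squares divided by the total sum of squares gives exactly the square of the correlation coefficient between x and y. The following minimal Python sketch, using a made-up five-point data set and hypothetical helper names (`simple_fit`, `r_squared`, `pearson_r`), fits a least-squares line, computes R squared from the two sums of squares, and compares it with R × R.

```python
import math

def simple_fit(xs, ys):
    """Least-squares intercept a and slope b for the line y = a + b*x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b

def r_squared(xs, ys):
    """R squared = 1 - SS_res / SS_tot for the fitted simple regression line."""
    a, b = simple_fit(xs, ys)
    my = sum(ys) / len(ys)
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))  # residual sum of squares
    ss_tot = sum((y - my) ** 2 for y in ys)                         # total sum of squares
    return 1 - ss_res / ss_tot

def pearson_r(xs, ys):
    """Pearson correlation coefficient between xs and ys."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

xs = [1, 2, 3, 4, 5]
ys = [2, 1, 4, 3, 5]
print(round(r_squared(xs, ys), 6), round(pearson_r(xs, ys) ** 2, 6))  # 0.64 0.64
```

This equality holds only for a straight-line fit with an intercept and a single predictor; with several predictors, R squared is instead the square of the multiple correlation between y and the fitted values.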
