Updated June 9, 2023

Introduction to Recurrent Neural Networks (RNN)

The following article provides an outline of Recurrent Neural Networks (RNN). A recurrent neural network is a type of Artificial Neural Network (ANN) used in application areas such as Natural Language Processing (NLP) and Speech Recognition. An RNN model is designed to recognize the sequential characteristics of data and then use those patterns to predict what comes next.

Working of Recurrent Neural Networks

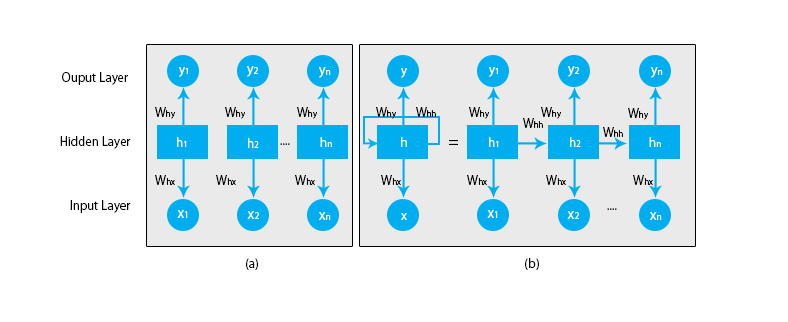

In traditional neural networks, all the inputs and outputs are independent of each other, as shown in the diagram below:

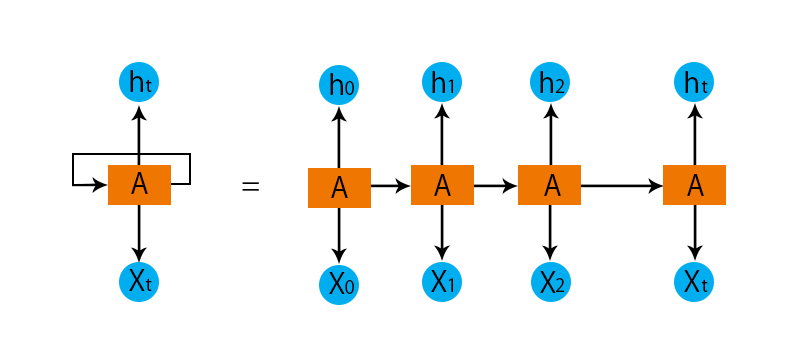

In recurrent neural networks, however, the output from the previous step is fed as input to the current step. For instance, to predict the next letter of a word or the next word of a sentence, the network needs to remember the previous letters or words and store them in some form of memory.

The hidden layer is the part of the network that remembers information about the sequence. A simple real-life analogy for an RNN is watching a movie: in many scenes, we can predict what will happen next. But what if someone has just joined and is asked to predict what happens next? They will have no clue, because they have not seen the earlier events of the movie and have no memory of them.

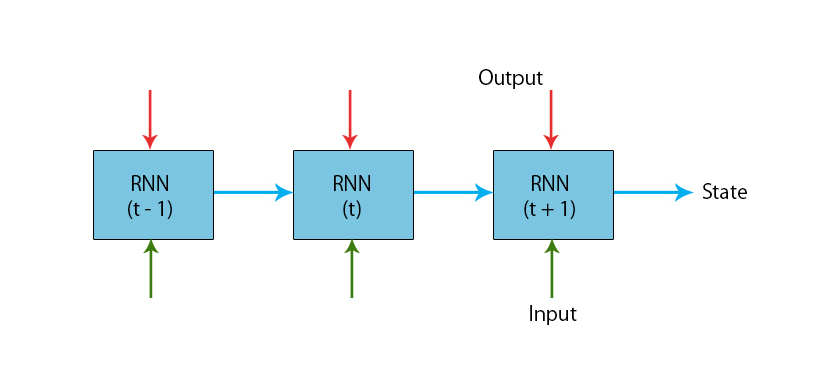

An illustration of a typical RNN model is given below:

RNN models have a memory, the hidden state, that carries information about what was computed in previous steps. The same task is performed on every input, and the RNN uses the same parameters for each input. Because a traditional neural network has independent sets of inputs and outputs, each with its own parameters, it is more complex than an RNN.
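To make the idea of a shared step with memory concrete, here is a minimal Python sketch, assuming a single scalar hidden unit, a tanh activation, and no bias. The weights `W_XH` and `W_HH` and the helper names `step` and `run` are hypothetical, chosen only for illustration:

```python
import math

# Hypothetical scalar weights chosen for illustration; a trained RNN would learn them.
W_XH = 0.8  # input-to-hidden weight
W_HH = 0.5  # hidden-to-hidden (recurrent) weight


def step(h_prev: float, x_t: float) -> float:
    """One recurrent step; the same two weights are reused at every time step."""
    return math.tanh(W_HH * h_prev + W_XH * x_t)


def run(sequence: list[float], h0: float = 0.0) -> float:
    """Feed the sequence one element at a time and return the final hidden state."""
    h = h0
    for x_t in sequence:
        h = step(h, x_t)  # the previous state is fed back in together with the new input
    return h


# Two sequences that differ only in their FIRST element.
a = run([1.0, 0.0, 0.0])
b = run([-1.0, 0.0, 0.0])
print(f"{a:.4f} {b:.4f}")  # the final states differ, so the first input is still "remembered"
```

The two final states differ (they are exact opposites here, because tanh is an odd function), so the first input still affects the state two steps later. Its influence also shrinks at each step, which hints at why simple RNNs struggle with long sequences.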

Now let us try to understand the Recurrent Neural Network with the help of an example.

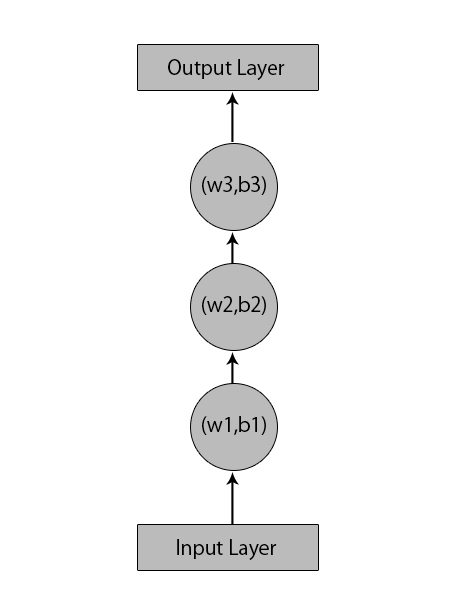

Let’s say we have a neural network with 1 input layer, 3 hidden layers, and 1 output layer.

In a traditional neural network, each hidden layer has its own set of weights and biases: (w1, b1) for hidden layer 1, (w2, b2) for hidden layer 2, and (w3, b3) for hidden layer 3, where w1, w2, and w3 are the weights and b1, b2, and b3 are the biases.

Given this, each layer is independent of the others, and the network cannot remember anything about previous inputs.

An RNN does the following:

- It converts the independent layers into dependent layers by giving all the layers the same weights and biases. This reduces the number of parameters and layers in the network, and it helps the RNN remember the previous output by feeding it as input to the next hidden layer.

- To sum up, all the hidden layers can be combined into a single recurrent layer, so the weights and biases are the same for every hidden layer.
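A rough way to see the parameter saving is to count weights. The sketch below compares three hidden layers that each keep their own weights with one recurrent layer whose weights are shared. It is an illustration under stated assumptions, not a description of any particular library: every layer is assumed to have input weights `W_xh`, recurrent weights `W_hh`, and a bias `b`, and the sizes (10 inputs, 20 hidden units, 3 layers) are hypothetical:

```python
def params_per_layer(n_in: int, n_hidden: int) -> int:
    """Weights W_xh (n_hidden x n_in) and W_hh (n_hidden x n_hidden) plus bias b (n_hidden)."""
    return n_hidden * n_in + n_hidden * n_hidden + n_hidden


def separate_params(num_layers: int, n_in: int, n_hidden: int) -> int:
    """Each hidden layer keeps its own set, like (w1, b1), (w2, b2), (w3, b3)."""
    return num_layers * params_per_layer(n_in, n_hidden)


def shared_params(n_in: int, n_hidden: int) -> int:
    """One shared set reused at every step, so the count does not depend on the sequence length."""
    return params_per_layer(n_in, n_hidden)


print(separate_params(3, n_in=10, n_hidden=20))  # 3 * (200 + 400 + 20) = 1860
print(shared_params(n_in=10, n_hidden=20))       # 620
```

With shared weights, the count stays at 620 whether the sequence has 3 steps or 3,000, while the separate-weights version grows linearly with the number of layers.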

So a recurrent neural network ends up with a single hidden layer that is applied at every time step, with its output looping back as input for the next step.

Now let us look at the equations of an RNN model.

- For calculating the current state:

$$h_t = f(h_{t-1}, x_t)$$

Where:

$x_t$ is the input at time step $t$,

$h_{t-1}$ is the previous state,

$h_t$ is the current state.

- Applying the activation function (here tanh):

$$h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$$

Where:

$W_{xh}$ is the weight at the input neuron,

$W_{hh}$ is the weight at the recurrent neuron.
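As a quick check of the formula, here is a worked example with scalar weights. The values $W_{hh} = 0.5$, $W_{xh} = 1$, $h_0 = 0$, $x_1 = 1$, and $x_2 = 0.5$ are hypothetical, chosen only to make the arithmetic easy to follow:

```latex
\begin{aligned}
h_1 &= \tanh(W_{hh} h_0 + W_{xh} x_1) = \tanh(0.5 \cdot 0 + 1 \cdot 1) = \tanh(1) \approx 0.7616 \\
h_2 &= \tanh(W_{hh} h_1 + W_{xh} x_2) \approx \tanh(0.5 \cdot 0.7616 + 1 \cdot 0.5) = \tanh(0.8808) \approx 0.7068
\end{aligned}
```

Because $h_2$ depends on $h_1$, the first input $x_1$ still influences the state at the second step.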

- For calculating the output:

$$y_t = W_{hy} h_t$$

Where:

$y_t$ is the output,

$W_{hy}$ is the weight at the output layer.
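The three equations above can be combined into a forward pass. Here is a minimal pure-Python sketch, assuming small list-based matrices instead of a numerical library; the sizes, weight values, and helper names (`matvec`, `rnn_forward`) are hypothetical. Like the equations in this section, it omits a bias term, although many implementations add one:

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_forward(xs: list[Vector], W_xh: Matrix, W_hh: Matrix, W_hy: Matrix,
                h0: Vector) -> tuple[list[Vector], list[Vector]]:
    """Return the hidden states h_t and outputs y_t for every time step."""
    h = h0
    hs: list[Vector] = []
    ys: list[Vector] = []
    for x_t in xs:
        # h_t = tanh(W_hh h_{t-1} + W_xh x_t)
        pre = [r + i for r, i in zip(matvec(W_hh, h), matvec(W_xh, x_t))]
        h = [math.tanh(p) for p in pre]
        hs.append(h)
        # y_t = W_hy h_t
        ys.append(matvec(W_hy, h))
    return hs, ys


# Hypothetical sizes and weights: 1 input, 2 hidden units, 1 output.
W_xh = [[1.0], [-1.0]]
W_hh = [[0.5, 0.0], [0.0, 0.5]]
W_hy = [[1.0, 0.0]]
hs, ys = rnn_forward([[1.0], [0.5]], W_xh, W_hh, W_hy, h0=[0.0, 0.0])
print(ys)  # roughly [[0.7616], [0.7068]]
```

With these weights the first hidden unit follows $\tanh(1) \approx 0.7616$ and then $\tanh(0.8808) \approx 0.7068$, and the second unit mirrors it with the opposite sign.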

Steps for Training a Recurrent Neural Network

Given below are the steps for training a recurrent neural network:

- The input is fed to the network one time step at a time, and every step uses the same weights and activation function.

- The current state is calculated from the current input and the previous state.

- The current state $h_t$ then becomes $h_{t-1}$ for the next time step.

- These steps repeat for as many time steps as the problem requires, combining the information from all the previous steps.

- Once all time steps have been processed, the output is calculated from the final state, which carries information from all the previous steps.

- An error is then computed as the difference between the actual (target) output and the output generated by the RNN model.

- Finally, the error is backpropagated through the time steps (backpropagation through time) to update the weights.
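The training steps above can be sketched for a single scalar hidden unit. This is a minimal illustration, not a production training loop: it assumes a tanh activation with no bias, an error measured only on the final output, a squared-error loss, and plain gradient descent; the sequence, target, starting weights, learning rate, and helper names are all hypothetical. The backward pass is backpropagation through time: the error is pushed back through every time step, and because the weights are shared, each step adds a term to the same gradient:

```python
import math

Params = tuple[float, float, float]  # (w_xh, w_hh, w_hy), all scalars for readability


def forward(params: Params, xs: list[float], h0: float = 0.0) -> tuple[list[float], float]:
    """Forward pass: compute every hidden state, then the output from the final state."""
    w_xh, w_hh, w_hy = params
    hs = [h0]  # hs[t] holds h_t; hs[0] is the initial state
    for x_t in xs:
        hs.append(math.tanh(w_hh * hs[-1] + w_xh * x_t))
    return hs, w_hy * hs[-1]


def loss(params: Params, xs: list[float], target: float) -> float:
    """Squared error between the target and the model's final output."""
    _, y = forward(params, xs)
    return 0.5 * (y - target) ** 2


def bptt_grads(params: Params, xs: list[float], target: float) -> Params:
    """Backpropagation through time: walk the time steps in reverse order."""
    w_xh, w_hh, w_hy = params
    hs, y = forward(params, xs)
    dy = y - target
    g_hy = dy * hs[-1]
    g_xh = g_hh = 0.0
    dh = dy * w_hy  # dLoss/dh_T
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)  # back through tanh
        g_hh += da * hs[t - 1]        # the shared weights collect a term from every step
        g_xh += da * xs[t - 1]
        dh = da * w_hh                # pass the error back to h_{t-1}
    return g_xh, g_hh, g_hy


def train(params: Params, xs: list[float], target: float,
          lr: float = 0.1, epochs: int = 200) -> Params:
    """Repeat forward pass, error, and weight update with plain gradient descent."""
    for _ in range(epochs):
        grads = bptt_grads(params, xs, target)
        params = tuple(p - lr * g for p, g in zip(params, grads))
    return params


xs, target = [1.0, 0.5, -0.3], 0.8
start = (0.3, 0.2, 0.5)  # hypothetical initial weights
trained = train(start, xs, target)
print(loss(start, xs, target), loss(trained, xs, target))  # the loss should drop
```

A quick sanity check for code like this is to compare `bptt_grads` with a finite-difference estimate of the gradient of `loss`.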

Advantages & Disadvantages of Recurrent Neural Networks

The advantages and disadvantages of RNNs are given below:

Advantages:

- An RNN can process inputs of any length.

- An RNN is designed to carry information forward through time, which is very helpful in time series prediction.

- The model size does not grow with the length of the input.

- The weights are shared across time steps.

- RNNs can use their internal memory to process arbitrary sequences of inputs, which is not the case with feedforward neural networks.

Disadvantages:

- Because of its recurrent nature, computation is slow: each time step depends on the result of the previous one, so the steps of a sequence cannot be computed in parallel.

- Training RNN models can be difficult.

- With ReLU or tanh as the activation function, it becomes very difficult to process very long sequences.

- RNNs are prone to the exploding gradient and vanishing gradient problems.
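The vanishing and exploding gradient problems can be seen in a few lines. For a scalar tanh RNN, the chain rule gives $\partial h_T / \partial h_0 = \prod_{t=1}^{T} W_{hh}(1 - h_t^2)$, a product of one factor per time step. The sketch below, with hypothetical weights and inputs, no bias, and a hypothetical helper name `dh_T_dh_0`, evaluates that product over 50 steps:

```python
import math


def dh_T_dh_0(w_hh: float, w_xh: float, xs: list[float], h0: float = 0.0) -> float:
    """Gradient of the final hidden state with respect to the initial one (scalar tanh RNN).

    By the chain rule it is the product over steps of w_hh * (1 - h_t**2).
    """
    h, grad = h0, 1.0
    for x_t in xs:
        h = math.tanh(w_hh * h + w_xh * x_t)
        grad *= w_hh * (1.0 - h * h)  # local derivative dh_t/dh_{t-1}
    return grad


zeros = [0.0] * 50
print(dh_T_dh_0(0.5, 1.0, zeros))        # 0.5**50, about 8.9e-16: the gradient vanishes
print(dh_T_dh_0(1.5, 1.0, zeros))        # 1.5**50, about 6.4e8: the gradient explodes
print(dh_T_dh_0(1.0, 1.0, [1.0] * 50))   # tiny: saturated tanh also makes it vanish
```

With zero inputs the state stays at 0, so each factor is just $W_{hh}$: below 1 the product vanishes, above 1 it explodes. The last call shows a third case: when tanh saturates, $1 - h_t^2$ is well below 1 at every step and the gradient vanishes even with $W_{hh} = 1$.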

Conclusion – Recurrent Neural Networks (RNN)

In this article, we have looked at another type of Artificial Neural Network, the Recurrent Neural Network. We focused on the main difference that makes RNNs stand out from other types of neural networks, and on the areas where they are used extensively, such as speech recognition and NLP (Natural Language Processing). We also walked through how RNN models work and the functions used to build a robust RNN model.