Updated April 20, 2023

Definition of Scala DataFrame

A DataFrame is a distributed collection of data organized into named columns; in other words, it is an organized Dataset. A Dataset is a collection of data, and its API is available in Scala and Java. A DataFrame is conceptually equivalent to a table in a relational database, but it comes with more optimization techniques. The DataFrame concept was introduced by Spark. The DataFrame API is available for many languages, including Java, Scala, R, and Python. A DataFrame can be created from different sources, including RDDs, Hive tables, data files, and many more.

Syntax:

val variable_name = sqlContext.read.json("file_name")

In this syntax, we read the data from a JSON file. We pass the file name as a parameter and assign the result to a variable with any valid name. The DataFrame API provides various methods to operate on the loaded data. Here, read gives us a reader, and its json method loads the file. A practical example of the syntax:

val myObj = sqlContext.read.json("file.json")

In this way, we can read the file data using the read method. The file must be located where the given path resolves, which for a relative path in a local run is typically the working directory of the application.
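A self-contained sketch of the same read, assuming Spark 2.x or later, where SparkSession is the usual entry point instead of sqlContext. The object name, the temp-file helper, and the sample records are hypothetical and only make the example runnable:

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadJsonSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("ReadJsonSketch")
    .master("local[*]")
    .getOrCreate()

  // Writes two hypothetical records, one JSON object per line, to a temp file
  // and returns the path of that file.
  def writeSampleFile(): String = {
    val lines = Seq(
      """{"name": "Asha", "city": "Mumbai"}""",
      """{"name": "Ravi", "city": "Pune"}"""
    ).mkString("\n")
    val path = Files.createTempFile("students", ".json")
    Files.write(path, lines.getBytes(StandardCharsets.UTF_8))
    path.toString
  }

  // read returns a DataFrameReader; json(path) loads the file into a DataFrame.
  def readStudents(path: String): DataFrame = spark.read.json(path)
}
```

Calling ReadJsonSketch.readStudents(ReadJsonSketch.writeSampleFile()).show() prints the two records. By default the JSON reader expects one JSON object per line, which is why the sample file is written that way.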

How Does DataFrame Work in Scala?

A DataFrame is used to work with large amounts of data. In Scala, we use a SparkSession to read the file. Spark provides an API for Scala to work with DataFrames. This API was created for data science applications and for big data. The steps below show how to create a DataFrame in Scala using a SparkSession, read data from a file, and work with the result.

1. To Read File

To read a file, we use the read method. On the reader it returns, we call a format-specific method such as json and pass the name of the file we want to read data from.

Example:

val obj = sparkSession.read.json(file_name)

2. Specify the File Type

To state the file type explicitly, the reader has a method for each supported format. For a CSV file, for instance, we call the csv() method and pass the file path to it.
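As a fuller sketch of this step, assuming a CSV file whose first line is a header row: header and inferSchema are standard options of the CSV reader, while the object name, helper, and sample data are hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadCsvSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("ReadCsvSketch")
    .master("local[*]")
    .getOrCreate()

  // Writes a small hypothetical CSV file with a header row and returns its path.
  def writeSampleFile(): String = {
    val content = "name,city\nAsha,Mumbai\nRavi,Pune\n"
    val path = Files.createTempFile("students", ".csv")
    Files.write(path, content.getBytes(StandardCharsets.UTF_8))
    path.toString
  }

  def readCsv(path: String): DataFrame =
    spark.read
      .option("header", "true")      // take column names from the first line
      .option("inferSchema", "true") // let Spark infer the column types
      .csv(path)
}
```

Without the header option, Spark treats the first line as data and assigns generated column names such as _c0 and _c1.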

Example:

val obj = sparkSession.read.csv(file_path)

3. Print the File Data

The Spark API provides various methods to work with a DataFrame in Scala. Suppose we have obtained the file data through the read method and now want to print it. For this, the DataFrame has a show() method. We call it on the DataFrame object returned by the reader, not on the Spark session itself.

Example:

val obj = sparkSession.read.json(file_name)

obj.show(5)

In this way we can display our data and also limit the number of rows we want to print. Here we pass 5 as the limit, so it prints only the first five rows of the file.
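A runnable sketch of show(), assuming an in-memory DataFrame instead of a file so that no input data is needed. The object name and the ten sample rows are hypothetical:

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ShowSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("ShowSketch")
    .master("local[*]")
    .getOrCreate()

  // Ten hypothetical rows, so that limiting the output to five is visible.
  def sample: DataFrame = {
    import spark.implicits._
    (1 to 10).map(i => (s"student$i", i)).toDF("name", "rollNo")
  }

  def main(args: Array[String]): Unit = {
    val obj = sample
    obj.show(5)                   // prints the first 5 rows as a text table
    obj.show(5, truncate = false) // same rows, without shortening long cell values
    spark.stop()
  }
}
```

show() only prints to the console and returns nothing; to keep a limited DataFrame for further processing, limit(5) can be used instead.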

4. To Print the Schema

We can also inspect the schema definition with this API. For this, the DataFrame provides a method called printSchema(), which prints the column names and types of the DataFrame.
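As a runnable sketch of this step, assuming an in-memory DataFrame with hypothetical columns name, age, and city; the schema member is shown as well because it returns the same information as a value rather than printing it:

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object SchemaSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("SchemaSketch")
    .master("local[*]")
    .getOrCreate()

  // One hypothetical row; the column types are derived from the tuple types.
  def sample: DataFrame = {
    import spark.implicits._
    Seq(("Asha", 21, "Mumbai")).toDF("name", "age", "city")
  }

  def main(args: Array[String]): Unit = {
    val obj = sample
    obj.printSchema()                                 // prints the schema as a tree
    println(obj.schema.fieldNames.mkString(", "))     // column names as plain strings
    spark.stop()
  }
}
```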

Example:

val obj = sparkSession.read.json(file_name)

obj.printSchema()

5. Select Columns From a DataFrame

This API also lets us select specific columns from the DataFrame. It provides a method for this called select(). With it we can choose the columns we want to print and, if required, limit the number of rows by chaining the show() method.

Example:

obj.select("name", "address", "city").show(30)

In this way we can use select in the Scala DataFrame API. We just need to mention the column names in order to access them.
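A small sketch of select(), assuming an in-memory DataFrame with the three columns used above. The object name and sample rows are hypothetical; the second helper shows the equivalent form that takes Column objects built with col:

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

object SelectSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("SelectSketch")
    .master("local[*]")
    .getOrCreate()

  // Hypothetical student rows.
  def sample: DataFrame = {
    import spark.implicits._
    Seq(
      ("Asha", "12 Hill Road", "Mumbai"),
      ("Ravi", "7 Lake View", "Pune")
    ).toDF("name", "address", "city")
  }

  // Select by column name.
  def nameAndCity(df: DataFrame): DataFrame = df.select("name", "city")

  // Select by Column object; useful when a column needs further expressions.
  def nameAndCityCols(df: DataFrame): DataFrame = df.select(col("name"), col("city"))
}
```

select() returns a new DataFrame and leaves the original unchanged, so the result can be chained with show() or other methods.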

6. Condition Based Search

With this API we can filter rows by the values in their columns, using the filter() method. Suppose we want only the students whose city is Mumbai; the filter method handles exactly this case. We mention the column name and the value by which we want to filter our data, so the filter is effectively a condition.

Example:

obj.filter("city == 'Mumbai'").show(20)

7. Count the Number of Records

We can also count the number of rows present in the DataFrame. For this, we use the count() method, which returns the number of records.

Example:

obj.filter("city == 'Mumbai'").count()

In this way, we can count the number of records whose city is Mumbai. Here we use count() together with a filter, but it can also be used on its own.
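The filter and count steps can be sketched together as follows, assuming an in-memory DataFrame with hypothetical rows. Both the SQL-string condition used above and the equivalent Column expression are shown:

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

object FilterCountSketch {
  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("FilterCountSketch")
    .master("local[*]")
    .getOrCreate()

  // Three hypothetical students, two of them in Mumbai.
  def sample: DataFrame = {
    import spark.implicits._
    Seq(("Asha", "Mumbai"), ("Ravi", "Pune"), ("Meera", "Mumbai")).toDF("name", "city")
  }

  // Condition written as a SQL expression string.
  def inMumbaiSql(df: DataFrame): DataFrame = df.filter("city == 'Mumbai'")

  // Same condition as a Column expression; === builds an equality test.
  def inCity(df: DataFrame, city: String): DataFrame = df.filter(col("city") === city)
}
```

With these sample rows, FilterCountSketch.inCity(FilterCountSketch.sample, "Mumbai").count() returns 2. count() returns a Long and is an action, so calling it triggers the actual computation.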

Points to remember while working with DataFrames in Scala:

- This API is available for different languages like Java, Python, Scala, and R.

- It can process data ranging in size from kilobytes to petabytes.

- A DataFrame is a Dataset organized into named columns; a Dataset is a collection of data in Scala.

- In Scala, a DataFrame is an object of type Dataset[Row].
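The last point can be illustrated with a short sketch. In the Scala API, DataFrame is a type alias for Dataset[Row], so a DataFrame can be used wherever a Dataset[Row] is expected. The Student case class, object name, and sample rows are hypothetical:

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

object DatasetRowSketch {
  // Hypothetical record type used for the typed view of the data.
  case class Student(name: String, city: String)

  // Local session for experimentation (assumption: no cluster is available).
  lazy val spark: SparkSession = SparkSession.builder()
    .appName("DatasetRowSketch")
    .master("local[*]")
    .getOrCreate()

  def sample: DataFrame = {
    import spark.implicits._
    Seq(("Asha", "Mumbai"), ("Ravi", "Pune")).toDF("name", "city")
  }

  // Compiles without any conversion because DataFrame = Dataset[Row].
  def asRows(df: DataFrame): Dataset[Row] = df

  // as[T] turns the untyped rows into a typed Dataset, matching columns by name.
  def asStudents(df: DataFrame): Dataset[Student] = {
    import spark.implicits._
    df.as[Student]
  }
}
```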

Example of Scala DataFrame

An example is given below:

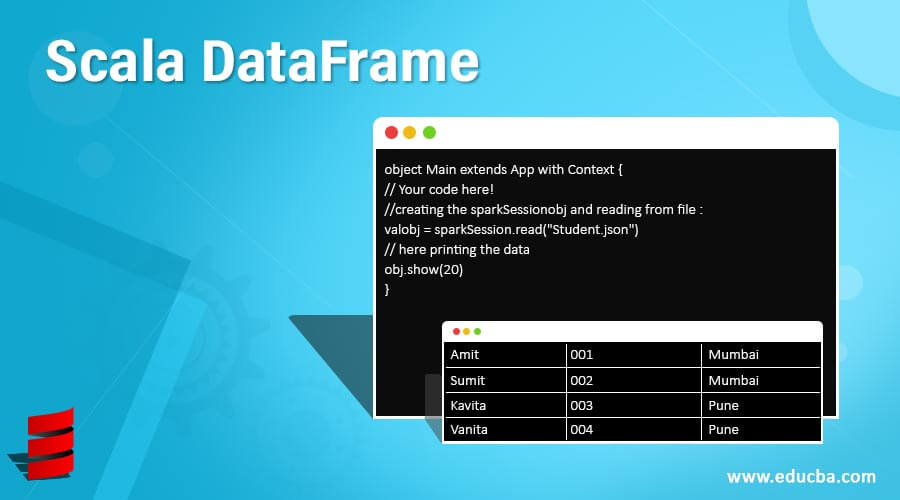

In this example, we create a Spark session; for this we mix a Context trait into an object that extends App. We then read the student data from the file and print it using the show() method.
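The Context trait is not defined in this article. A minimal sketch of what such a trait might look like, assuming it only needs to expose a sparkSession value; the app name and the local master setting are placeholders:

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper trait: anything that mixes it in gets a SparkSession.
trait Context {
  // lazy, so the session is created on first use rather than at construction time.
  lazy val sparkSession: SparkSession = SparkSession.builder()
    .appName("scala-dataframe-example") // placeholder application name
    .master("local[*]")                 // run locally, using all available cores
    .getOrCreate()
}
```

Keeping the session in a trait means every entry point of the application can reuse the same setup by writing extends App with Context.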

Example:

object Main extends App with Context {

// Your code here!

//creating the sparkSessionobj and reading from file :

valobj = sparkSession.read("Student.json")

// here printing the data

obj.show(20)

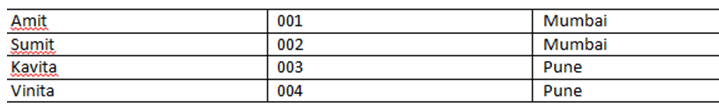

}Output:

Conclusion

The Scala DataFrame API is provided by Spark. A DataFrame is similar to a table in a relational database, but here we read the records from a file. These files can be JSON or CSV files. The DataFrame API provides various methods for different cases, so we can perform many kinds of operations with it.
