Updated March 16, 2023

Introduction to Scikit Learn KNN

Scikit-learn KNN is simple and easy to understand, and it is one of the most widely used machine learning algorithms. KNN stands for k-nearest neighbors, and it is used in a variety of applications such as healthcare, finance, image recognition, and video recognition. The KNN algorithm can be used for both regression and classification problems, and in every application it relies on feature similarity: a new data point is labeled according to the training points whose features are most similar to it.

Key Takeaways

- The k-nearest neighbors algorithm is a supervised machine learning algorithm used for classification and regression tasks.

- Supervised machine learning algorithms depend on labeled input data, from which they learn to produce correct outputs for new, unlabeled data.
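As a minimal sketch of the two supervised flavors, the snippet below fits scikit-learn's KNeighborsClassifier and KNeighborsRegressor on the same tiny one-feature dataset. The data values are made up for illustration only. The classifier returns the majority label of the three nearest training points, while the regressor returns the mean of their targets.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor

# Hypothetical labeled training data: one feature per sample
X_train = np.array([[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]])
y_class = np.array([0, 0, 0, 1, 1, 1])                 # discrete labels
y_value = np.array([1.5, 2.5, 3.5, 10.5, 11.5, 12.5])  # continuous labels

clf = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_class)
reg = KNeighborsRegressor(n_neighbors=3).fit(X_train, y_value)

X_new = np.array([[2.2], [10.8]])
print(clf.predict(X_new))  # majority class of the 3 nearest neighbors
print(reg.predict(X_new))  # mean target of the 3 nearest neighbors
```

Both estimators share the same neighbor search; only the way the neighbors' labels are combined differs.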

What is Scikit Learn KNN?

The sklearn.neighbors module provides functionality for both unsupervised and supervised neighbors-based learning methods. Unsupervised nearest neighbors is the foundation of many other learning methods, notably manifold learning and spectral clustering. Supervised neighbors-based learning comes in two flavors: classification for data with discrete labels and regression for data with continuous labels. The principle behind nearest neighbor methods is to find a predefined number of training samples closest in distance to the new point and predict its label from them. The number of samples can be a user-defined constant (k-nearest neighbor learning), or it can vary based on the local density of points (radius-based neighbor learning).
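The difference between a constant number of neighbors and a density-dependent number can be seen by comparing KNeighborsClassifier with RadiusNeighborsClassifier. This is a minimal sketch on hypothetical two-cluster data: with k = 3, every query uses exactly three neighbors, while with radius = 1.0 the number of neighbors depends on how many training points lie nearby.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier, RadiusNeighborsClassifier

# Hypothetical data: a dense cluster of class 0 and a smaller cluster of class 1
X = np.array([[0.0, 0.0], [0.5, 0.0], [0.0, 0.5], [5.0, 5.0], [5.5, 5.0]])
y = np.array([0, 0, 0, 1, 1])

knn = KNeighborsClassifier(n_neighbors=3).fit(X, y)    # fixed k
rnn = RadiusNeighborsClassifier(radius=1.0).fit(X, y)  # fixed radius

queries = np.array([[0.2, 0.2], [5.2, 5.0]])
print(knn.predict(queries))
print(rnn.predict(queries))

# Count how many training points fall inside the radius for each query
_, ind = rnn.radius_neighbors(queries)
print([len(i) for i in ind])
```

The first query finds three points within the radius and the second finds only two, which is what "varies with density" means in practice.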

In general, the distance can be any metric measure; standard Euclidean distance is the most common choice. Neighbors-based methods in scikit-learn are known as non-generalizing machine learning methods, because they simply remember all of their training data, possibly transformed into a fast indexing structure such as a KD tree or Ball tree. Despite their simplicity, KNN methods have been successful in a large number of regression and classification problems.
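To illustrate the indexing structure mentioned above, the sketch below builds an unsupervised NearestNeighbors index with algorithm="kd_tree" and compares its results with a brute-force search. The random data is an assumption made only for the demonstration. Both searches use the default Euclidean (Minkowski, p = 2) distance, so they should find the same neighbors; the KD tree only changes how fast they are found.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(0)
X = rng.random((200, 3))  # hypothetical data: 200 points in 3 dimensions

tree_nn = NearestNeighbors(n_neighbors=4, algorithm="kd_tree").fit(X)
brute_nn = NearestNeighbors(n_neighbors=4, algorithm="brute").fit(X)

query = X[:5]
d_tree, i_tree = tree_nn.kneighbors(query)
d_brute, i_brute = brute_nn.kneighbors(query)

print(np.allclose(d_tree, d_brute))  # same distances from both search strategies
print(i_tree[:, 0])  # each query point is its own nearest neighbor (distance 0)
```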

The classes in sklearn.neighbors can handle either NumPy arrays or SciPy sparse matrices as input. For dense matrices, a large number of distance metrics are supported. Because it is a non-parametric method, KNN is often successful in classification situations where the decision boundary is very irregular. Many learning routines rely on nearest neighbors at their core.
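A small sketch of sparse input, assuming a hypothetical three-feature dataset: the same KNeighborsClassifier is fitted once on a dense NumPy array and once on a scipy.sparse CSR matrix. With the default algorithm="auto", scikit-learn falls back to brute-force search for sparse input, and both models should give the same prediction.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical data with mostly zero entries
X_dense = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]], dtype=float)
y = np.array([0, 1, 2, 0])
X_sparse = csr_matrix(X_dense)

clf_dense = KNeighborsClassifier(n_neighbors=1).fit(X_dense, y)
clf_sparse = KNeighborsClassifier(n_neighbors=1).fit(X_sparse, y)

query = np.array([[0.9, 0.1, 0.0]])
print(clf_dense.predict(query))
print(clf_sparse.predict(csr_matrix(query)))
```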

How to Use Scikit Learn KNN?

The k in the classifier's name represents the number of nearest neighbors, where k is an integer value specified by the user. As the name suggests, this classifier implements the k-nearest neighbors algorithm. To use scikit-learn KNN, follow the steps below:

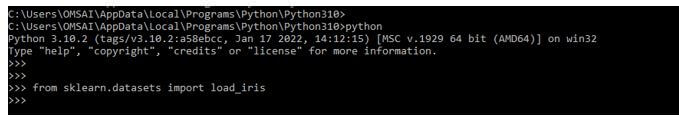

1. In the first step, we import the load_iris function, which loads the iris dataset, from the sklearn.datasets module.

Code:

from sklearn.datasets import load_iris

Output:

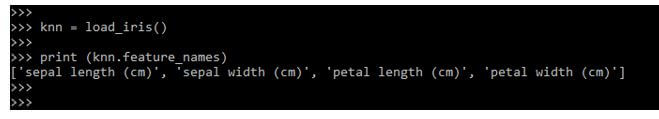

2. After importing the loader, we load the iris dataset into a variable named knn and print its feature names.

Code:

knn = load_iris()

print(knn.feature_names)

Output:

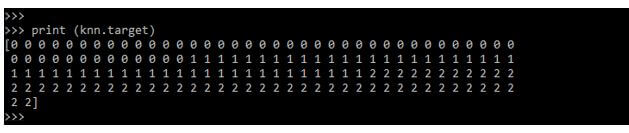

3. Next, we print the target of the dataset, that is, the class label of each sample.

Code:

print(knn.target)

Output:

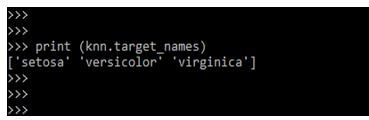

4. After printing the target, we print the target_names of the dataset, which give the species name for each target value.

Code:

print(knn.target_names)

Output:

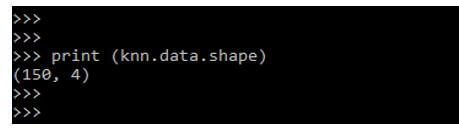

5. Next, we check the number of observations and features.

Code:

print(knn.data.shape)

Output:

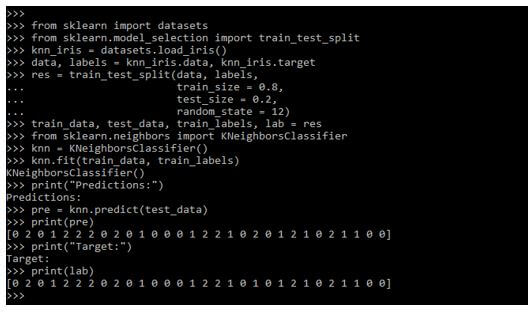

6. The example below shows how to create and use the nearest neighbor classifier.

Code:

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

knn_iris = datasets.load_iris()

data, labels = knn_iris.data, knn_iris.target

res = train_test_split(data, labels, train_size=0.8, test_size=0.2, random_state=12)  # 80% train, 20% test

train_data, test_data, train_labels, lab = res

knn = KNeighborsClassifier()  # n_neighbors defaults to 5

knn.fit(train_data, train_labels)

print("Predictions:")

pre = knn.predict(test_data)

print(pre)

print("Target:")

print(lab)

Output:

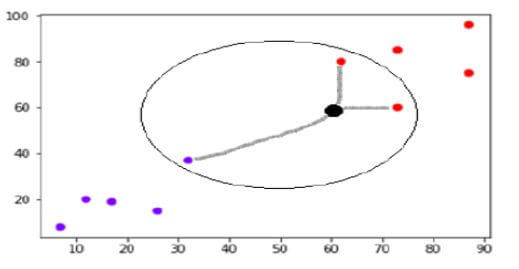
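Comparing the two printed arrays by eye works for a small test set, but it is easier to summarize them as a single accuracy number. This self-contained sketch repeats the iris split (the test_size=0.2 and random_state=12 values are choices made for reproducibility, not requirements) and scores the predictions with sklearn.metrics.accuracy_score, which should match the classifier's own score method.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
train_data, test_data, train_labels, test_labels = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=12)

knn = KNeighborsClassifier()  # n_neighbors defaults to 5
knn.fit(train_data, train_labels)
pred = knn.predict(test_data)

# Fraction of test samples whose predicted label equals the true label
acc = accuracy_score(test_labels, pred)
print("Accuracy:", acc)
print("knn.score:", knn.score(test_data, test_labels))
```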

How does Scikit Learn KNN work?

In KNN, the number of neighbors k is the core deciding factor. When there are two classes, k is generally chosen to be an odd number so that the vote cannot end in a tie. When k is 1, the algorithm is simply called the nearest neighbor algorithm.
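Because k drives the result, a common way to pick it is to try several candidate values and compare cross-validated accuracy. The sketch below does this on the iris dataset with odd values of k; the candidate list and cv=5 are assumptions for illustration, not recommended defaults.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

results = {}
for k in [1, 3, 5, 7, 9]:  # hypothetical candidate values of k
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y, cv=5)
    results[k] = scores.mean()
    print(f"k={k}: mean accuracy {results[k]:.3f}")

best_k = max(results, key=results.get)
print("Best k:", best_k)
```

Iris has three classes, so an odd k does not rule out ties there; the odd-k rule only guarantees a clear majority in two-class problems.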

The example below shows how KNN works.

Suppose p1 is the point whose label we want to predict. First we find the k training points closest to p1, and then we classify p1 by a majority vote of those k neighbors: each neighbor votes for its own class, and the class with the most votes becomes the prediction. To find the closest points, we compute the distance between p1 and every training point using a distance measure such as Euclidean distance.

Scikit-learn KNN performs the following steps:

- Calculate the distance

- Find the closest neighbors

- Labels vote
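The three steps above can be sketched from scratch with NumPy. This is an illustrative implementation written for this guide, not scikit-learn's internal code; the function name knn_predict and the six training points are hypothetical. The result is cross-checked against KNeighborsClassifier.

```python
from collections import Counter

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def knn_predict(X_train, y_train, p1, k=3):
    """Predict the label of p1 by majority vote of its k nearest neighbors."""
    # Step 1: Euclidean distance from p1 to every training point
    distances = np.linalg.norm(X_train - p1, axis=1)
    # Step 2: indices of the k closest training points
    nearest = np.argsort(distances)[:k]
    # Step 3: each neighbor votes for its label; the most common label wins
    votes = Counter(y_train[nearest])
    return votes.most_common(1)[0][0]


X_train = np.array([[1.0, 1.0], [1.5, 2.0], [3.0, 4.0],
                    [5.0, 7.0], [3.5, 5.0], [4.5, 5.0]])
y_train = np.array(["A", "A", "A", "B", "B", "B"])
p1 = np.array([4.0, 5.0])

print(knn_predict(X_train, y_train, p1, k=3))

# The same query through scikit-learn for comparison
sk_pred = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train).predict([p1])[0]
print(sk_pred)
```

Here the three nearest points to p1 are (3.5, 5), (4.5, 5) and (3, 4), which vote B, B and A, so the majority label is B.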

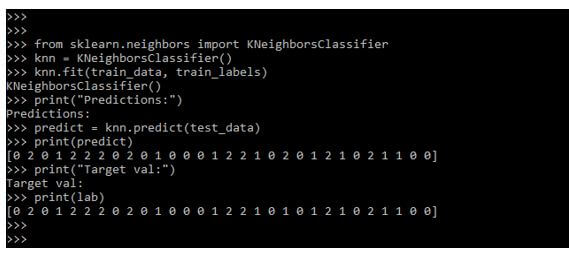

The example below shows how KNN works in practice. It reuses the iris training and test data (train_data, test_data, train_labels, and lab) created in the previous section.

Code:

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()

knn.fit(train_data, train_labels)

print("Predictions:")

predict = knn.predict(test_data)

print(predict)

print("Target val:")

print(lab)

Output:

Scikit Learn KNN Classifier

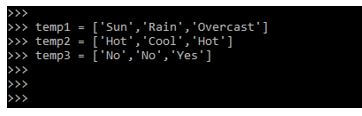
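To see the distance, neighbor, and vote steps for a single prediction, the fitted classifier's kneighbors and predict_proba methods can be inspected. This sketch assumes the same iris split as before (test_size=0.2, random_state=12 are illustrative choices). With the default uniform weights, predict_proba returns the share of the five neighbors that belong to each class.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
train_data, test_data, train_labels, test_labels = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=12)

knn = KNeighborsClassifier(n_neighbors=5).fit(train_data, train_labels)

sample = test_data[:1]                        # one test point
distances, indices = knn.kneighbors(sample)   # steps 1 and 2
neighbor_labels = train_labels[indices[0]]
vote_counts = np.bincount(neighbor_labels, minlength=3)  # step 3

print("Neighbor distances:", distances[0])
print("Neighbor labels:", neighbor_labels)
print("Vote shares:", knn.predict_proba(sample)[0])
print("Majority label:", vote_counts.argmax())
print("Prediction:", knn.predict(sample)[0])
```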

To define a dataset, we need two kinds of attributes in our data: features and a label. The example below shows how to create a small weather dataset.

Code:

weather = ['Sun', 'Rain', 'Overcast']

temp = ['Hot', 'Cool', 'Hot']

play = ['No', 'No', 'Yes']

Output:

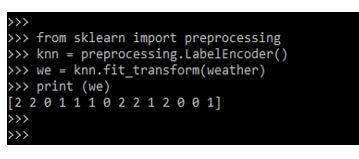

In the example below, we encode the data columns. Many algorithms, including KNN, require numerical input, so categorical string columns must be converted to numbers. LabelEncoder maps each distinct string value to an integer.

Code:

from sklearn import preprocessing

knn = preprocessing.LabelEncoder()

we = knn.fit_transform(weather)

print(we)

Output:

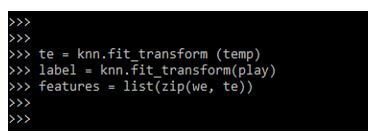
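LabelEncoder assigns integers in sorted (alphabetical) order of the distinct values, and the mapping can be read back from its classes_ attribute or reversed with inverse_transform. A short sketch using the weather values from this section:

```python
from sklearn import preprocessing

weather = ['Sun', 'Rain', 'Overcast']

le = preprocessing.LabelEncoder()
encoded = le.fit_transform(weather)

print(list(le.classes_))                  # position in this list = encoded value
print(encoded)                            # integer code for each original value
print(list(le.inverse_transform([0, 2]))) # decode integers back to strings
```

Because the classes are sorted, Overcast becomes 0, Rain becomes 1, and Sun becomes 2, regardless of the order in which they appear in the list.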

Now we encode the remaining columns and combine the feature columns into a single list of feature pairs using the zip function.

Code:

te = knn.fit_transform(temp)

label = knn.fit_transform(play)

features = list(zip(we, te))

Output:

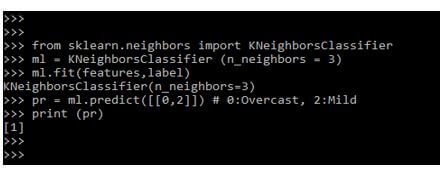
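zip produces a list of tuples, which scikit-learn accepts as a 2D feature matrix. An equivalent alternative, shown here as a sketch using the encoded values for the three-row weather data, is numpy.column_stack, which yields a NumPy array with an explicit (samples, features) shape.

```python
import numpy as np

we = np.array([2, 1, 0])  # encoded weather: Sun, Rain, Overcast
te = np.array([1, 0, 1])  # encoded temperature: Hot, Cool, Hot

features_zip = list(zip(we, te))          # list of (weather, temp) tuples
features_arr = np.column_stack((we, te))  # same data as a 2D array

print(features_arr)
print(features_arr.shape)  # (number of samples, number of features)
```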

In the example below, we build the KNN classifier model. We first import KNeighborsClassifier and create a classifier object, passing the number of neighbors (n_neighbors) as an argument.

Code:

from sklearn.neighbors import KNeighborsClassifier

ml = KNeighborsClassifier(n_neighbors=3)

ml.fit(features, label)

pr = ml.predict([[0, 1]])  # 0: Overcast, 1: Hot

print(pr)

Output:

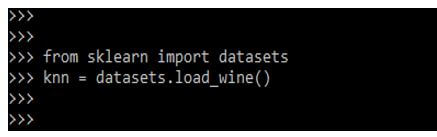
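The steps above reuse one LabelEncoder object (named knn) for every column, so after the last fit_transform call its mapping only describes the play column. A minimal sketch, assuming the three-row weather data from this section, keeps one encoder per column so that the query can be built from strings and the numeric prediction decoded back to Yes or No. The encoder variable names are hypothetical.

```python
from sklearn import preprocessing
from sklearn.neighbors import KNeighborsClassifier

weather = ['Sun', 'Rain', 'Overcast']
temp = ['Hot', 'Cool', 'Hot']
play = ['No', 'No', 'Yes']

# One encoder per column keeps each column's mapping available
weather_le = preprocessing.LabelEncoder()
temp_le = preprocessing.LabelEncoder()
play_le = preprocessing.LabelEncoder()

we = weather_le.fit_transform(weather)
te = temp_le.fit_transform(temp)
label = play_le.fit_transform(play)
features = list(zip(we, te))

model = KNeighborsClassifier(n_neighbors=3)
model.fit(features, label)

# Encode the query with the same mappings used for training
query = [[weather_le.transform(['Overcast'])[0], temp_le.transform(['Hot'])[0]]]
pred = model.predict(query)
print(play_le.inverse_transform(pred))
```

With only three training rows and n_neighbors=3, every query is decided by all three rows, so the prediction is always the overall majority label; a larger dataset is needed for the query features to matter.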

Having built a KNN classifier on the weather data, we now apply KNN to a multiclass problem, where the label can take more than two values. In the example below, we load the wine dataset.

Code:

from sklearn import datasets

knn = datasets.load_wine()

Output:

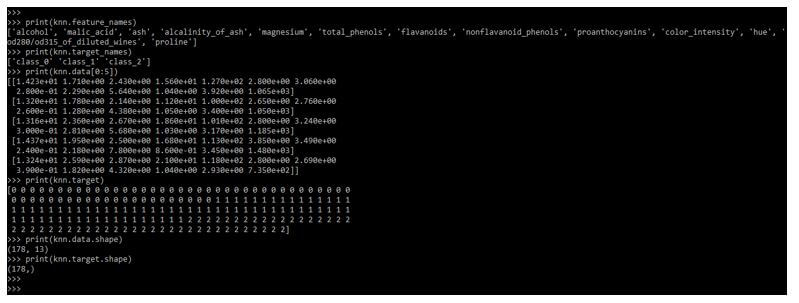

After loading the wine dataset, we explore the data.

Code:

print(knn.feature_names)

print(knn.target_names)

print(knn.data[0:5])

print(knn.target)

print(knn.data.shape)

print(knn.target.shape)

Output:

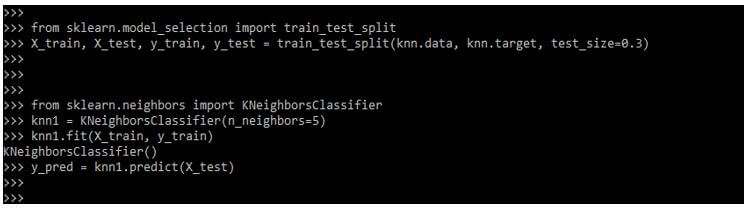

In the example below, we split the data and build the KNN classifier with k = 5.

Code:

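from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

X_train, X_test, y_train, y_test = train_test_split(knn.data, knn.target, test_size=0.3)  # 70% train, 30% test

knn1 = KNeighborsClassifier(n_neighbors=5)

knn1.fit(X_train, y_train)

y_pred = knn1.predict(X_test)

Output: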

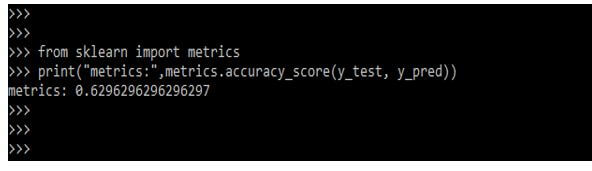

Finally, we compute the accuracy of the KNN classifier on the test data.

Code:

from sklearn import metrics

print("Accuracy:", metrics.accuracy_score(y_test, y_pred))

Output:

Parameters

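Below are the main parameters of the scikit-learn k-nearest neighbors classifier (KNeighborsClassifier):

- n_neighbors – The default value of this parameter is 5 and the type is int. It defines the number of neighbors used for each prediction.

- weights – The default value of this parameter is 'uniform'. It is the weight function used in prediction: 'uniform' weights all neighbors equally, while 'distance' weights each neighbor by the inverse of its distance.

- algorithm – The default value of this parameter is 'auto'. It selects the neighbor search algorithm ('ball_tree', 'kd_tree', or 'brute'); 'auto' chooses the most appropriate one based on the data passed to fit.

- leaf_size – The default value of this parameter is 30 and the type is int. It is the leaf size passed to the BallTree or KDTree and affects the speed of building and querying the tree.

- p – The default value of this parameter is 2. It is the power parameter for the Minkowski metric: p = 1 gives Manhattan distance and p = 2 gives Euclidean distance.

- metric – The default value of this parameter is 'minkowski'. It defines the metric used for distance computation; with p = 2 it is equivalent to the standard Euclidean distance.

- metric_params – The default value of this parameter is None. It holds additional keyword arguments for the metric function.

- n_jobs – The default value of this parameter is None and the type is int. It sets the number of parallel jobs used for the neighbor search; None means 1 (unless running inside a joblib parallel context), and -1 means using all processors.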
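The sketch below checks the documented defaults with get_params and then compares a few parameter settings on the wine dataset. The particular combinations, the 70/30 stratified split, and random_state=0 are assumptions chosen for illustration; the StandardScaler pipeline is included because KNN is distance-based, so features on large numeric scales can dominate the distance calculation.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Default parameter values of an unconfigured classifier
defaults = KNeighborsClassifier().get_params()
print({key: defaults[key] for key in ["n_neighbors", "weights", "algorithm",
                                      "leaf_size", "p", "metric"]})

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

# Hypothetical configurations to compare
configs = {
    "defaults": KNeighborsClassifier(),
    "distance-weighted, Manhattan": KNeighborsClassifier(
        n_neighbors=7, weights="distance", p=1),
    "kd_tree, leaf_size=20": KNeighborsClassifier(
        algorithm="kd_tree", leaf_size=20, n_jobs=-1),
    "scaled + defaults": make_pipeline(StandardScaler(), KNeighborsClassifier()),
}

scores = {}
for name, model in configs.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # test-set accuracy
    print(f"{name}: {scores[name]:.3f}")
```

Changing algorithm or leaf_size affects only search speed, not which neighbors are found, whereas weights, p, and feature scaling can change the predictions themselves.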

FAQ

Given below are some frequently asked questions:

Q1. What is scikit-learn KNN used for in Python?

Answer: KNN is a simple algorithm that we can use to assign a class to a new data point (classification) or to predict a continuous value for it (regression), based on its nearest neighbors in the training data.

Q2. Which libraries do we need when working with scikit-learn KNN in Python?

Answer: We need the scikit-learn (sklearn) library. Its sklearn.neighbors module provides the KNN estimators, and sklearn.datasets provides sample datasets such as iris and wine.

Q3. What is the KNN classifier used for?

Answer: The KNN classifier (KNeighborsClassifier) predicts the class of a new data point from the majority class of its k nearest neighbors in the training data.

Conclusion

KNN, the k-nearest neighbors algorithm, is used in a variety of applications such as healthcare, finance, image recognition, and video recognition. Despite its simplicity, the KNN approach is effective in many regression and classification problems.
