Updated April 8, 2023

Introduction to Scikit Learn Neural Network

Scikit-learn's neural network module is used to solve many of the challenges we face in artificial intelligence. Neural networks can outperform traditional ML models on some problems because they capture non-linearity and interactions between variables. Building a neural network begins with the perceptron: in simple terms, a perceptron receives its inputs, multiplies each one by a weight, adds the products together with a bias, and passes the sum to an activation function.
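The perceptron computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not scikit-learn's internal code: the step activation, the weights `[1.0, 1.0]`, and the bias `-1.5` are hypothetical values chosen so the perceptron behaves like a logical AND.

```python
import numpy as np

def step(z):
    # Step activation: output 1 when the weighted sum is >= 0, else 0
    return np.where(z >= 0, 1, 0)

def perceptron_output(x, w, b):
    # Multiply each input by its weight, sum the products, add the bias,
    # then pass the result through the activation function
    z = np.dot(x, w) + b
    return step(z)

# Hypothetical weights and bias that make the perceptron act like logical AND
w = np.array([1.0, 1.0])
b = -1.5

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
print(perceptron_output(inputs, w, b))  # [0 0 0 1]
```

Only the input `[1, 1]` gives a weighted sum above the threshold (1 + 1 - 1.5 = 0.5), so it is the only one that produces 1.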

A neural network is created by stacking layers of perceptrons; this is known as the multi-layer perceptron (MLP). The network contains three kinds of layers: input, hidden, and output. The input layer receives the data directly, the output layer produces the required output, and the layers between them are known as hidden layers.
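To see these layers in scikit-learn, the sketch below fits an MLPClassifier with one hidden layer of 6 neurons on synthetic data and prints the shape of each weight matrix. The dataset (4 features, 3 classes) and the hidden layer size are arbitrary choices for illustration; `coefs_` and `n_layers_` are attributes of a fitted MLPClassifier.

```python
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier

# Synthetic data: 4 input features, 3 classes (illustrative values)
X, y = make_classification(n_samples=200, n_features=4, n_informative=3,
                           n_redundant=0, n_classes=3, random_state=0)

# Input layer (4 neurons) -> one hidden layer (6 neurons) -> output layer (3 neurons)
mlp = MLPClassifier(hidden_layer_sizes=(6,), max_iter=1000, random_state=0)
mlp.fit(X, y)

# coefs_[i] holds the weights connecting layer i to layer i + 1
for i, weights in enumerate(mlp.coefs_):
    print(f"layer {i} -> layer {i + 1}: weight matrix {weights.shape}")

# Counts the input, hidden, and output layers
print("total layers:", mlp.n_layers_)
```

The input layer size comes from the number of features and the output layer size from the number of classes; only the hidden layers are set through `hidden_layer_sizes`.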

Neural network algorithms are used for both regression and classification problems. Humans can identify patterns from the information available to them with a high degree of accuracy; when we see an object, we recognize it immediately. An artificial neural network is a computational system intended to imitate this human ability to learn.
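Classification examples appear later in this article, so here is a minimal regression sketch using MLPRegressor on synthetic data from `make_regression`. The dataset size, noise level, and hidden layer size are assumptions for illustration; on real data, scaling the features first usually helps the network converge.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

# Synthetic regression data (illustrative values)
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

# One hidden layer with 50 neurons; predicts a continuous target
reg = MLPRegressor(hidden_layer_sizes=(50,), max_iter=2000, random_state=1)
reg.fit(X_train, y_train)

# R^2 of 1.0 is a perfect fit; values can be negative for a poor model
print("R^2 on test set:", r2_score(y_test, reg.predict(X_test)))
```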

It is a machine learning method inspired by how the brain works. It is well suited to pattern recognition and classification tasks, including tasks that use images as input, and it is the foundation of deep learning. Every artificial neural network starts with an input layer of neurons.

Key Takeaways

- The perceptron is the building block of the scikit-learn neural network. It is an artificial model of a single neuron in the human brain.

- The scikit-learn neural network is suitable for pattern recognition and classification tasks; it can also take images as inputs.

How to Use Scikit Learn Neural Network?

The multi-layer perceptron is a supervised learning algorithm that learns a function by training on a dataset. We can also build a neural network manually instead of using scikit-learn.

To use the scikit-learn neural network, we follow the steps below:
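As a sketch of building a network manually, the code below trains a single perceptron with the classic perceptron learning rule in plain NumPy. The OR dataset, the learning rate of 1.0, and the 10 training epochs are hypothetical choices; this is not how scikit-learn trains its MLP models (those use gradient-based solvers such as adam or lbfgs).

```python
import numpy as np

# Toy training data for logical OR (hypothetical example)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1, 1])

w = np.zeros(2)  # one weight per input, starting at zero
b = 0.0          # bias
lr = 1.0         # learning rate

def predict(x):
    # Weighted sum plus bias, then a step activation (1 if positive, else 0)
    return 1 if np.dot(x, w) + b > 0 else 0

for epoch in range(10):
    for xi, target in zip(X, y):
        error = target - predict(xi)
        # Perceptron learning rule: shift weights and bias toward the correct answer
        w += lr * error * xi
        b += lr * error

print([predict(xi) for xi in X])  # [0, 1, 1, 1]
```

The weights change only when a prediction is wrong, so training stops changing them once every sample is classified correctly.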

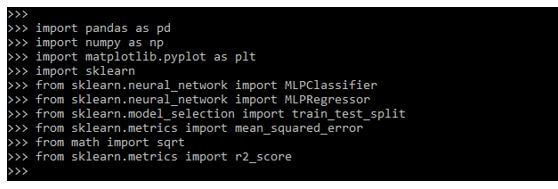

1. First, we load the required modules and libraries. When working with neural networks, we import the following modules:

Code:

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import sklearn
from sklearn.neural_network import MLPClassifier
from sklearn.neural_network import MLPRegressor
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error
from math import sqrt
from sklearn.metrics import r2_score

Output:

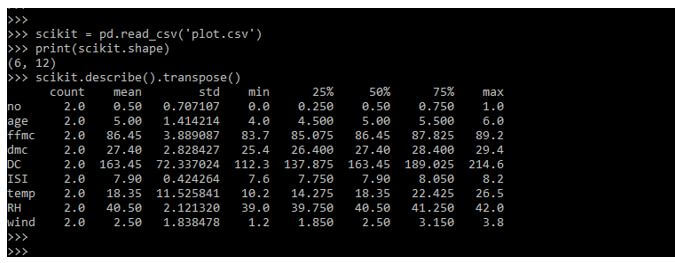

2. After loading the modules and libraries, we load the data and check its shape and summary statistics.

Code:

scikit = pd.read_csv('plot.csv')
print(scikit.shape)
scikit.describe().transpose()

Output:

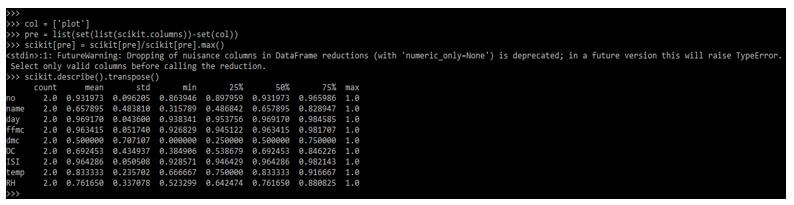

3. After loading the dataset, we separate the target column ('plot') from the predictor columns and normalize each predictor by dividing it by its maximum value.

Code:

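# 'plot' is the target (response) column
col = ['plot']
# every other column is a predictor
pre = list(set(list(scikit.columns))-set(col))
# scale each predictor by its maximum value
scikit[pre] = scikit[pre]/scikit[pre].max()
scikit.describe().transpose()

Output: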

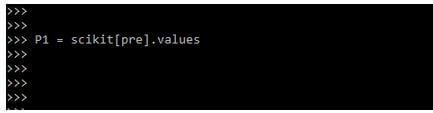
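The effect of the normalization step is easy to check on a tiny DataFrame. In this sketch, the columns `height` and `width` and their values are hypothetical stand-ins for the contents of plot.csv; only the 'plot' target column name comes from the step above.

```python
import pandas as pd

# Hypothetical toy data standing in for plot.csv; 'plot' is the target column
df = pd.DataFrame({
    "height": [10.0, 20.0, 40.0],
    "width": [1.0, 3.0, 4.0],
    "plot": [0, 1, 1],
})

target = ["plot"]
predictors = list(set(df.columns) - set(target))

# Divide each predictor by its column maximum so its largest value becomes 1.0;
# the target column is left unchanged
df[predictors] = df[predictors] / df[predictors].max()
print(df)
```

Dividing by the maximum maps a column into [0, 1] only when its values are non-negative; for columns with negative values, scikit-learn's `MinMaxScaler` or `StandardScaler` is a safer choice.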

4. In this step, we create the feature array P1 from the predictor columns of the scikit DataFrame created above.

Code:

P1 = scikit[pre].values

Output:

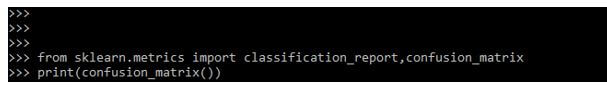
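Between creating the feature array and printing a confusion matrix, the data has to be split and a model trained. The sketch below fills in those steps under stated assumptions: a randomly generated DataFrame with hypothetical predictor columns `a`, `b`, `c` stands in for plot.csv, the target array is named P2, and the split ratio and network size `(8, 8, 8)` are illustrative choices.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Hypothetical stand-in for the normalized plot.csv data: 3 predictors + a 'plot' target
rng = np.random.default_rng(0)
scikit = pd.DataFrame(rng.random((200, 3)), columns=["a", "b", "c"])
scikit["plot"] = (scikit["a"] + scikit["b"] > 1).astype(int)

col = ["plot"]
pre = list(set(scikit.columns) - set(col))

P1 = scikit[pre].values          # feature array
P2 = scikit[col].values.ravel()  # 1-D target array

# Hold out 30% of the rows for testing
X_train, X_test, y_train, y_test = train_test_split(P1, P2, test_size=0.3,
                                                    random_state=40)

# Three hidden layers of 8 neurons each
mlp = MLPClassifier(hidden_layer_sizes=(8, 8, 8), activation="relu",
                    solver="adam", max_iter=1000, random_state=1)
mlp.fit(X_train, y_train)

# Predictions used by the confusion-matrix step
predict_train = mlp.predict(X_train)
predict_test = mlp.predict(X_test)
```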

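5. After splitting the data and training the model, we import classification_report and confusion_matrix and print the confusion matrix for the training set. This step assumes that y_train (the training labels) and predict_train (the model's predictions on the training features) already exist.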

Code:

from sklearn.metrics import classification_report, confusion_matrix
# y_train and predict_train come from a train/test split and a fitted MLPClassifier
print(confusion_matrix(y_train, predict_train))

Output:

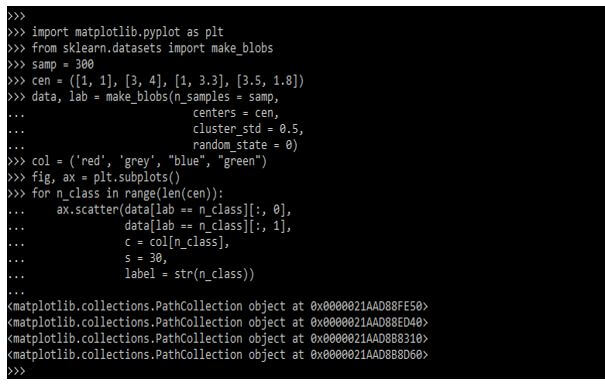
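To read the printed matrix correctly, it helps to know its layout. The sketch below uses small hypothetical label lists so the result can be checked by hand: in scikit-learn's `confusion_matrix`, rows are the true classes and columns are the predicted classes.

```python
from sklearn.metrics import classification_report, confusion_matrix

# Small hypothetical label lists so the layout is easy to verify by hand
y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 0, 1]

# Rows are true classes, columns are predicted classes
cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[2 0]
#  [1 2]]

# Per-class precision, recall, F1 score, and support
print(classification_report(y_true, y_pred))
```

The bottom-left 1 is the single class-1 sample that was predicted as class 0.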

Scikit Learn Neural Network Multilabel

The multi-layer perceptron (MLP) is an artificial neural network model that maps a set of input data onto a set of appropriate outputs. An MLP consists of multiple layers, each connected to the next, and the nodes of each layer are neurons. Between the input and output layers there can be one or more non-linear hidden layers.
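MLPClassifier also supports multilabel classification, where a single sample can belong to several classes at once. As a minimal sketch, the code below uses synthetic data from `make_multilabel_classification`; the sample count, number of features, number of labels, and hidden layer size are illustrative assumptions. The targets are a binary indicator matrix with one column per label.

```python
from sklearn.datasets import make_multilabel_classification
from sklearn.neural_network import MLPClassifier

# Synthetic multilabel data: each row of Y has one 0/1 column per label
X, Y = make_multilabel_classification(n_samples=200, n_features=10, n_classes=3,
                                      random_state=0)
print(Y[:3])

clf = MLPClassifier(hidden_layer_sizes=(20,), max_iter=2000, random_state=0)
clf.fit(X, Y)

# predict returns the same indicator format: one 0/1 column per label
print(clf.predict(X[:3]))
```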

Code:

import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs

samp = 300
cen = ([1, 1], [3, 4], [1, 3.3], [3.5, 1.8])
data, lab = make_blobs(n_samples = samp,
                       centers = cen,
                       cluster_std = 0.5,
                       random_state = 0)

col = ('red', 'grey', "blue", "green")
fig, ax = plt.subplots()
# draw each cluster in its own color
for n_class in range(len(cen)):
    ax.scatter(data[lab == n_class][:, 0],
               data[lab == n_class][:, 1],
               c = col[n_class],
               s = 30,
               label = str(n_class))
ax.legend()
plt.show()

Output:

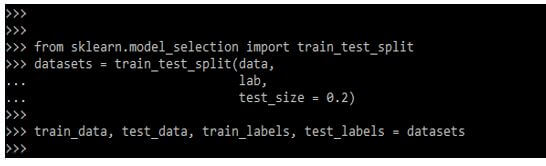

In the example below, we import train_test_split and split the data into training and test sets, holding out 20% of the samples for testing.

Code:

from sklearn.model_selection import train_test_split
datasets = train_test_split(data, lab, test_size = 0.2)
train_data, test_data, train_labels, test_labels = datasets

Output:

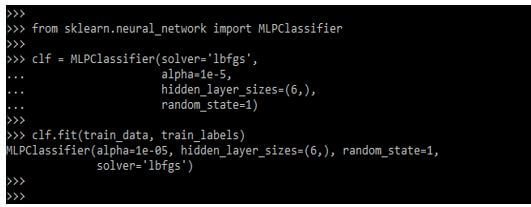

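Next, we create and train an MLPClassifier. Several of its parameters help control underfitting and overfitting: alpha sets the strength of the L2 regularization penalty, hidden_layer_sizes sets the number of neurons in each hidden layer, and solver='lbfgs' selects the L-BFGS optimizer, which often works well on small datasets.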

Code:

from sklearn.neural_network import MLPClassifier
plot = MLPClassifier(solver = 'lbfgs', alpha = 1e-5, hidden_layer_sizes = (6,), random_state = 1)
plot.fit(train_data, train_labels)
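One way to see how alpha trades off underfitting and overfitting is to train the same network with several alpha values and compare training and test accuracy. This sketch regenerates the same four-cluster data; the alpha values tried, the fixed `random_state` for the split, and `max_iter=1000` are assumptions for illustration, and the exact scores will depend on the data.

```python
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Same kind of four-cluster data as above
cen = ([1, 1], [3, 4], [1, 3.3], [3.5, 1.8])
data, lab = make_blobs(n_samples=300, centers=cen, cluster_std=0.5, random_state=0)
train_data, test_data, train_labels, test_labels = train_test_split(
    data, lab, test_size=0.2, random_state=0)

# Larger alpha = stronger L2 penalty on the weights (simpler decision boundary)
scores = {}
for alpha in (1e-5, 1e-1, 10.0):
    clf = MLPClassifier(solver="lbfgs", alpha=alpha, hidden_layer_sizes=(6,),
                        max_iter=1000, random_state=1)
    clf.fit(train_data, train_labels)
    scores[alpha] = (clf.score(train_data, train_labels),
                     clf.score(test_data, test_labels))
    print(f"alpha={alpha}: train={scores[alpha][0]:.3f}, test={scores[alpha][1]:.3f}")
```

A large gap between training and test accuracy suggests overfitting, while low accuracy on both suggests the penalty is too strong or the network too small.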

Examples of Scikit Learn Neural Network

Some examples are shown below:

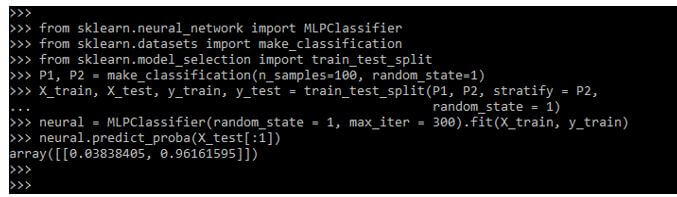

Example #1

In the example below, we use the predict_proba method to get the class probabilities for the first test sample.

Code:

from sklearn.neural_network import MLPClassifier
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify = P2,
                                                    random_state = 1)
neural = MLPClassifier(random_state = 1, max_iter = 300).fit(X_train, y_train)
neural.predict_proba(X_test[:1])

Output:

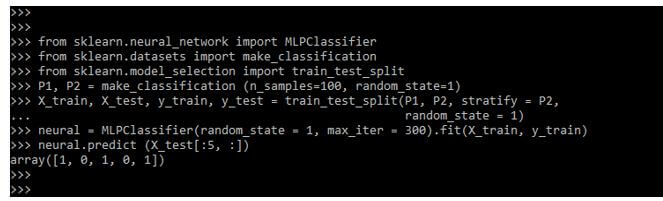
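Reusing the same setup as the example, the sketch below looks at predict_proba over the whole test set. The array has one column per class, ordered as in `neural.classes_`, and each row is a probability distribution that sums to 1.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify=P2,
                                                    random_state=1)
neural = MLPClassifier(random_state=1, max_iter=300).fit(X_train, y_train)

proba = neural.predict_proba(X_test)
print(neural.classes_)  # column order of proba
print(proba.shape)      # (number of test samples, number of classes)

# Each row sums to 1 because it is a distribution over the classes
print(np.allclose(proba.sum(axis=1), 1.0))
```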

Example #2

In the example below, we use the predict method to predict the classes of the first five test samples.

Code:

from sklearn.neural_network import MLPClassifier
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify = P2,
                                                    random_state = 1)
neural = MLPClassifier(random_state = 1, max_iter = 300).fit(X_train, y_train)
neural.predict(X_test[:5, :])

Output:

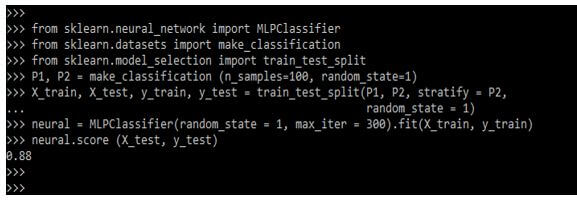
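For this binary setup, predict returns the class with the highest predicted probability. The sketch below, using the same setup as the example, checks that picking the column with the largest value in predict_proba (via `np.argmax`) and mapping it through `neural.classes_` gives the same labels as predict.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify=P2,
                                                    random_state=1)
neural = MLPClassifier(random_state=1, max_iter=300).fit(X_train, y_train)

# Index of the most probable class for each sample, mapped back to the class label
manual = neural.classes_[np.argmax(neural.predict_proba(X_test), axis=1)]

# Compare with the labels returned by predict
print(np.array_equal(manual, neural.predict(X_test)))  # True
```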

Example #3

In the example below, we use the score method, which returns the mean accuracy on the given test data and labels.

Code:

from sklearn.neural_network import MLPClassifier
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify = P2,
                                                    random_state = 1)
neural = MLPClassifier(random_state = 1, max_iter = 300).fit(X_train, y_train)
neural.score(X_test, y_test)
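For classifiers, score is the fraction of test samples that are predicted correctly. Using the same setup as the example, this sketch compares it with `accuracy_score` from `sklearn.metrics` computed on the output of predict.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

P1, P2 = make_classification(n_samples=100, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(P1, P2, stratify=P2,
                                                    random_state=1)
neural = MLPClassifier(random_state=1, max_iter=300).fit(X_train, y_train)

score = neural.score(X_test, y_test)
accuracy = accuracy_score(y_test, neural.predict(X_test))

# Both give the fraction of correctly classified test samples
print(score, accuracy, np.isclose(score, accuracy))
```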

FAQ

Some frequently asked questions are answered below:

Q1. What is the use of the scikit-learn neural network in Python?

Answer:

Neural networks are used to solve many of the challenges we face in ML and AI, such as classification, regression, and pattern recognition.

Q2. Which libraries and packages do we need when working with scikit-learn neural networks?

Answer:

We typically use scikit-learn, NumPy, pandas (for loading data), and Matplotlib (for plotting) when working with scikit-learn neural networks.

Q3. What is the role of the perceptron in scikit-learn neural networks?

Answer:

The perceptron is the building block of a neural network. It is an artificial version of a single neuron; stacking perceptrons in layers produces a multi-layer neural network.
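scikit-learn also ships a standalone single-layer perceptron, `sklearn.linear_model.Perceptron`. The sketch below fits it on synthetic two-feature data (the dataset parameters are illustrative assumptions) and shows that a single perceptron learns just one weight per input plus one bias.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import Perceptron

# Synthetic two-feature, two-class data (illustrative values)
X, y = make_classification(n_samples=200, n_features=2, n_informative=2,
                           n_redundant=0, n_clusters_per_class=1, random_state=0)

clf = Perceptron(random_state=0)
clf.fit(X, y)

# One weight per input feature and a single bias (intercept) for a binary problem
print(clf.coef_.shape, clf.intercept_.shape)  # (1, 2) (1,)
print("training accuracy:", clf.score(X, y))
```

Unlike MLPClassifier, this model has no hidden layers, so it can only learn a linear decision boundary.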

Conclusion

A neural network contains three kinds of layers: input, hidden, and output. Building a neural network begins with the perceptron; in simple terms, a perceptron receives its inputs, multiplies each one by a weight, and passes the weighted sum to an activation function.
