Updated March 17, 2023

Introduction to Scikit Learn Random Forest

We know that Scikit learn random forest is a supervised machine learning algorithm used for classification, regression, and many other tasks we want. While implementing a machine learning algorithm, we need to predict results accurately. So random forest is one of the techniques which helps us make easy decision tree classifiers on different types of data to improve the prediction and accuracy.

Random Forest Classifier utilizing the Scikit-Learn library of Python programming language, and to do this; we employ the IRIS dataset, which is a seriously common and renowned dataset. The Random timberland or Random Decision Forest is a directed Machine learning calculation used for grouping, relapse, and different undertakings utilizing choice trees.

The Random timberland classifier makes a bunch of choice trees from a haphazardly chosen subset of the preparation set. It is essentially a bunch of choice trees (DT) from a randomly selected subset of the preparation set, and afterward, It gathers the votes from various choice trees to choose the last forecast.

Key Takeaways

- A random forest is not biased because it includes multiple decision trees.

- The random forest is a stable algorithm.

- This algorithm is suitable for definite as well as numerical data.

- It can handle a large amount of data in efficient ways.

Scikit Learn Random Forest Classifier

Let’s see what a random forest classifier is as follows:

Random Forest Classifier has various applications, like proposal motors, picture arrangement, and element choice. It very well may be utilized to order steadfast advanced candidates, recognize fake movement, and foresee infections. It lies at the foundation of the Boruta calculation, which chooses significant highlights in a dataset.

For implementation, we need to follow several steps as follows:

First, we need to import all required libraries as shown below:

Code:

from sklearn import datasetsAfter that, we need to import the dataset we want through the coding.

After successfully loading the dataset, we need to construct the decision tree for every sample dataset and, according to the dataset, predict with the help of each decision tree. In this step, we need to check the content and features of the dataset. In the next step, we need to vote for every predicted result. Those anticipated results got a high vote which we can consider as the final prediction.

Scikit Learn Random Forest API

Let’s see the random forest API as follows:

We should figure out the calculation in layman’s terms. Assume you need to go out traveling, and you might want to head out to a spot that we will appreciate. So how would you find a place that you would like? You can look through the web, read surveys on touring web journals and entryways, or you can likewise ask your companions.

We should assume you have chosen to ask your companions and consult with them about their past movement experience in different spots. You will get a few proposals from each companion. Presently it would help if you made a rundown of those suggested places. Then, at that point, you request that they vote (or select one best spot for the outing) from the rundown of recommended sites you made. The spot with the most votes will be your last decision for the excursion.

In the above choice cycle, there are two sections. To start with, getting some information about their singular travel insight and getting one suggestion out of numerous spots they have visited. This part resembles utilizing the choice tree calculation. Here, every companion decides where the person has visited up until now.

The subsequent part, in the wake of gathering every one of the suggestions, is the democratic method for choosing the best spot in the rundown of proposals. This entire course of getting suggestions from companions and deciding on them to find the best spot is known as the arbitrary woods calculation.

Features of Scikit Learn Random Forest

Given below are the features mentioned:

- It includes the number of decision trees to make highly accurate and robust functions.

- It does not allow overfitting problems due to the average of all predictions.

- It included classification and regression problems.

- By using random forests, we can also handle missing values.

Examples of Scikit Learn Random Forest

Given below are the examples of Scikit Learn Random Forest:

First, we must import libraries and load the dataset, as shown in the code below.

Code:

from sklearn import datasets

d_set = datasets.load_iris()

print(d_set.target_names)After execution, we get the following result shown in the screenshot below.

Output:

In the next part, we need to divide our dataset into two parts, as shown in the code below.

Code:

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

import pandas as pd

d_set = datasets.load_iris()

A,B = datasets.load_iris( return_A_B = True)

A_train_d, A_test_d, B_train_d, B_test_d = train_test_split(A, B, test_size_d = 0.40)

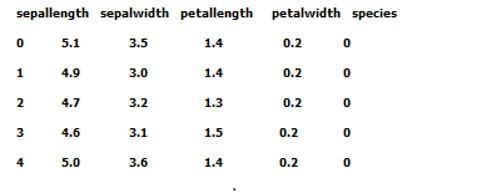

s_data = pd.DataFrame({'sepallength': d_set.s_data[:, 0], 'sepalwidth': d_set.s_data[:, 1], 'petallength': d_set.s_data[:, 2], 'petalwidth': d_set.s_data[:, 3], 'species': d_set.target})

print(s_data.head())After execution, we get the following result shown in the screenshot below.

Output:

Finally, we need to write the code for accuracy as below.

Code:

from sklearn import metrics

print("Accuracy Model is: ", metrics.accuracy_score(B_test_d, B_pred_d))After execution, we get the following result shown in the screenshot below.

Output:

FAQ

Other FAQs are mentioned below:

Q1. What is used for a random forest?

Answer:

It comes under the supervised category of the algorithm and is usually used for regression and classification problems. It also includes the decision tree for different data samples.

Q2. How can we use the random forest in python?

Answer:

First, we need to import all the required packages and, after that, load the dataset that we require; in this third step, we need to construct the decision tree and make the voting for a result.

Q3. What are the benefits of a random forest?

Answer:

It is suitable for large amounts of data, and we can easily make the parts of the dataset as per our requirement to get more accurate results.

Conclusion

In this article, we are trying to explore Scikit Learn, Random Forest. In this article, we saw the basic ideas of Scikit Learn random forest, and the features of these Scikit Learn random forests. Another point from the article is how we can see the basic implementation of Scikit Learn random forest.

Recommended Articles

This is a guide to Scikit Learn Random Forest. Here we discuss the introduction, scikit learn random forest classifier and API, features, examples, and FAQ. You may also have a look at the following articles to learn more –