Updated April 4, 2023

Introduction to Scrapy log

The scrapy.log module and its related functions have been deprecated in favor of Python's standard logging module, so Scrapy now logs events through logging. The options listed in Scrapy's Logging settings can be used to tune this behavior to a degree. When running commands, Scrapy calls scrapy.utils.log.configure_logging() to set reasonable defaults and apply those settings, so it is best to call that function manually when running Scrapy from a script, as described in the "Run Scrapy from a script" section of the Scrapy documentation.
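Because Scrapy emits its messages through the standard library, the usual logging tools apply to them. The sketch below assumes only that Scrapy's internal loggers live under the "scrapy" name (for example "scrapy.core.engine") and shows how a level set on that parent logger carries over to its children. Scrapy itself does not need to be imported for this illustration.

```python
import logging

# Parent logger for the "scrapy" namespace; the standard library creates it on demand.
scrapy_logger = logging.getLogger("scrapy")
scrapy_logger.setLevel(logging.WARNING)

# A child logger inherits the parent's level unless it sets its own.
engine_logger = logging.getLogger("scrapy.core.engine")

effective = logging.getLevelName(engine_logger.getEffectiveLevel())
print(effective)  # prints WARNING
```

Setting the level once on the parent is usually enough to quiet or open up a whole family of loggers.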

Overview of Scrapy log

- The legacy scrapy.log module provided a logging mechanism built on Twisted logging, and the logging service could be started explicitly with the scrapy.log.start() function. That module is deprecated; current Scrapy releases use Python's standard logging module instead.

- Python's built-in logging module defines the functions and classes that implement an event logging system for applications and libraries.

- Logging can be adjusted to some extent through the Scrapy settings listed in the Logging settings section.

- When running commands, Scrapy calls scrapy.utils.log.configure_logging() to set some default parameters and apply those settings.
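As a rough illustration of the Logging settings mentioned above, a project's settings.py might contain entries like the following. The setting names are the ones Scrapy documents; the values, including the file name scrapy_run.log, are only example choices.

```python
# settings.py (fragment): example values only
LOG_ENABLED = True            # turn logging on or off
LOG_LEVEL = "INFO"            # minimum level to record
LOG_FILE = "scrapy_run.log"   # hypothetical file name; leave unset to log to standard error
LOG_FORMAT = "%(asctime)s [%(name)s] %(levelname)s: %(message)s"
LOG_DATEFORMAT = "%Y-%m-%d %H:%M:%S"
```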

How to log Scrapy messages?

The steps below show how to log messages with Scrapy.

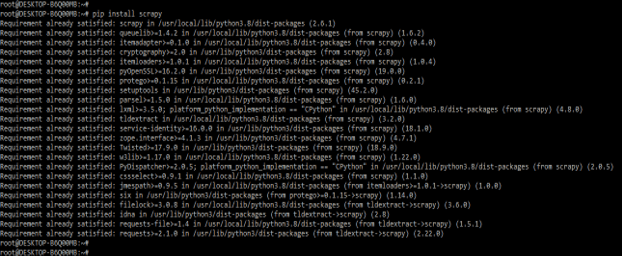

1) Install Scrapy using the pip command. In the example below, Scrapy is already installed on the system, so pip reports that the requirement is already satisfied and nothing more needs to be done.

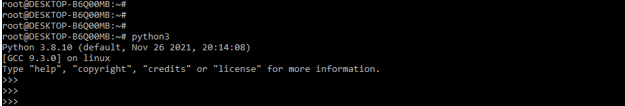

pip install scrapy

2) After installing Scrapy, open the Python shell using the python3 command.

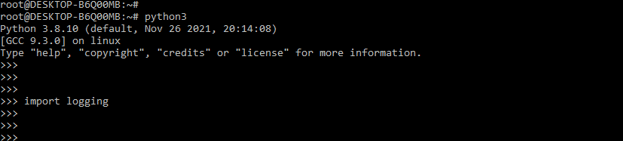

python3

3) Inside the Python shell, import the logging module using the import keyword, as shown in the example below.

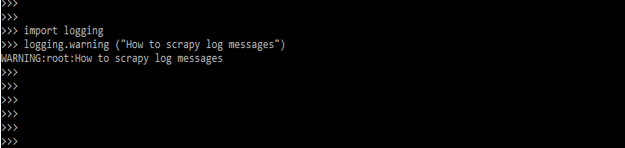

import logging

4) After importing the logging module, log a message at a chosen level. The example below issues a message at the WARNING level.

Code:

import logging

logging.warning("How to scrapy log messages")

5) There are shortcut functions for issuing messages at each of the five standard levels, and there is also a general logging.log() function that takes the level as its first argument. The example below uses logging.log() with the WARNING level.

Code:

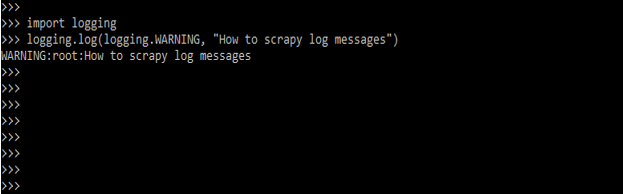

import logging

logging.log(logging.WARNING, "How to scrapy log messages")

6) Separate "loggers" can also be created to encapsulate messages. Loggers are configured independently and are arranged in a hierarchy. The previous examples use the root logger behind the scenes, a top-level logger to which all messages are propagated. The logging helper functions are only shortcuts that fetch the root logger and log through it. The example below obtains the logger explicitly and uses it.

Code:

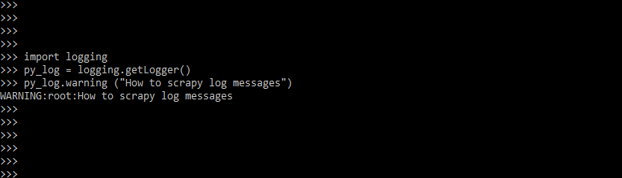

import logging

py_log = logging.getLogger()

py_log.warning("How to scrapy log messages")

Scrapy log levels

- Python classifies log messages into five severity levels. They are listed below in ascending order of severity.

- Scrapy uses these same levels, each of which is described in the list below.
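The ordering and its effect on output can be checked with the standard library alone. This sketch uses a hypothetical logger name, "levels_demo", and a small list-collecting handler written for the illustration; neither is part of Scrapy.

```python
import logging

# The five standard levels, sorted by their numeric values (ascending severity).
levels = sorted(
    [logging.CRITICAL, logging.DEBUG, logging.ERROR, logging.INFO, logging.WARNING]
)
names = [logging.getLevelName(level) for level in levels]


class ListHandler(logging.Handler):
    """Illustrative handler that stores formatted messages in a list."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(f"{record.levelname}: {record.getMessage()}")


demo = logging.getLogger("levels_demo")  # hypothetical logger name
demo.propagate = False                   # keep the demo output away from the root logger
handler = ListHandler()
demo.addHandler(handler)
demo.setLevel(logging.WARNING)           # same threshold the root logger starts with

# Send one message at each level; only those at or above the threshold are kept.
for level in levels:
    demo.log(level, "message at level %d", level)

print(names)
print(handler.messages)
```

With the threshold at WARNING, the DEBUG and INFO messages are dropped. Lowering the threshold with setLevel(logging.DEBUG) would let them through.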

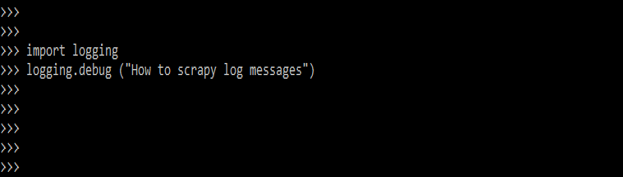

1) logging.DEBUG – This level is used for debugging messages and has the lowest severity. The example below logs at the debug level. Nothing is printed, because DEBUG is below the root logger's default threshold of WARNING.

Code:

import logging

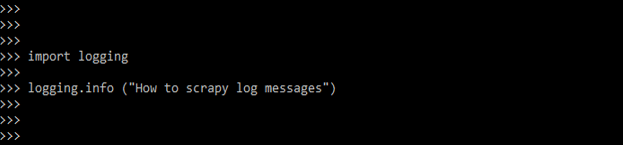

logging.debug("How to scrapy log messages")

2) logging.INFO – This level is used for informational messages and has the second-lowest severity. The example below logs at the info level. No message is printed, because INFO is also below the default WARNING threshold.

Code:

import logging

logging.info("How to scrapy log messages")

3) logging.WARNING – This level is used for warning messages and sits in the middle of the severity scale. The example below logs at the warning level. The message is printed, because WARNING meets the default threshold.

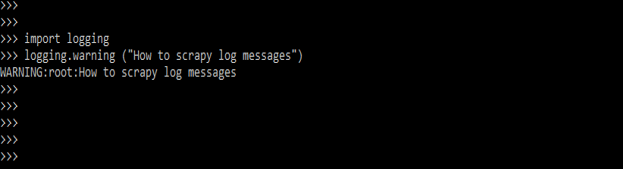

Code:

import logging

logging.warning("How to scrapy log messages")

4) logging.ERROR – This level is used for regular errors and has the second-highest severity. The example below logs at the error level, and the message is printed.

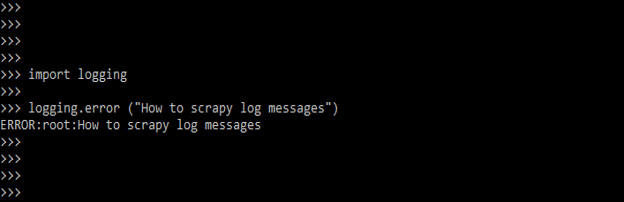

Code:

import logging

logging.error("How to scrapy log messages")

5) logging.CRITICAL – This level is used for the most serious errors and has the highest severity. The example below logs at the critical level, and the message is printed.

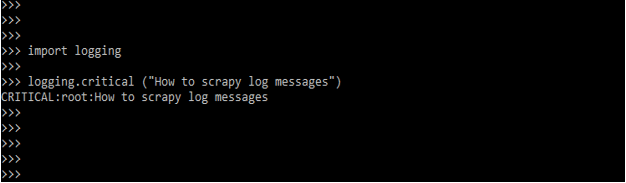

Code:

import logging

logging.critical("How to scrapy log messages")

Scrapy log spiders

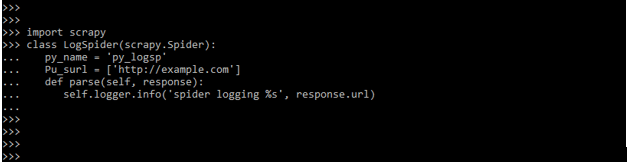

Every spider instance has its own logger, available as self.logger. The example below shows how to use it.

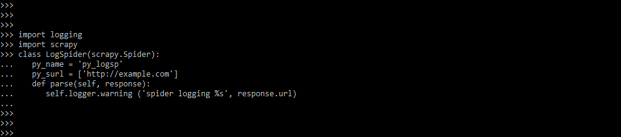

Code:

import scrapy

class LogSpider(scrapy.Spider):
    # Scrapy looks for the attributes "name" and "start_urls"
    name = 'py_logsp'
    start_urls = ['http://example.com']

    def parse(self, response):
        # self.logger is created with the spider's name
        self.logger.warning('spider logging %s', response.url)

The logger in the preceding code is created using the spider's name, but any custom Python logger can be used instead, as seen in the following code.

Code:

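import logging
import scrapy

# Custom logger; the name 'py_custom_logger' is only illustrative
logger = logging.getLogger('py_custom_logger')

class LogSpider(scrapy.Spider):
    name = 'py_logsp'
    start_urls = ['http://example.com']

    def parse(self, response):
        # Log through the custom logger instead of self.logger
        logger.warning('spider logging %s', response.url)

Configuration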

- Loggers do not decide on their own how the messages sent through them are displayed. That task is delegated to handlers, which deliver the messages to their appropriate destinations, such as standard output or a file. Scrapy configures a handler for the root logger based on the following options.
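The role of a handler can be sketched without Scrapy. In the example below, the logger name "handler_demo" and the in-memory stream are assumptions made for the illustration; the handler is what decides where the message goes and how it is formatted.

```python
import io
import logging

stream = io.StringIO()  # stands in for a destination such as a file or the console

# The handler owns the destination and the message format.
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))

demo = logging.getLogger("handler_demo")  # hypothetical logger name
demo.setLevel(logging.INFO)
demo.propagate = False  # do not pass the record on to the root logger's handlers
demo.addHandler(handler)

demo.info("page %s fetched", "http://example.com")

output = stream.getvalue()
print(output, end="")
```

Swapping the StreamHandler for a logging.FileHandler would send the same record to a file without touching the code that logs it.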

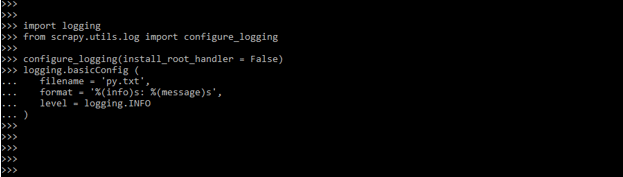

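- The example below shows a logging configuration for Scrapy. It first imports the logging module and then imports the configure_logging function from the scrapy.utils.log module. Passing install_root_handler=False tells Scrapy not to install its own root handler, so that logging.basicConfig() can set up a file handler instead.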

Code:

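import logging
from scrapy.utils.log import configure_logging

# Skip Scrapy's default root handler so basicConfig() can install its own
configure_logging(install_root_handler=False)

logging.basicConfig(
    filename='py.txt',
    # %(levelname)s expands to the level name, e.g. WARNING
    format='%(levelname)s: %(message)s',
    level=logging.INFO
)

Conclusion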

Python's built-in logging module provides the functions and classes for recording events in applications and libraries. Because scrapy.log and its related functions have been deprecated in favor of that standard module, logging in Scrapy is done through standard Python logging, adjusted with Scrapy's Logging settings.
