Updated March 29, 2023

Definition of Spring Cloud Data Flow

Spring Cloud Data Flow provides tools for creating topologies for batch and streaming data pipelines. A Spring Cloud Data Flow pipeline consists of Spring Boot apps built with Spring Cloud Stream (for streams) or Spring Cloud Task (for tasks). Spring Cloud Data Flow supports a range of data processing use cases, from import and export to event streaming and predictive analytics.

What is Spring Cloud Data Flow?

- Using Data Flow, we can create data pipelines for common use cases such as real-time analytics and data ingestion.

Data Flow supports two types of pipelines:

1) Batch data pipelines

2) Streaming data pipelines

- In a streaming pipeline, an unbounded amount of data is consumed or produced through messaging middleware.

- In a batch pipeline, a task processes a finite set of data and then terminates.

- A streaming pipeline typically consumes events from external systems, processes the data, and writes the results to polyglot persistence. These phases are commonly referred to as source, processor, and sink.

- Data Flow is a Java-based, open-source toolkit maintained by VMware for real-time streaming and data integration.

- Data pipelines that are defined with Data Flow are also deployed through Data Flow.

- Spring Cloud Data Flow is aimed at workloads such as data ingestion, ETL processing, and real-time analytics.
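The source, processor, and sink roles described above can be sketched in plain Java with the standard functional interfaces. This is only an illustration under stated assumptions: the class name `PipelineSketch`, the `run` helper, and the `event-` payloads are hypothetical, and a real Data Flow stream would connect separate Spring Boot apps through messaging middleware rather than direct method calls.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical stand-in for a "source | processor | sink" stream; not a Data Flow API.
final class PipelineSketch {

    // Pulls `count` items from the source, transforms each one, and hands it to the sink.
    static <A, B> void run(Supplier<A> source, Function<A, B> processor,
                           Consumer<B> sink, int count) {
        for (int i = 0; i < count; i++) {
            sink.accept(processor.apply(source.get()));
        }
    }

    public static void main(String[] args) {
        int[] counter = {0};
        Supplier<Integer> source = () -> ++counter[0];            // emits 1, 2, 3, ...
        Function<Integer, String> processor = n -> "event-" + n;  // transforms each payload
        List<String> received = new ArrayList<>();
        Consumer<String> sink = received::add;                    // stores whatever arrives

        run(source, processor, sink, 3);
        System.out.println(received); // prints [event-1, event-2, event-3]
    }
}
```

The `count` argument makes this run finite, which is closer to a batch task; a streaming pipeline would keep pulling from the source for as long as the app is running.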

Using Spring Cloud Data Flow

- Data Flow is a toolkit that helps developers set up microservice-driven data pipelines and address the implementation challenges common to such pipelines.

- Data Flow supports a variety of runtime platforms, including Apache YARN, Cloud Foundry, and Kubernetes.

- Data Flow relies on pluggable message broker binders, which bind an application to a messaging service such as Apache Kafka, Amazon Kinesis, or Azure Event Hubs.

- Using Data Flow, we can design, deploy, and manage data pipelines through the admin UI. The Data Flow programming models offer abstractions over the message broker.

- Batch and streaming applications are easy to build with Spring Cloud Task and Spring Cloud Stream.

- Using Data Flow, we can take advantage of health checks, metrics, and remote management for data microservices.

- Data Flow supports standard security semantics through OpenID and OAuth2. We can scale data pipelines and streams with zero downtime, without interrupting the flow of data.

- Data Flow includes UI dashboards for designing, deploying, and managing large-scale, data-intensive pipelines.
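To make the idea of a message broker abstraction more concrete, here is a minimal sketch. The `MessageBinder` interface and `InMemoryBinder` class are hypothetical names invented for this illustration and are not the Spring Cloud Stream binder API; the point is only that application code depends on an interface while the broker-specific part stays swappable.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Demo entry point: the application code only sees the MessageBinder interface.
final class BinderDemo {
    public static void main(String[] args) {
        MessageBinder binder = new InMemoryBinder();
        binder.subscribe("orders", payload -> System.out.println("received: " + payload));
        binder.send("orders", "order-42"); // prints "received: order-42"
    }
}

// Hypothetical abstraction over a message broker (an assumption for this sketch).
interface MessageBinder {
    void subscribe(String destination, Consumer<String> handler);
    void send(String destination, String payload);
}

// In-memory stand-in; a broker-backed class could implement the same interface.
final class InMemoryBinder implements MessageBinder {
    private final Map<String, List<Consumer<String>>> handlers = new HashMap<>();

    @Override
    public void subscribe(String destination, Consumer<String> handler) {
        handlers.computeIfAbsent(destination, d -> new ArrayList<>()).add(handler);
    }

    @Override
    public void send(String destination, String payload) {
        // Deliver the payload to every handler registered for this destination.
        for (Consumer<String> handler
                : handlers.getOrDefault(destination, Collections.emptyList())) {
            handler.accept(payload);
        }
    }
}
```

Swapping `InMemoryBinder` for another implementation would not require changes in `BinderDemo`, which is the property the pluggable binders are meant to provide.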

Spring Cloud Data Flow Server Up and Running

- The main component of Data Flow is the Data Flow server, a Spring Boot-based microservice. It provides the main entry point for defining data pipelines, for example through the web dashboard.

- The Data Flow server is responsible for parsing stream definitions written in a domain-specific language (DSL).

- The Data Flow server requires a relational database to persist metadata about tasks, streams, and registered artifacts, such as the library files and Docker images used in pipeline definitions.

- The Data Flow server is used to deploy batch jobs to one or more runtime platforms.
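As a rough illustration of the stream-parsing step, the sketch below splits a pipe-delimited definition such as `http | transform | log` into its application names. It is a deliberate simplification: the class `StreamDefinitionSketch` and its `parse` method are hypothetical, and the sketch assumes definitions with app names only, ignoring the options and labels that a real stream definition may contain.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified parser; the real server's DSL parser handles far more syntax.
final class StreamDefinitionSketch {

    // Splits a definition such as "http | transform | log" into ordered app names.
    static List<String> parse(String definition) {
        List<String> apps = new ArrayList<>();
        // A limit of -1 keeps trailing empty segments so "http |" is rejected below.
        for (String part : definition.split("\\|", -1)) {
            String name = part.trim();
            if (name.isEmpty()) {
                throw new IllegalArgumentException("Empty app name in: " + definition);
            }
            apps.add(name);
        }
        return apps;
    }

    public static void main(String[] args) {
        System.out.println(parse("http | transform | log")); // prints [http, transform, log]
    }
}
```

The first name in the returned list plays the source role and the last one the sink role, with any names in between acting as processors.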

Create a Module Using Spring Cloud Data Flow

The example below shows how to create a module using Data Flow.

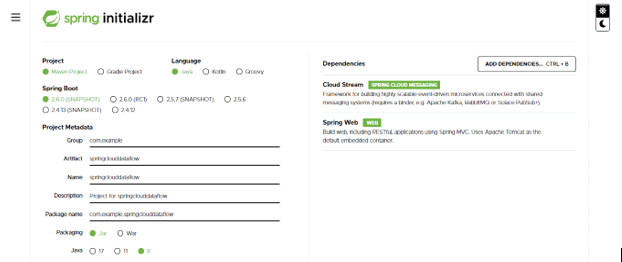

1) Create a project module template for the Data Flow application by using Spring Initializr –

In this step, we set the project group name to com.example, the artifact name to springclouddataflow, the project name to springclouddataflow, and the Java version to 8.

Group – com.example

Artifact name – springclouddataflow

Name – springclouddataflow

Spring Boot – 2.6.0

Project – Maven

Project Description – Project for springclouddataflow

Java – 8

Dependencies – Cloud Stream, Spring Web

Package name – com.example.springclouddataflow

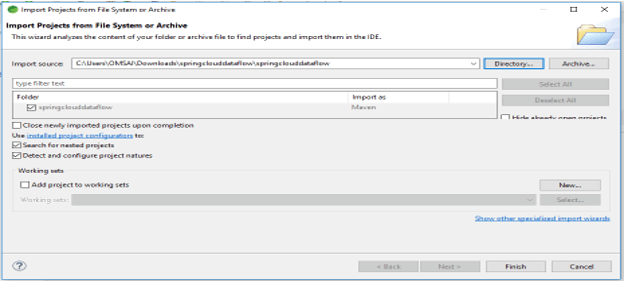

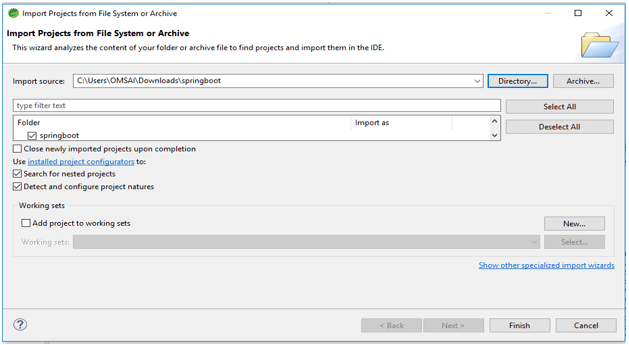

2) After generating the project, extract the files and open the project in Spring Tool Suite –

After generating the project with Spring Initializr, we extract the downloaded archive and open the project in Spring Tool Suite.

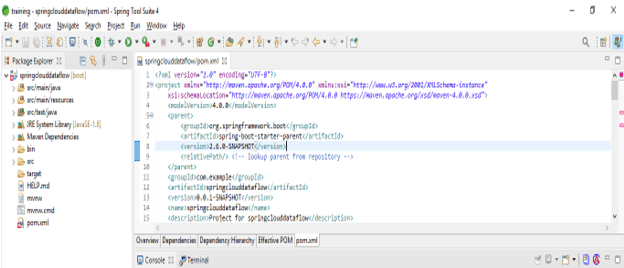

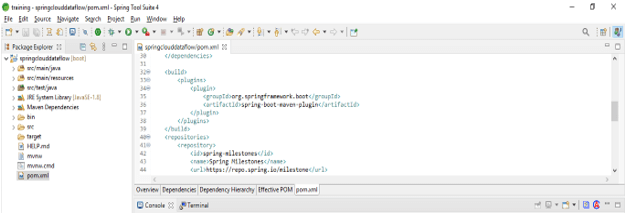

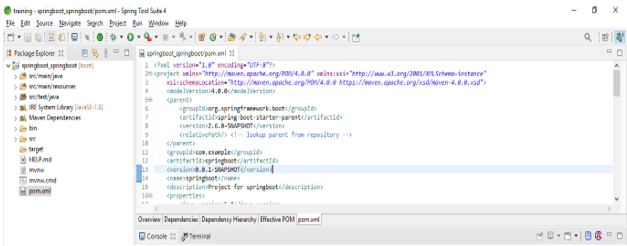

3) After opening the module in Spring Tool Suite, check the project and its files –

In this step, we check all the project template files. We also need to check the Maven dependencies and system libraries.

4) Add dependency packages –

Code:

    <!-- RabbitMQ binder for Spring Cloud Stream -->
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-stream-rabbit</artifactId>
    </dependency>

5) Create a module of data flow –

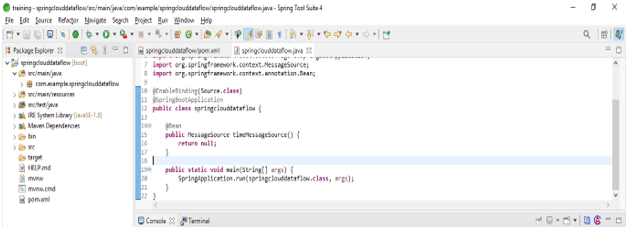

Code:

@EnableBinding (Source.class)

@SpringBootApplication

public class springclouddataflow {

@Bean

public MessageSource timeMessageSource() {

return null;

}

public static void main(String[] args) {

SpringApplication.run (springclouddataflow.class, args);

}

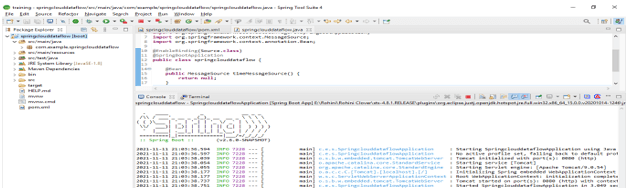

}6) Compile and run the module –

Create a New Spring Boot Application

The steps below show how to create a new Spring Boot application.

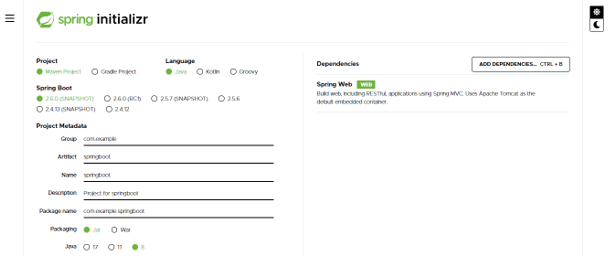

1) Create a project module template for the application by using Spring Initializr –

In this step, we set the project group name to com.example, the artifact name to spring-boot, the project name to springboot, and the Java version to 8.

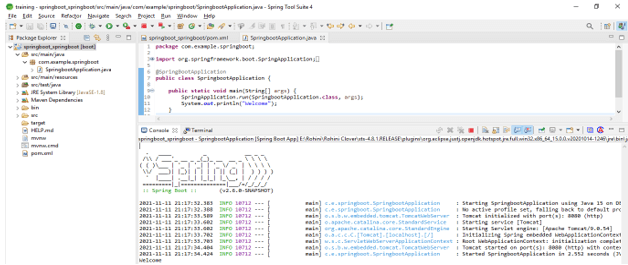

2) After generating the project, extract the files and open the project in Spring Tool Suite –

3) After opening the application in Spring Tool Suite, check the project and its files –

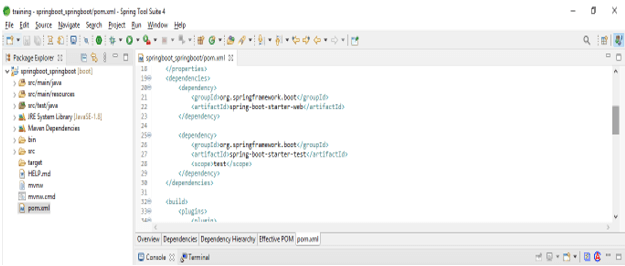

4) Add dependency packages –

In this step, we are adding the required dependency to our project.

Code:

    <!-- Spring Web starter -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

5) Create class file –

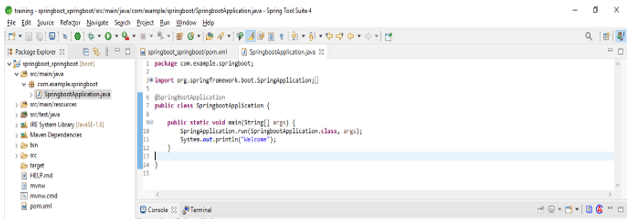

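Code:

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class SpringbootApplication {

        public static void main(String[] args) {
            // Start the Spring context, then print a message once startup completes.
            SpringApplication.run(SpringbootApplication.class, args);
            System.out.println("Welcome");
        }
    }

6) Compile and run the application –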

Conclusion

Spring Cloud Data Flow lets developers define and deploy data pipelines through several endpoints: a RESTful API, the stream Java DSL, a command-line shell, and a dashboard GUI. Data Flow provides a cloud-native programming and operating model for composable microservices.
