Updated May 4, 2023

Difference Between Supervised Learning and Deep Learning

In supervised learning, the training data you feed to the algorithm includes the desired solutions, called labels. A typical supervised learning task is classification. The spam filter is an excellent example of this: it is trained with many example emails and their class (spam or ham) and must learn how to classify new emails.
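
To make the idea of labeled training data concrete, here is a minimal sketch of a spam filter written from scratch in plain Python. It assumes a naive Bayes classifier with add-one smoothing, which is only one of several possible choices; the example emails, the `train` and `classify` helpers, and the whitespace tokenization are all made up for illustration.

```python
import math
from collections import Counter

# Toy labeled training set: each example pairs an email text with its label.
# The texts and labels are invented for illustration.
TRAINING_EMAILS = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
    ("please review the project agenda", "ham"),
]


def train(examples):
    """Count words per class and examples per class from labeled data."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocabulary


def classify(text, model):
    """Return the label with the highest log-probability under naive Bayes."""
    word_counts, class_counts, vocabulary = model
    total_examples = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Log prior: fraction of training emails carrying this label.
        score = math.log(class_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing avoids log(0) for unseen pairs.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING_EMAILS)
print(classify("free prize inside", model))
print(classify("agenda for the team meeting", model))
```

The labels are what make this supervised: the model only learns which words indicate spam because every training email comes with its class.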

Deep learning is an attempt to mimic the activity in layers of neurons in the neocortex, the part of the brain where thinking occurs, which makes up about 80% of the brain. (A human brain has around 100 billion neurons and 100 to 1,000 trillion synapses.) It is called deep because the network has more than one hidden layer of neurons, which enables multiple stages of nonlinear feature transformation.
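
The phrase "more than one hidden layer" can be sketched as a forward pass through two stacked layers, each applying a nonlinear activation. The weights below are hypothetical and hand-picked for illustration; a real network would learn them from data, and the `dense` and `forward` helpers are names introduced here, not part of any framework.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ inputs + b)."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, hand-picked (weights, biases) pairs; not learned values.
HIDDEN_1 = ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0])  # 2 inputs -> 2 units
HIDDEN_2 = ([[1.0, 1.0], [1.0, -1.0]], [-0.5, 0.0])  # 2 units -> 2 units
OUTPUT = ([[2.0, 0.0]], [-1.0])                      # 2 units -> 1 output


def forward(x):
    h1 = dense(x, *HIDDEN_1, relu)   # first nonlinear transformation
    h2 = dense(h1, *HIDDEN_2, relu)  # second nonlinear transformation
    return dense(h2, *OUTPUT, sigmoid)[0]


print(forward([1.0, 0.0]))
print(forward([1.0, 1.0]))
```

Each hidden layer re-describes the output of the previous one, which is the sense in which depth gives multiple stages of feature transformation.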

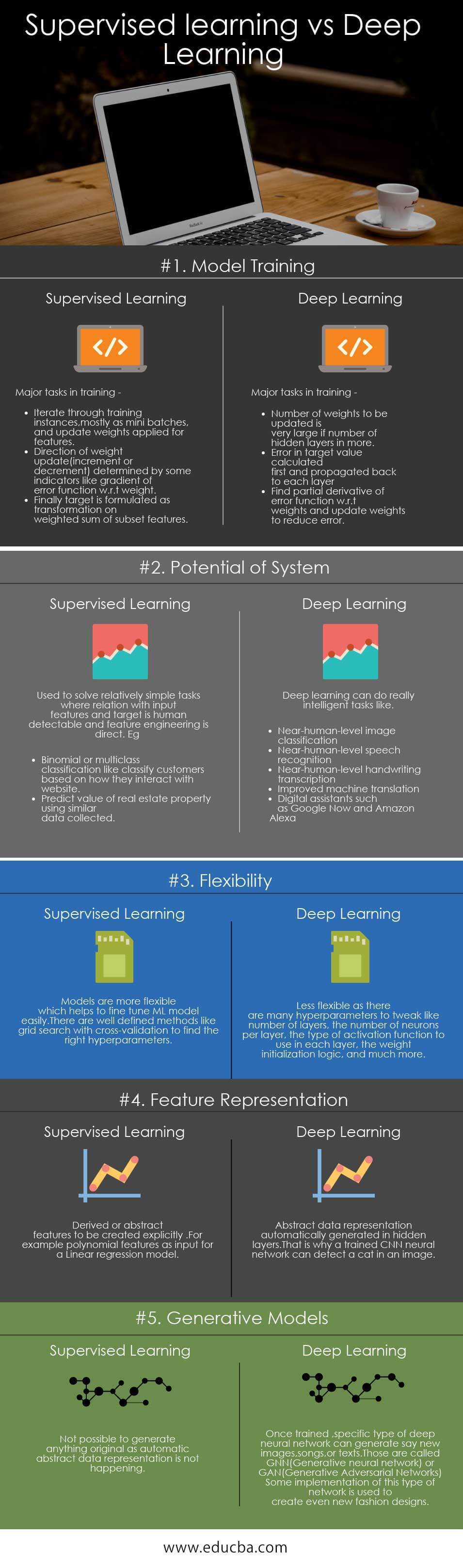

Head-to-Head Comparison Between Supervised Learning vs Deep Learning (Infographics)

Below are the top 5 Comparisons Between Supervised Learning vs Deep Learning:

Key Differences Between Supervised Learning vs Deep Learning

Supervised learning and deep learning are both widely used in practice. Let us discuss some major differences between them.

Major Models:

Important supervised models are:

- k-Nearest Neighbors: Used for classification and regression.

- Linear Regression: Used for regression (predicting a numeric value).

- Logistic Regression: Used for classification.

- Support Vector Machines (SVMs): Used for classification and regression.

- Decision Trees and Random Forests: Both classification and regression tasks.
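
As an illustration of one model from the list above, here is a rough from-scratch sketch of k-Nearest Neighbors classification. The `knn_predict` function, the sample points, and the labels are assumptions made for this example; in practice a library implementation would normally be used instead.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Made-up 2-D points forming two well-separated clusters.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (1.8, 1.7)))
print(knn_predict(points, labels, (6.2, 6.4)))
```

Note that k-NN has no training step beyond storing the labeled examples; all the work happens at prediction time.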

Most Popular Deep Neural Networks:

- Multilayer Perceptrons (MLP): Most basic type. This network is generally the starting phase of building other, more sophisticated deep networks, and it can be used for any supervised regression or classification problems.

- Autoencoders (AE): Networks trained without labels, used for feature learning, dimensionality reduction, and outlier detection.

- Convolutional Neural Network (CNN): Particularly suitable for spatial data, object recognition, and image analysis, using multidimensional neuron structures. CNNs are one of the main reasons for the recent popularity of deep learning.

- Recurrent Neural Network (RNN): Used for sequential data analysis, such as time series, sentiment analysis, NLP, language translation, speech recognition, and image captioning. One of the most common types of RNN is the Long Short-Term Memory (LSTM) network.

- Training Data: As mentioned earlier, supervised models need training data with labels, whereas deep learning can handle data with or without labels. Some neural network architectures, such as autoencoders and restricted Boltzmann machines, are trained without labels.

- Feature Selection: Some supervised models can analyze features and select the subset that is most useful for predicting the target, but most of the time this must be handled in the data preparation phase. In deep neural networks, new features emerge as learning progresses, and uninformative ones are effectively discarded.

- Data Representation: Classical supervised models do not create high-level abstractions of input features; the final model predicts the output by applying mathematical transforms to a subset of input features. Deep neural networks form abstractions of input features internally. For example, when translating text, a neural network first converts the input text into an internal encoding and then transforms that abstract representation into the target language.

- Framework: Many generic ML frameworks across different languages, such as Apache Mahout, scikit-learn, and Spark ML, support supervised ML models. Deep learning frameworks provide a developer-friendly abstraction to create a network easily, take care of distributing computation, and support GPUs. Caffe, Caffe2, Theano, Torch, Keras, CNTK, and TensorFlow are popular frameworks. TensorFlow from Google is widely used and has active community support.
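
The feature selection point above says that, for classical models, choosing features is usually done during data preparation. One simple way to do that, sketched here under the assumption that a linear relationship is a good enough signal, is to keep only the columns whose Pearson correlation with the target exceeds a threshold. The `select_features` helper, the column names, the threshold, and the data are all hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)


def select_features(columns, target, threshold=0.5):
    """Keep names of columns whose |correlation| with target meets threshold."""
    return [
        name
        for name, values in columns.items()
        if abs(pearson(values, target)) >= threshold
    ]


# Made-up data: price tracks size closely, while the other columns do not.
columns = {
    "size_m2": [50, 60, 80, 100, 120],
    "row_id": [3, 1, 5, 2, 4],
    "constant": [1, 1, 1, 1, 1],
}
price = [150, 180, 240, 310, 350]

print(select_features(columns, price))
```

A deep network would instead receive all columns and learn internally which ones matter, which is the contrast the bullet describes.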

Supervised Learning vs Deep Learning Comparison Table

Below are some key comparisons between supervised learning and deep learning:

| Basis of Comparison | Supervised Learning | Deep Learning |
| --- | --- | --- |
| Model Training | Major tasks in training: | Major tasks in training: |
| Potential of System | Used to solve relatively simple tasks where the relation between input features and the target is human-detectable and feature engineering is direct. E.g.: | Deep learning can do brainy tasks like: |
| Flexibility | Models are more flexible and easier to fine-tune. Well-defined methods such as grid search with cross-validation exist for finding good hyperparameters. | Less flexible, as there are many hyperparameters to tweak, such as the number of layers, the number of neurons per layer, the type of activation function in each layer, and the weight initialization logic. |
| Feature Representation | Derived or abstract features must be created explicitly, for example polynomial features as input to a linear regression model. | Hidden layers automatically generate abstract data representations. That is why a trained CNN can detect a cat in an image. |
| Generative Models | Cannot generate anything original, since no automatic abstract data representation is learned. | Once trained, specific types of deep neural networks can generate new images, songs, or text. These are called generative neural networks; Generative Adversarial Networks (GANs) are one example. Creating new fashion designs is one application of this type of network. |
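
The Flexibility row mentions grid search with cross-validation. A minimal sketch of that procedure follows, assuming a one-dimensional k-NN classifier as the model being tuned and interleaved folds as the splitting scheme. The dataset (which deliberately includes one mislabeled point), the helper names, and the candidate values are made up for illustration.

```python
from collections import Counter


def knn_predict(train, query, k):
    """Majority label among the k (value, label) pairs nearest to query."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_accuracy(data, k, n_folds):
    """Mean k-NN accuracy over n_folds interleaved train/validation splits."""
    accuracies = []
    for fold in range(n_folds):
        validation = data[fold::n_folds]
        training = [pair for i, pair in enumerate(data) if i % n_folds != fold]
        correct = sum(knn_predict(training, x, k) == y for x, y in validation)
        accuracies.append(correct / len(validation))
    return sum(accuracies) / n_folds


def grid_search(data, candidate_ks, n_folds=3):
    """Score every candidate k exhaustively and return (best_k, scores)."""
    scores = {k: cross_val_accuracy(data, k, n_folds) for k in candidate_ks}
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Made-up 1-D data: low values near 1-2, high values near 5-6,
# plus one deliberately noisy label at 1.5.
data = [
    (1.0, "low"), (5.0, "high"), (1.4, "low"), (5.4, "high"),
    (1.5, "high"), (5.8, "high"), (1.6, "low"), (6.0, "high"),
    (2.0, "low"), (6.2, "high"), (1.2, "low"), (5.6, "high"),
]

best_k, scores = grid_search(data, candidate_ks=[1, 3, 5])
print(best_k, scores)
```

Because the search space here is a single hyperparameter with three candidates, trying every value is cheap. A deep network has many interacting hyperparameters, so the same exhaustive approach quickly becomes expensive.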

Conclusion

The accuracy and capability of Deep Neural Networks (DNNs) have increased significantly in the last few years. That is why DNNs are now an area of active research, and we believe they have the potential to lead to a general intelligent system. At the same time, it is difficult to explain why a DNN gives a particular output, which makes fine-tuning a network difficult. If a problem can be solved using simple ML models, it is strongly recommended to use them. So even if a generally intelligent system is one day developed using DNNs, a simple linear regression will still be relevant.
