Updated April 20, 2023

What is TensorFlow LSTM?

TensorFlow is an open-source, end-to-end machine learning platform developed by Google and available on GitHub. It lets both experts and beginners build and train machine learning models. LSTM stands for Long Short-Term Memory, an artificial Recurrent Neural Network (RNN) architecture used in deep learning. Sepp Hochreiter and Jürgen Schmidhuber proposed LSTM in 1997. Unlike a feedforward network, an LSTM has feedback connections, so it can process entire sequences of data, such as video or speech, rather than only single data points.

LSTM is useful in applications such as speech recognition or recognition of connected handwriting. The LSTM unit, called a cell, typically consists of a forget gate, an input gate, and an output gate. The cell can remember values over a period of time, while the three gates regulate the flow of information into and out of the cell.
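To make the role of the three gates concrete, here is a minimal sketch of one time step of a single-unit LSTM cell in plain Python. It follows the standard LSTM formulation rather than TensorFlow's internal implementation, and the function names and weight values are illustrative assumptions, not taken from any library.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM cell.

    `w` maps each gate name to (input weight, recurrent weight, bias).
    All names and weight values here are illustrative assumptions.
    """
    def pre_activation(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much old memory to keep
    i = sigmoid(pre_activation("input"))        # how much new information to store
    g = math.tanh(pre_activation("candidate"))  # candidate value to store
    o = sigmoid(pre_activation("output"))       # how much memory to expose

    c = f * c_prev + i * g   # updated cell state (the cell's memory)
    h = o * math.tanh(c)     # updated hidden state (the cell's output)
    return h, c


# Hypothetical weights, chosen only for illustration
weights = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.6, 0.2, 0.0),
    "candidate": (0.9, 0.3, 0.0),
    "output": (0.4, 0.1, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_step(x, h, c, weights)
    print(f"x={x:+.1f}  h={h:+.4f}  c={c:+.4f}")
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is how the cell keeps a value over many time steps.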

Why use TensorFlow LSTM?

LSTM is mostly used to process, classify, and make predictions on time series data in which the time lag between important events is unknown. It is designed to remember past data in its internal memory, and it is considered an improved version of the plain Recurrent Neural Network (RNN), which struggles to retain information over long sequences.
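One common way to frame a time series for a sequence model such as an LSTM is to slice it into fixed-size windows, each paired with the value that follows it. The sketch below shows the idea in plain Python; the helper `make_windows` and the temperature readings are hypothetical and are used only for illustration.

```python
def make_windows(series, window_size):
    """Split a series into (inputs, target) pairs for next-step prediction."""
    inputs, targets = [], []
    for start in range(len(series) - window_size):
        inputs.append(series[start:start + window_size])
        targets.append(series[start + window_size])
    return inputs, targets


# Hypothetical daily temperature readings
temperatures = [21.0, 22.5, 23.1, 22.8, 24.0, 25.2]
X, y = make_windows(temperatures, window_size=3)

for window, target in zip(X, y):
    print(window, "->", target)
```

Each window would become one input sequence for the model, and the target is the value the model learns to predict.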

Example code: Using LSTM with TensorFlow

Let us consider an example of using LSTM with TensorFlow to create and train a model. The example trains a binary classifier on the IMDB movie review dataset –

import tensorflow as tf

from tensorflow.keras.datasets import imdb

from tensorflow.keras.layers import Embedding, Dense, LSTM

from tensorflow.keras.losses import BinaryCrossentropy

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.preprocessing.sequence import pad_sequences

# Configurations related to the model

extra_metric_param = ['accuracy']

size_of_single_batch = 128

output_of_dims = 15

function_used_for_Loss = BinaryCrossentropy()

length_of_maximum_sequence = 300

distinct_words_number = 5000

epochs_count = 5

optimizer = Adam()

split_for_validation = 0.20

Mode_Verbose = 1

# Disable eager execution so that the model runs in graph mode

tf.compat.v1.disable_eager_execution()

# Data set should be loaded first

(x_train, y_train), (test_for_X_coordinate, test_for_Y_coordinate) = imdb.load_data(num_words=distinct_words_number)

print(x_train.shape)

print(test_for_X_coordinate.shape)

# Pad (or truncate) every sequence to the same length

padded_inputs = pad_sequences(x_train, maxlen=length_of_maximum_sequence, value = 0.0) # 0.0 because it corresponds with <PAD>

padded_inputs_test = pad_sequences(test_for_X_coordinate, maxlen=length_of_maximum_sequence, value = 0.0) # 0.0 because it corresponds with <PAD>

# Define the Keras model

model = Sequential()

model.add(Embedding(distinct_words_number, output_of_dims, input_length=length_of_maximum_sequence))

model.add(LSTM(10))

model.add(Dense(1, activation='sigmoid'))

# Model is compiled

model.compile(optimizer=optimizer, loss=function_used_for_Loss, metrics=extra_metric_param)

# Providing brief summary about the model

model.summary()

# Model is being trained

history = model.fit(padded_inputs, y_train, batch_size=size_of_single_batch, epochs=epochs_count, verbose=Mode_Verbose, validation_split=split_for_validation)

# Model should be tested after the completion of the training

result_of_testing = model.evaluate(padded_inputs_test, test_for_Y_coordinate, verbose=False)

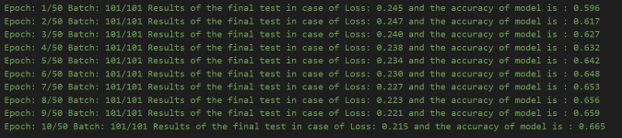

print(f'Results of the final test in case of Loss: {result_of_testing[0]} and the accuracy of model is : {result_of_testing[1]}')The output of above code is as shown below –
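The parameter counts that `model.summary()` reports for a model like the one above can be checked by hand. The following sketch reproduces the arithmetic in plain Python, assuming the standard Keras layer definitions with biases enabled; the variable names are chosen here for readability and are not part of the original program.

```python
# Values taken from the configuration in the example above
vocabulary_size = 5000   # distinct_words_number
embedding_dim = 15       # output_of_dims
lstm_units = 10          # LSTM(10)

# Embedding: one vector of size embedding_dim per vocabulary entry
embedding_params = vocabulary_size * embedding_dim

# LSTM: four weight sets (forget, input, candidate, output), each with
# input weights, recurrent weights, and one bias per unit
lstm_params = 4 * (lstm_units * (embedding_dim + lstm_units) + lstm_units)

# Dense(1): one weight per LSTM unit plus one bias
dense_params = lstm_units * 1 + 1

total_params = embedding_params + lstm_params + dense_params
print(embedding_params, lstm_params, dense_params, total_params)
# prints: 75000 1040 11 76051
```

Almost all of the parameters sit in the embedding layer, which is typical for text models with a large vocabulary and a small recurrent layer.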

Steps followed for writing the program

The steps followed in the above program, with a brief description of each, are as follows –

- Import classes and functions – The first step is to import all the required classes and functions from TensorFlow and Keras at the beginning of the program.

- Setting the configuration details – The next step is to define the values of all the parameters, such as the batch size, the number of epochs, and the optimizer, that are used later in the program.

- Disabling eager mode – Eager execution is disabled so that the model runs in graph mode.

- Loading dataset – The next step is to load the dataset that the program uses as input, here the IMDB reviews dataset.

- Creating properly padded sequences – Every sequence is padded or truncated to the same length so that the sequences can be fed to the model in batches.

- Defining the model – In the above example, we use a Keras Sequential model with an Embedding layer, an LSTM layer, and a Dense output layer, though many other architectures are possible.

- Compilation of model – After defining the model, we compile it, which configures the optimizer, the loss function, and the metrics used during training.

- Training model – During training, the model learns from the training data in batches over a number of epochs.

- Testing model – After compilation and training, we evaluate the model on the test data to measure its loss and accuracy.
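The padding step in the list above can be illustrated without TensorFlow. This is a minimal plain-Python sketch that assumes padding and truncation both happen at the front of the sequence, which is the default behaviour of Keras `pad_sequences`; the helper `pad_sequence` and the token IDs are hypothetical.

```python
def pad_sequence(sequence, max_length, pad_value=0):
    """Left-pad or left-truncate a list of token IDs to exactly max_length."""
    if len(sequence) >= max_length:
        # Keep only the last max_length tokens (truncate from the front)
        return sequence[len(sequence) - max_length:]
    # Prepend pad_value until the sequence reaches max_length
    return [pad_value] * (max_length - len(sequence)) + sequence


# Hypothetical reviews, already encoded as lists of word indices
reviews = [[12, 7, 431], [5, 88, 19, 240, 3, 61], [9]]
padded = [pad_sequence(review, max_length=4) for review in reviews]
print(padded)
```

After this step every review has the same length, so the reviews can be stacked into one rectangular batch for the model.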

Brief recap on LSTMs

LSTM, that is Long Short-Term Memory, is an artificial Recurrent Neural Network (RNN) architecture used in deep learning. The LSTM unit, called a cell, typically consists of a forget gate, an input gate, and an output gate. The cell can remember values over a period of time, while the three gates regulate the flow of information into and out of the cell.

Types of models

There are three types of models used in machine learning, as listed below –

- Regression Model – Predicts a numeric value as the outcome. Examples include predicting the humidity or temperature of the upcoming day, the number of units that could be sold, etc.

- Multiclass Classification Model – Predicts one outcome out of more than two possible classes. Examples include whether a product is a camera, stand, or wardrobe, the genre of a piece of music, or the category preferred by a customer.

- Binary Classification Model – Predicts one of two possible outcomes. Examples include determining whether an email is spam, whether a product will be bought or not, whether an object is living or non-living, etc.
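In a neural network, these three model types usually differ in how the final layer turns raw scores into an output. The sketch below shows one common pairing in plain Python (a linear output for regression, a sigmoid for binary classification, and a softmax for multiclass classification); the function names and input scores are illustrative assumptions.

```python
import math


def linear(score):
    """Regression output: the raw score is the predicted number."""
    return score


def sigmoid(score):
    """Binary classification output: probability of the positive class."""
    return 1.0 / (1.0 + math.exp(-score))


def softmax(scores):
    """Multiclass output: one probability per class, summing to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(linear(23.4))              # e.g. a predicted temperature
print(sigmoid(0.0))              # probability that an email is spam
print(softmax([2.0, 1.0, 0.1]))  # probabilities for three product classes
```

The `Dense(1, activation='sigmoid')` layer in the example program corresponds to the binary case.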

Other than that, there are two approaches followed in machine learning, supervised and unsupervised learning. In supervised learning, the model learns to produce outputs from labeled historical examples, while unsupervised learning involves finding patterns in unlabeled data.

Conclusion

TensorFlow is an open-source, end-to-end platform provided by Google for machine learning and deep learning, while LSTM is a recurrent neural network architecture that can be implemented with TensorFlow for deep learning on sequence data.
