Updated March 23, 2023

Introduction to TensorFlow Models

TensorFlow is an open-source machine learning library first released in 2015. It is used especially for deep learning, in both research and production environments, and is distributed under the Apache 2.0 license. The name combines two words: a tensor is a multidimensional array, and flow refers to the graph of operations that tensors move through. Every mathematical operation in TensorFlow is expressed as a graph, in which the nodes are operations and the edges are tensors. TensorFlow runs on a variety of platforms: CPU, GPU, TPU, Android, iOS, and Raspberry Pi. It was developed by researchers and engineers on the Google Brain team. The core of TensorFlow is written in C++.
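The idea of a graph whose nodes are operations and whose edges carry tensors can be sketched in a few lines. This is only an illustration written with NumPy, not TensorFlow's actual graph machinery; the `Node` class and the `constant` helper are hypothetical names introduced for this example.

```python
import numpy as np

class Node:
    """One operation in the graph; its inputs are the edges that feed it."""

    def __init__(self, op, *inputs):
        self.op = op          # function applied to the incoming tensors
        self.inputs = inputs  # upstream nodes whose outputs flow into this one

    def evaluate(self):
        # Evaluate the upstream nodes first, then apply this node's operation.
        return self.op(*(node.evaluate() for node in self.inputs))

def constant(value):
    """A source node that simply emits a fixed tensor."""
    tensor = np.asarray(value, dtype=float)
    return Node(lambda: tensor)

# Graph for y = (a @ b) + c
a = constant([[1.0, 2.0], [3.0, 4.0]])
b = constant([[1.0, 0.0], [0.0, 1.0]])
c = constant([[10.0, 10.0], [10.0, 10.0]])
product = Node(np.matmul, a, b)
y = Node(np.add, product, c)

result = y.evaluate()
print(result)
```

Nothing is computed while the graph is being assembled; the tensors only "flow" when `evaluate()` is called on the final node.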

Various TensorFlow Models

Neural networks are at the heart of much of today's everyday technology, and deep learning models are built on them.

Neural networks are loosely modelled on the neurons of the human brain. Simple neurons are interconnected to form a layered neural network, which is capable of solving complex problems. A layered network consists of an input layer, hidden layers, an output layer, nodes, and weights. The input layer is the first layer, where the input is supplied, and the output layer is the last. The hidden layers in between carry out the processing with the help of nodes (neurons, or operations) and weights (signal strengths).
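A forward pass through such a layered network might look like the sketch below. It uses NumPy rather than TensorFlow to stay small; the layer sizes, the random weights, and the choice of a sigmoid activation are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical sizes: 3 input nodes, 4 hidden nodes, 2 output nodes.
W_hidden = rng.normal(size=(3, 4))  # weights from input layer to hidden layer
b_hidden = np.zeros(4)
W_out = rng.normal(size=(4, 2))     # weights from hidden layer to output layer
b_out = np.zeros(2)

def forward(x):
    # Each layer is a weighted sum of its inputs followed by an activation.
    hidden = sigmoid(x @ W_hidden + b_hidden)
    return sigmoid(hidden @ W_out + b_out)

x = np.array([0.5, -1.0, 2.0])  # one input example
output = forward(x)
print(output.shape)
```

The weights here are random, so the output is meaningless until the network is trained; the point is only how a signal passes from layer to layer.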

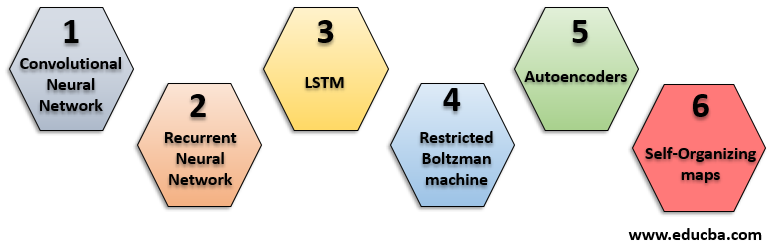

Models commonly built with TensorFlow include:

1. Convolutional Neural Network

Yann LeCun and Yoshua Bengio described the concept of a convolutional neural network (CNN) in 1995. A CNN is used to analyze images and to recognize visual patterns directly from pixel data. It belongs to the class of deep feed-forward artificial neural networks. CNNs require minimal pre-processing compared with other classification algorithms.

Layers in CNN:

- Convolution layer: This layer convolves the data or image with filters (also called kernels). Each filter is applied to the data through a sliding window, and its depth is the same as the depth of the input: for a colour image, the three RGB channels call for a filter of depth 3. At every position of the window, the layer takes the element-wise product of the filter and the image patch beneath it and sums those values. The output of convolving a 3D filter with a colour image is therefore a 2D matrix.

- Activation layer: Activation functions sit between convolutional layers. They receive an input signal, apply a non-linear transformation, and pass the transformed signal on to the next layer of neurons. Common activation functions are sigmoid, tanh, ReLU, Leaky ReLU, ELU, and Maxout; ReLU is the most widely used. The non-linear transformation is what makes a network capable of learning and performing complex tasks.

- Pooling layer: This layer downsamples the feature maps, which reduces the number of parameters in later layers and the amount of computation in the network. The two common variants are average pooling and max pooling.

- Fully connected layer: It connects every neuron to every neuron in the previous layer. It is the last phase of a CNN and serves as its output layer.

A CNN is a good choice when the input data is an image, when 2D data can be flattened to 1D before the fully connected layer, and when the task justifies a large amount of computation.
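The four layer types described above can be chained in a short NumPy sketch. It is a simplified illustration rather than TensorFlow's implementation: there is no stride or padding, only one filter, and the function names (`conv2d`, `relu`, `max_pool`, `dense`) and all sizes are assumptions chosen for the example.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide a kh x kw x C filter over an H x W x C image.

    At each window position the element-wise product of filter and patch
    is summed, so the result is a 2D feature map.
    """
    h, w, _ = image.shape
    kh, kw, _ = kernel.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw, :]
            out[i, j] = np.sum(patch * kernel)
    return out

def relu(x):
    # Non-linear activation: negative values become zero.
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    # Keep the largest value in each non-overlapping size x size window.
    h, w = x.shape
    h, w = h - h % size, w - w % size  # drop rows/columns that do not fill a window
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

def dense(x, weights, bias):
    # Fully connected layer: every input feeds every output.
    return x @ weights + bias

rng = np.random.default_rng(0)
image = rng.random((6, 6, 3))         # small stand-in for an RGB image
kernel = rng.normal(size=(3, 3, 3))   # filter depth matches the 3 colour channels

feature_map = conv2d(image, kernel)   # shape (4, 4)
activated = relu(feature_map)
pooled = max_pool(activated)          # shape (2, 2)
flat = pooled.reshape(-1)             # 2D map flattened to 1D, shape (4,)
weights = rng.normal(size=(4, 2))     # hypothetical 2-class output layer
scores = dense(flat, weights, np.zeros(2))
print(feature_map.shape, pooled.shape, scores.shape)
```

Note how the 3D filter collapses the colour channels into a single 2D map, and how pooling halves each spatial dimension before the flattened values reach the fully connected layer.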

2. Recurrent Neural Network

A recurrent neural network (RNN) is a network with at least one feedback connection that forms a loop. The loop lets an RNN retain information about the past for some time, which is what allows it to do temporal processing and learn sequences. An RNN can be a simple RNN with at least one feedback connection, or a fully connected RNN. One example is text generation: the model is trained on a large amount of text, such as an author's books, and then predicts the next character of a word, for example the "o" that follows "f" in "format". The auto-prediction now available in email clients and on smartphones is a good example of an RNN at work. RNNs were designed for predicting sequences, and they are helpful for video classification, sentiment analysis, character generation, image captioning, and similar tasks.
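The feedback loop can be made concrete with a minimal character-level sketch in NumPy. The weights are random and untrained, so the predicted probabilities carry no meaning; the hidden size, the tiny vocabulary, and the helper names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab = sorted(set("format"))  # toy vocabulary of 6 characters
char_to_id = {ch: i for i, ch in enumerate(vocab)}
hidden_size = 8                # hypothetical size of the hidden state

W_xh = rng.normal(scale=0.1, size=(len(vocab), hidden_size))   # input -> hidden
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))  # hidden -> hidden (the feedback loop)
W_hy = rng.normal(scale=0.1, size=(hidden_size, len(vocab)))   # hidden -> output

def one_hot(ch):
    x = np.zeros(len(vocab))
    x[char_to_id[ch]] = 1.0
    return x

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def next_char_probs(prefix):
    h = np.zeros(hidden_size)  # the hidden state starts empty
    for ch in prefix:
        # The previous hidden state is fed back in alongside the new input.
        h = np.tanh(one_hot(ch) @ W_xh + h @ W_hh)
    return softmax(h @ W_hy)

probs = next_char_probs("f")
print(dict(zip(vocab, probs.round(3))))
```

After training on real text, the probability assigned to "o" after the prefix "f" would be expected to rise; here the distribution stays close to uniform.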

3. LSTM

LSTM (long short-term memory) is one of the most effective architectures for sequence prediction. A simple RNN is quite effective with short-term dependencies, but it fails to remember context that appeared long before the current step. LSTM networks are very good at holding long-term dependencies. LSTM is useful for handwriting recognition, handwriting generation, music generation, image captioning, and language translation.
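What lets an LSTM hold on to long-term context is a separate cell state guarded by gates. The sketch below shows one step of the standard LSTM cell in NumPy; the gate ordering inside the stacked weight matrix, the sizes, and the random weights are assumptions for this example and do not mirror any particular library's layout.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    W maps the concatenated [h_prev, x] to four stacked gate pre-activations,
    ordered here as forget, input, output, candidate.
    """
    z = np.concatenate([h_prev, x]) @ W + b
    f, i, o, g = np.split(z, 4)
    f, i, o = sigmoid(f), sigmoid(i), sigmoid(o)
    g = np.tanh(g)
    c = f * c_prev + i * g  # forget part of the old memory, write new content
    h = o * np.tanh(c)      # expose a gated view of the memory
    return h, c

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 5  # hypothetical sizes
W = rng.normal(scale=0.1, size=(hidden_size + input_size, 4 * hidden_size))
b = np.zeros(4 * hidden_size)

h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.normal(size=(10, input_size)):  # a sequence of 10 time steps
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through a step almost unchanged, which is how information can survive across many time steps.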

4. Restricted Boltzmann Machine

A restricted Boltzmann machine (RBM) is an undirected graphical model that can be built with deep learning frameworks such as TensorFlow. It is used for dimensionality reduction, classification, regression, collaborative filtering, feature learning, and topic modelling. An RBM has a visible layer and a hidden layer; the visible layer is the first, or input, layer. The nodes perform calculations and are connected across layers, but no two nodes of the same layer are linked. This absence of communication within a layer is the restriction in an RBM. Each node processes its input and makes a stochastic decision on whether to transmit it or not.
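The stochastic decision made by each node can be sketched as one round of sampling between the two layers. This NumPy example assumes binary units and random, untrained weights; the layer sizes and function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

n_visible, n_hidden = 6, 3  # hypothetical layer sizes
# Weights exist only between the layers; there are none within a layer.
W = rng.normal(scale=0.1, size=(n_visible, n_hidden))
b_visible = np.zeros(n_visible)
b_hidden = np.zeros(n_hidden)

def sample_hidden(v):
    # Each hidden node turns on with a probability driven by the visible layer.
    p = sigmoid(v @ W + b_hidden)
    return p, (rng.random(p.shape) < p).astype(float)

def sample_visible(h):
    # The same weights are used in the opposite direction (undirected model).
    p = sigmoid(h @ W.T + b_visible)
    return p, (rng.random(p.shape) < p).astype(float)

v0 = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 0.0])  # one binary input vector
p_h, h0 = sample_hidden(v0)
p_v, v1 = sample_visible(h0)
print(h0, v1)
```

Because no two nodes in a layer are linked, all nodes of one layer can be sampled at once given the other layer, which is what the vectorised lines above rely on.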

5. Autoencoders

An autoencoder is an unsupervised neural network that learns to compress data. It converts multidimensional data to a lower-dimensional code, from which an approximation of the original data can be reconstructed when needed. The aim is to learn a compressed, distributed representation of a given type of data, typically for dimensionality reduction. An autoencoder has three components: the encoder, the code, and the decoder.

- Encoder: Takes the input image, compresses it, and produces the code.

- Decoder: It reconstructs the original image from the code.

Autoencoders are data-specific: they can compress well only the kind of data they were trained on. An autoencoder trained to compress images of cats would do a poor job of compressing images of humans.
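The encoder, code, and decoder, as well as the data-specific behaviour, can be illustrated with a very small linear autoencoder trained by gradient descent. This is a toy sketch in NumPy on synthetic vectors rather than images; the data, the sizes, the learning rate, and the number of steps are all assumptions picked for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy training data: 200 samples of 8-D vectors that lie on a 2-D subspace.
data = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))
# Data of a different "kind": 8-D vectors from another 2-D subspace.
other = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))

W_enc = rng.normal(scale=0.1, size=(8, 2))  # encoder: 8-D input -> 2-D code
W_dec = rng.normal(scale=0.1, size=(2, 8))  # decoder: 2-D code -> 8-D output

def encode(x):
    return x @ W_enc

def decode(code):
    return code @ W_dec

def reconstruction_error(x):
    return float(np.mean((decode(encode(x)) - x) ** 2))

initial_error = reconstruction_error(data)

learning_rate = 0.01
for _ in range(2000):
    code = encode(data)
    residual = decode(code) - data
    # Gradients of the squared reconstruction error, averaged over samples.
    grad_dec = code.T @ residual / len(data)
    grad_enc = data.T @ (residual @ W_dec.T) / len(data)
    W_dec -= learning_rate * grad_dec
    W_enc -= learning_rate * grad_enc

final_error = reconstruction_error(data)
other_error = reconstruction_error(other)
print(initial_error, final_error, other_error)
```

The error on the training data should fall well below its starting value, while the error on the unfamiliar data stays high, which mirrors the cats-versus-humans point above.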

6. Self-Organizing Maps

Self-organizing maps (SOMs) are helpful for feature reduction. They map high-dimensional data to a lower-dimensional grid, which gives a good visualization of the data. A SOM consists of an input layer, weights, and a Kohonen layer, which is also called the feature map or competitive layer. Self-organizing maps are good for data visualization, dimensionality reduction, NLP, and similar tasks.
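The competitive behaviour of the Kohonen layer can be sketched as follows: for each input, the node with the closest weight vector wins, and it and its grid neighbours are pulled towards the input. This NumPy example assumes a 5 x 5 grid, 3-D inputs, a Gaussian neighbourhood, and a fixed learning rate and radius; a practical SOM would usually decay both over time.

```python
import numpy as np

rng = np.random.default_rng(0)

grid_h, grid_w, n_features = 5, 5, 3  # hypothetical map and input sizes
weights = rng.random((grid_h, grid_w, n_features))
rows, cols = np.indices((grid_h, grid_w))  # grid coordinates of every node

def best_matching_unit(x):
    # Competition: the node whose weight vector is closest to x wins.
    distances = np.linalg.norm(weights - x, axis=2)
    return np.unravel_index(np.argmin(distances), distances.shape)

def train_step(x, learning_rate=0.5, radius=1.0):
    bmu_row, bmu_col = best_matching_unit(x)
    # Nodes near the winner on the grid are updated more strongly.
    grid_dist_sq = (rows - bmu_row) ** 2 + (cols - bmu_col) ** 2
    influence = np.exp(-grid_dist_sq / (2 * radius ** 2))
    weights[...] += learning_rate * influence[..., None] * (x - weights)

data = rng.random((200, n_features))  # e.g. random RGB colours
for x in data:
    train_step(x)

# Map a 3-D input to a 2-D grid position.
position = best_matching_unit(np.array([1.0, 0.0, 0.0]))
print(position)
```

Each 3-D input ends up represented by a 2-D grid coordinate, which is what makes the map usable for visualization and dimensionality reduction.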

Conclusion

TensorFlow is capable of training many different kinds of models efficiently. In this article, we looked at several deep learning models that can be trained with the TensorFlow framework. We hope you have gained some insight into them.
