Updated March 14, 2023

Introduction to TensorFlow normalize

TensorFlow normalize refers to the normalization utilities in the TensorFlow library, which rescale the tensors and arrays fed to a neural network. The goal of normalization is to transform the data so that all features are on the same or a similar scale. Normalization plays a vital role in improving both training stability and model performance. Common normalization techniques include log scaling, scaling to a specified range, z-score standardization, and clipping.
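The four techniques listed above can be illustrated with plain NumPy. This is a minimal sketch of the arithmetic only, not TensorFlow's internal implementation. The sample values and the clipping bounds 0 and 10 are arbitrary assumptions chosen for the example.

```python
import numpy as np

# Hypothetical feature values with one large outlier
values = np.array([1.0, 2.0, 4.0, 8.0, 1000.0])

# Log scaling: compresses a wide range of positive values
log_scaled = np.log(values)

# Scaling to a range: map the minimum to 0 and the maximum to 1
min_max = (values - values.min()) / (values.max() - values.min())

# Z-score: subtract the mean, then divide by the (population) standard deviation
z_scores = (values - values.mean()) / values.std()

# Clipping: cap values outside an assumed interval [0, 10]
clipped = np.clip(values, 0.0, 10.0)

print(log_scaled, min_max, z_scores, clipped, sep="\n")
```

Note how the outlier dominates min-max scaling (most values end up near 0), which is one reason log scaling or clipping is often applied first.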

In this article, we give an overview of TensorFlow normalize, explain how to use it, discuss normalizing features, walk through examples, and finish with a conclusion.

Overview of TensorFlow normalize

Normalization is the process of bringing all the features of a model onto a similar scale in order to improve the model's overall performance and training quality. The syntax of the normalize method is shown below. Note that this function is designed to operate on data stored as a NumPy array.

tensorflow.keras.utils.normalize(x, axis=-1, order=2)

The arguments are described below:

- x: the NumPy array to be normalized.

- axis: the axis along which the array is normalized (the default, -1, is the last axis).

- order: the order of the norm used for normalization; for example, the default value 2 means L2-norm normalization.

- Return value: the method returns a normalized copy of the original array.
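The computation described above can be sketched in NumPy so that it runs without TensorFlow. The helper `lp_normalize` is hypothetical; it is written to mirror what `tf.keras.utils.normalize` does for NumPy input (divide each slice along `axis` by its Lp norm, leaving all-zero slices unchanged), but treat it as an illustration rather than the library's code.

```python
import numpy as np

def lp_normalize(x, axis=-1, order=2):
    """Hypothetical helper: divide x by its Lp norm along `axis`."""
    x = np.asarray(x, dtype=float)
    # Norm of each slice along the chosen axis, as at least a 1-D array
    norms = np.atleast_1d(np.linalg.norm(x, order, axis))
    # Avoid division by zero for all-zero slices
    norms[norms == 0] = 1
    # Re-insert the reduced axis so the norms broadcast against x
    return x / np.expand_dims(norms, axis)

sample = np.array([[3.0, 4.0],
                   [0.0, 0.0],
                   [1.0, 1.0]])
print(lp_normalize(sample))  # row [3, 4] becomes [0.6, 0.8]; the zero row stays zero
```

With TensorFlow installed, `tf.keras.utils.normalize(sample)` should produce the same values, which is an easy way to check the sketch.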

How to use TensorFlow normalize?

To use normalization in a Python TensorFlow model, follow these steps:

- Explore the available data set, including the relationships and correlations between its features.

- Normalize the training data with the normalize method, using the syntax shown above.

- Build, compile, train, and evaluate a linear model.

- Build, compile, and train a deep neural network (DNN), then evaluate it.
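The first three steps can be sketched with NumPy alone. This is an illustration under stated assumptions: the data set is synthetic and hypothetical, NumPy's least-squares solver stands in for a Keras linear model, and the DNN step is not shown. The key point is that the normalization statistics come from the training split only and are reused for the test split.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data set: two features on very different scales
X = np.column_stack([rng.normal(50.0, 10.0, size=200),   # large-scale feature
                     rng.normal(3.0, 0.1, size=200)])    # small-scale feature
y = 2.0 * X[:, 0] + 30.0 * X[:, 1] + 1.0                 # noise-free target

# Step 1: explore the data, e.g. the correlation of each feature with y
print(np.corrcoef(np.column_stack([X, y]), rowvar=False)[-1, :-1])

# Step 2: normalize using statistics from the training split only
X_train, X_test = X[:150], X[150:]
y_train, y_test = y[:150], y[150:]
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train_n = (X_train - mean) / std
X_test_n = (X_test - mean) / std   # reuse the training statistics

# Step 3: fit and evaluate a linear model (least squares with a bias column)
A_train = np.column_stack([X_train_n, np.ones(len(X_train_n))])
coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
A_test = np.column_stack([X_test_n, np.ones(len(X_test_n))])
test_mse = np.mean((A_test @ coef - y_test) ** 2)
print("test MSE:", test_mse)
```

Because the target is an exact linear function of the features, the test error here is essentially zero; real data would of course leave a residual error.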

TensorFlow Normalize features

Normalization can be applied to many types of features. For numeric features, normalizing generally means shifting and rescaling the values (for example, around their mean) so that all features share a comparable scale, which also makes their distributions easier to compare when plotted as histograms. To normalize numeric feature data, we pass it to the normalize() function.

When working with TensorFlow Estimators (tensorflow.estimator), a numeric feature can instead be normalized through the normalizer_fn argument of tensorflow.feature_column.numeric_column. Because the normalizer is part of the feature column, the same normalization parameters are applied during training, evaluation, and serving. Note that the TensorFlow documentation no longer recommends tensorflow.feature_column for new code and suggests Keras preprocessing layers instead.

The feature column is declared with the following syntax:

feature = tensorflow.feature_column.numeric_column('feature_name', normalizer_fn=zscore)

The zscore function passed as normalizer_fn computes the z-score, which expresses how many standard deviations an observation lies from the mean of the observations.
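Written as a formula, with $\mu$ the mean and $\sigma$ the standard deviation of the observations, the z-score of a value $x$ is:

$$
z = \frac{x - \mu}{\sigma}
$$

For example, with $\mu = 3.04$ and $\sigma = 1.2$ (the values used in the zscore function), an observation $x = 4.24$ gives $z = (4.24 - 3.04)/1.2 = 1$, i.e. one standard deviation above the mean, while $x = 1.84$ gives $z = -1$.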

The zscore function used here is shown below:

def zscore(x):
    mean = 3.04  # hard-coded mean of the training feature
    std = 1.2    # hard-coded standard deviation of the training feature
    return (x - mean) / std

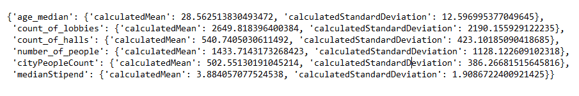
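Rather than hard-coding the mean and standard deviation, a z-score normalizer can be generated from statistics computed on the training data. The following pure-Python sketch assumes a small hypothetical column of training values; `make_zscore_normalizer` is an illustrative helper, not a TensorFlow API. The function it returns uses only arithmetic, so it could be passed as `normalizer_fn`.

```python
import statistics

def make_zscore_normalizer(train_values):
    """Hypothetical helper: build a z-score function from training statistics."""
    mean = statistics.mean(train_values)
    # Sample standard deviation (ddof=1), the same default pandas .std() uses
    std = statistics.stdev(train_values)

    def zscore(x):
        # Only arithmetic is used, so x may be a number, a NumPy array or a tensor
        return (x - mean) / std

    return zscore

# Hypothetical training values for one numeric feature (mean is 5.0)
train_column = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
zscore = make_zscore_normalizer(train_column)
print(zscore(5.0))  # the mean maps to 0.0
```

Binding the statistics in a closure keeps each feature's normalizer self-contained, which is what lets the same parameters travel with the feature column.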

Let us consider an example that normalizes numeric features for an estimator. Instead of hard-coding the mean and standard deviation as above, we compute them from the training data for each feature; these values can then be used to create the feature columns for the estimator. In the code below, educbaSampleTrainedFnc is assumed to be a pandas DataFrame containing the training data. Our code is shown below:

def receiveParamsForNormalization(educbaSampleTrainedFnc, inputFeatures):
    """Return the mean and standard deviation of each feature in inputFeatures."""

    def zscoreArgument(column):
        # Statistics of a single feature column of the training DataFrame
        calculatedMean = educbaSampleTrainedFnc[column].mean()
        calculatedStandardDeviation = educbaSampleTrainedFnc[column].std()
        return {'calculatedMean': calculatedMean,
                'calculatedStandardDeviation': calculatedStandardDeviation}

    normalization_parameters = {}
    for column in inputFeatures:
        normalization_parameters[column] = zscoreArgument(column)
    return normalization_parameters

NUMERIC_inputFeatures = ['age_median', 'count_of_lobbies', 'count_of_halls',
                         'number_of_people', 'cityPeopleCount', 'medianStipend']

normalization_parameters = receiveParamsForNormalization(educbaSampleTrainedFnc,
                                                         NUMERIC_inputFeatures)
normalization_parameters

The output of executing the above code gives the result as shown in the below image –

TensorFlow normalize examples

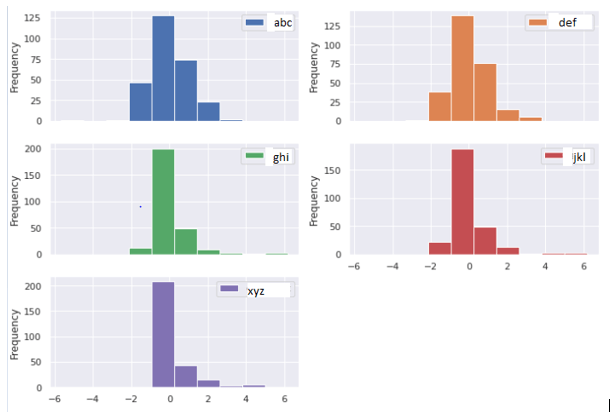

Let us consider an example in which we standardize every feature so that its values are centered around 0 with unit variance. TensorFlow 2.x provides a Normalization preprocessing layer (originally under tensorflow.keras.layers.experimental.preprocessing) as an alternative to the manual normalization process.

First, we import the required packages and libraries. We then create a Normalization layer object. Next, we call its adapt() method on our data set so that the layer learns the summary statistics (mean and variance) of each feature. In the code below, features_to_be_trained is assumed to be a pandas DataFrame holding five numeric training features; it is not defined in this article.

Calling the layer on the data returns a tensor of normalized values, and the tensor's numpy() method converts it into a NumPy matrix. Passing this matrix to a pandas DataFrame lets us plot a histogram of the normalized data set. In the resulting plot, we can observe that the data clusters around zero after normalization.

import numpy as np
import pandas as pd
import tensorflow as tensorObj
from tensorflow import keras
from tensorflow.keras import layers

sampleEducbaNormalizingObj = layers.Normalization()  # older TF 2.x: layers.experimental.preprocessing.Normalization()
sampleEducbaNormalizingObj.adapt(np.array(features_to_be_trained))  # learn per-feature mean and variance

(pd.DataFrame(sampleEducbaNormalizingObj(np.array(features_to_be_trained)).numpy(),
              columns=['abc', 'def', 'ghi', 'jkl', 'xyz'],
              index=features_to_be_trained.index)
 .plot
 .hist(subplots=True, layout=(3, 2)))  # one histogram per normalized feature

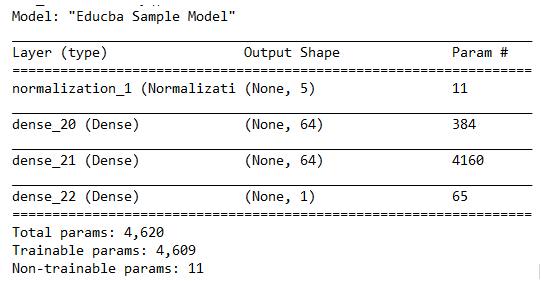
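To see why the histograms cluster around zero, the arithmetic of the Normalization layer can be approximated in NumPy: `adapt()` records each feature's mean and variance, and calling the layer subtracts the mean and divides by the standard deviation. `NumpyNormalizer` is a hypothetical stand-in written for this illustration, and the `1e-7` floor is an assumed small epsilon, not necessarily the exact value TensorFlow uses.

```python
import numpy as np

class NumpyNormalizer:
    """Hypothetical NumPy stand-in for the arithmetic of a Normalization layer."""

    def adapt(self, data):
        data = np.asarray(data, dtype=float)
        # Per-feature statistics over the batch axis (population variance)
        self.mean = data.mean(axis=0)
        self.variance = data.var(axis=0)
        return self

    def __call__(self, data):
        data = np.asarray(data, dtype=float)
        # Assumed small floor avoids dividing by zero for constant features
        std = np.maximum(np.sqrt(self.variance), 1e-7)
        return (data - self.mean) / std

features = np.array([[1.0, 100.0],
                     [2.0, 200.0],
                     [3.0, 300.0]])
normalized = NumpyNormalizer().adapt(features)(features)
print(normalized)  # both columns become about [-1.22, 0, 1.22]
```

Although the two columns differ in scale by a factor of 100, they end up identical after normalization, which is exactly the effect the histograms show.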

def build_and_compile_sampleEducbaModel(sampleEducbaNormalizingObj):
    # The adapted Normalization layer is the first layer of the model,
    # followed by two hidden layers and a single regression output
    sampleEducbaModel = keras.Sequential([
        sampleEducbaNormalizingObj,
        layers.Dense(64, activation='relu'),
        layers.Dense(64, activation='relu'),
        layers.Dense(1)
    ])

    sampleEducbaModel.compile(loss='mean_squared_error',
                              optimizer=tensorObj.keras.optimizers.Adam(0.001))
    return sampleEducbaModel

dnnModel = build_and_compile_sampleEducbaModel(sampleEducbaNormalizingObj)
dnnModel.summary()


Conclusion

The TensorFlow normalize method normalizes a data set stored in a NumPy array. We first study the input data and the correlations within it, and then either normalize the data manually or use the Normalization preprocessing layer provided in TensorFlow 2.x.
