Updated March 13, 2023

Introduction to TensorFlow OpenCL

TensorFlow is a framework for executing machine learning algorithms. Support for OpenCL™ devices is being added to TensorFlow using SYCL™ to give developers access to a wider range of processors. SYCL is a royalty-free, cross-platform C++ abstraction layer, while OpenCL (Open Computing Language) is a framework for building applications that execute across heterogeneous platforms. OpenCL is an open standard for parallel computing that supports both task-based and data-based parallelism.

Overview of TensorFlow OpenCL

In TensorFlow Lite, OpenCL provides roughly a 2x inference speedup over OpenGL ES acceleration. TensorFlow Lite falls back to OpenGL ES if OpenCL isn’t available, although most mobile GPU vendors supply OpenCL drivers even when they aren’t exposed directly to Android app developers. Higher-level frameworks and compilers are increasingly using OpenCL as an acceleration target. Because OpenCL lets the CPU and GPU run the same programs, programmers can take advantage of both by dividing work across the devices. However, the relative speed of operations differs from device to device, which makes it hard to decide how to partition the work. Machine learning has been proposed as a solution to this issue.
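The partitioning problem described above can be sketched in plain Python. This is a minimal illustration, not TensorFlow or OpenCL code: the function name `partition_work` and the throughput figures are hypothetical, and a simple static proportional split stands in for the machine-learning-based approach mentioned in the text.

```python
def partition_work(total_items, throughputs):
    """Split total_items across devices in proportion to measured throughput.

    throughputs maps a device name to items processed per second
    (hypothetical figures; in practice they would come from profiling).
    """
    total_rate = sum(throughputs.values())
    if total_rate <= 0:
        raise ValueError("at least one device must have positive throughput")

    shares = {}
    assigned = 0
    for name, rate in throughputs.items():
        # Round the proportional share down; the remainder is handled below.
        share = int(total_items * rate / total_rate)
        shares[name] = share
        assigned += share

    # Give any items lost to rounding to the fastest device.
    fastest = max(throughputs, key=throughputs.get)
    shares[fastest] += total_items - assigned
    return shares


if __name__ == "__main__":
    # Assumed rates: the GPU is three times faster than the CPU.
    print(partition_work(1000, {"cpu": 100.0, "gpu": 300.0}))
```

A static split like this only works while the measured rates stay representative, which is the difficulty the paragraph above points to.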

CUDA vs OpenCL

| Comparison | CUDA | OpenCL |
| --- | --- | --- |
| Developed by | NVIDIA Corporation | Khronos Group |
| Definition | Compute Unified Device Architecture (CUDA) is a parallel computing platform for applications that demand a lot of parallel processing. | OpenCL is an open standard that can be used on a wide range of hardware, including desktop and laptop GPUs. |
| Multiple OS support | CUDA can run on Windows, Linux, and macOS, but it requires NVIDIA hardware, e.g., Windows XP and later, macOS. | OpenCL can run on practically any operating system and on a wide range of hardware, e.g., Android, FreeBSD, Windows, Linux, macOS. |
| GPU support | 2 GPUs | Utilize 1 GPU |
| Language support | C, C++, Fortran | C, C++ |
| Templates | CUDA provides a C API and also supports C++ constructs. | Kernels use a C99-based language; C++ bindings are available. |
| Function | Kernels are built by the compiler ahead of time. | Kernels are compiled at run time. |
| Libraries | Has a large number of high-performance libraries. | Has a large number of libraries that can be used on any OpenCL-compliant hardware, but the collection is not as comprehensive as CUDA’s. |
| Performance | No clear advantage; it depends on code quality, hardware type, and other factors. | No clear advantage; it depends on code quality, hardware type, and other factors. |

TensorFlow OpenCL examples

TensorFlow-OpenCL has no known vulnerabilities, and neither do the libraries it depends on. It is distributed under the Apache-2.0 License, which is a permissive license. Permissive licenses impose the fewest restrictions and can be used in almost any project.

Blender is another application that can use OpenCL, in its case for rendering. Using the container published in the Sylabs library, you can run Blender as a graphical program that uses a local Radeon GPU for OpenCL compute:

$ singularity exec --rocm --bind /etc/OpenCL library://sylabs/demo/blend blender

Set-Up and Run the TensorFlow OpenCL

To add OpenCL support to TensorFlow, we need to build TensorFlow with ComputeCpp, Codeplay’s SYCL implementation. Thanks to Codeplay, TensorFlow now includes OpenCL support implemented through SYCL. TensorFlow is built on the Eigen C++ linear algebra library.

OpenCL installation

sudo apt update

sudo apt install clinfo

clinfo
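The same check can be scripted. The sketch below assumes only that `clinfo` is the command installed above; the helper name `run_clinfo` is hypothetical. It returns `None` when the tool is not on the PATH instead of raising an error.

```python
import shutil
import subprocess


def run_clinfo(command="clinfo"):
    """Return the text printed by clinfo, or None if it is not installed."""
    path = shutil.which(command)
    if path is None:
        return None
    # Run the tool with no arguments and capture everything it prints.
    completed = subprocess.run(
        [path],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
        check=False,
    )
    return completed.stdout


if __name__ == "__main__":
    report = run_clinfo()
    if report is None:
        print("clinfo not found; install it with: sudo apt install clinfo")
    else:
        print(report)
```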

Install Packages

sudo apt update

sudo apt install git cmake gcc build-essential libpython3-all-dev ocl-icd-opencl-dev opencl-headers openjdk-8-jdk python3 python3-dev python3-pip zlib1g-dev

pip install -U --user numpy==1.14.5 wheel==0.31.1 six==1.11.0 mock==2.0.0 enum34==1.1.6

Configure Set-up

git clone http://github.com/codeplaysoftware/tensorflow

cd tensorflow

Environment variables Set-up

export CC_OPT_FLAGS="-march=native"

export PYTHON_BIN_PATH="/usr/bin/python"

export USE_DEFAULT_PYTHON_LIB_PATH=1

export TF_NEED_JEMALLOC=1

export TF_NEED_MKL=0

export TF_NEED_GCP=0

export TF_NEED_HDFS=0

export TF_ENABLE_XLA=0

export TF_NEED_CUDA=0

export TF_NEED_VERBS=0

export TF_NEED_MPI=0

export TF_NEED_GDR=0

export TF_NEED_AWS=0

export TF_NEED_S3=0

export TF_NEED_KAFKA=0

export TF_DOWNLOAD_CLANG=0

export TF_SET_ANDROID_WORKSPACE=0

export TF_NEED_OPENCL_SYCL=1

export TF_NEED_COMPUTECPP=1
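Before going further, it can help to confirm that the SYCL-related switches are actually set in the current shell. The following is a small sketch: the helper name `missing_settings` is hypothetical, and the expected values are simply the ones exported above.

```python
import os

# Values copied from the export lines above that select the SYCL/ComputeCpp build.
EXPECTED = {
    "TF_NEED_OPENCL_SYCL": "1",
    "TF_NEED_COMPUTECPP": "1",
    "TF_NEED_CUDA": "0",
}


def missing_settings(environ, expected=EXPECTED):
    """Return {name: (expected, actual)} for every variable that differs."""
    problems = {}
    for name, wanted in expected.items():
        actual = environ.get(name)  # None when the variable is unset
        if actual != wanted:
            problems[name] = (wanted, actual)
    return problems


if __name__ == "__main__":
    for name, (wanted, actual) in missing_settings(os.environ).items():
        print("{} should be {!r} but is {!r}".format(name, wanted, actual))
```

Run from the same shell in which the variables were exported, the script prints nothing when all three settings match.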

It’s a good idea to run the tests to confirm that TensorFlow was built correctly. The following command runs a large suite of roughly 1,500 tests:

bazel test --test_lang_filters=cc,py --test_timeout 1500 --verbose_failures --jobs=1 --config=sycl --config=opt -- //tensorflow/... -//tensorflow/compiler/... -//tensorflow/contrib/distributions/... -//tensorflow/contrib/lite/... -//tensorflow/contrib/session_bundle/... -//tensorflow/contrib/slim/... -//tensorflow/contrib/verbs/... -//tensorflow/core/distributed_runtime/... -//tensorflow/core/kernels/hexagon/... -//tensorflow/go/... -//tensorflow/java/... -//tensorflow/python/debug/... -//tensorflow/stream_executor/...

Build TensorFlow

git clone http://github.com/codeplaysoftware/tensorflow

cd tensorflow

Set-Up operations

with tf.device("/gpu:0"):

This line creates a new context manager that instructs TensorFlow to run the operations defined inside it on the GPU.

To execute the code

with tf.Session() as se1:

TensorFlow program

Program #1

>>> import tensorflow as tf

>>> he1 = tf.constant('Hi, TensorFlow world!')

>>> se1 = tf.Session()

>>> se1.run(he1)

'Hi, TensorFlow world!'

>>> x = tf.constant(12)

>>> y = tf.constant(22)

>>> se1.run(x + y)

34

>>> se1.close()

Program #2

import sys

import numpy as np

import tensorflow as tf

from datetime import datetime

# Usage: python name1.py <cpu|gpu> <matrix size>

d_name = sys.argv[1]

shape = (int(sys.argv[2]), int(sys.argv[2]))

if d_name == "gpu":

    d_name = "/gpu:0"

else:

    d_name = "/cpu:0"

# Build the graph on the selected device: sum of (M x M^T) for a random matrix M.

with tf.device(d_name):

    ran_matrix = tf.random_uniform(shape=shape, minval=0, maxval=1)

    d_operation = tf.matmul(ran_matrix, tf.transpose(ran_matrix))

    sum_op = tf.reduce_sum(d_operation)

start = datetime.now()

# log_device_placement=True prints the device each operation is assigned to.

with tf.Session(config=tf.ConfigProto(log_device_placement=True)) as session:

    res = session.run(sum_op)

    print(res)

# Blank lines separate the summary from the placement log.

print("\n" * 6)

print("Shape:", shape, "Device:", d_name)

print("Time done:", datetime.now() - start)

print("\n" * 6)

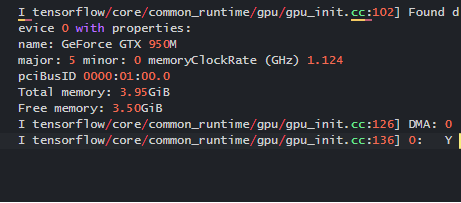

Explanation

To run the program on the GPU with a 1500 x 1500 matrix:

python name1.py gpu 1500

Output:

OpenCL Acceleration for TensorFlow

OpenCL allows a wide range of accelerators to be used, including multi-core CPUs, GPUs, DSPs, FPGAs, and specialized hardware such as inferencing engines. An OpenCL system is divided into host and device components. The host software is written in a general-purpose programming language such as C or C++ and compiled for the host CPU with a standard compiler. Porting TensorFlow directly to OpenCL would require writing the kernels in OpenCL C and keeping separate codebases, both of which would be difficult to maintain. With SYCL, everything is single-source C++, so the SYCL back-end can be integrated into TensorFlow in a non-intrusive way.

The following sample shows how the Sqrt kernel is registered for each device:

namespace tensorflow {

REG5(UnaryOp, CPU, "Sqrt", functor::sqrt, float, Eigen::half, double,

complex64, complex128);

#if GOOGLE_CUDA

REG3(UnaryOp, GPU, "Sqrt", functor::sqrt, float, Eigen::half, double);

#endif

#ifdef TENSORFLOW_USE_SYCL

REG2(UnaryOp, SYCL, "Sqrt", functor::sqrt, float, double);

#endif

}
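To make the idea behind these macros concrete, here is a toy Python model of per-device kernel registration. It is an illustration only, not TensorFlow’s registration mechanism: the `register` and `supports` helpers are hypothetical, and only the op name, device names, and type lists are taken from the C++ sample.

```python
# Maps (op name, device) to the set of element types a kernel is registered for.
KERNELS = {}


def register(op, device, *dtypes):
    """Record that `op` has a kernel on `device` for each of `dtypes`."""
    KERNELS.setdefault((op, device), set()).update(dtypes)


def supports(op, device, dtype):
    """Return True if a kernel for (op, device, dtype) has been registered."""
    return dtype in KERNELS.get((op, device), set())


# Mirrors the three registrations in the C++ sample above.
register("Sqrt", "CPU", "float", "half", "double", "complex64", "complex128")
register("Sqrt", "GPU", "float", "half", "double")
register("Sqrt", "SYCL", "float", "double")

if __name__ == "__main__":
    print(supports("Sqrt", "SYCL", "float"))
    print(supports("Sqrt", "SYCL", "half"))
```

In this model, Sqrt on the SYCL device is available for float and double but not for half, matching the shorter type list in the REG2 line.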

Conclusion

In general, OpenCL is a successful standard. It contains all of the necessary parts, namely run-time code generation and sufficient support for heterogeneous computing. In this article, we have seen how TensorFlow can be built and run on OpenCL.
