Updated April 20, 2023

Definition of TensorFlow Probability

TensorFlow provides many kinds of functionality to the user, and TensorFlow Probability (TFP) is one of the libraries in the TensorFlow ecosystem. TFP is a Python library built on TensorFlow that makes it easy to combine probabilistic models with deep learning models on modern hardware such as TPUs and GPUs. The library is useful for anyone who wants to encode domain knowledge to understand data and make predictions, such as data scientists, statisticians, ML researchers, and students. Because TFP inherits the benefits of TensorFlow, a model can be built, fit, and deployed using a single language.

What is TensorFlow Probability?

TensorFlow Probability is a library for probabilistic reasoning and statistical analysis. As part of the TensorFlow ecosystem, it provides integration of probabilistic methods with deep networks, gradient-based inference via automatic differentiation, and scalability to large datasets and models through hardware acceleration (for example, GPUs) and distributed computation.

It is a library for statistical computation and probabilistic modeling built on top of TensorFlow. Its building blocks include a wide selection of probability distributions and invertible transformations (bijectors), probabilistic layers that can be used in Keras models, and tools for probabilistic inference, including variational inference and Markov chain Monte Carlo.

TensorFlow Probability has several advantages; some of them are listed below.

1. It offers a wide selection of probability distributions and bijectors.

2. It provides tools to build deep probabilistic models, including probabilistic layers and a ‘JointDistribution’ abstraction.

3. It supports variational inference and Markov chain Monte Carlo.

4. It includes optimizers such as Nelder-Mead, BFGS, and SGLD.

How does TensorFlow Probability work?

TensorFlow Probability is organized into four layers, as follows.

Layer 0: TensorFlow

Numerical operations, in particular the LinearOperator class, enable matrix-free implementations that can exploit special structure (diagonal, low-rank, and so on) for efficient computation. The class is built and maintained by the TensorFlow Probability team and is part of tf.linalg in core TensorFlow.
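As a small illustration of the matrix-free idea, the sketch below (assuming TensorFlow 2.x; the diagonal and vector values are made up) builds a diagonal LinearOperator that stores only three numbers, yet still supports a matrix-vector product and a log-determinant without forming the dense 3x3 matrix.

```python
import tensorflow as tf

# A diagonal operator stores only its diagonal (3 numbers), not a dense 3x3 matrix.
diag = tf.constant([1.0, 2.0, 3.0])
operator = tf.linalg.LinearOperatorDiag(diag)

x = tf.constant([4.0, 5.0, 6.0])

# Matrix-vector product computed as an elementwise diag * x, with no dense matmul.
y = operator.matvec(x)  # y holds [4., 10., 18.]

# log|det| is computed directly from the diagonal: log(1) + log(2) + log(3).
log_det = operator.log_abs_determinant()

# Materialize the dense matrix only when it is actually needed.
dense = operator.to_dense()

print(y.numpy(), log_det.numpy())
```

Other structured operators in tf.linalg (for example, low-rank updates) follow the same interface, which is what lets TFP code stay agnostic to the underlying matrix structure.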

Layer 1: Statistical Building Blocks

Distributions (tfp.distributions): A large collection of probability distributions and related statistics with batch and broadcasting semantics.

Bijectors (tfp.bijectors): Reversible and composable transformations of random variables. Bijectors provide a rich class of transformed distributions, from classical examples like the log-normal distribution to sophisticated deep learning models such as masked autoregressive flows.

Layer 2: Model Building

Joint Distributions (e.g., tfp.distributions.JointDistributionSequential): Joint distributions over one or more possibly interdependent distributions. For an introduction to modeling with TFP’s JointDistributions, check out this colab.

Probabilistic Layers (tfp.layers): Neural network layers with uncertainty over the functions they represent, extending TensorFlow layers.

Layer 3: Probabilistic Inference

Markov chain Monte Carlo (tfp.mcmc): Algorithms for approximating integrals via sampling. Includes Hamiltonian Monte Carlo, random-walk Metropolis-Hastings, and the ability to build custom transition kernels.

Variational Inference (tfp.vi): Algorithms for approximating integrals through optimization.

Optimizers (tfp.optimizer): Stochastic optimization methods that extend TensorFlow Optimizers. Includes Stochastic Gradient Langevin Dynamics.

Monte Carlo (tfp.monte_carlo): Tools for computing Monte Carlo expectations.

TensorFlow Probability is under active development, and its interfaces may change.

It also works with JAX: TensorFlow Probability (TFP) is a library for probabilistic reasoning and statistical analysis that now also works on JAX. For those not familiar with it, JAX is a library for accelerated numerical computing based on composable function transformations. TFP on JAX supports much of the most useful functionality of regular TFP while preserving the abstractions and APIs that many TFP users are already comfortable with.

Now let’s look at the TensorFlow Probability anomaly detection API.

TensorFlow Probability has a library of APIs for Structural Time Series (STS), a class of Bayesian statistical models that decompose a time series into interpretable seasonal and trend components. Previously, users had to define the trend and seasonal components of their models by hand. For instance, the STS demo uses a model with a local linear trend and a month-of-year seasonal effect to forecast CO2 concentration. As part of a related student project, the team built a new anomaly detection API in which these components are inferred from the input time series.

Installing TensorFlow Probability

There are three ways to install TensorFlow Probability, as follows.

The first way is to use stable builds. This option depends on the current stable release of TensorFlow, and we can install the package with the pip command:

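pip install --upgrade tensorflow-probability

Depending on your system, you may need to use pip3 instead of pip. The tensorflow-probability package does not list TensorFlow as a dependency, so TensorFlow itself must be installed separately.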

The second way is to use nightly builds. The pip package tfp-nightly depends on either tf-nightly or tf-nightly-gpu. Nightly builds include newer features but may be less stable than the versioned releases.

The third way is to install from source, using the Bazel build system.

sudo apt-get install bazel git python-pip

python -m pip install --upgrade --user tf-nightly

Examples of TensorFlow Probability

Now let’s look at an example for a better understanding.

Code:

import tensorflow as tf_obj

import tensorflow_probability as tfp_obj

tf_obj.enable_eager_execution()

print(tf_obj.__version__)

feat = tfp_obj.distributions.Normal(loc=0., scale=1.).sample(int(100e3))

lab = tfp_obj.distributions.Bernoulli(logits=1.618 * feat).sample()

model_demo = tfp_obj.glm.Bernoulli()

coef, linear_response, is_converged, num_iter = tfp_obj.glm.fit(

model_demo_matrix=feat[:, tf_obj.newaxis],

res=tf_obj.cast(lab, dtype=tf_obj.float32),

model_demo=model_demo)

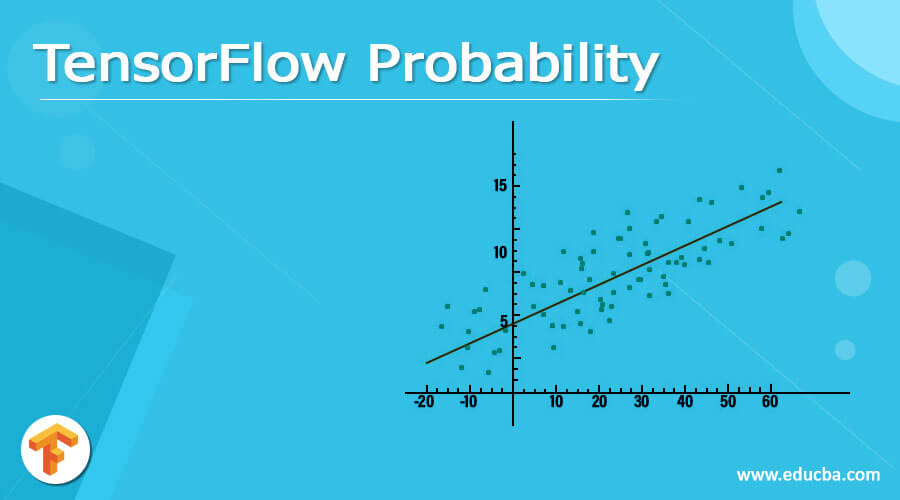

print(coef)Explanation

In the above example, we try to implement the TensorFlow probability, in this example first, we import the all required standard libraries as shown, after that, we load the synthetic data set and also we define the model. The final output of the above program we illustrated by using the following screenshot as follows.

Conclusion

We hope this article helped you learn more about TensorFlow Probability. We covered the essential ideas behind TensorFlow Probability, saw how it is used through an example, and learned how and when to use it.

Recommended Articles

We hope that this EDUCBA information on “TensorFlow Probability” was beneficial to you. You can view EDUCBA’s recommended articles for more information.