Updated March 23, 2023

Introduction to TensorFlow RNN

RNN (as in TensorFlow RNN) stands for Recurrent Neural Network. This kind of neural network is known for remembering the output of the previous step and using it as an input to the next step. In other neural networks, the inputs and outputs of the hidden layers are independent of each other. An RNN, however, contains a memory (its hidden state) that saves the output of each step and feeds it back as an input to the next step. This is achieved by reusing the single hidden layer of the RNN at every step.
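To make the idea of a memory concrete, here is a minimal NumPy sketch of a plain RNN forward pass. It assumes the common formulation h_t = tanh(x_t W_x + h_{t-1} W_h + b). The function name `rnn_forward` and the weight names are illustrative and are not TensorFlow APIs. The same weights are reused at every step, and the hidden state `h` carries information from one step to the next.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a simple (Elman-style) RNN over one sequence.

    inputs: shape (timesteps, input_dim)
    W_x:    input-to-hidden weights, shape (input_dim, units)
    W_h:    hidden-to-hidden (recurrent) weights, shape (units, units)
    b:      bias, shape (units,)
    Returns the hidden state after every step, shape (timesteps, units).
    """
    units = W_h.shape[0]
    h = np.zeros(units)                 # the memory starts empty
    states = []
    for x_t in inputs:                  # one step per element of the sequence
        # The previous output h is fed back in together with the new input x_t.
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
seq = rng.normal(size=(5, 3))           # 5 time steps, 3 features each
W_x = rng.normal(size=(3, 4))
W_h = rng.normal(size=(4, 4))
b = np.zeros(4)
hidden = rnn_forward(seq, W_x, W_h, b)
print(hidden.shape)  # (5, 4)
```

Changing an early input changes every later hidden state, because each state depends on the one before it.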

How Does RNN Work in TensorFlow?

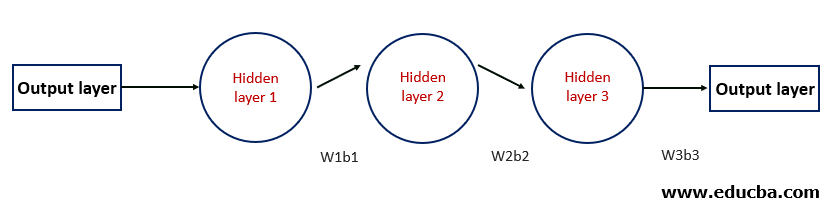

The figure above represents a neural network with three hidden layers. Each layer has its own weights (w) and biases (b): w1, w2 and w3 are the weights, and b1, b2 and b3 are the biases. The weights and biases of each hidden layer are independent of each other, so it is difficult for this network to remember a particular state. RNNs are used to solve this problem of distributed weights and biases and to remember the state.

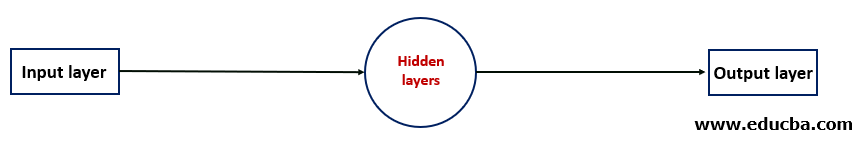

The figure above shows an RNN with an input layer, an output layer and multiple hidden layers between them. The RNN combines these hidden layers into a single recurrent layer. Instead of giving every hidden layer its own independent weights and biases, it applies the same weights and biases at every step. This single layer helps the network memorize the previous state and reduces the complexity of the network.
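Weight sharing can be checked directly in Keras: the number of trainable parameters in a recurrent layer does not depend on the length of the sequence. The sketch below uses `tf.keras.layers.SimpleRNN` with 4 units reading 3 features per step. The sizes are arbitrary choices, and the helper `count_rnn_params` is hypothetical. The layer should have 3 × 4 input weights, 4 × 4 recurrent weights and 4 biases, which is 32 parameters for both a short and a long sequence.

```python
import tensorflow as tf

def count_rnn_params(timesteps, features=3, units=4):
    """Build a fresh SimpleRNN layer on a dummy batch and count its parameters."""
    layer = tf.keras.layers.SimpleRNN(units)
    # Calling the layer once builds its weights for the given input shape.
    layer(tf.zeros((1, timesteps, features)))
    return layer.count_params()

print(count_rnn_params(5))    # 5-step sequences
print(count_rnn_params(50))   # 50-step sequences: same weights, same count
```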

RNN in TensorFlow

RNNs are generally used for two kinds of problems: time series problems and natural language processing problems. In the implementation of an RNN in TensorFlow below, we use the LSTM (Long Short-Term Memory) layer.
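The sketch below shows what a Keras LSTM layer returns for a toy batch of 2 sequences, each with 5 time steps and 3 features. The unit count of 8 is an arbitrary assumption. By default, the layer returns only the output of the last time step. With `return_sequences=True` it returns one output per step, which is what a following LSTM layer needs when LSTMs are stacked. With `return_state=True` it also returns the final hidden state `h` and cell state `c`.

```python
import numpy as np
import tensorflow as tf

# A toy batch: 2 sequences, 5 time steps, 3 features per step.
x = np.random.rand(2, 5, 3).astype("float32")

# Default: only the output of the last time step.
last_only = tf.keras.layers.LSTM(8)(x)

# return_sequences=True: one output per time step (needed for stacking LSTMs).
per_step = tf.keras.layers.LSTM(8, return_sequences=True)(x)

# return_state=True: also return the final hidden state h and cell state c.
output, h, c = tf.keras.layers.LSTM(8, return_state=True)(x)

print(last_only.shape)   # (2, 8)
print(per_step.shape)    # (2, 5, 8)
print(h.shape, c.shape)  # (2, 8) (2, 8)
```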

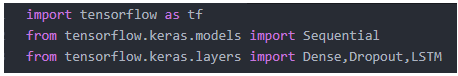

Let’s start with the imports.

We import TensorFlow, the Sequential model from tensorflow.keras.models, and the Dense, Dropout and LSTM layers from tensorflow.keras.layers to build our model.
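The imports described above can be written out as follows. This is a reconstruction from the text, because the original code appears only as an image.

```python
# Imports reconstructed from the description above.
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, LSTM

print(tf.__version__)  # the installed TensorFlow version
```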

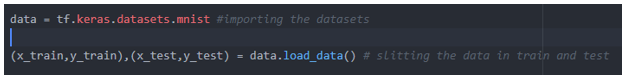

We will use a Sequential model and build it from Dense, Dropout and LSTM layers. The data comes from a built-in dataset provided by the TensorFlow library.

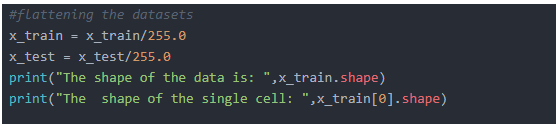

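Since the data is a collection of images, it needs some pre-processing before training, and we check its dimensions. For an LSTM, each image is read as a sequence of rows (time steps), with the pixel values of each row as features. The images are therefore not flattened into single vectors.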

The shape of the training data is shown below: it consists of 60,000 images, each 28 × 28 pixels.
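Here is a minimal sketch of loading and pre-processing the data. It assumes the built-in dataset is MNIST (`tf.keras.datasets.mnist`), which matches the 60,000 training images of 28 × 28 pixels described above. Scaling the pixel values to [0, 1] is a common pre-processing step and is also an assumption here. The helper names `load_mnist` and `preprocess` are hypothetical.

```python
import numpy as np
import tensorflow as tf

def load_mnist():
    """Load the built-in dataset (assumed to be MNIST).

    load_data() downloads the data on first use and returns uint8 images
    of shape (N, 28, 28) together with integer labels 0-9.
    """
    return tf.keras.datasets.mnist.load_data()

def preprocess(images):
    """Scale pixel values from [0, 255] to [0.0, 1.0] as float32.

    The (N, 28, 28) shape is kept: the LSTM reads each image as 28 time
    steps (rows) with 28 features (pixels) each.
    """
    return np.asarray(images).astype("float32") / 255.0

# Usage (downloads the data):
# (x_train, y_train), (x_test, y_test) = load_mnist()
# x_train, x_test = preprocess(x_train), preprocess(x_test)
# print(x_train.shape)  # (60000, 28, 28)
```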

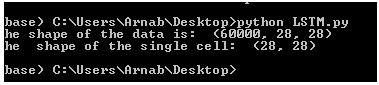

Defining the Structure of the model

The model consists of two LSTM layers and two Dense layers. We use ReLU (rectified linear unit) as the activation function for both LSTM layers and the first Dense layer. We use softmax as the activation function for the last Dense layer.
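The structure described above might be written as follows. The layer types, the Dropout layers and the activations follow the text. Because the original code is only shown in an image, the following are our own assumptions: the unit counts (128, 128, 32), the Dropout rates, `return_sequences=True` on the first LSTM (needed so the second LSTM receives one input per time step) and the helper name `build_model`.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, LSTM

def build_model(timesteps=28, features=28, num_classes=10):
    # Unit counts and dropout rates are illustrative assumptions.
    return Sequential([
        tf.keras.Input(shape=(timesteps, features)),
        # First LSTM returns the whole sequence for the second LSTM.
        LSTM(128, activation="relu", return_sequences=True),
        Dropout(0.2),
        # Second LSTM returns only its final output.
        LSTM(128, activation="relu"),
        Dropout(0.1),
        Dense(32, activation="relu"),
        Dropout(0.2),
        # Softmax turns the outputs into class probabilities.
        Dense(num_classes, activation="softmax"),
    ])

model = build_model()
model.summary()
probs = model.predict(np.zeros((1, 28, 28), dtype="float32"), verbose=0)
print(probs.shape)  # (1, 10)
```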

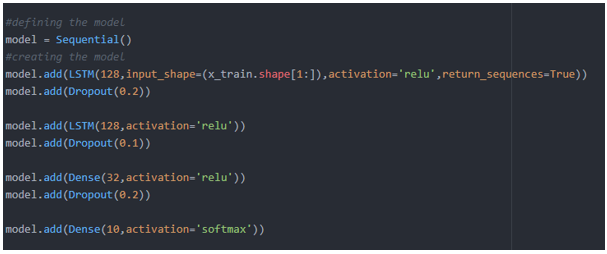

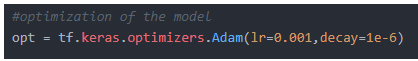

Optimization

We will use the Adam optimizer to optimize the model.
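In Keras, Adam is provided by `tf.keras.optimizers.Adam`. For intuition, the NumPy sketch below spells out the standard Adam update rule (Kingma and Ba) on a toy one-parameter problem: minimizing (w − 3)². The helper `adam_minimize` is hypothetical. beta1, beta2 and epsilon use common default values. The learning rate of 0.1 is chosen only so the toy problem converges quickly.

```python
import numpy as np

def adam_minimize(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-7, steps=500):
    """Minimize a function with the Adam update rule, given its gradient."""
    w = np.asarray(w0, dtype=float)
    m = np.zeros_like(w)   # running average of gradients (first moment)
    v = np.zeros_like(w)   # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)   # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

# Toy problem: minimize (w - 3)^2, whose gradient is 2 * (w - 3).
w_opt = adam_minimize(lambda w: 2 * (w - 3.0), w0=0.0)
print(w_opt)  # close to 3
```

In the Keras model, you pass the optimizer object (for example `tf.keras.optimizers.Adam(learning_rate=0.001)`) to `model.compile` instead of writing this loop yourself.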

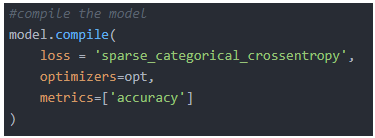

Compilation

During compilation, we use sparse categorical cross-entropy (sparse_categorical_crossentropy) as the loss function.
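The sketch below shows what this loss computes and how it is passed to `compile`. "Sparse" means the labels are integer class indices rather than one-hot vectors. The per-sample loss is the negative log of the probability the model assigns to the true class. The probabilities are made up, and the small LSTM model is only a placeholder to show the `compile` call. The learning rate of 0.001 is an assumption.

```python
import numpy as np
import tensorflow as tf

# Integer class labels (not one-hot): this is what "sparse" refers to.
y_true = np.array([1, 2])
# Made-up predicted probabilities for 2 samples over 3 classes.
y_pred = np.array([[0.1, 0.8, 0.1],
                   [0.2, 0.2, 0.6]], dtype="float32")

# Per-sample loss = -log(probability of the true class).
loss = tf.keras.losses.sparse_categorical_crossentropy(y_true, y_pred)
print(np.asarray(loss))  # approximately [0.223, 0.511]

# Compiling a small placeholder model with the same loss and the Adam optimizer.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.LSTM(16),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)
```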

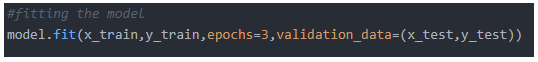

Once the model is built and compiled, we fit it for 3 epochs for now.
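The fitting step can be sketched as below. To keep the example self-contained and quick, it uses random stand-in data with the same 28 × 28 shape instead of the real dataset, so the resulting accuracy is meaningless. The small model and `batch_size=32` are assumptions. `epochs=3` matches the text. `validation_data` makes Keras report test-set metrics after each epoch.

```python
import numpy as np
import tensorflow as tf

# Random stand-in data shaped like 28 x 28 images with labels 0-9.
rng = np.random.default_rng(0)
x_train = rng.random((64, 28, 28), dtype=np.float32)
y_train = rng.integers(0, 10, size=64)
x_test = rng.random((16, 28, 28), dtype=np.float32)
y_test = rng.integers(0, 10, size=16)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.LSTM(16),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Train for 3 epochs, evaluating on the held-out data after each one.
history = model.fit(x_train, y_train, epochs=3, batch_size=32,
                    validation_data=(x_test, y_test), verbose=0)
print(sorted(history.history.keys()))
```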

Final trained model

The final trained model achieves the following results:

Accuracy: 97%

Loss: 0.08
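For a model compiled with `loss="sparse_categorical_crossentropy"` and `metrics=["accuracy"]`, `model.evaluate(x_test, y_test)` returns these two numbers: the loss and the accuracy. The NumPy sketch below computes both by hand from made-up probabilities. It shows that accuracy is a fraction, usually reported as a percentage, whereas the cross-entropy loss is an unscaled number. The helper `accuracy_and_loss` is hypothetical.

```python
import numpy as np

def accuracy_and_loss(probs, labels):
    """Return (accuracy, mean sparse categorical cross-entropy)."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    predicted = probs.argmax(axis=1)                  # most likely class per sample
    accuracy = float(np.mean(predicted == labels))    # a fraction; x100 for a percentage
    true_class_prob = probs[np.arange(len(labels)), labels]
    loss = float(np.mean(-np.log(true_class_prob)))   # unitless, not a percentage
    return accuracy, loss

probs = [[0.9, 0.05, 0.05],
         [0.1, 0.7, 0.2],
         [0.3, 0.3, 0.4]]
labels = [0, 1, 0]
acc, loss = accuracy_and_loss(probs, labels)
print(acc, loss)
```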

Advantages and disadvantages of RNN in TensorFlow

Below are the advantages and disadvantages of RNNs:

Advantages

- Because they remember information over a period of time, RNNs are very useful for designing chatbots and for time series analysis.

- RNNs can be combined with other neural networks, such as CNNs (convolutional neural networks), to improve performance and effectiveness.

- RNN in TensorFlow is a very powerful tool for designing or prototyping new kinds of recurrent networks (such as LSTM variants). Keras, the high-level API of TensorFlow, provides the tf.keras.layers.RNN layer, which handles the loop over time steps; the user only needs to define the mathematical logic of a single step.

- RNNs in TensorFlow are also easy to use thanks to built-in layers such as tf.keras.layers.RNN, tf.keras.layers.LSTM and tf.keras.layers.GRU.

- RNNs in TensorFlow are highly customizable, and we can use them to create and implement our own neural network architectures.
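The point about `tf.keras.layers.RNN` can be illustrated with a custom cell. The cell defines only the logic of one time step, and the `RNN` wrapper loops it over the sequence. The class name `MinimalRNNCell` and its tanh update rule are illustrative choices, and the initializers are assumptions. The cell follows the documented cell contract: a `state_size` attribute and a `call(inputs, states)` method that returns `(output, new_states)`.

```python
import numpy as np
import tensorflow as tf

class MinimalRNNCell(tf.keras.layers.Layer):
    """Hypothetical minimal cell: h_t = tanh(x_t W + h_{t-1} U + b)."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        self.units = units
        self.state_size = units        # size of the state carried between steps

    def build(self, input_shape):
        input_dim = input_shape[-1]
        self.kernel = self.add_weight(shape=(input_dim, self.units),
                                      initializer="glorot_uniform", name="kernel")
        self.recurrent_kernel = self.add_weight(shape=(self.units, self.units),
                                                initializer="orthogonal",
                                                name="recurrent_kernel")
        self.bias = self.add_weight(shape=(self.units,), initializer="zeros",
                                    name="bias")
        self.built = True

    def call(self, inputs, states):
        # Logic for ONE time step; tf.keras.layers.RNN applies it to every step.
        prev_h = states[0]
        h = tf.tanh(tf.matmul(inputs, self.kernel)
                    + tf.matmul(prev_h, self.recurrent_kernel) + self.bias)
        return h, [h]                  # (output for this step, new state)

layer = tf.keras.layers.RNN(MinimalRNNCell(4), return_sequences=True)
x = np.random.rand(2, 5, 3).astype("float32")   # batch of 2, 5 steps, 3 features
y = layer(x)
print(y.shape)  # (2, 5, 4)
```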

Disadvantages

- Although RNNs are useful for remembering sequences, a plain RNN has trouble learning from very long sequences when tanh or relu is used as the activation function. This is because the gradients tend to vanish or explode as they are propagated back through many time steps.

- Training an RNN takes a lot of resources and can be difficult, so depending on the size of the data, it is advisable to train an RNN on a GPU instead of a CPU.
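The long-sequence problem is commonly explained in terms of vanishing and exploding gradients. The scalar example below illustrates it. It uses the recurrence h_t = act(w · h_{t−1} + x), and the hypothetical helper `gradient_through_time` computes dh_T/dh_0 by the chain rule, as a product of one factor per time step. With tanh and w = 0.5, every factor is at most 0.5, so the gradient shrinks towards zero. With relu and w = 1.5 on positive values, every factor is 1.5, so the gradient grows without bound. The weights, starting state and sequence length are arbitrary choices.

```python
import math

def gradient_through_time(w, steps, activation="tanh", h0=0.5, x=0.0):
    """Return dh_T/dh_0 for the scalar recurrence h_t = act(w * h_{t-1} + x).

    By the chain rule, this is the product over steps of w * act'(w * h_{t-1} + x),
    which is what backpropagation through time multiplies together.
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        pre = w * h + x
        if activation == "tanh":
            h = math.tanh(pre)
            local = 1.0 - h * h          # derivative of tanh at pre
        elif activation == "relu":
            h = max(pre, 0.0)
            local = 1.0 if pre > 0 else 0.0
        else:
            raise ValueError(f"unknown activation: {activation}")
        grad *= w * local
    return grad

print(gradient_through_time(0.5, 50, "tanh"))   # vanishes: at most 0.5**50
print(gradient_through_time(1.5, 50, "relu"))   # explodes: about 1.5**50
```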

Conclusion

In this article, we covered the basics of RNNs and how an RNN is implemented in TensorFlow. RNNs can be combined with other neural networks to design many different kinds of neural networks. Because of their ability to remember previous inputs, they are a basic stepping stone for developing chatbots.
