Updated March 15, 2023

Introduction to TensorFlow shape

TensorFlow provides many kinds of functionality to the user, and shape is one of them. A TensorFlow shape describes how many elements are present in each dimension of a tensor. TensorFlow automatically infers the dimensions of each tensor’s shape while the graph is being constructed. The rank of a shape may be known or unknown. Even when the rank is known, the size of each dimension may still be known or unknown. TensorFlow describes shapes in two ways. The static shape is known when the graph is built, and the dynamic shape is known only when the graph runs. Both come into play when the different nodes of a graph are connected.

What is TensorFlow shape?

Complications emerge when we dig deeper into TensorFlow’s peculiarities and discover that there is no single constraint on how the shape of a tensor is defined. TensorFlow allows us to represent the shape of a tensor in 3 different ways:

- Fully known shape: the simplest case, in which we know the rank and the size of each dimension.

- Partially known shape: in this case, we know the rank, but the size of at least one dimension is unknown. Everybody who has trained a model in batches knows this case. When defining the input, we specify only the feature vector shape and leave the batch dimension set to None, e.g. (None, 27, 29, 4).

- Unknown shape and known rank: in this case, we know the rank of the tensor, but we don’t know any of the dimension values, e.g. (None, None, None).
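The three cases can be illustrated with tf.TensorShape. This is a minimal sketch assuming TensorFlow 2.x. The concrete sizes are only illustrative: a batch size of 32 and the (27, 29, 4) feature shape from the example above.

```python
import tensorflow as tf

# Fully known shape: the rank and every dimension size are known.
fully_known = tf.TensorShape([32, 27, 29, 4])

# Partially known shape: the rank is known, but the batch dimension is not.
partially_known = tf.TensorShape([None, 27, 29, 4])

# Unknown shape, known rank: rank 3, but no dimension size is known.
unknown_dims = tf.TensorShape([None, None, None])

for name, shape in [("fully known", fully_known),
                    ("partially known", partially_known),
                    ("unknown dims, known rank", unknown_dims)]:
    print(name, shape, "rank:", shape.rank,
          "fully defined:", shape.is_fully_defined())
```

Only the first shape reports is_fully_defined() as True. All three still report a known rank.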

TensorFlow, when used in its non-eager (graph) mode, separates the graph definition from the graph execution. This allows us to first define the relationships among nodes and only afterward execute the graph.

When we define an ML model (the reasoning holds for a generic computational graph as well), we define the network parameters completely. For example, the bias vector shape is fully defined, as are the number of convolutional filters and their shape. Hence, we are in the case of a fully known shape definition. At graph execution time, however, the relationships among tensors can be extremely dynamic. This applies to the tensors themselves and not to the network parameters, which remain constant.
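A minimal sketch of this distinction follows, assuming TensorFlow 2.x and its tf.compat.v1 graph/session API. The weight matrix stands in for a network parameter and the placeholder stands in for the input batch. The names weights and inputs and the sizes 3 and 4 are hypothetical.

```python
import numpy as np
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    # Network parameter: its shape is fully defined when the graph is built.
    weights = tf.Variable(tf.ones([3, 4]), name="weights")
    # Input batch: the batch size is left as None, so the shape is partially known.
    inputs = tf.compat.v1.placeholder(tf.float32, shape=[None, 3], name="inputs")
    outputs = tf.matmul(inputs, weights)
    # Dynamic shape of the result, computed only when the graph runs.
    dynamic_shape = tf.shape(outputs)

# Static shapes are available at graph definition time, before any execution.
print("weights static shape:", weights.shape)
print("outputs static shape:", outputs.shape)

dynamic = {}
with tf.compat.v1.Session(graph=graph) as sess:
    sess.run(weights.initializer)
    for batch in (2, 5):
        feed = {inputs: np.zeros((batch, 3), dtype=np.float32)}
        dynamic[batch] = sess.run(dynamic_shape, feed_dict=feed).tolist()
print("outputs dynamic shapes:", dynamic)
```

The static shape of outputs keeps None in the batch position. The dynamic shape changes with each batch fed at run time.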

TensorFlow shape function

Now let’s look at the shape function in TensorFlow.

The shape is the number of elements in each dimension. For example, a scalar has rank 0 and an empty shape (), a vector has rank 1 and a shape of (D0), a matrix has rank 2 and a shape of (D0, D1), and so on.
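The rank/shape correspondence can be checked directly. This is a small sketch assuming TensorFlow 2.x with eager execution, which is the default. The tensor values are arbitrary.

```python
import tensorflow as tf

scalar = tf.constant(3.0)                        # rank 0, shape ()
vector = tf.constant([1.0, 2.0, 3.0])            # rank 1, shape (3,)
matrix = tf.constant([[1, 2], [3, 4], [5, 6]])   # rank 2, shape (3, 2)

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    # t.shape is the static TensorShape; tf.rank returns the rank as a tensor.
    print(name, "rank:", int(tf.rank(t)), "shape:", t.shape)
```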

Syntax

tf.shape(input, out_type=tf.int32, name=None)

Explanation

The syntax above takes three parameters:

- input: the tensor whose shape we want.
- out_type: the data type of the returned shape. It is tf.int32 by default, and tf.int64 is also accepted.
- name: an optional name for the operation.
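Here is a short usage sketch, assuming TensorFlow 2.x in eager mode, where tf.shape returns a concrete tensor immediately. The input tensor and the operation name x_shape are arbitrary.

```python
import tensorflow as tf

x = tf.zeros([2, 3, 4])

# Default out_type: the shape comes back as an int32 tensor.
shape_32 = tf.shape(x)
# Request a 64-bit result and name the op (the name matters mainly in graph mode).
shape_64 = tf.shape(x, out_type=tf.int64, name="x_shape")

print(shape_32.dtype, shape_32.numpy())
print(shape_64.dtype, shape_64.numpy())
```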

Example

Now let’s see an example that creates tensors of a given shape.

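import tensorflow as tflow

# Use the TF1-style graph and session API (available in TF2 through tf.compat.v1)
tflow.compat.v1.disable_eager_execution()
tflow.compat.v1.reset_default_graph()

ses = tflow.compat.v1.Session()

# A constant tensor of zeros with shape [1, 20]
t = tflow.zeros([1, 20])
print(ses.run(t))

# A variable initialized with a tensor of zeros of the same shape;
# it must be initialized before its value can be read
r = tflow.Variable(tflow.zeros([1, 20]))
ses.run(r.initializer)
print(ses.run(r))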

Explanation

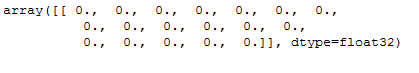

In the above example, we first import the TensorFlow library. After that, we start a session and use the tf.zeros() function to create a tensor of zeros with shape [1, 20]. This tensor is used first as a constant and then as the initial value of a variable, which must be initialized before it is read. The final output of the program is shown in the screenshot above.

TensorFlow shape method

Tensor.get_shape method: this returns the static shape, which is inferred from the operations that were used to create the tensor and may be only partially complete. If the static shape is not fully defined, the dynamic shape of a Tensor t can be determined by evaluating tf.shape(t).
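Inside a tf.function whose input signature leaves the first dimension unspecified, the two shapes visibly differ. This sketch assumes TensorFlow 2.x. The function name describe and the [None, 3] signature are hypothetical. The static shape is only available while the function is traced, so it is collected in a Python list as a side effect of tracing.

```python
import tensorflow as tf

static_shapes = []

@tf.function(input_signature=[tf.TensorSpec(shape=[None, 3], dtype=tf.float32)])
def describe(t):
    # Static shape: inferred while tracing; the first dimension is unknown.
    static_shapes.append(t.get_shape().as_list())
    # Dynamic shape: computed from the actual value at run time.
    return tf.shape(t)

first = describe(tf.zeros([4, 3])).numpy().tolist()
second = describe(tf.zeros([7, 3])).numpy().tolist()
print("static:", static_shapes[0], "dynamic:", first, second)
```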

Example

Now let’s see how we can create tensors of a specified shape.

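import tensorflow as tflow

# Use the TF1-style graph and session API (available in TF2 through tf.compat.v1)
tflow.compat.v1.disable_eager_execution()
tflow.compat.v1.reset_default_graph()

ses = tflow.compat.v1.Session()

# Number of rows and columns of the matrices
r_d = 3
c_d = 3

# Variables holding a 3 x 3 matrix of zeros and a 3 x 3 matrix of ones
z_v = tflow.Variable(tflow.zeros([r_d, c_d]))
o_v = tflow.Variable(tflow.ones([r_d, c_d]))

# Each variable must be initialized before its value can be read
ses.run(z_v.initializer)
ses.run(o_v.initializer)

print(ses.run(z_v))
print(ses.run(o_v))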

Explanation

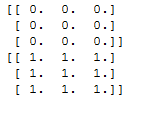

In the above example, we create tensors with the specific shape we want. Here, we generate two 3 by 3 matrices, one filled with zeros and one filled with ones. The final output of the program is shown in the screenshot above.

Elements of TensorFlow shape

Now let’s see which elements we need to work with shapes.

First, we need to import the TensorFlow library.

After that, we need to start a session to run the graph, that is, the computational graph.

Next, provide the values that define the shape, for example with the tf.zeros() function. TensorFlow algorithms need to know which objects are variables and which are constants, so we create a variable using the TensorFlow function tf.Variable(). Note that you cannot call session.run(variable) before the variable has been initialized, because this results in an error. TensorFlow works with computational graphs, so we need to run a variable initialization operation before we can evaluate variables. In this script, we initialize one variable at a time by running its initializer attribute, variable.initializer.
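The initialization rule can be seen in a short sketch, assuming TensorFlow 2.x and tf.compat.v1. The variable names mirror the 3 by 3 example above. tf.compat.v1.global_variables_initializer() is shown as an alternative to initializing each variable in turn. Reading a variable before initialization is expected to raise an error. The sketch catches the generic tf.errors.OpError rather than assuming the exact subclass.

```python
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    z_v = tf.Variable(tf.zeros([3, 3]))
    o_v = tf.Variable(tf.ones([3, 3]))
    # One op that initializes every global variable in this graph.
    init_all = tf.compat.v1.global_variables_initializer()

with tf.compat.v1.Session(graph=graph) as sess:
    try:
        sess.run(z_v)  # reading before initialization fails
        read_before_init = True
    except tf.errors.OpError as err:
        read_before_init = False
        print("error before initialization:", type(err).__name__)

    # Option 1: initialize one variable at a time.
    sess.run(z_v.initializer)
    # Option 2: initialize all global variables at once.
    sess.run(init_all)

    zeros_value = sess.run(z_v)
    ones_value = sess.run(o_v)

print(zeros_value)
print(ones_value)
```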

- Network architecture

This network accepts as input an image of any depth and any spatial extent (height, width). I will use this network architecture to show you several ideas. These are the concepts of static and dynamic shapes, how much information about the shapes of the tensors and the network parameters we can get, and how to use that information at both graph definition time and execution time.

- Dynamic input

When we feed a value into the placeholder at graph execution time, TensorFlow checks that the value is compatible with the placeholder’s predefined shape, in particular its rank. It then leaves us the task of dynamically checking whether the passed value is something we are able to use. This means that we have 2 distinct shapes for the input placeholder:

- a static shape that is known at graph definition time
- a dynamic shape that will be known only at graph execution time

tf.shape(inputs_) returns a 1-D integer tensor representing the dynamic shape of inputs_.
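A sketch of the two shapes of the input placeholder follows, assuming TensorFlow 2.x with tf.compat.v1. The placeholder name inputs_ follows the text. The fully unknown (None, None, None, None) shape and the image sizes are assumptions, chosen to match an input with any batch size, spatial extent, and depth. The last run feeds a rank-3 array to show that the session rejects a value whose rank does not match.

```python
import numpy as np
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    # Rank 4 is fixed (batch, height, width, depth); every size is unknown.
    inputs_ = tf.compat.v1.placeholder(tf.float32, shape=(None, None, None, None))
    static_shape = inputs_.get_shape().as_list()
    # 1-D int32 tensor holding the dynamic shape, evaluated at run time.
    dynamic_shape = tf.shape(inputs_)

print("static:", static_shape)

runs = []
with tf.compat.v1.Session(graph=graph) as sess:
    for dims in [(1, 32, 32, 3), (2, 64, 48, 1)]:
        image = np.zeros(dims, dtype=np.float32)
        runs.append(sess.run(dynamic_shape, feed_dict={inputs_: image}).tolist())
    print("dynamic:", runs)

    # A rank-3 value is incompatible with the rank-4 placeholder and is rejected.
    try:
        sess.run(dynamic_shape, feed_dict={inputs_: np.zeros((32, 32, 3), np.float32)})
        rank_checked = False
    except ValueError:
        rank_checked = True
```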

Conclusion

We hope this article helped you learn more about TensorFlow shape. In this article, we covered the essential idea of TensorFlow shape and saw how shapes are represented, with examples. We also learned how and when to use TensorFlow shapes.
