Updated April 13, 2023

Introduction to torch.nn Module

PyTorch is a framework for building and training neural networks. Its two core features are tensors, which are multi-dimensional arrays that can run on a GPU, and a computational graph that supports automatic differentiation. The torch.nn module provides high-level APIs for building neural networks. It relies on tensors and automatic differentiation to define and train layers such as the input, hidden, and output layers.

Modules and Classes in torch.nn Module

PyTorch provides the base class torch.nn.Module, which wraps parameters, functions, and layers. Any deep learning model is written as a subclass of torch.nn.Module and implements a forward(input) method that returns the output. A simple neural network takes an input, applies weights and biases to it, feeds it through one or more hidden layers, and finally returns the output. Here we briefly look at the classes and modules provided by torch.nn.
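As a rough illustration of the subclassing pattern described above, the sketch below defines a small model with one hidden layer. The class name `TinyNet` and the layer sizes are made up for this example.

```python
import torch
from torch import nn


class TinyNet(nn.Module):  # hypothetical example model
    def __init__(self, in_features=4, hidden=8, out_features=2):
        super().__init__()
        # Each nn.Linear holds its own weight and bias parameters.
        self.hidden = nn.Linear(in_features, hidden)
        self.output = nn.Linear(hidden, out_features)

    def forward(self, x):
        # Feed the input through the hidden layer, then the output layer.
        x = torch.relu(self.hidden(x))
        return self.output(x)


model = TinyNet()
batch = torch.randn(3, 4)  # 3 samples with 4 features each
result = model(batch)      # calling the module runs forward()
print(result.shape)
```

Calling `model(batch)` rather than `model.forward(batch)` is the usual convention, since it also runs any registered hooks.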

1. Parameters

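torch.nn.Parameter(data, requires_grad=True)

The torch.nn module provides the class torch.nn.Parameter, a subclass of Tensor. When a Parameter is assigned as an attribute of a Module, it is automatically added to the module's list of parameters and is returned by parameters(). A plain tensor assigned the same way is not registered, which is useful for caching temporary state such as the last hidden state of an RNN; a Parameter, in contrast, can hold a learnable initial state.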
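The registration behaviour can be checked with a short sketch. The module name `Scale` and its attributes are hypothetical and only serve this example.

```python
import torch
from torch import nn


class Scale(nn.Module):  # hypothetical module for illustration
    def __init__(self):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(3))  # registered as a parameter
        self.cache = torch.zeros(3)                # plain tensor, not registered

    def forward(self, x):
        return x * self.weight


scale = Scale()
names = [name for name, _ in scale.named_parameters()]
print(names)
```

Only `weight` shows up in `named_parameters()`, so only it would be passed to an optimizer built from `scale.parameters()`.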

2. Containers

Container classes are used to compose complex neural networks. All containers derive from torch.nn.Module, the base class for all neural network modules. Modules are serializable and can contain other modules, which nests them in a tree-like structure.

| Class_Name | Description |
| --- | --- |
| torch.nn.Module | The base class for all neural network models. |
| torch.nn.Sequential() | A sequential container that chains layers in order, for example to create a feed-forward network. |
| torch.nn.ModuleList() | Holds submodules in a list that can be indexed like a regular Python list. |
| torch.nn.ModuleDict() | Holds submodules in a dictionary that can be indexed like a regular Python dictionary. |
| torch.nn.ParameterList() | Holds parameters in a list that can be indexed like a regular Python list. |
| torch.nn.ParameterDict() | Holds parameters in a dictionary that can be indexed like a regular Python dictionary. |
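A minimal sketch of three of these containers follows. The layer sizes and the dictionary keys `"a"` and `"b"` are arbitrary choices for the example.

```python
import torch
from torch import nn

# Sequential: layers are applied in the order they are listed.
mlp = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
out = mlp(torch.randn(5, 4))

# ModuleList: an indexable list whose submodules are registered with the parent.
layers = nn.ModuleList([nn.Linear(4, 4) for _ in range(3)])

# ModuleDict: the same idea, keyed by name.
heads = nn.ModuleDict({"a": nn.Linear(4, 1), "b": nn.Linear(4, 2)})

x = torch.randn(5, 4)
for layer in layers:
    x = layer(x)
head_out = heads["b"](x)
print(out.shape, head_out.shape)
```

Unlike a plain Python list or dict, these containers make their contents visible to `parameters()`, `to()`, and `state_dict()`.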

3. Layers

PyTorch provides different modules in torch.nn to build neural network layers. Each trainable layer is configured through its respective class in torch.nn.

Convolution Layer

These torch.nn classes apply learned filters to an input, such as an image, to produce intermediate feature maps.

| Class_Name | Description |
| --- | --- |
| torch.nn.Conv1d() | Applies a 1-D convolution over an input signal composed of several input planes. |
| torch.nn.Conv2d() | Applies a 2-D convolution over an input signal composed of several input planes. |
| torch.nn.Conv3d() | Applies a 3-D convolution over an input signal composed of several input planes. |
| torch.nn.ConvTranspose1d() | Applies a 1-D transposed convolution over an input image composed of several input planes. |
| torch.nn.ConvTranspose2d() | Applies a 2-D transposed convolution over an input image composed of several input planes. |
| torch.nn.ConvTranspose3d() | Applies a 3-D transposed convolution over an input image composed of several input planes. |
| torch.nn.Unfold() | Extracts sliding local blocks from a batched input tensor. |
| torch.nn.Fold() | Combines an array of sliding local blocks into a large containing tensor. |
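To give a feel for how these layers change tensor shapes, here is a small sketch. The channel counts, kernel sizes, and the 32x32 input are assumptions chosen for the example.

```python
import torch
from torch import nn

images = torch.randn(2, 3, 32, 32)  # (batch, channels, height, width)

# A 3x3 convolution with padding=1 keeps the spatial size unchanged.
conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
features = conv(images)

# A 2x2 transposed convolution with stride 2 doubles height and width.
up = nn.ConvTranspose2d(16, 3, kernel_size=2, stride=2)
upsampled = up(features)

print(features.shape, upsampled.shape)
```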

Pooling Layer

A pooling layer is used to down-sample feature maps. It pools the most important features from each input plane.

| Class_Name | Description |
| --- | --- |
| torch.nn.MaxPool1d() | Applies 1-D max pooling over an input signal composed of several input planes. |
| torch.nn.MaxPool2d() | Applies 2-D max pooling over an input signal composed of several input planes. |
| torch.nn.MaxPool3d() | Applies 3-D max pooling over an input signal composed of several input planes. |
| torch.nn.MaxUnpool1d() | Computes a partial inverse of MaxPool1d. |
| torch.nn.MaxUnpool2d() | Computes a partial inverse of MaxPool2d. |
| torch.nn.MaxUnpool3d() | Computes a partial inverse of MaxPool3d. |
| torch.nn.AvgPool1d() | Applies 1-D average pooling over an input signal composed of several input planes. |
| torch.nn.AvgPool2d() | Applies 2-D average pooling over an input signal composed of several input planes. |
| torch.nn.AvgPool3d() | Applies 3-D average pooling over an input signal composed of several input planes. |
| torch.nn.FractionalMaxPool2d() | Applies 2-D fractional max pooling over an input signal composed of several input planes. |
| torch.nn.LPPool1d() | Applies 1-D power-average pooling over an input signal composed of several input planes. |
| torch.nn.LPPool2d() | Applies 2-D power-average pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveMaxPool1d() | Applies 1-D adaptive max pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveMaxPool2d() | Applies 2-D adaptive max pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveMaxPool3d() | Applies 3-D adaptive max pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveAvgPool1d() | Applies 1-D adaptive average pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveAvgPool2d() | Applies 2-D adaptive average pooling over an input signal composed of several input planes. |
| torch.nn.AdaptiveAvgPool3d() | Applies 3-D adaptive average pooling over an input signal composed of several input planes. |
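The difference between max, average, and adaptive pooling can be seen on a tiny 4x4 input. This is only a sketch; the input values are arbitrary.

```python
import torch
from torch import nn

# A single 4x4 plane holding the values 0..15.
x = torch.arange(16.0).reshape(1, 1, 4, 4)

max_out = nn.MaxPool2d(kernel_size=2)(x)        # largest value in each 2x2 window
avg_out = nn.AvgPool2d(kernel_size=2)(x)        # mean of each 2x2 window
adaptive = nn.AdaptiveAvgPool2d((1, 1))(x)      # output size is fixed, window is inferred

print(max_out.squeeze())
print(avg_out.squeeze())
print(adaptive.squeeze())
```

Adaptive pooling is convenient before a classifier head because the output size does not depend on the input resolution.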

Padding Layer

Padding classes add values around the boundary of the input tensor.

| Class_Name | Description |
| --- | --- |
| torch.nn.ReflectionPad1d() | Pads the input tensor in 1D using the reflection of the input boundary. |
| torch.nn.ReflectionPad2d() | Pads the input tensor in 2D using the reflection of the input boundary. |
| torch.nn.ReplicationPad1d() | Pads the input tensor in 1D using replication of the input boundary. |
| torch.nn.ReplicationPad2d() | Pads the input tensor in 2D using replication of the input boundary. |
| torch.nn.ReplicationPad3d() | Pads the input tensor in 3D using replication of the input boundary. |
| torch.nn.ZeroPad2d() | Pads the boundary of the input tensor with zeros. |
| torch.nn.ConstantPad1d() | Pads the input tensor boundary in 1D with a constant value. |
| torch.nn.ConstantPad2d() | Pads the input tensor boundary in 2D with a constant value. |
| torch.nn.ConstantPad3d() | Pads the input tensor boundary in 3D with a constant value. |
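The three padding strategies are easiest to compare on a short 1D signal. The sketch below pads two elements on each side of an arbitrary three-element input.

```python
import torch
from torch import nn

x = torch.tensor([[[1.0, 2.0, 3.0]]])  # (batch, channels, length)

reflect = nn.ReflectionPad1d(2)(x)      # mirrors values around the edge element
replicate = nn.ReplicationPad1d(2)(x)   # repeats the edge element
constant = nn.ConstantPad1d(2, 0.0)(x)  # fills with the given constant

print(reflect.squeeze().tolist())
print(replicate.squeeze().tolist())
print(constant.squeeze().tolist())
```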

Normalization Layer

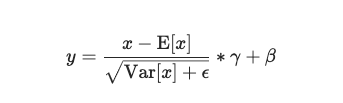

Normalization is applied on the input signal and it is given below:

Here, Mean and Standard deviation is calculated as per dimension.

γ and β are learnable parameters.

| Class_Name | Description |
| --- | --- |
| torch.nn.BatchNorm1d() | Applies batch normalization over a 2D or 3D input, that is, a mini-batch of 1D inputs with an optional additional channel dimension. |
| torch.nn.BatchNorm2d() | Applies batch normalization over a 4D input, that is, a mini-batch of 2D inputs with an additional channel dimension. |
| torch.nn.BatchNorm3d() | Applies batch normalization over a 5D input, that is, a mini-batch of 3D inputs with an additional channel dimension. |
| torch.nn.GroupNorm() | Applies group normalization over a mini-batch of inputs. |
| torch.nn.SyncBatchNorm() | Applies batch normalization over an N-dimensional input, that is, a mini-batch of [N-2]D inputs with an additional channel dimension. |
| torch.nn.InstanceNorm1d() | Applies instance normalization over a 3D input signal. |
| torch.nn.InstanceNorm2d() | Applies instance normalization over a 4D input signal. |
| torch.nn.InstanceNorm3d() | Applies instance normalization over a 5D input signal. |
| torch.nn.LayerNorm() | Applies layer normalization over a mini-batch of inputs. |
| torch.nn.LocalResponseNorm() | Applies local response normalization over an input signal composed of several input planes. |
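The normalization formula can be checked numerically against nn.BatchNorm1d. The sketch below assumes a freshly created layer in training mode, where γ (weight) starts at 1, β (bias) starts at 0, and the batch statistics use the biased variance.

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.randn(8, 4)   # mini-batch of 8 samples with 4 features

bn = nn.BatchNorm1d(4)  # new modules are in training mode by default
y = bn(x)

# Recompute the same result by hand from the batch statistics.
mean = x.mean(dim=0)
var = x.var(dim=0, unbiased=False)
manual = (x - mean) / torch.sqrt(var + bn.eps) * bn.weight + bn.bias

print(torch.allclose(y, manual, atol=1e-5))
```

In evaluation mode (`bn.eval()`), the layer uses its running estimates of the mean and variance instead of the statistics of the current batch.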

Recurrent Layer

Recurrent layers are used to build RNNs, which are most commonly applied to sequence data such as text in NLP applications.

| Class_Name | Description |
| --- | --- |
| torch.nn.RNNBase() | The base class for RNN modules (RNN, LSTM, GRU). Its flatten_parameters() method resets the parameter data pointers so that a faster code path can be used when the module is on a GPU with cuDNN enabled. |
| torch.nn.RNN() | Applies a multi-layer Elman RNN with tanh or ReLU non-linearity to an input sequence. |
| torch.nn.LSTM() | Applies a multi-layer long short-term memory (LSTM) RNN to an input sequence. |
| torch.nn.GRU() | Applies a multi-layer gated recurrent unit (GRU) RNN to an input sequence. |
| torch.nn.RNNCell() | An Elman RNN cell with tanh or ReLU non-linearity. |
| torch.nn.LSTMCell() | A long short-term memory (LSTM) cell that processes one time step and carries the hidden and cell state forward. |
| torch.nn.GRUCell() | A gated recurrent unit (GRU) cell that processes one time step and carries the hidden state forward. |
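The following sketch contrasts the full-sequence nn.LSTM with the single-step nn.LSTMCell. The sizes (10 input features, 20 hidden units, sequences of length 5) are assumptions for the example.

```python
import torch
from torch import nn

seq = torch.randn(3, 5, 10)  # (batch, time steps, features)

# nn.LSTM consumes the whole sequence and returns all outputs plus final states.
lstm = nn.LSTM(input_size=10, hidden_size=20, num_layers=2, batch_first=True)
output, (h_n, c_n) = lstm(seq)

# nn.LSTMCell processes one time step; the initial state defaults to zeros.
cell = nn.LSTMCell(input_size=10, hidden_size=20)
h, c = cell(seq[:, 0])

print(output.shape, h_n.shape, h.shape)
```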

Transformer Layers

Transformer layers implement the attention-based transformer architecture, which processes input sequences using self-attention and feedforward networks.

| Class_Name | Description |
| --- | --- |
| torch.nn.Transformer() | A transformer model whose attributes can be modified by the user as needed. |
| torch.nn.TransformerEncoder() | A stack of N encoder layers. |
| torch.nn.TransformerDecoder() | A stack of N decoder layers. |
| torch.nn.TransformerEncoderLayer() | Made up of a self-attention block and a feedforward network. |
| torch.nn.TransformerDecoderLayer() | Made up of self-attention, multi-head attention, and feedforward network blocks. |
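A small encoder stack can be assembled as sketched below. The model width, number of heads, and feedforward size are arbitrary values picked to keep the example light, and `batch_first=True` assumes a reasonably recent PyTorch release.

```python
import torch
from torch import nn

# One encoder layer: self-attention followed by a feedforward network.
layer = nn.TransformerEncoderLayer(
    d_model=16, nhead=4, dim_feedforward=32, batch_first=True
)

# Stack N = 2 copies of that layer.
encoder = nn.TransformerEncoder(layer, num_layers=2)

tokens = torch.randn(2, 7, 16)  # (batch, sequence length, d_model)
encoded = encoder(tokens)
print(encoded.shape)
```

The encoder preserves the input shape; each position's vector is updated with information from the other positions.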

Linear Layer

These layers apply a linear or bilinear transformation to the input data.

| Class_Name | Description |
| --- | --- |
| torch.nn.Identity() | A placeholder identity operator that is argument-insensitive. |
| torch.nn.Linear() | Applies a linear transformation to the input data. |
| torch.nn.Bilinear() | Applies a bilinear transformation to two inputs. |
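As a quick check of what nn.Linear computes, the sketch below compares its output with the explicit matrix form x·Wᵀ + b, and shows the two-input call of nn.Bilinear. The feature sizes are arbitrary.

```python
import torch
from torch import nn

x = torch.randn(2, 4)

linear = nn.Linear(in_features=4, out_features=3)
y = linear(x)
manual = x @ linear.weight.T + linear.bias  # same computation written out

bilinear = nn.Bilinear(in1_features=4, in2_features=5, out_features=3)
z = bilinear(x, torch.randn(2, 5))          # takes two inputs

same = nn.Identity()(x)                     # returns its input unchanged
print(y.shape, z.shape)
```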

Dropout Layer

A dropout layer is placed after a dense or convolution layer to regularize the model.

| Class_Name | Description |
| --- | --- |
| torch.nn.Dropout() | Randomly zeroes elements of the input tensor with probability p during training. |
| torch.nn.Dropout2d() | Randomly zeroes out entire channels, where each channel is a 2D feature map. |
| torch.nn.Dropout3d() | Randomly zeroes out entire channels, where each channel is a 3D feature map. |
| torch.nn.AlphaDropout() | Applies alpha dropout over the input signal while maintaining the self-normalizing property. |
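Dropout behaves differently in training and evaluation mode, which the sketch below illustrates with p = 0.5 on a tensor of ones.

```python
import torch
from torch import nn

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()
train_out = drop(x)  # kept elements are scaled by 1 / (1 - p), here 2.0

drop.eval()
eval_out = drop(x)   # dropout is a no-op in evaluation mode

print(train_out.unique(), torch.equal(eval_out, x))
```

The rescaling during training keeps the expected activation the same in both modes, so no adjustment is needed at inference time.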

Sparse Layer

Sparse layers act as lookup tables for word embeddings; the user retrieves the embeddings by index.

| Class_Name | Description |
| --- | --- |
| torch.nn.Embedding() | A lookup table that stores embeddings of a fixed dictionary and size. |
| torch.nn.EmbeddingBag() | Computes sums or means of bags of embeddings without instantiating the intermediate embeddings. |
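The lookup behaviour can be sketched as follows. The vocabulary size of 10, the embedding dimension of 3, and the index values are assumptions for the example.

```python
import torch
from torch import nn

ids = torch.tensor([[1, 2, 4], [4, 3, 9]])  # two sequences of three indices

# Embedding returns one vector per index.
emb = nn.Embedding(num_embeddings=10, embedding_dim=3)
vectors = emb(ids)

# EmbeddingBag with a 2D input treats each row as a bag and pools it.
bag = nn.EmbeddingBag(num_embeddings=10, embedding_dim=3, mode="mean")
pooled = bag(ids)

print(vectors.shape, pooled.shape)
```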

4. Functions

The torch.nn module provides classes for various distance and loss functions.

Loss Function

PyTorch provides classes in the torch.nn module to calculate the error between the input (the prediction) and the target values.

| Class_Name | Description |
| --- | --- |
| torch.nn.L1Loss() | Measures the mean absolute error (MAE) between each element in the input and the target. |
| torch.nn.MSELoss() | Measures the mean squared error (squared L2 norm) between each element in the input and the target. |
| torch.nn.CrossEntropyLoss() | Combines nn.LogSoftmax() and nn.NLLLoss() in a single class. |
| torch.nn.CTCLoss() | Measures the Connectionist Temporal Classification loss between the input sequence and the target sequence. |
| torch.nn.NLLLoss() | The negative log likelihood loss, used to train classification models. |
| torch.nn.KLDivLoss() | The Kullback-Leibler divergence loss, a distance measure for continuous distributions. |
| torch.nn.BCELoss() | Measures the binary cross entropy between the target and the input probabilities. |
| torch.nn.BCEWithLogitsLoss() | Combines a sigmoid layer and BCELoss in a single class. |
| torch.nn.MarginRankingLoss() | Measures the loss given two inputs, both 1D mini-batch tensors, and a 1D mini-batch label tensor. |
| torch.nn.MultiLabelMarginLoss() | Creates a criterion that optimizes a multi-class, multi-label hinge loss between the input and the target. |
| torch.nn.SmoothL1Loss() | Creates a criterion that uses a squared term if the absolute element-wise error falls below 1 (the default threshold) and an L1 term otherwise. |
| torch.nn.SoftMarginLoss() | Optimizes a two-class classification logistic loss. |
| torch.nn.MultiLabelSoftMarginLoss() | Creates a criterion that optimizes a multi-label one-versus-all loss based on max-entropy between the input and the target of size (N, C). |
| torch.nn.MultiMarginLoss() | Creates a criterion that optimizes a multi-class classification hinge loss between the input and the target. |
| torch.nn.TripletMarginLoss() | Creates a criterion that measures the triplet loss given input tensors, which captures the relative similarity between samples. |
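The relationship between CrossEntropyLoss, LogSoftmax, and NLLLoss listed above can be verified with a short sketch. The logits and class labels here are random placeholder data.

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 3)              # 4 samples, 3 classes (raw scores)
targets = torch.tensor([0, 2, 1, 2])    # class index per sample

# CrossEntropyLoss works directly on raw logits.
ce = nn.CrossEntropyLoss()(logits, targets)

# The same value from LogSoftmax followed by NLLLoss.
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss()(log_probs, targets)

print(torch.allclose(ce, nll))
```

Because CrossEntropyLoss already includes the log-softmax step, the model's last layer should output raw logits rather than softmax probabilities when this loss is used.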

Distance Function

The torch.nn module provides the classes below to calculate the distance between two inputs x1 and x2.

| Class_Name | Description |
| --- | --- |
| torch.nn.CosineSimilarity() | Returns the cosine similarity between x1 and x2, computed along a dimension (dim=1 by default). |
| torch.nn.PairwiseDistance() | Returns the pairwise distance between the vectors x1 and x2 using the p-norm (p=2 by default). |
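Both classes operate row by row on batched inputs, as the sketch below shows for two hand-picked pairs of 2D vectors.

```python
import torch
from torch import nn

x1 = torch.tensor([[1.0, 0.0], [0.0, 2.0]])
x2 = torch.tensor([[1.0, 0.0], [3.0, 0.0]])

# Row 0: identical vectors; row 1: orthogonal vectors.
cos = nn.CosineSimilarity(dim=1)(x1, x2)

# Euclidean distance per row; a tiny eps is added internally for stability.
dist = nn.PairwiseDistance(p=2)(x1, x2)

print(cos, dist)
```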

5. Other Classes and Modules

Here are some of the other classes and modules available in torch.nn:

DataParallel Layers (multi-GPU, distributed)

| Class_Name | Description |
| --- | --- |
| torch.nn.DataParallel() | Implements data parallelism at the module level. |
| torch.nn.parallel.DistributedDataParallel() | Implements distributed data parallelism at the module level, based on the torch.distributed package, by replicating the module in each process and synchronizing gradients across the replicas. |

Non-Linear Activations (weighted sum, nonlinearity)

| Class_Name | Description |

| torch.nn.ELU() | Exponential linear function is applied on input x:

ELU(x)=max(0,x)+min(0,α∗(exp(x)−1)) |

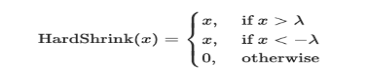

| torch.nn.Hardshrink() | It Applies hardshink function on input x:

|

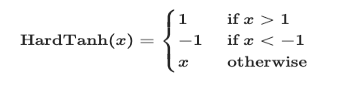

| torch.nn.Hardtanh() | It applies HardTanh function element wise on input x and returns the linear region [-1, 1].

|

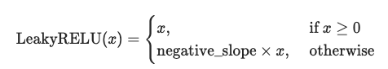

| torch.nn.LeakyReLU() | It is a leaky ReLU and has small negative slope and applies element wise on input x:

|

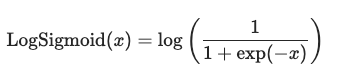

| torch.nn.LogSigmoid() | It applies the Logistic and sigmoid function on input x and is given by:

|

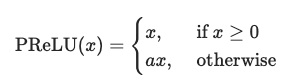

| torch.nn.PReLU() | It is used to apply parametric ReLU function as below:

|

| torch.nn.ReLU() | It applies ReLU function and takes the max between 0 and element :

ReLU(x)=max(0,x) |

| torch.nn.ReLU6() | It applies ReLU function and takes the input signal.

ReLU6(x)=min(max(0,x),6) |

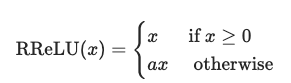

| torch.nn.RReLU() | It is used to apply randomized leaky ReLU function.

|
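The ELU and ReLU6 formulas above can be checked against the modules on a few sample values. This is a sketch with α = 1.0 and an arbitrary negative slope of 0.1 for LeakyReLU.

```python
import torch
from torch import nn

x = torch.tensor([-2.0, -0.5, 0.0, 3.0, 8.0])

# ELU(x) = max(0, x) + min(0, alpha * (exp(x) - 1)), written out by hand.
alpha = 1.0
elu = nn.ELU(alpha=alpha)(x)
manual_elu = torch.clamp(x, min=0) + torch.clamp(alpha * (torch.exp(x) - 1), max=0)

relu6 = nn.ReLU6()(x)                       # negative values go to 0, large values cap at 6
leaky = nn.LeakyReLU(negative_slope=0.1)(x) # negative values are scaled by 0.1

print(torch.allclose(elu, manual_elu), relu6.tolist())
```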

Non-Linear Activations (other)

| Class_Name | Description |

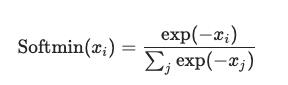

| torch.nn.Softmin() | This module is used to apply softmin function on N-Dimensional input tensor.

|

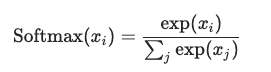

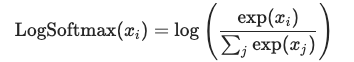

| torch.nn.Softmax() | This module is used to apply softmax function on N-Dimensional input tensor.

|

| torch.nn.Softmax2d() | This module is used to apply SoftMax function over features to each spatial location. |

| torch.nn.LogSoftmax() | This module is used to apply log of softmax() function on N-dimensional input tensor.

|

| torch.nn.AdaptiveLogSoftmaxWithLoss() | Adaptive softmax function gives an approximate strategy for the training model with large output. |
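The relationships between Softmax, Softmin, and LogSoftmax can be sketched on a single row of arbitrary scores.

```python
import torch
from torch import nn

scores = torch.tensor([[1.0, 2.0, 3.0]])

soft = nn.Softmax(dim=1)(scores)         # probabilities that sum to 1 along dim 1
softmin = nn.Softmin(dim=1)(scores)      # softmax of the negated scores
log_soft = nn.LogSoftmax(dim=1)(scores)  # log of the softmax, computed stably

print(soft.sum(dim=1), softmin, log_soft)
```

The `dim` argument selects the axis along which the values are normalized, so it should point at the class dimension.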

Utilities

| Class_Name | Description |

| torch.nn.utils.clip_grad_norm_() | It is clip gradient norm of an iterable parameter. |

| torch.nn.utils.clip_grad_value_() | It is clip gradient of an iterable parameter at specified value. |

| torch.nn.utils.parameters_to_vector() | It is used to convert the parameters to one vector. |

| torch.nn.utils.vector_to_parameters() | It is used to convert one vector to the parameters. |

| torch.nn.utils.prune.BasePruningMethod() | To create new pruning technique base pruning class is an abstract base class. |

| torch.nn.utils.prune.PruningContainer() | It is a container to hold the sequence of pruning method and keeps track of the order. |

| torch.nn.utils.prune.Identity() | It generates the pruning parametrization with a mask of ones. |

| torch.nn.utils.prune.RandomUnstructured() | It is pruning on currently unpruned tensor at random. |

| torch.nn.utils.prune.L1Unstructured() | It zero out the unpruned tensor with lowest L1-norm |

| torch.nn.utils.prune.RandomStructured() | It is used to prune an entire unpruned channel in a tensor at random. |

| torch.nn.utils.prune.LnStructured() | It is used to prune channel in a tensor on a Ln-norm. Quantity of pruning should be between 0 to 1. |

| torch.nn.utils.prune.global_unstructured() | It is used to globally prune tensor available in parameters. |

| torch.nn.utils.prune.is_pruned() | It checks whether module is pruned or not in forward_pre_hooks module. |

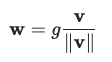

| torch.nn.utils.weight_norm() | Weight Normalization is applied on the parameter given by:

|

| torch.nn.utils.spectral_norm() | Spectral normalization of the parameter in the given module. |

| torch.nn.utils.remove_spectral_norm() | It removes the spectral normalization of the parameter in the given module. |

| torch.nn.utils.rnn.PackedSequence() | It is used in RNN models to holds the data and list of batch size of packed sequence. |

| torch.nn.utils.rnn.pack_padded_sequence() | It is used to pack the tensors containing padded sequences of variable length. |

| torch.nn.utils.rnn.pad_packed_sequence() | It is an inverse of pack_padded_sequence(). It pads packed batch of variable length sequences. |

| torch.nn.utils.rnn.pad_sequence() | It is stack of list of tensors of variable length with padding values. |

| torch.nn.utils.rnn.pack_sequence() | It is a list of tensors of variable length. |

| torch.nn.Flatten() | Contiguous range of dimensions are flatten into a tensor. |
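Two of the most commonly used utilities are sketched below: gradient clipping and packing of padded sequences. The tiny model, the scaled random input, and the two example sequences are placeholders for illustration.

```python
import torch
from torch import nn
from torch.nn.utils import clip_grad_norm_
from torch.nn.utils.rnn import pad_sequence, pack_padded_sequence, pad_packed_sequence

# Gradient clipping: rescale gradients so their total norm is at most max_norm.
torch.manual_seed(0)
model = nn.Linear(3, 1)
loss = model(torch.randn(4, 3) * 100).pow(2).sum()  # large inputs give large gradients
loss.backward()
clip_grad_norm_(model.parameters(), max_norm=1.0)
total = torch.sqrt(sum(p.grad.pow(2).sum() for p in model.parameters()))

# Variable-length sequences: pad to a common length, pack, then unpack again.
seqs = [torch.tensor([1.0, 2.0, 3.0]), torch.tensor([4.0, 5.0])]
padded = pad_sequence(seqs, batch_first=True)
packed = pack_padded_sequence(padded, lengths=[3, 2], batch_first=True)
unpacked, lengths = pad_packed_sequence(packed, batch_first=True)

print(total.item(), padded.tolist(), lengths.tolist())
```

By default `pack_padded_sequence` expects the sequences sorted by decreasing length, which is why the longer sequence comes first here.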
