Updated June 29, 2023

What are Neural Networks?

Artificial neural networks (ANNs) are computing systems inspired by biological neural networks and designed to perform tasks involving vast amounts of data. They use algorithms to learn the relationships in a given data set and adapt to changing inputs to produce the best results. The network is trained to produce the desired outputs, and the trained models are then used to predict future results from data. The interconnected nodes mimic the functionality of the human brain. Neural networks can also find correlations and hidden patterns in raw data in order to cluster and classify it.

Understanding Neural Network

Neural networks are trained and taught much like a child's developing brain. They are not programmed directly for a particular task. Instead, they are trained so that they can adapt to changing input.

There are three methods or learning paradigms to teach a neural network.

- Supervised Learning

- Reinforcement Learning

- Unsupervised Learning

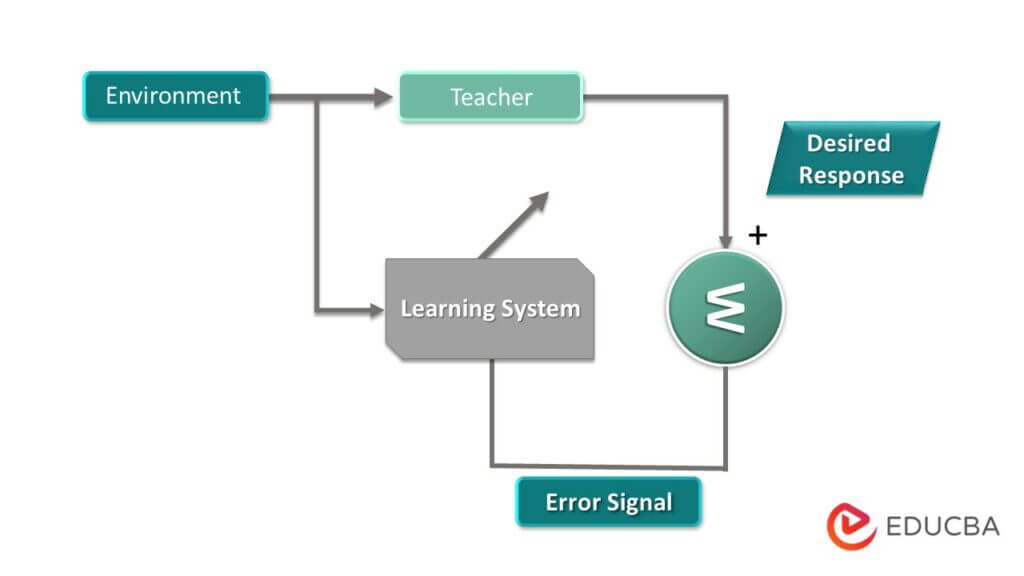

1. Supervised Learning

As the name suggests, supervised learning takes place in the presence of a supervisor or teacher. A labeled data set is available in which the desired output, i.e., the optimum action to be performed by the neural network, is already known for each example. The network learns from this training data and is then given new data for which it must produce the correct output.

It is a closed feedback system, but the environment is not in the loop.

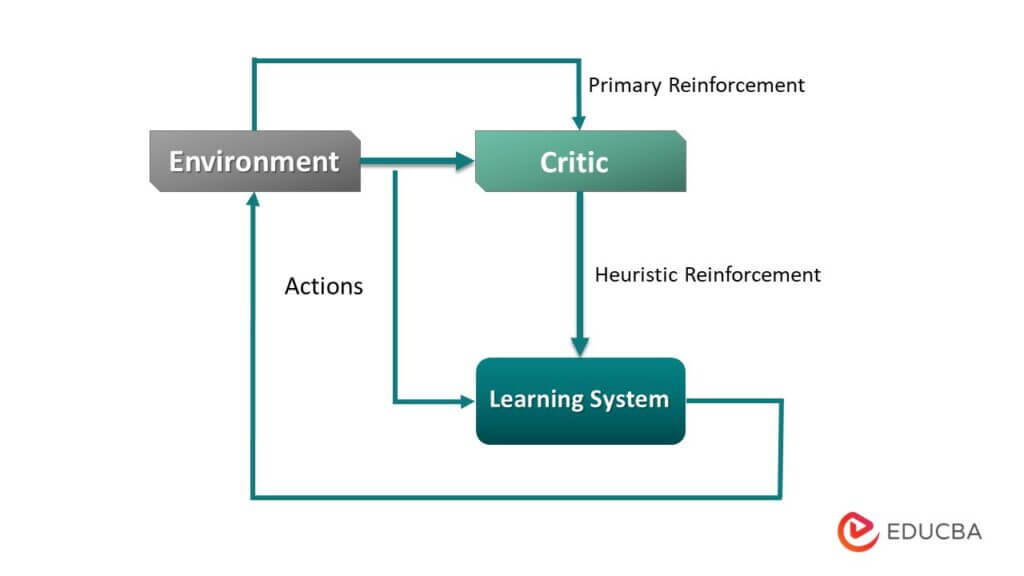
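To make supervised learning concrete, here is a minimal sketch of a single neuron learning from labeled examples. The perceptron learning rule, the AND data set, the learning rate, and the function names are all illustrative assumptions, not something prescribed by this article.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights from labeled samples: a list of ((x1, x2), label) pairs."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            prediction = predict(weights, bias, inputs)
            # The "teacher" signal: difference between desired and actual output.
            error = target - prediction
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


# Labeled training data for the logical AND function (assumed example).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_DATA)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_DATA]
print(predictions)
```

The labels play the role of the teacher: every wrong prediction produces a non-zero error that nudges the weights toward the desired output.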

2. Reinforcement Learning

Here, the input-output mapping is learned through continuous interaction with the environment in order to minimize a scalar index of performance. Instead of a teacher, a critic converts the primary reinforcement signal, i.e., the scalar input received from the environment, into a higher-quality reinforcement signal called the heuristic reinforcement signal, which is also a scalar input.

This learning aims to minimize the cost-to-go function, i.e., the expected cumulative cost of actions taken over a sequence of steps.
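The idea of an expected cumulative cost can be sketched in a few lines. In this sketch the expectation is estimated by averaging over sampled episodes; the discount factor, the episode data, and the function names are assumptions added for illustration.

```python
def cumulative_cost(step_costs, discount=1.0):
    """Sum the costs of one sequence of steps, optionally discounting later steps."""
    total = 0.0
    for step, cost in enumerate(step_costs):
        total += (discount ** step) * cost
    return total


def estimated_expected_cost(episodes, discount=1.0):
    """Estimate the expected cumulative cost as the average over sampled episodes."""
    return sum(cumulative_cost(e, discount) for e in episodes) / len(episodes)


# Two hypothetical episodes, each a list of per-step costs.
episodes = [[1.0, 2.0, 3.0], [2.0, 2.0, 2.0]]
print(estimated_expected_cost(episodes))
print(estimated_expected_cost(episodes, discount=0.5))
```

A reinforcement learner would adjust its behavior so that this quantity becomes as small as possible.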

3. Unsupervised Learning

As the name suggests, there is no teacher or supervisor available. The data is neither labeled nor classified, and no prior guidance is available to the neural network. Here, the machine has to group the provided data according to similarities, differences, and patterns without any prior training.
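Grouping unlabeled data by similarity can be illustrated with a very small clustering routine. Note that this sketch uses k-means on one-dimensional points, which is not a neural network; it is swapped in only because it shows the "no labels, group by similarity" idea in a few lines. The data and starting centers are assumed.

```python
def kmeans_1d(points, centers, iterations=10):
    """Group 1-D points around the nearest center, then move each center to its group's mean."""
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            groups[nearest].append(p)
        # Keep the old center if a group ended up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Unlabeled data: no desired output is given for any point.
points = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]
centers, groups = kmeans_1d(points, centers=[0.0, 5.0])
print(centers, groups)
```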

Working with a Neural Network

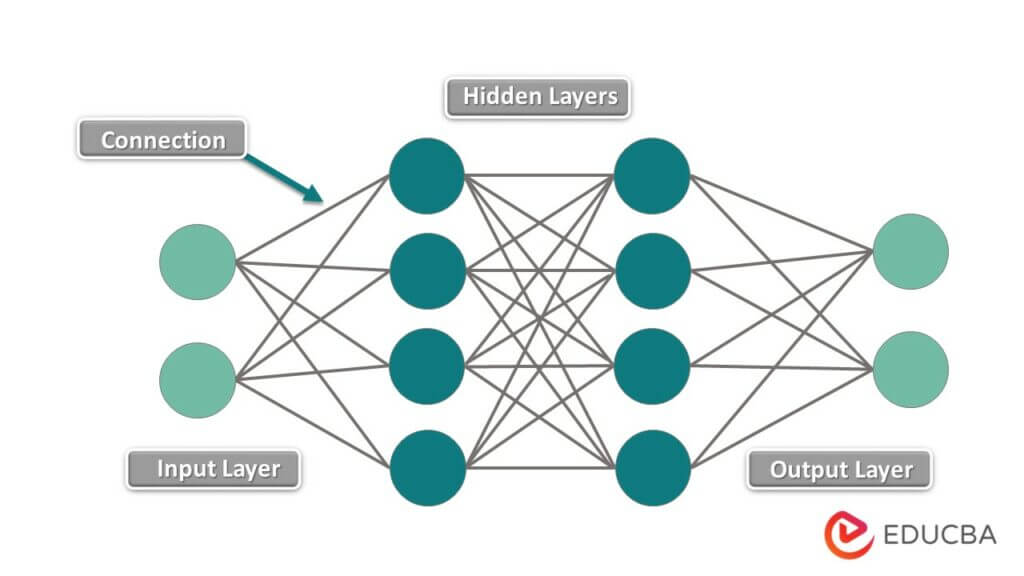

A neural network is made up of different layers, each of which performs a specific function. The more complex the problem, the more layers are required, which is one reason such a network is called a multilayer perceptron.

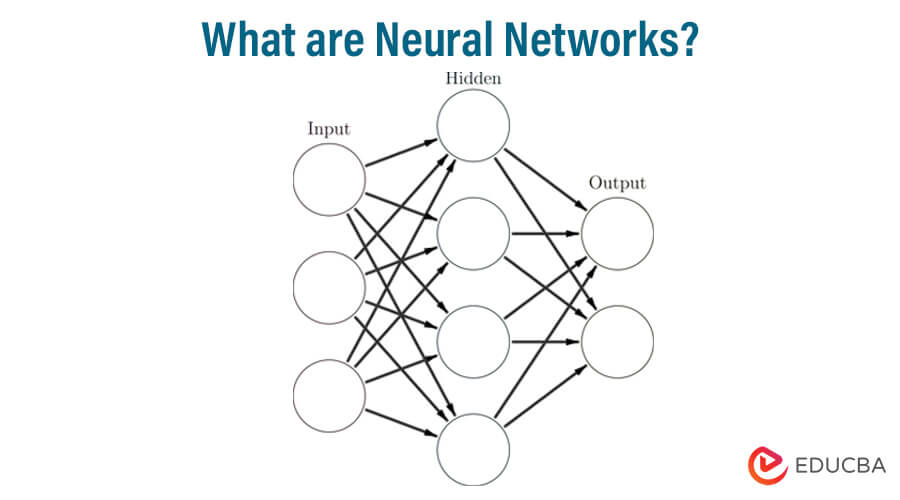

In its simplest form, a neural network consists of node layers of the following three types.

- Input layer

- Hidden layer

- Output layer

As the names suggest, each layer performs a specific function. Neural networks can have different numbers of hidden layers based on the requirements. The input layer receives the input signals and passes them to the next layer. If you are familiar with the linear regression model, it will be much easier to understand how a neural network works, since each individual node can be compared to its own linear regression model.

The hidden layer performs all the back-end computation. A network could in principle have zero hidden layers; in practice, however, a neural network usually has at least one hidden layer. The output layer delivers the final result of the hidden layer's computation.

Now let's see how perceptron layers work.

As discussed above, neural networks are built from perceptrons, and a perceptron layer is made up of many perceptron neurons. These neurons are the basic units that work together to form a perceptron layer. Each neuron receives data as a set of inputs. It combines these numerical inputs with a bias and a set of weights to produce a single output. The combination function uses the weights and the bias to give a result, which we can write as the equation below.

Equation = bias + sum of (weight * input) over all inputs

In the next step, the result of the above equation is passed through the activation function, as below.

Final result = activation(Equation)
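The two equations above can be sketched directly in code. The sigmoid activation and the sample numbers are assumptions chosen for illustration; the article does not prescribe a particular activation function.

```python
import math


def sigmoid(z):
    """A common activation function that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute activation(bias + sum of weight * input) for a single neuron."""
    equation = bias + sum(w * x for w, x in zip(weights, inputs))
    return activation(equation)


# Hypothetical inputs and weights: 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0.0
result = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(result)  # sigmoid(0.0) is 0.5
```

Passing a different function as `activation` (for example a step function) changes only the last stage, not the weighted sum.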

Now let’s understand the working of a neural network in short.

- Data is fed into the input layer, which passes it to the hidden layer.

- The interconnections between the two layers assign a weight to each input at random.

- A bias is added to the inputs after each has been multiplied by its weight.

- The weighted sum is passed to the activation function.

- The activation function determines which nodes should fire for feature extraction.

- The model applies an activation function to the output layer to deliver the output.

- The weights are adjusted, and the error is back-propagated to minimize it.
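The steps above can be sketched as a tiny network trained with backpropagation. Everything specific here is an assumption made for illustration: two hidden nodes, sigmoid activations, a squared-error loss, a learning rate of 0.5, and the logical OR function as training data.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_inputs, n_hidden, seed=0):
    """Assign random starting weights and biases (seeded so runs are repeatable)."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
    b_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = rng.uniform(-1, 1)
    return w_hidden, b_hidden, w_out, b_out


def forward(x, params):
    """Input layer -> hidden layer -> output layer, applying the activation at each node."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(b + sum(w * xi for w, xi in zip(ws, x)))
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(b_out + sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def train_step(x, target, params, lr=0.5):
    """Back-propagate the error for one example and return the adjusted parameters."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden, output = forward(x, params)
    # Error signal at the output node (derivative of squared error through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Error signal passed back to each hidden node, using the old output weights.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    b_out = b_out - lr * delta_out
    w_hidden = [[w - lr * d * xi for w, xi in zip(ws, x)]
                for ws, d in zip(w_hidden, delta_hidden)]
    b_hidden = [b - lr * d for b, d in zip(b_hidden, delta_hidden)]
    return w_hidden, b_hidden, w_out, b_out


def mean_squared_error(data, params):
    return sum((forward(x, params)[1] - t) ** 2 for x, t in data) / len(data)


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
params = init_params(n_inputs=2, n_hidden=2)
loss_before = mean_squared_error(OR_DATA, params)
for _ in range(2000):
    for x, target in OR_DATA:
        params = train_step(x, target, params)
loss_after = mean_squared_error(OR_DATA, params)
print(loss_before, loss_after)
```

Comparing the two printed losses shows the effect of repeatedly adjusting the weights: the error after training should be lower than the error with the random starting weights.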

What is Deep Learning?

Deep learning is a subset of machine learning, which is itself a branch of artificial intelligence. Deep learning models process data in a way inspired by the human brain. They recognize complex patterns in data collected from different sources, such as photos and text, in order to make predictions, and they can automate other tasks that would otherwise require human intelligence.

The Architecture of a Neural Network

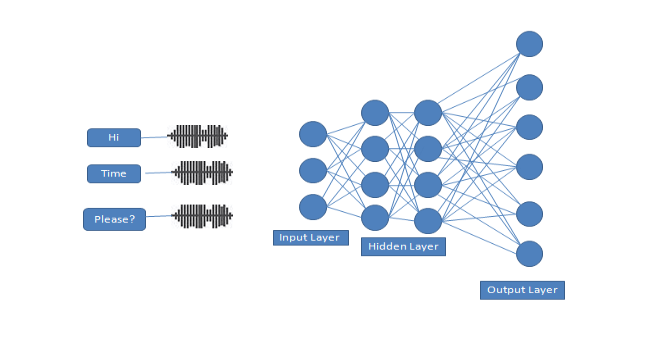

Let’s consider a speech recognition algorithm with a neural network as below.

There are five different types of neural networks, as below.

- Single-layer feed-forward network

- Multilayer feed-forward network

- Single node with its own feedback

- Single-layer recurrent network

- Multilayer recurrent network

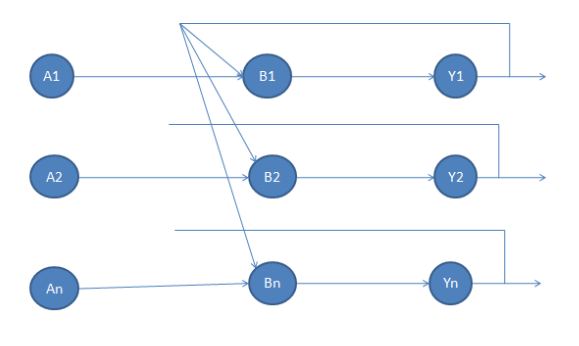

Now consider an example, shown in the screenshot below, for a better understanding of single-layer recurrent networks.

The screenshot shows the different layers: the input, hidden, and output layers. In this example, the network receives speech and has to recognize the text "Hi-Time Please". Each word has a unique sound pattern during this process.

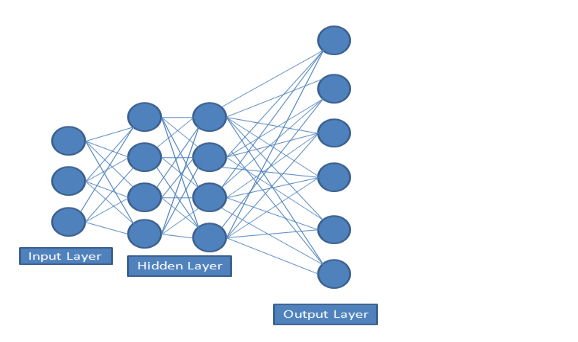

1. Single-layer feed-forward network

In this type, we have only two layers, the input and output layers. However, the input layer is not counted because no computation is performed in it. The single-layer feed-forward network is shown in the screenshot below.

2. Multilayer feed-forward network

This type of neural network includes one or more additional hidden layers, which are internal to the network and sit between the input layer and the output layer. The hidden layers produce the intermediate computations that are passed on toward the output, as shown in the screenshot below.

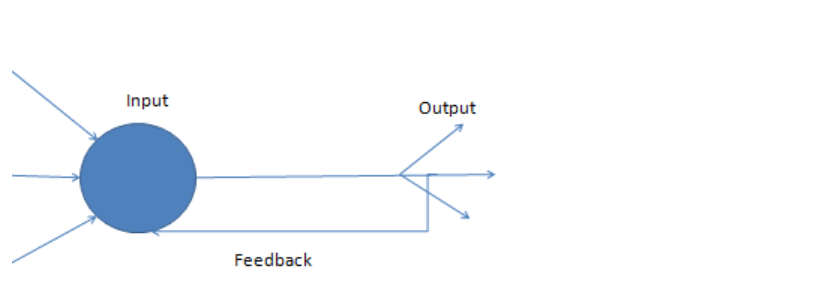

3. Single node with its own feedback

In this type, the output is fed directly back as input to the same node, which means recurrent feedback with a closed loop, as shown in the screenshot below.

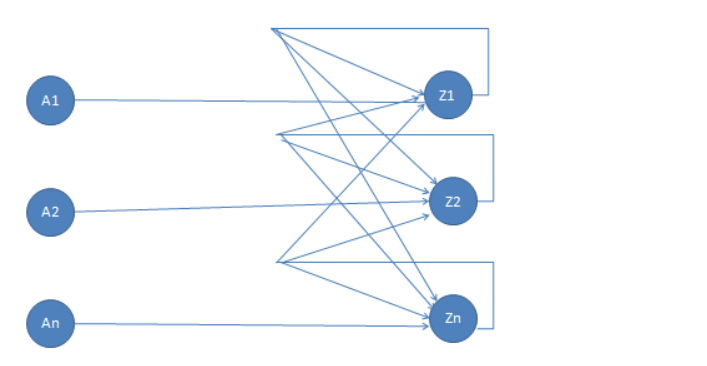

4. Single-layer recurrent network

This type uses a single-layer network with feedback connections. A recurrent neural network (RNN) is a type of artificial neural network in which the connections between nodes form a directed graph along a sequence. This enables it to exhibit dynamic temporal behavior over a time sequence. Unlike feedforward neural networks, RNNs use their internal state (memory) to process input sequences.
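The role of the internal state can be sketched with a single recurrent node. The tanh activation, the weight values, and the function name are assumptions chosen for illustration.

```python
import math


def run_recurrent_node(inputs, w_input=1.0, w_state=1.0, bias=0.0):
    """Process a sequence one step at a time, feeding the previous state back in."""
    state = 0.0  # internal state (memory), empty before the sequence starts
    states = []
    for x in inputs:
        # The new state depends on the current input AND the previous state.
        state = math.tanh(w_input * x + w_state * state + bias)
        states.append(state)
    return states


# The second input is 0.0 in both sequences, yet the second state differs
# because the node remembers what it saw in the first step.
print(run_recurrent_node([1.0, 0.0]))
print(run_recurrent_node([0.0, 0.0]))
```

A feedforward node given the same second input would produce the same output in both cases, which is the difference the paragraph above describes.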

5. Multilayer recurrent network

In this type, the output of a node can be fed back to nodes in the same layer and in the preceding layer, forming the multilayer recurrent network shown in the screenshot below.

History of Neural Networks

| Year | Author | Research |
| --- | --- | --- |
| 1943 | Warren S. McCulloch (American neurophysiologist) and Walter Pitts (logician) | Tried to understand how the human brain generates complex patterns through its cells, or neurons, and attempted to develop a mathematical model of the brain to explain its function. |
| Late 1950s and early 1960s | Nathaniel Rochester (IBM research laboratories) | Began to explore the potential of neural networks for pattern recognition. |
| 1958 | Frank Rosenblatt (neurobiologist at Cornell) | Proposed an algorithm and architecture for pattern recognition, known as the "perceptron." It is capable of learning and making decisions based on input data, and it was used in various applications, including image recognition. |
| 1982 | John Joseph Hopfield (American scientist) | Introduced the Hopfield network, a recurrent neural network whose behavior depends on its past states. It has many applications in signal processing and signal classification. |
| 1989 | Yann LeCun (French computer scientist) | Published work demonstrating a working neural network trained with backpropagation to recognize handwritten digits, paving the way for applications such as speech recognition and image processing. |

Applications of Neural Network

- Handwriting recognition: Neural networks convert handwritten text into characters that machines can recognize.

- Stock exchange prediction: The stock exchange is influenced by numerous factors, making it difficult to monitor and comprehend. A neural network can look at many of these factors and make daily price predictions, which would be helpful to stockbrokers.

- Traveling salesman problem: This is about finding the best route through a set of cities in a given area. Neural networks can help address this problem, which in turn helps generate more revenue at lower cost.

- Image compression: Neural networks help compress image data so that an encoded form of the actual image can be stored.

Advantages of Neural Network

The advantages of neural networks are given below:

- Can work with incomplete information once trained.

- Are fault tolerant.

- Have a distributed memory.

- Can learn from data (machine learning).

- Support parallel processing.

- Store information across the entire network.

- Can learn non-linear and complex relationships.

- Can generalize, i.e., infer unseen relationships after learning from some prior relationships.

Required Neural Network Skills

The skills needed to work with neural networks are given below:

- Knowledge of applied maths and algorithms.

- Probability and statistics.

- Distributed computing.

- Fundamental programming skills.

- Data modeling and evaluation.

- Software engineering and system design.

Why Should We Use Neural Networks?

- They help to model the non-linear and complex relationships of the real world.

- They are used in pattern recognition because they can generalize.

- They have many applications, like text summarization, signature identification, handwriting recognition, etc.

- They can model data with high volatility.

Neural Networks Scope

Neural networks have a broad scope in the future. Researchers are constantly working on new technologies based on them. As more and more tasks are automated, neural networks are valuable because they deal efficiently with change and can adapt accordingly. With the rise of these new technologies, there are many job openings for engineers and neural network experts, so neural networks will also prove to be a significant source of jobs.

How will this Technology help you in Career Growth?

There is significant career growth in the field of neural networks. The average salary of a neural network engineer ranges from approximately $33,856 to $153,240 per year.

Conclusion

This article introduced neural networks and gave a basic idea of their mid-level and higher-level concepts. Neural networks allow us to analyze large amounts of data and make the results easier for humans to interpret. They can carry out many tasks with minimal human involvement and are an excellent addition to growing AI workflows.
